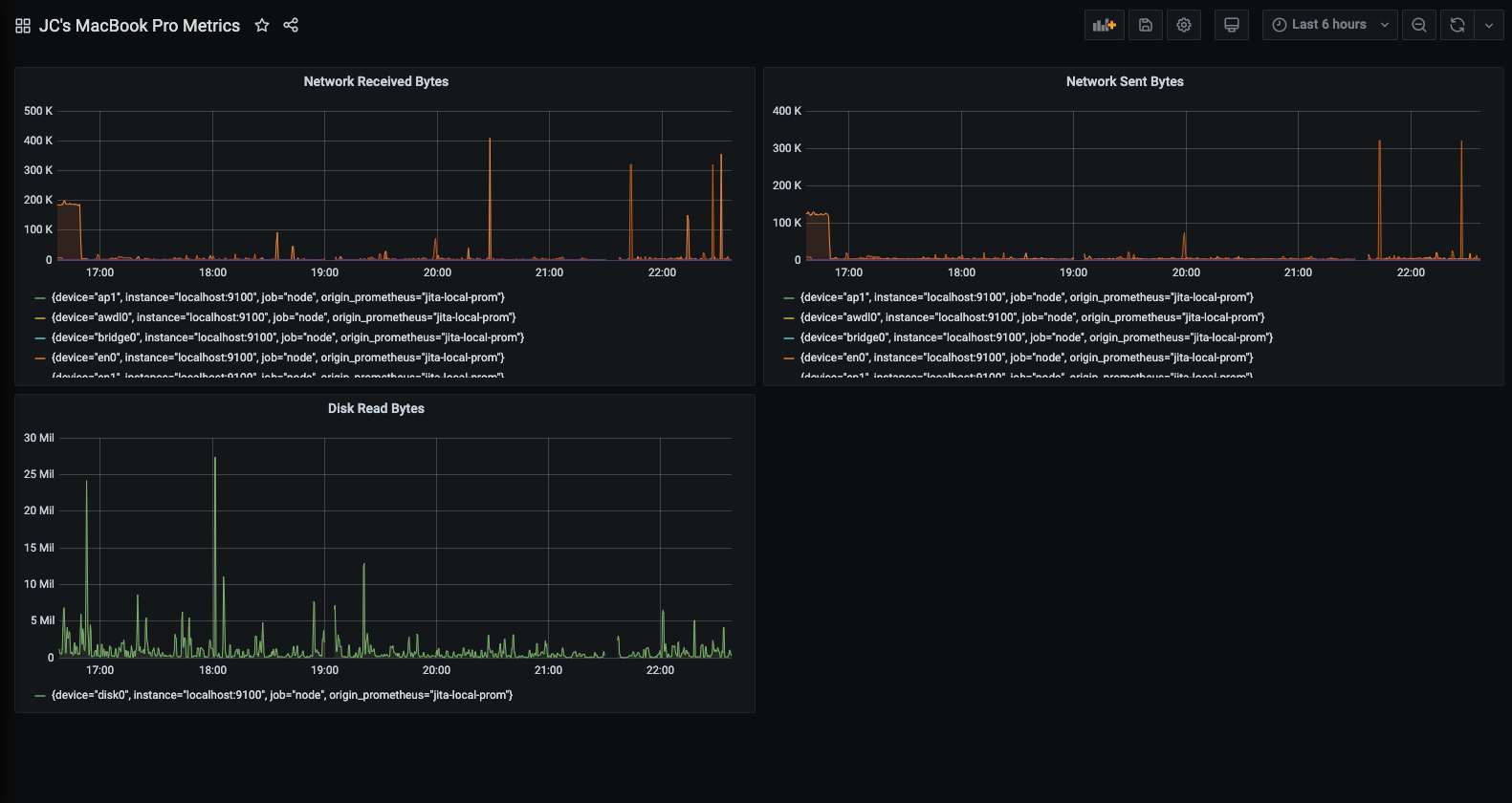

Your pretty CPU graphs are useless when users can't check out because the database connection pool is maxed out. Been there, done that, got the 3am pager duty t-shirt.

Middle of the night last week, checkout was broken for like 40 minutes, maybe more. CPU was at 12%, memory looked fine, and we spent forever figuring out that the payment API was timing out. Those infrastructure graphs don't tell you anything about user experience.

The Gap Between Infrastructure and User Experience

Users don't care about your server's CPU being at 15%. They care that clicking "Buy Now" takes 15 seconds instead of 2. That's the difference between a sale and an abandoned cart.

Performance monitoring needs to track what actually breaks:

- Application-level metrics like request latency, error rates, and throughput

- Business metrics like conversion rates, transaction success rates, and user journey completion

- Service Level Indicators (SLIs) that reflect actual user experience

- Dependencies between services that can cause cascading failures
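To make "SLIs that reflect actual user experience" concrete, here's a minimal sketch that counts a request as good only if it was non-5xx *and* fast enough, then checks how much of an SLO's error budget is left. The 300ms threshold, the 99.5% target, and all names here are illustrative assumptions, not standard values.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Request:
    status: int        # HTTP status code returned to the user
    duration_s: float  # end-to-end latency in seconds

def sli(requests, latency_threshold_s=0.3):
    """Share of requests that were both non-5xx and at or under the latency threshold."""
    if not requests:
        return 1.0  # no traffic in the window: count as meeting the SLI (a policy choice)
    good = sum(1 for r in requests
               if r.status < 500 and r.duration_s <= latency_threshold_s)
    return good / len(requests)

def error_budget_remaining(sli_value, slo_target=0.995):
    """Fraction of the error budget left: 1.0 untouched, 0.0 spent, negative overspent."""
    allowed_bad = 1.0 - slo_target
    actual_bad = 1.0 - sli_value
    return 1.0 - actual_bad / allowed_bad

# 1,000 hypothetical checkout requests: 990 fast, 5 slow, 5 server errors.
reqs = ([Request(200, 0.12)] * 990
        + [Request(200, 2.5)] * 5
        + [Request(503, 0.05)] * 5)
print(sli(reqs))                          # 0.99
print(error_budget_remaining(sli(reqs)))  # about -1.0: twice the allowed bad requests
```

Note that the 5 slow-but-successful requests count against the SLI just like the 503s. That's the point: a 2.5-second checkout is a failure from the user's side.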

Why Prometheus and Grafana Don't Suck (As Much)

Datadog will bankrupt you faster than AWS NAT gateway costs. Prometheus and Grafana at least let you keep your salary while actually measuring things that matter.

Prometheus advantages for performance monitoring:

- Pull-based metrics collection with minimal application overhead: your service only serves a /metrics endpoint, and Prometheus decides when to scrape it

- Powerful query language (PromQL) for calculating percentiles, rates, and correlations

- Native support for histograms to track latency distributions

- Service discovery that automatically adapts to dynamic environments
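The pull model means your app only has to answer an HTTP GET with its current values in Prometheus's text exposition format. Here's a stdlib-only sketch, assuming a single hypothetical counter called `checkout_requests_total` and port 8000. In a real service you'd use an official client library such as `prometheus_client` rather than hand-rolling this.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical metric: checkout requests by HTTP status code.
_lock = threading.Lock()
_checkout_requests = {}  # status code (string) -> count

def record_checkout(status):
    """Call from the checkout handler after each response."""
    with _lock:
        _checkout_requests[status] = _checkout_requests.get(status, 0) + 1

def render_metrics():
    """Current values in the Prometheus text exposition format."""
    lines = [
        "# HELP checkout_requests_total Checkout requests by HTTP status.",
        "# TYPE checkout_requests_total counter",
    ]
    with _lock:
        for status, count in sorted(_checkout_requests.items()):
            lines.append(f'checkout_requests_total{{status="{status}"}} {count}')
    return "\n".join(lines) + "\n"

class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics().encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def serve(port=8000):
    """Blocks forever; Prometheus scrapes http://<host>:<port>/metrics on its own schedule."""
    ThreadingHTTPServer(("", port), MetricsHandler).serve_forever()
```

Run `serve()` in a background thread next to your app and point a scrape job at it. The app never pushes anything and never blocks on the monitoring system.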

Grafana advantages for performance visualization:

- Dashboard templates that work across different environments

- SLO tracking capabilities built specifically for performance management

- Alerting that routes into your existing workflow instead of spamming you

- Variables and templating that let you drill down from service-level to individual instances

The Four Pillars of Effective Performance Monitoring

Google figured this shit out after years of outages: their SRE book calls these the four golden signals (latency, traffic, errors, saturation), and they're what actually works in production. Don't try to monitor everything at once, though - you'll just confuse yourself.

1. Latency Metrics (How slow is your shit?)

- Response time distributions (P50, P95, P99)

- Time to first byte and total request time

- Database query execution times

- External service dependency latency
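Prometheus histograms don't keep raw samples, so P50/P95/P99 are *estimated* from cumulative bucket counts. This sketch mimics the linear interpolation PromQL's `histogram_quantile()` does inside the bucket that contains the target rank. It simplifies edge cases (no negative bucket bounds, raises on q outside [0, 1]), and the bucket layout is a made-up example.

```python
import math

def estimate_quantile(q, buckets):
    """Estimate the q-quantile (0 <= q <= 1) from cumulative histogram buckets.

    `buckets` is a list of (upper_bound, cumulative_count) pairs sorted by
    bound and ending with (math.inf, total), like Prometheus `le` buckets.
    """
    if not 0 <= q <= 1:
        raise ValueError("q must be between 0 and 1")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    prev_bound, prev_count = 0.0, 0  # the first bucket is assumed to start at 0
    for bound, count in buckets:
        if count >= rank:
            if math.isinf(bound):
                # Rank lands in the +Inf bucket: all we know is "above the
                # highest finite bound", so report that bound.
                return prev_bound
            in_bucket = count - prev_count
            if in_bucket == 0:
                return bound
            # Assume observations are spread evenly inside the bucket.
            return prev_bound + (bound - prev_bound) * (rank - prev_count) / in_bucket
        prev_bound, prev_count = bound, count
    return prev_bound  # not reached for valid input

# Hypothetical request-duration buckets (seconds) for 1,000 requests.
buckets = [(0.1, 600), (0.25, 900), (0.5, 980), (1.0, 995), (2.5, 1000), (math.inf, 1000)]
print(estimate_quantile(0.50, buckets))  # about 0.083
print(estimate_quantile(0.95, buckets))  # about 0.406
print(estimate_quantile(0.99, buckets))  # about 0.833
```

The estimate is only as good as your bucket boundaries, so it's worth putting a boundary at or near your latency SLO threshold.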

2. Throughput Metrics (How much traffic can you handle?)

- Requests per second across services

- Transaction rates and business operation throughput

- Data processing rates and batch job completion
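Throughput usually comes from counters like `http_requests_total`, which only go up until the process restarts. Here's a sketch of the idea behind PromQL's `rate()`: add up the increases between scrapes, treat any drop as a counter reset, and divide by elapsed time. The real `rate()` also extrapolates to the edges of the range window, which this simplified version skips, and the sample data is invented.

```python
def per_second_rate(samples):
    """Average per-second increase of a counter.

    `samples` is a list of (unix_timestamp_seconds, counter_value) pairs,
    sorted by time. A value lower than its predecessor is treated as a
    counter reset (e.g. the process restarted and counted up from 0 again).
    """
    if len(samples) < 2:
        return 0.0
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        # After a reset the counter restarted from 0, so `cur` is the increase.
        increase += cur - prev if cur >= prev else cur
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed if elapsed > 0 else 0.0

# 60 seconds of scrapes; the service restarted between t=30 and t=45.
samples = [(0, 1000), (15, 1300), (30, 1600), (45, 300), (60, 600)]
print(per_second_rate(samples))  # 20.0 requests/second
```

Without the reset handling, the drop from 1600 to 300 would read as negative traffic and drag the rate down right when a restart is the thing you're trying to see.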

Here's the thing - most teams focus only on latency and forget about the other stuff. Big mistake.

3. Error Metrics (What's actually broken?)

- HTTP error rates by status code

- Application exception rates

- Failed business transactions

- Circuit breaker activations and retry attempts
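Error rates are most useful as a *ratio* of failed to total requests, so a traffic spike doesn't look like an outage. A minimal sketch, assuming per-status request counts collected over the same window (the numbers are made up). It treats 5xx as errors and leaves 4xx out, which is a common choice but not a universal one.

```python
from collections import Counter

def error_ratio(counts_by_status):
    """Share of requests in the window that ended in a 5xx response."""
    total = sum(counts_by_status.values())
    if total == 0:
        return 0.0
    errors = sum(n for status, n in counts_by_status.items() if 500 <= status <= 599)
    return errors / total

def by_status_class(counts_by_status):
    """Roll individual status codes up into classes such as '2xx' and '5xx'."""
    classes = Counter()
    for status, n in counts_by_status.items():
        classes[f"{status // 100}xx"] += n
    return dict(classes)

window = {200: 9400, 201: 300, 404: 200, 500: 60, 503: 40}
print(error_ratio(window))      # 0.01
print(by_status_class(window))  # {'2xx': 9700, '4xx': 200, '5xx': 100}
```

In PromQL the same ratio is typically written as `sum(rate(http_requests_total{status=~"5.."}[5m])) / sum(rate(http_requests_total[5m]))`, assuming the `status` label used in the instrumentation examples.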

4. Saturation Metrics (When will everything explode?)

- Resource utilization approaching limits

- Queue depths and connection pool usage

- Memory pressure and garbage collection impact

- Network bandwidth and connection limits
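Saturation metrics become actionable when you ask *when* a resource runs out, not just how full it is now. This sketch fits a least-squares line to recent samples (the same idea as PromQL's `predict_linear()`, though not its exact implementation) and extrapolates to the limit. The pool size of 100 and the samples are hypothetical.

```python
def seconds_until_limit(samples, limit):
    """Estimate seconds until a steadily growing gauge reaches `limit`.

    `samples` is a list of (timestamp_seconds, value) pairs in time order.
    Returns 0.0 if the limit is already reached and None if there is no
    upward trend to extrapolate.
    """
    if len(samples) < 2:
        return None
    latest_t, latest_v = samples[-1]
    if latest_v >= limit:
        return 0.0
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    # Ordinary least-squares slope: covariance(t, v) / variance(t).
    cov = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    if var == 0:
        return None
    slope = cov / var
    if slope <= 0:
        return None
    # Project forward from the fitted line's value at the latest sample.
    fitted_now = mean_v + slope * (latest_t - mean_t)
    return max(0.0, (limit - fitted_now) / slope)

# Hypothetical: active DB connections sampled every 60 s, pool max of 100.
active = [(0, 40), (60, 46), (120, 52), (180, 58), (240, 64)]
print(seconds_until_limit(active, limit=100))  # about 360 seconds
```

"Pool is full in about 6 minutes" is something you can page on before checkout breaks. "Pool is at 64%" isn't.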

Setting Up for Success: The Instrumentation Foundation

Before your monitoring stops sucking, your applications need to expose the right metrics. This means instrumenting your code to track what actually breaks, not just CPU graphs that look pretty in meetings.

Essential application instrumentation:

```
# Request duration histogram (exposed as _bucket, _sum and _count series)
http_request_duration_seconds{method="GET",handler="/api/users"}

# Request rate counter
http_requests_total{method="GET",handler="/api/users",status="200"}

# Error tracking: the same counter, filtered by status code
http_requests_total{method="GET",handler="/api/users",status="500"}

# Business metric examples
user_registrations_total
order_processing_duration_seconds
payment_transaction_success_total
```
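Here's roughly what sits behind a histogram like `http_request_duration_seconds`: a set of cumulative `le` buckets plus `_sum` and `_count` series. The sketch hand-rolls one in stdlib Python purely to show the data model. The bucket boundaries are an assumption, and in practice a client library such as `prometheus_client` does all of this for you.

```python
import bisect
import math
import threading

class LatencyHistogram:
    """Minimal Prometheus-style histogram: cumulative buckets plus _sum and _count."""

    def __init__(self, name, bounds=(0.05, 0.1, 0.25, 0.5, 1.0, 2.5, 5.0)):
        self.name = name
        self.bounds = sorted(bounds) + [math.inf]
        self.counts = [0] * len(self.bounds)  # per-bucket (non-cumulative) counts
        self.total = 0.0
        self.count = 0
        self._lock = threading.Lock()

    def observe(self, seconds):
        # First bucket whose upper bound is >= the value ("le" means less-or-equal).
        i = bisect.bisect_left(self.bounds, seconds)
        with self._lock:
            self.counts[i] += 1
            self.total += seconds
            self.count += 1

    def render(self, labels):
        """Exposition-format lines; bucket values are cumulative, as Prometheus expects."""
        def fmt(extra=None):
            items = {**labels, **(extra or {})}
            if not items:
                return ""
            return "{" + ",".join(f'{k}="{v}"' for k, v in items.items()) + "}"

        lines = [f"# TYPE {self.name} histogram"]
        with self._lock:
            running = 0
            for bound, n in zip(self.bounds, self.counts):
                running += n
                le = "+Inf" if math.isinf(bound) else repr(bound)
                lines.append(f"{self.name}_bucket{fmt({'le': le})} {running}")
            lines.append(f"{self.name}_sum{fmt()} {self.total}")
            lines.append(f"{self.name}_count{fmt()} {self.count}")
        return "\n".join(lines)

h = LatencyHistogram("http_request_duration_seconds")
for duration in (0.03, 0.08, 0.2, 0.2, 1.7):
    h.observe(duration)
print(h.render({"method": "GET", "handler": "/api/users"}))
```

Storing counts per bucket instead of raw samples keeps memory constant no matter how much traffic you get. It's also why percentiles from a histogram are estimates rather than exact values.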

Database performance metrics:

```
# Query execution time
db_query_duration_seconds{operation="SELECT",table="users"}

# Connection pool metrics
db_connections_active
db_connections_idle
db_connections_max

# Slow query tracking
db_slow_queries_total{query_type="SELECT"}
```
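Connection pool gauges only help if they're updated at the exact moment connections are checked out and returned. Below is a sketch of a hypothetical `InstrumentedPool` (not from any library) built on `queue.Queue`. It tracks active, idle, and max counts plus how long callers wait for a connection, and that wait time is often the first symptom of exhaustion.

```python
import queue
import threading
import time
from contextlib import contextmanager

class InstrumentedPool:
    """Hypothetical pool that keeps db_connections_* style gauges up to date."""

    def __init__(self, connect, max_size):
        self._connect = connect      # factory that opens a new DB connection
        self._idle = queue.Queue()
        self._lock = threading.Lock()
        self._created = 0
        self.max = max_size          # db_connections_max
        self.active = 0              # db_connections_active
        self.wait_seconds = []       # checkout waits; feed a histogram in real code

    @property
    def idle(self):                  # db_connections_idle
        return self._idle.qsize()

    @contextmanager
    def connection(self, timeout=5.0):
        start = time.monotonic()
        conn = None
        with self._lock:
            if self._idle.empty() and self._created < self.max:
                conn = self._connect()  # opened under the lock for simplicity
                self._created += 1
        if conn is None:
            # Reuse an idle connection, or block until one is returned.
            # Raises queue.Empty if the pool stays exhausted past `timeout`.
            conn = self._idle.get(timeout=timeout)
        with self._lock:
            self.wait_seconds.append(time.monotonic() - start)
            self.active += 1
        try:
            yield conn
        finally:
            with self._lock:
                self.active -= 1
            self._idle.put(conn)

pool = InstrumentedPool(connect=object, max_size=2)
with pool.connection():
    print(pool.active, pool.idle, pool.max)  # 1 0 2
print(pool.active, pool.idle)                # 0 1
```

Alerting on `active / max` staying near 1.0, or on checkout waits climbing, catches exhaustion before it turns into login timeouts.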

The key is measuring what affects user experience, not just infrastructure health. Users don't care that your server has low CPU usage if their login request times out because the database connection pool is exhausted. I've seen it claimed that even 100ms of added latency can cost sales, and that a large share of users abandon sites that take more than 3 seconds to load.

How to Fuck Up Performance Monitoring (Learn From Our Pain)

The Average Trap: When 200ms Hides 5-Second Timeouts

Average response times are lies. We had "great" 150ms averages while some users were waiting forever because someone wrote this horrible query that selected like a million users inside a loop. Took us hours to figure out that was the problem. P99 latency showed the truth - people were hitting that 30-second Nginx timeout. Google's SRE teams focus on percentiles for good reason, and Netflix monitors P99.9 to catch the worst user experiences.
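The average trap is easy to reproduce. With invented numbers (980 fast requests and 20 stuck for 5 seconds), the mean looks like a healthy 200ms while P99 shows the timeouts. This uses the nearest-rank percentile definition, which is one of several. Prometheus's bucket-based estimate would come out slightly different.

```python
import math
import statistics

def percentile_nearest_rank(values, p):
    """Smallest sample with at least p% of all samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]

# 1,000 hypothetical requests: 980 fast, 20 stuck for 5 seconds.
latencies = [0.1] * 980 + [5.0] * 20

print(f"mean {statistics.mean(latencies):.3f}s")              # mean 0.198s
print(f"p50  {percentile_nearest_rank(latencies, 50):.3f}s")  # p50  0.100s
print(f"p99  {percentile_nearest_rank(latencies, 99):.3f}s")  # p99  5.000s
```

Two percent of your users having a terrible time barely moves the mean. It moves P99 all the way to the timeout.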

Ignoring Business Metrics: When Perfect Uptime Means Zero Revenue

Our API had 99.9% uptime but conversion went to hell during what we thought was an optimization. Turns out the payment flow was returning HTTP 200 with error messages inside the JSON response body - classic "successful failure" mess. Mobile users were seeing "Payment failed" while our monitoring showed perfect green dots. Companies like Spotify track business metrics alongside technical ones, and Etsy measures conversion rates as their primary SLI for good reason.
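One way to catch "successful failures" is to decide success from the response payload, not just the status code, and to count business outcomes separately from HTTP errors. The JSON shape below (an `error` field or `"status": "failed"`) and the `outcome`-labeled counter are hypothetical, so match them to whatever your payment flow actually returns.

```python
import json
from collections import Counter

# Hypothetical business-outcome counter, e.g. exported as
# payment_transaction_total{outcome="..."}
payment_outcomes = Counter()

def classify_payment_response(http_status, body):
    """Return 'success', 'http_error', or 'business_failure' for one response."""
    if http_status >= 400:
        return "http_error"
    try:
        payload = json.loads(body)
    except ValueError:
        return "business_failure"  # a 200 with an unparseable body is not a success
    if not isinstance(payload, dict):
        return "business_failure"
    if payload.get("error") or payload.get("status") == "failed":
        return "business_failure"  # HTTP 200, but the payment did not go through
    return "success"

def record(http_status, body):
    payment_outcomes[classify_payment_response(http_status, body)] += 1

record(200, '{"status": "succeeded", "id": "pay_1"}')
record(200, '{"status": "failed", "error": "Payment failed"}')
record(502, "Bad Gateway")
print(dict(payment_outcomes))
# {'success': 1, 'business_failure': 1, 'http_error': 1}
```

Status-code dashboards would count two of these three as fine. An outcome-based counter counts only one.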

Alert Spam Hell: When Your Team Stops Caring

We got like 40-something CPU alerts last week for servers sitting at 51% usage. None of them mattered. Meanwhile, the auth service was silently failing for 20% of login attempts - Redis connection pool exhaustion, turns out - and nobody noticed until users started complaining on Twitter. Alert on user pain, not server feelings. Effective alerting principles from Google emphasize symptom-based alerting, and PagerDuty found that alert fatigue affects most teams.
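Symptom-based alerting in practice means paging on the failure rate users actually see. A minimum traffic floor keeps a single failed login at 3am from waking anyone up. A sketch of the decision logic: the 5% threshold, the 100-attempt floor, and the rule that the condition must hold for several consecutive evaluations (similar in spirit to the `for:` clause on Prometheus alerting rules) are all illustrative assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class LoginFailureAlert:
    threshold: float = 0.05      # page if more than 5% of login attempts fail
    min_attempts: int = 100      # ignore windows with too little traffic to judge
    required_breaches: int = 3   # consecutive bad evaluations before paging
    _breaches: int = field(default=0, init=False)

    def evaluate(self, attempts, failures):
        """Feed one evaluation window; returns True when the alert should fire."""
        breached = (attempts >= self.min_attempts
                    and failures / attempts > self.threshold)
        self._breaches = self._breaches + 1 if breached else 0
        return self._breaches >= self.required_breaches

alert = LoginFailureAlert()
# 20% of logins failing, evaluated once a minute.
windows = [(500, 100), (520, 104), (480, 96)]
print([alert.evaluate(a, f) for a, f in windows])  # [False, False, True]
```

Nothing in this rule knows or cares about CPU. It fires on the thing users complained about on Twitter.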

The Dependency Blindness: When Your Stack Is Only as Strong as Stripe

Your beautiful 50ms API response time doesn't mean anything if the payment gateway takes 12 seconds to authorize a credit card. We learned this during Black Friday when Stripe was having issues and our entire checkout flow looked broken. We spent 3 hours debugging our "broken" code while our monitoring showed perfect internal metrics and customers complained about "your broken site." The actual error was stripe.exception.APIConnectionError: Failed to establish a new connection, and Stripe's status page said everything was green the whole time.
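To stop depending on a vendor's status page, measure the dependency from your side: time every outbound call and label its outcome, so "the payment gateway is slow or failing" shows up as its own graph. A sketch using a decorator. `track_dependency`, the `dependency_calls` store, and the fake `authorize_card` are hypothetical, and a real service would export these as a histogram labeled by dependency and outcome.

```python
import functools
import time
from collections import defaultdict

# (dependency, outcome) -> list of call durations in seconds
dependency_calls = defaultdict(list)

def track_dependency(name):
    """Decorator recording duration and outcome of every call to an external service."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.monotonic()
            outcome = "error"
            try:
                result = func(*args, **kwargs)
                outcome = "ok"
                return result
            except TimeoutError:
                outcome = "timeout"
                raise
            finally:
                dependency_calls[(name, outcome)].append(time.monotonic() - start)
        return wrapper
    return decorator

@track_dependency("payment_gateway")
def authorize_card(amount_cents, fail=False):
    # Stand-in for a real gateway call; raises to simulate a connection failure.
    if fail:
        raise ConnectionError("Failed to establish a new connection")
    return {"approved": True, "amount": amount_cents}

authorize_card(1999)
try:
    authorize_card(1999, fail=True)
except ConnectionError:
    pass
print(sorted(dependency_calls))
# [('payment_gateway', 'error'), ('payment_gateway', 'ok')]
```

Vendor SDKs usually raise their own exception types rather than built-in `TimeoutError`, so you'd map those onto outcomes in the `except` clauses. The principle stays the same: your graph, your numbers.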

Anyway, that's the foundation. Now let's get into the technical stuff so you can build monitoring that actually helps when things go sideways.