Getting New Relic set up properly takes way longer than they claim, but here's how to actually do it without wanting to throw your laptop out the window. There are several ways to install this thing, depending on how much pain you want to experience upfront versus ongoing.


The Guided Installer Lies About Everything

New Relic's "guided installation" works fine if you're running vanilla Ubuntu with a standard Node.js app. The moment you have custom network policies, Docker Compose setups, or anything remotely interesting, it fails spectacularly. Takes 30 minutes if you're lucky and nothing breaks (spoiler: something always breaks).

Agent Installation Reality Check

Here's what actually happens with each language:

Infrastructure Agent Problems

The Infrastructure agent looks innocent but will:

- Use 200MB+ RAM on busy hosts (they claim "minimal overhead") - version 1.44.0 introduced a memory leak that took us weeks to track down

- Send way more data than you expect - a single server can generate 50GB+/month. `NRINFRA-1734: Error sending data to collector` means you're probably hitting rate limits

- Break on systems with custom systemd configurations. Ubuntu 22.04 with non-standard service paths = 3 hours of debugging

- Require root access, which your security team will love

Kubernetes - Where Dreams Go to Die

New Relic's Kubernetes monitoring with Pixie integration sounds amazing in theory. In practice:

- Pixie crashes on nodes with less than 1GB free memory - you'll see `OOMKilled` in your pod status and wonder why your monitoring died along with your app

- Network policies block everything - spent 6 hours debugging `context deadline exceeded` errors before realizing our policies blocked the collector

- The cluster agent needs way more permissions than documented. That RBAC config they provide? It's missing half the required permissions

- Data explosion - a medium K8s cluster easily generates 100GB+/month. Delete your `node_modules` folders or they'll index everything

If you have Istio service mesh, prepare for a week of troubleshooting. The Pixie integration breaks with custom CNI plugins about 50% of the time.

The Implementation Timeline Nobody Talks About

First few days: Install agents, everything looks fine, pat yourself on the back

Next week or two: Realize you're getting 500 alerts per day, all useless. Your Slack channels are flooded with garbage notifications

Month 1: Spend 40+ hours tuning alert thresholds and learning NRQL. I spent an entire weekend debugging why Pixie kept crashing our staging cluster - turns out it needs way more memory than documented

Sometime later: Discover your bill jumped from $100 to $2000 because one microservice had debug logging enabled and you didn't notice for 3 weeks. This is why we can't have nice things

2-3 months in: Actually start getting useful insights once you figure out which metrics matter vs which are just noise

Real Customer Story Commentary

Those success stories they love to cite? Let's be honest about them:

- Kurt Geiger improved Core Web Vitals - impressive, but this took their team 6 months of tuning, not the "quick win" implied

- BlackLine's $16 million savings - take these marketing numbers with an industrial-sized grain of salt. They consolidated 15 tools, so of course costs went down

- Forbes solves problems faster - mainly because they have a dedicated platform team and unlimited budget

What Actually Breaks in Production

Data Retention Surprises

That Data Plus pricing at $0.60/GB with "enhanced retention" sounds reasonable until you realize:

- Default retention is only 8 days for metrics

- 90-day retention sounds great until your 500GB/month usage costs $300/month extra

- Log forwarding will murder your network bandwidth - 1 busy app server generates 10GB+/day of logs
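The math behind those numbers is worth running yourself before you pick a retention tier. Here's a rough sketch using the $0.60/GB figure quoted above. The function names and the 30-day month are my own assumptions, and it ignores any free allowance or contract discount you might have.

```python
def monthly_ingest_cost(gb_per_month: float, rate_per_gb: float = 0.60) -> float:
    """Estimate monthly ingest cost in dollars (ignores any free allowance)."""
    return round(gb_per_month * rate_per_gb, 2)


def log_volume_per_month(gb_per_day: float, days: int = 30) -> float:
    """Project a daily log volume to a month, assuming a 30-day month."""
    return gb_per_day * days


if __name__ == "__main__":
    # 500 GB/month at $0.60/GB, the example from the list above
    print(monthly_ingest_cost(500))
    # One busy app server at 10 GB/day of logs
    monthly_gb = log_volume_per_month(10)
    print(monthly_gb, monthly_ingest_cost(monthly_gb))
```

Plug in your own per-host numbers and multiply by your host count. That second case is one server's logs alone, before you count metrics or traces.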

Memory Leak Detection Agent

The infrastructure agent has its own memory issues - we've seen it grow to 1GB+ RAM usage on busy hosts. I learned this the hard way when it took down our prod API for 2 hours at 3am. Restart it monthly or it'll eventually OOM kill your actual applications.
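If you'd rather catch the growth than restart on a calendar, a small check on the agent's resident memory does the job. This is a sketch, not anything New Relic ships: it assumes a Linux host, parses the text of `/proc/<pid>/status`, and uses a 1GB cutoff that I picked to match the number above. Finding the agent's PID and wiring this into cron or systemd is left to you.

```python
import re
from typing import Optional


def parse_rss_mb(proc_status_text: str) -> Optional[float]:
    """Extract resident memory (VmRSS) in MB from the text of /proc/<pid>/status."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", proc_status_text, re.MULTILINE)
    if match is None:
        return None  # e.g. kernel threads have no VmRSS line
    return int(match.group(1)) / 1024


def needs_restart(rss_mb: float, limit_mb: float = 1024) -> bool:
    """True when memory exceeds the limit (1GB here, an assumed cutoff)."""
    return rss_mb > limit_mb


if __name__ == "__main__":
    # Sample text in the /proc status format; real input would come from
    # open(f"/proc/{pid}/status").read() for the agent's PID.
    sample = "Name:\tnewrelic-infra\nVmRSS:\t 2097152 kB\n"
    rss = parse_rss_mb(sample)
    print(rss, needs_restart(rss))
```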

Alert Fatigue is Real

Default thresholds are garbage:

- CPU alerts trigger during normal load spikes

- Memory alerts fire when your app uses more than 80% RAM (which is normal)

- Error rate alerts activate on single 404s
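The fix for all three is the same idea: alert on sustained breaches and on rates, not on single samples or single events. Here's a minimal sketch of that logic in plain Python. It illustrates the technique only and is not New Relic's alert API; the function names, the 90% CPU threshold, and the 3-sample window are assumptions I made up for the example.

```python
from typing import Sequence


def sustained_breach(samples: Sequence[float], threshold: float, min_consecutive: int) -> bool:
    """True only if `min_consecutive` samples in a row exceed `threshold`."""
    if min_consecutive < 1:
        raise ValueError("min_consecutive must be at least 1")
    run = 0
    for value in samples:
        # Extend the current run on a breach, reset it otherwise
        run = run + 1 if value > threshold else 0
        if run >= min_consecutive:
            return True
    return False


def error_rate(errors: int, requests: int) -> float:
    """Errors as a fraction of requests; 0.0 when there is no traffic."""
    return errors / requests if requests else 0.0


if __name__ == "__main__":
    one_spike = [40, 95, 42, 38, 41]
    real_problem = [40, 95, 42, 91, 93, 96]
    print(sustained_breach(one_spike, 90, 3))     # a lone spike should not page anyone
    print(sustained_breach(real_problem, 90, 3))  # three breaches in a row should
    print(error_rate(1, 1000))                    # a single 404 in 1,000 requests
```

When you translate this into real alert conditions, the knobs to look for are the evaluation window and the "for at least N minutes" duration, plus a percentage-based error threshold instead of a raw count.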

The 2025 Feature Marketing Reality

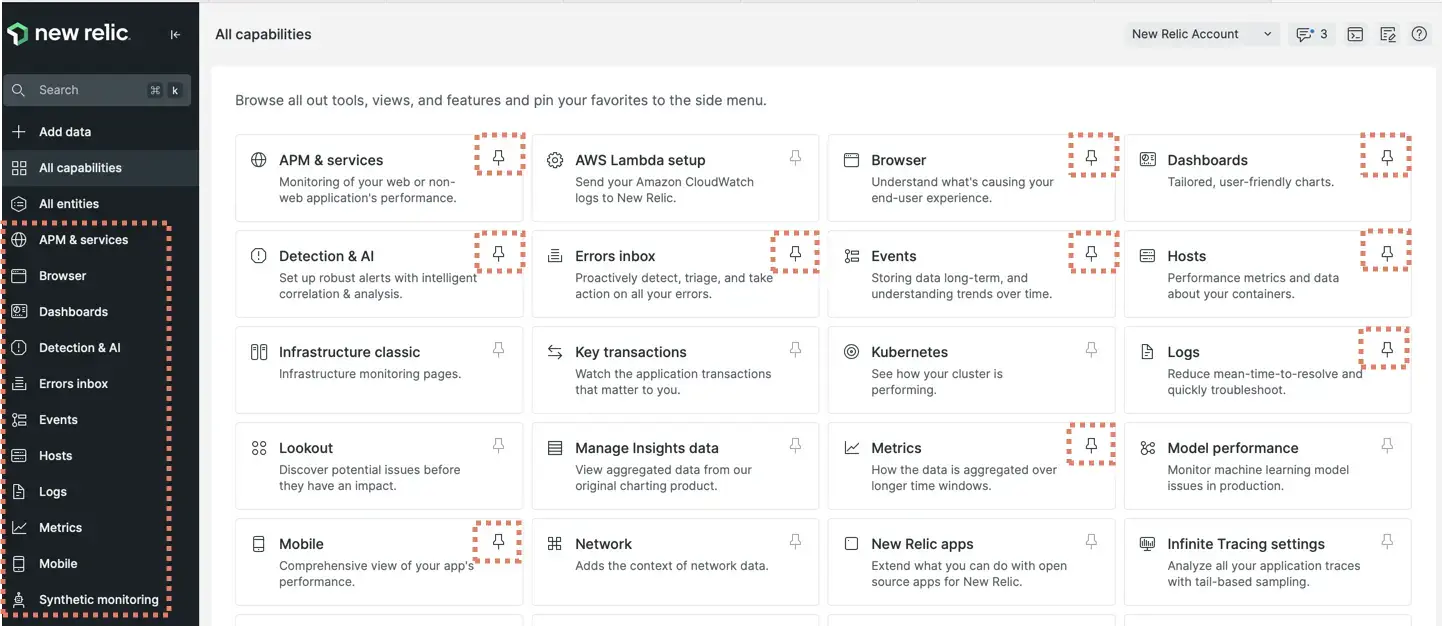

"Service Architecture Intelligence"

This fancy feature is basically service discovery with better UI. It's nice but won't magically organize your microservices mess.

"Transaction 360"

Claims to reduce MTTR by 5x - in reality, it's distributed tracing with better correlation. Useful if you have complex request flows, but won't fix fundamental monitoring issues.

"AI-Powered" Everything

The AIOps features mostly tell you obvious shit you already figured out. "Your app is slow because CPU is high" - thanks, AI. I spent 3 hours waiting for it to "intelligently" correlate issues that any engineer would spot in 30 seconds looking at a graph.

How to Actually Succeed With New Relic

Start Small and Stupid

- Install ONE agent on your least critical service first

- Monitor only errors and response times initially

- Gradually add more monitoring as you understand the data volume impact

- Never enable debug logging in production without watching your bill
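"Watching your bill" in practice means comparing each service's ingest today against its own recent baseline, so a debug-logging accident shows up in a day instead of three weeks. Here's a sketch of that comparison. The input format (service name mapped to daily GB, oldest first), the service names, and the 3x factor are all assumptions for illustration; how you export the per-service numbers from your account is up to you.

```python
from statistics import mean
from typing import Dict, List


def find_ingest_spikes(daily_gb: Dict[str, List[float]], factor: float = 3.0) -> List[str]:
    """Flag services whose latest daily ingest exceeds `factor` x their prior average.

    `daily_gb` maps a service name to its daily ingest in GB, oldest first.
    """
    flagged = []
    for service, history in daily_gb.items():
        if len(history) < 2:
            continue  # not enough data to establish a baseline
        *previous, latest = history
        baseline = mean(previous)
        if baseline > 0 and latest > factor * baseline:
            flagged.append(service)
    return sorted(flagged)


if __name__ == "__main__":
    usage = {
        "checkout": [1.0, 1.2, 0.9, 1.1],  # steady
        "search": [0.5, 0.5, 0.6, 9.0],    # someone left debug logging on
    }
    print(find_ingest_spikes(usage))
```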

Set Up Billing Alerts Immediately

- Alert at 50GB, 75GB, and 90GB monthly usage

- Monitor per-service data usage daily for the first month:

curl -H "Api-Key: $NEW_RELIC_USER_API_KEY" "https://api.newrelic.com/v2/usages.json"

- That "transparent pricing" isn't so transparent when your bill arrives
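The threshold logic itself is trivial, but it's worth getting the "alert once per threshold" part right so you don't add to your own alert fatigue. Here's a sketch using the 50/75/90GB levels from above. The function names are hypothetical, and the usage figure is something you feed in from whatever usage export you trust; this code doesn't call any New Relic API.

```python
from typing import List, Sequence

THRESHOLDS_GB = (50, 75, 90)  # the three alert levels suggested above


def crossed_thresholds(usage_gb: float, thresholds: Sequence[float] = THRESHOLDS_GB) -> List[float]:
    """Return every threshold that month-to-date usage has reached, ascending."""
    return [t for t in sorted(thresholds) if usage_gb >= t]


def newly_crossed(previous_gb: float, current_gb: float,
                  thresholds: Sequence[float] = THRESHOLDS_GB) -> List[float]:
    """Return thresholds crossed since the previous check, so each alerts only once."""
    before = set(crossed_thresholds(previous_gb, thresholds))
    return [t for t in crossed_thresholds(current_gb, thresholds) if t not in before]


if __name__ == "__main__":
    print(crossed_thresholds(80))  # everything reached so far this month
    print(newly_crossed(60, 80))   # only what changed since yesterday's check
    print(newly_crossed(80, 85))   # nothing new, so stay quiet
```

Run it once a day with yesterday's and today's month-to-date totals and only notify on what `newly_crossed` returns.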

Accept the Learning Curve

Seriously, whoever wrote that "quick setup" marketing copy has never actually installed monitoring software in their life. Plan 2-3 weeks minimum to get anything useful working.