Azure Container Instances (ACI) is Microsoft's attempt at serverless containers - think AWS Fargate but with more Azure-specific quirks. As of September 2025, it runs your containers in what Microsoft calls "hypervisor-level isolation", which sounds fancy but basically means each container group gets its own VM underneath.

The promise is simple: skip the Kubernetes YAML hell and VM management nightmare. Just az container create and boom - your container is supposedly running. Reality check: it works great for hello-world demos, then breaks in creative ways when you try to do anything real in production.
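For reference, here's roughly what that one command looks like once you script it. This is a minimal Python sketch that only builds the argument list for `az container create`. The resource group, container name, and CPU/memory values are placeholders, and `mcr.microsoft.com/azuredocs/aci-helloworld` is Microsoft's public quickstart image. It sets `--restart-policy` explicitly because the CLI default is `Always`, which keeps restarting a container that crashes.

```python
import shlex

VALID_RESTART_POLICIES = {"Always", "OnFailure", "Never"}


def build_create_command(resource_group: str, name: str, image: str,
                         cpu: float = 1.0, memory_gb: float = 1.5,
                         restart_policy: str = "OnFailure") -> list[str]:
    """Return the argv for `az container create` without running it."""
    if restart_policy not in VALID_RESTART_POLICIES:
        raise ValueError(f"unsupported restart policy: {restart_policy}")
    return [
        "az", "container", "create",
        "--resource-group", resource_group,
        "--name", name,
        "--image", image,
        "--cpu", str(cpu),
        "--memory", str(memory_gb),          # in GB
        "--restart-policy", restart_policy,  # CLI default would be Always
    ]


if __name__ == "__main__":
    # Placeholder resource group and container name.
    argv = build_create_command("demo-rg", "hello-aci",
                                "mcr.microsoft.com/azuredocs/aci-helloworld")
    print(shlex.join(argv))
    # To actually create it (needs a logged-in Azure CLI):
    # import subprocess; subprocess.run(argv, check=True)
```

With `OnFailure`, a container that exits non-zero is still restarted, so a crash loop remains possible. `Never` is the setting that lets a failing batch job stay down.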

Here's what actually happens: You define your container, Azure spins up a VM behind the scenes, pulls your image (sometimes), starts your container (usually), and charges you per second whether your app is doing anything or just sitting there eating memory.
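Because you're billed per second for the vCPU and memory you allocate, not per request, it's worth doing the arithmetic before you deploy. A rough Python sketch follows. The rates are inputs you have to look up for your region and OS: the $0.045/vCPU-hour figure this article quotes for its own bill is only an example, and the memory rate defaults to zero purely because no figure is given here.

```python
def estimate_cost(vcpus: float, memory_gb: float, seconds: float,
                  vcpu_hour_rate: float, gb_hour_rate: float = 0.0) -> float:
    """Estimate ACI compute cost for one container group.

    Rates are per vCPU-hour and per GB-hour. They vary by region and OS,
    so pass in the numbers from your own pricing page or invoice.
    """
    if min(vcpus, memory_gb, seconds, vcpu_hour_rate, gb_hour_rate) < 0:
        raise ValueError("inputs must be non-negative")
    hours = seconds / 3600  # per-second billing, expressed in hours
    return hours * (vcpus * vcpu_hour_rate + memory_gb * gb_hour_rate)


if __name__ == "__main__":
    # Example: 2 vCPUs allocated for a 48-hour weekend, compute rate only.
    weekend = 48 * 3600
    print(f"${estimate_cost(2, 0, weekend, vcpu_hour_rate=0.045):.2f}")
```

The clock runs for as long as the group is allocated, so a container that is crash-looping or idling costs the same as one doing useful work.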

Why Engineers Actually Use ACI (And Why They Regret It)

Speed (When It Works): Containers supposedly start in "seconds" - true if your image is tiny. Got a real-world .NET image? Time to make coffee while you wait. The "image caching" is a lie - cold starts are unpredictable as hell. Sometimes quick, sometimes I question my career choices while waiting.
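Since cold-start time swings so much, a fixed `sleep` in a deploy script will eventually be wrong. A sturdier pattern is to poll with a deadline and record how long startup actually took. This Python sketch assumes you supply a `get_state` callable. Wiring it to something like `az container show --resource-group <rg> --name <name> --query instanceView.state --output tsv` is an assumption about the output shape that you should verify against your CLI version. The 600-second timeout is arbitrary.

```python
import time
from typing import Callable


def wait_until_running(get_state: Callable[[], str],
                       timeout_s: float = 600.0,
                       poll_s: float = 5.0,
                       sleep: Callable[[float], None] = time.sleep,
                       now: Callable[[], float] = time.monotonic) -> float:
    """Poll get_state() until it reports "Running"; return seconds elapsed.

    Raises RuntimeError if the state is "Failed" and TimeoutError once
    timeout_s has passed. sleep/now are injectable so the function can be
    tested without real waiting.
    """
    start = now()
    while True:
        state = get_state()
        elapsed = now() - start
        if state == "Running":
            return elapsed
        if state == "Failed":
            raise RuntimeError(f"container group failed after {elapsed:.0f}s")
        if elapsed >= timeout_s:
            raise TimeoutError(f"still {state!r} after {elapsed:.0f}s")
        sleep(poll_s)
```

If you log the returned elapsed time on every deploy, you get real numbers for how unpredictable your cold starts are.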

Simplicity (Ha!): Yes, az container create is one command. But wait until you need persistent storage, networking that doesn't suck, or logging that actually works. Suddenly you're writing ARM templates that make Kubernetes YAML look elegant.

Cost Efficiency (Your CFO Will Hate You): Per-second billing sounds great until your container gets stuck in a restart loop during your Black Friday deployment. At $0.045/vCPU-hour, a misbehaving 2-vCPU container ran up an $800 bill over a weekend because nobody noticed it was crash-looping every 30 seconds. And those "scale to zero" benefits? They only work if your containers actually stop instead of hanging with zombie Node.js processes that never quite die.
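The fix for "nobody noticed" is to make noticing automatic. Below is a minimal Python sketch. It assumes the JSON from `az container show --resource-group <rg> --name <name> --output json` exposes a per-container `instanceView.restartCount`, so check that against your own output before relying on it. The threshold of 3 restarts is arbitrary. Run it from a scheduled job and alert whenever the returned list is non-empty.

```python
import json


def crash_looping(show_json: str, max_restarts: int = 3) -> list[tuple[str, int]]:
    """Return (name, restartCount) for containers restarted more than max_restarts times.

    Assumed input shape (from `az container show --output json`):
    {"containers": [{"name": ..., "instanceView": {"restartCount": N}}]}
    instanceView may be missing or null, so it is treated as optional.
    """
    group = json.loads(show_json)
    flagged = []
    for container in group.get("containers") or []:
        view = container.get("instanceView") or {}
        restarts = view.get("restartCount") or 0
        if restarts > max_restarts:
            flagged.append((container.get("name", "<unnamed>"), restarts))
    return flagged


if __name__ == "__main__":
    # Sample payload in the assumed shape; in practice, capture the CLI's stdout.
    sample = json.dumps({
        "containers": [
            {"name": "api", "instanceView": {"restartCount": 412}},
            {"name": "sidecar", "instanceView": {"restartCount": 0}},
        ]
    })
    for name, count in crash_looping(sample):
        print(f"ALERT: {name} has restarted {count} times")
```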

How ACI Actually Works Under the Hood

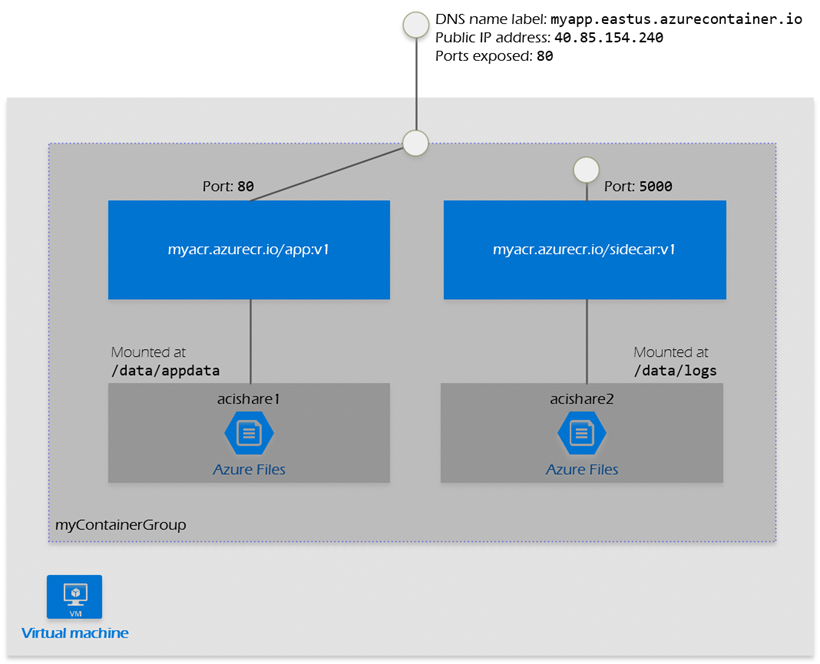

Microsoft's "hypervisor-level security" marketing speak translates to: "We spin up a VM for your container group." Each container group gets its own VM underneath, which explains the cold start times and why you can't do privileged operations.

The Good: True isolation means no noisy neighbors screwing with your performance. Your 2 vCPU container actually gets 2 vCPUs, not 2 vCPUs "best effort" like some platforms.

The Bad: VM overhead means you're paying for a hypervisor whether you need it or not. And when that VM decides to restart for "platform maintenance" (usually during your demo), your container goes down hard with no warning.

Production Reality: During our Q4 launch, our ACI containers randomly restarted at 2 AM because the underlying VM got reclaimed for "platform maintenance." No warning, no migration. Just poof - 3 hours of batch processing lost. The "guaranteed resources" are real, but so are the surprise reboots that wipe out your work and leave you explaining to executives why the quarterly reports are delayed.
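Since the platform can restart the VM without warning, long batch jobs should assume they'll be killed mid-run and resume from a checkpoint. The minimal Python sketch below records the index of the next unprocessed item in a small JSON file and resumes from that index on startup. The checkpoint path is hypothetical. In ACI it would need to live on a mounted volume such as an Azure Files share, because the container's local filesystem shouldn't be counted on to survive a platform restart. Items processed after the last checkpoint are redone after a restart, so `handle` should be idempotent.

```python
import json
import os
import tempfile
from typing import Callable, Sequence

# Hypothetical path on a mounted Azure Files share; adjust to your volume mount.
DEFAULT_CHECKPOINT = "/mnt/checkpoints/batch.json"


def load_checkpoint(path: str) -> int:
    """Return the index of the next item to process (0 if there is no checkpoint)."""
    try:
        with open(path) as f:
            return int(json.load(f)["next_index"])
    except FileNotFoundError:
        return 0


def save_checkpoint(path: str, next_index: int) -> None:
    """Write to a temp file, then rename it over the old checkpoint.

    A kill mid-write leaves the previous checkpoint intact. os.replace is
    atomic on local POSIX filesystems; on an SMB mount treat it as best effort.
    """
    directory = os.path.dirname(path) or "."
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump({"next_index": next_index}, f)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def process_batch(items: Sequence, handle: Callable,
                  checkpoint_path: str = DEFAULT_CHECKPOINT, every: int = 100) -> int:
    """Process items from the last checkpoint onward; return the index it resumed from."""
    start = load_checkpoint(checkpoint_path)
    for i in range(start, len(items)):
        handle(items[i])
        if (i + 1) % every == 0:
            save_checkpoint(checkpoint_path, i + 1)
    save_checkpoint(checkpoint_path, len(items))
    return start
```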

What ACI Can (And Can't) Do in September 2025

Features That Actually Work:

- Confidential containers - if you need a TEE (trusted execution environment) and have money to burn

- Spot containers - up to 70% savings until Azure kills them during important batch jobs

- Both Linux and Windows containers - Windows support is surprisingly decent

- VNet integration - requires NAT gateways nobody tells you about

Features That Were Murdered:

- GPU support got axed in July 2025 - screwed over every ML team using ACI

What's Still Missing (And Why You'll Hit These Walls):

- Service Discovery: Containers in the same container group share localhost, but containers in different groups can't find each other without hard-coding IPs

- Load Balancing: You need external load balancers, adding complexity and cost

- Persistent Storage: Azure Files mounting is clunky and expensive

- Auto-scaling: Manual container groups only - no HPA or KEDA magic

- Health Checks: Basic liveness/readiness probes that barely work
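ACI's probes can only be as honest as the endpoint they hit. Here is a small Python sketch that uses only the standard library. It serves `/healthz` and returns 503 once the app clears a flag, for example when a worker loop stops making progress. The path, the port, and the `HEALTHY` flag are hypothetical choices. Point the container group's liveness probe (its `httpGet` path and port) at whatever you actually use.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical flag: the app clears it when it detects it is stuck
# (e.g. the worker loop hasn't finished an iteration in N minutes).
HEALTHY = threading.Event()
HEALTHY.set()


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/healthz":
            self.send_error(404)
            return
        ok = HEALTHY.is_set()
        body = b"ok" if ok else b"unhealthy"
        self.send_response(200 if ok else 503)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep probe traffic out of the container logs


def start_health_server(port: int = 8080) -> HTTPServer:
    """Serve /healthz on a background daemon thread and return the server."""
    server = HTTPServer(("0.0.0.0", port), HealthHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

When the probe sees a 503, it fails, and ACI restarts the container according to the group's restart policy. A stuck process then gets replaced instead of sitting there billing you.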

When to Use ACI: Simple batch jobs, CI/CD runners, development environments where downtime doesn't matter.

When to Run Away: Microservices, anything needing service mesh, production workloads where 99.9% uptime matters. Use AKS or Container Apps instead.

Here's how ACI compares to alternatives...

When you need alternatives: Check the Container Apps vs ACI comparison to see if you should switch, and use the Azure pricing calculator to estimate your actual bill before committing.