Some engineer at Google got fucking tired of babysitting 10,000 containers that crashed every time someone sneezed in Mountain View, so they built a robot overlord to restart everything automatically. That overlord is Kubernetes: modeled on Google's internal Borg system, open-sourced as a slightly worse version, and released so Google could watch the world struggle with it. Misery loves company.

The Container Chaos Problem

Before Kubernetes, running containers in production was like herding cats while blindfolded:

- Manual Restarts: Someone had to wake up at 3am when containers crashed (spoiler: they always crash)

- Random Failures: Services would die and nobody knew where they were supposed to be running

- Resource Waste: Half your servers were idle while the other half were on fire

- Deployment Hell: Rolling updates meant praying nothing broke and having a rollback script ready

- Network Nightmares: Services couldn't find each other without hardcoded IPs that changed every restart

How This Clusterfuck Actually Works

Kubernetes is like that micromanager who checks on everything every 2 seconds and panics when anything changes. You tell it what you want, and it obsessively makes sure that's exactly what you get - even if it has to restart things 100 times.

The "Desired State" Obsession

You write YAML files describing what you want, and Kubernetes becomes a control loop that never stops checking if reality matches your description. YAML files are the devil's configuration format - one wrong indent and everything explodes:

    # This YAML will either work perfectly or destroy your weekend
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-app-that-will-definitely-work
    spec:
      replicas: 3 # Kubernetes: "I'll give you 2 and restart the 3rd every 5 minutes"
      selector:
        matchLabels:
          app: my-app
      template:
        metadata:
          labels:
            app: my-app # must match spec.selector.matchLabels or the Deployment is rejected
        spec:
          containers:
          - name: my-app
            image: nginx:1.24 # Pro tip: never use :latest unless you enjoy surprises
            resources:
              requests:
                memory: "64Mi" # It'll actually use 2GB but hey, who's counting?
                cpu: "250m"
              limits:
                memory: "128Mi" # This is where your app gets OOMKilled
                cpu: "500m"
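If "control loop that never stops checking" sounds abstract, here is a minimal Python sketch of one pass of it. Everything in it is made up for illustration: `FakeCluster`, `reconcile`, and the pod names are hypothetical stand-ins, not the real Kubernetes API, and the real controllers are far more paranoid than this.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class FakeCluster:
    """Hypothetical in-memory stand-in for a cluster; not a real Kubernetes API."""
    running: set = field(default_factory=set)
    _ids: count = field(default_factory=count)

    def start_pod(self) -> str:
        name = f"my-app-{next(self._ids)}"
        self.running.add(name)
        return name

    def stop_pod(self, name: str) -> None:
        self.running.discard(name)


def reconcile(cluster: FakeCluster, desired_replicas: int) -> list:
    """Run one pass: compare observed state to desired state and act on the gap."""
    actions = []
    # Too few pods: start replacements until the count matches.
    while len(cluster.running) < desired_replicas:
        actions.append(("start", cluster.start_pod()))
    # Too many pods: stop extras (sorted only to keep the sketch deterministic).
    while len(cluster.running) > desired_replicas:
        victim = sorted(cluster.running)[-1]
        cluster.stop_pod(victim)
        actions.append(("stop", victim))
    return actions


cluster = FakeCluster()
reconcile(cluster, desired_replicas=3)   # first pass starts three pods
cluster.stop_pod("my-app-1")             # simulate a 3am crash
actions = reconcile(cluster, desired_replicas=3)
print(actions)                           # the loop starts exactly one replacement
```

The point of the design is that `reconcile` never asks what happened, only what differs. A crash, a manual delete, and a scale-down all get the same treatment.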

The Scheduler's Black Magic

The scheduler decides where your pods go using logic that would make a chess grandmaster weep. It considers resource requests, node affinity, and about 50 other factors you've never heard of.

Reality check: Your pod is Pending? 99% of the time it's because:

- You requested more memory than any node has

- Your node selector is wrong

- Taints and tolerations are misconfigured

- The scheduler is having an existential crisis
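The first three causes above boil down to a filtering step, which can be sketched in a few lines of Python. This is a toy under loud assumptions: `Node`, `Pod`, `why_unschedulable`, and `schedule` are hypothetical names, taints are flattened to plain strings, and the real scheduler also scores the surviving nodes instead of grabbing the first one.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_memory_mi: int
    labels: dict = field(default_factory=dict)
    taints: set = field(default_factory=set)


@dataclass
class Pod:
    memory_request_mi: int
    node_selector: dict = field(default_factory=dict)
    tolerations: set = field(default_factory=set)


def why_unschedulable(pod: Pod, node: Node) -> list:
    """Return the reasons this node is filtered out (empty list means it fits)."""
    reasons = []
    if pod.memory_request_mi > node.free_memory_mi:
        reasons.append("insufficient memory")
    if any(node.labels.get(k) != v for k, v in pod.node_selector.items()):
        reasons.append("node selector mismatch")
    if node.taints - pod.tolerations:
        reasons.append("untolerated taint")
    return reasons


def schedule(pod: Pod, nodes: list):
    """Return the first node name that fits, or None (the pod stays Pending)."""
    for node in nodes:
        if not why_unschedulable(pod, node):
            return node.name
    return None


nodes = [
    Node("node-a", free_memory_mi=512, labels={"disk": "ssd"}),
    Node("node-b", free_memory_mi=4096, labels={"disk": "hdd"},
         taints={"dedicated=gpu"}),
]
pod = Pod(memory_request_mi=1024, node_selector={"disk": "ssd"})

for node in nodes:
    print(node.name, why_unschedulable(pod, node))
print("scheduled on:", schedule(pod, nodes))  # None here means Pending
```

In a real cluster the same per-node reasons show up in the events section of `kubectl describe pod`, which is where to look before blaming the existential crisis.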

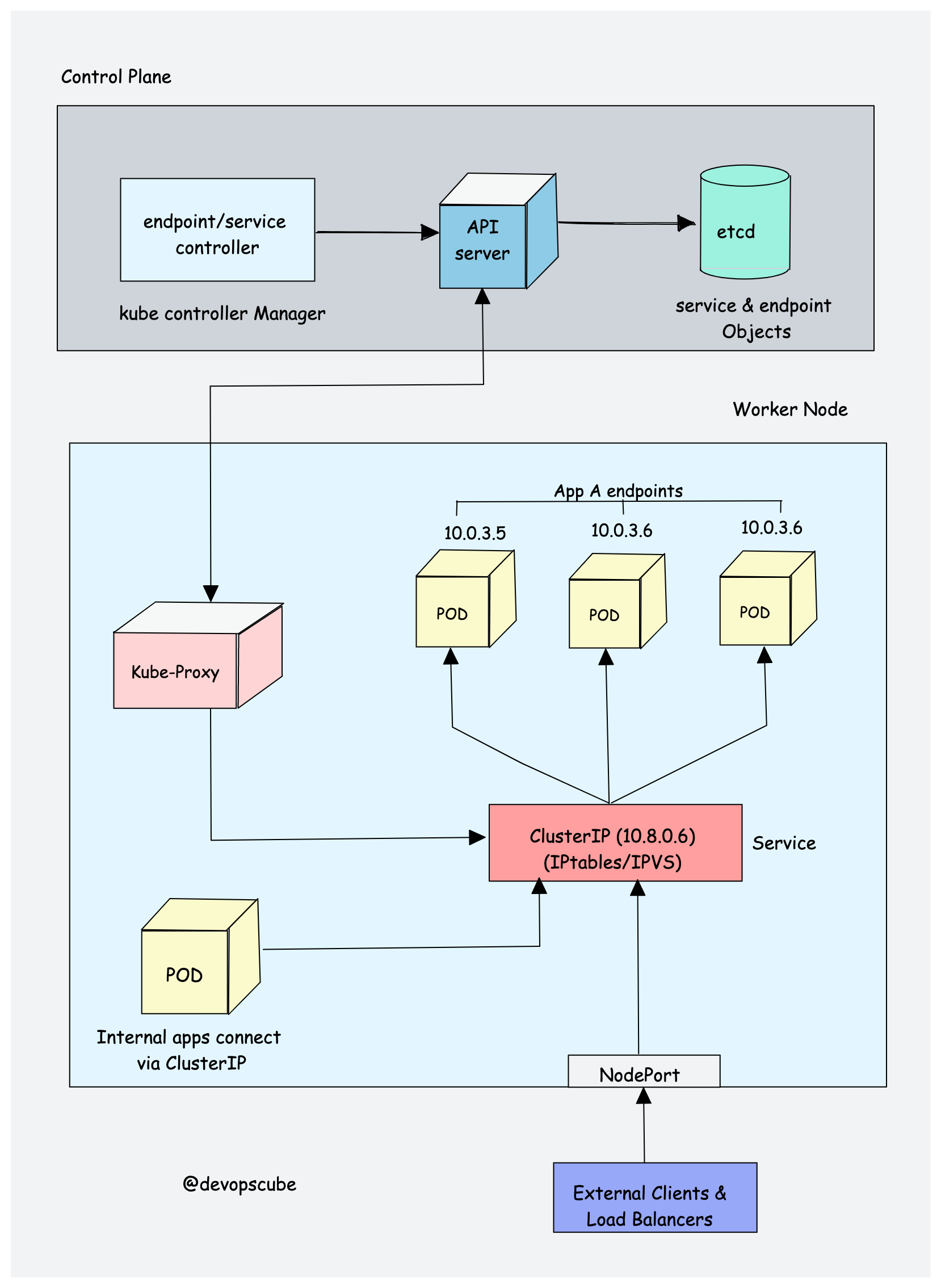

Networking That Actually Works (Sometimes)

Every pod gets its own IP from the CNI plugin. Services provide stable endpoints so your apps can find each other without hardcoded IPs. It's elegant in theory, a debugging nightmare in practice.
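A rough Python sketch of why the stable endpoint matters: the name stays put while the pod IPs behind it churn. The `<service>.<namespace>.svc.cluster.local` pattern is the standard in-cluster DNS form with the default cluster domain; `ServiceRegistry`, the `shop` namespace, and the IPs are hypothetical, and the sketch skips the ClusterIP indirection a real Service adds.

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster DNS name for a Service (default domain assumed)."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


class ServiceRegistry:
    """Hypothetical toy model: a stable name mapped to a changing set of pod IPs."""

    def __init__(self):
        self._endpoints = {}

    def set_endpoints(self, dns_name: str, pod_ips: list) -> None:
        self._endpoints[dns_name] = list(pod_ips)

    def resolve(self, dns_name: str) -> list:
        return list(self._endpoints.get(dns_name, []))


registry = ServiceRegistry()
name = service_dns_name("my-app", namespace="shop")

registry.set_endpoints(name, ["10.244.1.7", "10.244.2.3"])
before = registry.resolve(name)

# Pods restart and come back with new IPs; callers keep using the same name.
registry.set_endpoints(name, ["10.244.1.9", "10.244.3.4"])
after = registry.resolve(name)

print(name)
print(before, "->", after)
```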

The Current State of Kubernetes (August 2025)

Current Version: Kubernetes v1.33.4 (released August 12, 2025). Version 1.34 drops August 27 - pin your versions now before the inevitable breaking changes.

Market Reality: 82% of enterprises plan to use cloud native as their primary platform within 5 years, and 58% are already running mission-critical applications in containers. About half actually know what they're doing. The other half are running one app on a 20-node cluster because "cloud native" sounds good in meetings and looks impressive on quarterly reports.

Version Support: Kubernetes supports the latest 3 minor versions. Translation: you have about 12 months before your cluster becomes a security liability and every vendor stops returning your calls.
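The "latest 3 minor versions" rule is simple enough to check in code. A small Python sketch, assuming version strings shaped like `v1.33.4` or `1.33`; the function names `supported_minors` and `is_supported` are made up for this example.

```python
def supported_minors(latest: str, window: int = 3) -> list:
    """List the minor versions inside the support window, newest first."""
    major, minor = (int(part) for part in latest.lstrip("v").split(".")[:2])
    return [f"{major}.{m}" for m in range(minor, max(minor - window, -1), -1)]


def is_supported(cluster_version: str, latest: str) -> bool:
    """True if the cluster's minor version is still inside the window."""
    major, minor = cluster_version.lstrip("v").split(".")[:2]
    return f"{major}.{minor}" in supported_minors(latest)


window = supported_minors("v1.33.4")
print(window)
print(is_supported("v1.30.2", "v1.33.4"))
print(is_supported("v1.31.0", "v1.33.4"))
```

Run it against whatever your cluster actually reports and you know how close you are to the vendors not returning your calls.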

Breaking Changes That Fucked Everyone:

- v1.24: dockershim (the built-in Docker runtime support) removed - broke our entire CI pipeline and nobody warned us

- v1.25: Pod Security Policies removed after being deprecated since v1.21 - security configs went poof

- v1.25: batch/v1beta1 CronJob API removed - batch jobs still on the old apiVersion started failing silently

- v1.31: Remaining in-tree cloud provider code and the in-tree CephFS and Ceph RBD volume plugins removed - persistent volumes got weird
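Most of this pain is an apiVersion that quietly stopped being served, so a pre-upgrade check is worth the ten minutes. Below is a minimal Python sketch, assuming manifests are already parsed into dicts. `REMOVED_APIS` lists only three removals and is nowhere near complete, and `removed_in_target` plus the manifest names are hypothetical.

```python
# (apiVersion, kind) -> first Kubernetes (major, minor) that no longer serves it.
# Deliberately tiny; the upstream deprecation guide has the full list.
REMOVED_APIS = {
    ("batch/v1beta1", "CronJob"): (1, 25),
    ("policy/v1beta1", "PodSecurityPolicy"): (1, 25),
    ("autoscaling/v2beta2", "HorizontalPodAutoscaler"): (1, 26),
}


def removed_in_target(manifests: list, target: tuple) -> list:
    """Return (name, apiVersion, kind) for manifests whose API is gone at target."""
    problems = []
    for manifest in manifests:
        key = (manifest.get("apiVersion"), manifest.get("kind"))
        removed_at = REMOVED_APIS.get(key)
        if removed_at is not None and target >= removed_at:
            problems.append((manifest["metadata"]["name"], *key))
    return problems


manifests = [
    {"apiVersion": "batch/v1beta1", "kind": "CronJob",
     "metadata": {"name": "nightly-report"}},
    {"apiVersion": "batch/v1", "kind": "CronJob",
     "metadata": {"name": "hourly-sync"}},
    {"apiVersion": "apps/v1", "kind": "Deployment",
     "metadata": {"name": "my-app"}},
]

for problem in removed_in_target(manifests, target=(1, 25)):
    print("will break:", problem)
```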

What This Means For You:

- Financial Reality: $2.57 billion market means lots of consulting fees

- Technical Reality: 88% container adoption means your resume better mention K8s

- Operational Reality: You'll spend more time managing Kubernetes than your actual applications

Who Actually Uses This Thing (And Why They Regret It)

The Success Stories: Netflix, Spotify, Airbnb, Uber, and Pinterest all run massive Kubernetes deployments. They also have teams of 50+ platform engineers to keep it running.

The Reality Check: Most companies have 2-3 developers trying to run Kubernetes with a Stack Overflow tab permanently open.

Industry Horror Stories:

- E-commerce: Black Friday traffic spike? Your pods are still starting up while customers abandon their carts

- Financial Services: Spent 6 months on compliance only to discover Pod Security Standards changed everything

- Startups: Burned through Series A funding on AWS EKS costs for a single web app that gets 100 visitors/day

- Healthcare: HIPAA audit found your secrets stored in plaintext because nobody read the docs

- Gaming: Auto-scaling worked great until players figured out how to DDoS your cluster by creating accounts
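The plaintext-secrets story in the list above is easy to reproduce, because values in a Secret's `data` field are base64-encoded, not encrypted. A short Python sketch of how little that protects; the helper names and the password are made up for illustration.

```python
import base64


def encode_secret_value(plaintext: str) -> str:
    """Encode a value the way it appears under `data:` in a Secret manifest."""
    return base64.b64encode(plaintext.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Reverse the encoding; no key or password is involved."""
    return base64.b64decode(encoded).decode("utf-8")


stored = encode_secret_value("hunter2")
recovered = decode_secret_value(stored)

print(stored)      # looks scrambled
print(recovered)   # anyone who can read the Secret can do this
```

Anyone with read access to the Secret, or to an unencrypted etcd backup, gets the original value back with one function call, which is roughly what the auditor did.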

The Honest Assessment: Kubernetes solves problems you didn't know you had, and creates problems you never imagined. But once you're in, you're stuck - because "we already invested so much in this platform."

K8s 1.24 broke our entire CI pipeline because they removed dockershim and nobody told our Jenkins agents. We spent a weekend migrating to containerd while the CTO asked why our deployments were "temporarily disabled." That migration included updating every Jenkins agent, rewriting build scripts, and explaining to management why a "critical update" nobody had planned for was taking down our entire delivery pipeline.

The version churn is real - you'll upgrade every 6 months or get left behind with security vulnerabilities that make pentesters drool. Each upgrade brings breaking changes disguised as "improvements," and the documentation assumes you've memorized every GitHub issue from the past 2 years.

Now that you understand why K8s exists and who's actually using it, here's how this beautiful disaster actually works under the hood.