ArgoCD solves a specific problem: your production Kubernetes clusters slowly drifting away from what you think they should look like. Someone runs kubectl apply for a hotfix, a deployment fails halfway through, or a ConfigMap gets manually edited - suddenly your "identical" staging and prod environments aren't identical anymore.

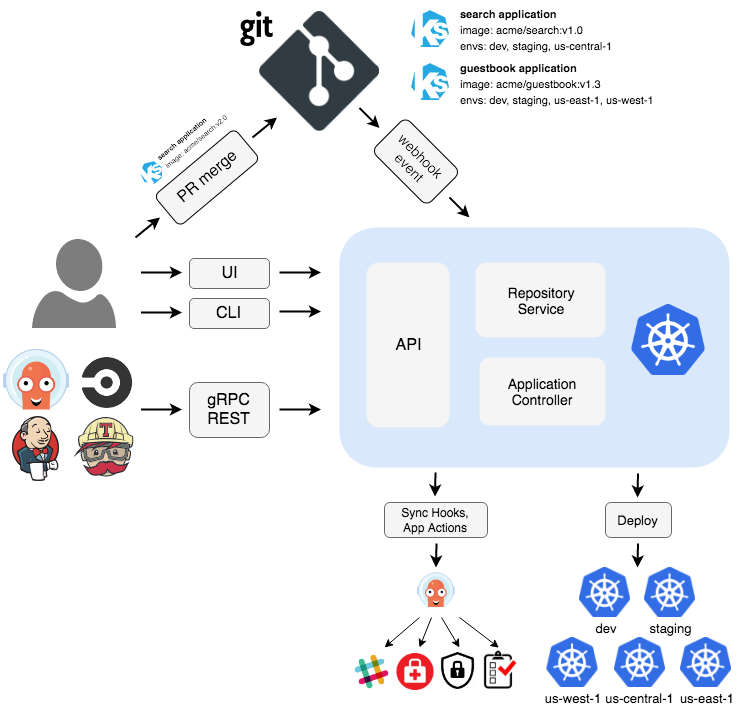

GitOps changes this by making Git your single source of truth. ArgoCD is a Kubernetes controller that watches your Git repos and syncs changes to your clusters. It was originally built at Intuit and is now a CNCF graduated project. Think of it as a robot that never gets tired of checking if your cluster matches Git.

The big win is visibility. ArgoCD's web UI shows you exactly what's deployed, what's out of sync, and what broke during the last deployment. Instead of running kubectl get pods and hoping for the best, you get a visual dashboard that actually tells you what's happening across all your applications.

The ArgoCD dashboard shows each application as a tree of Kubernetes resources - you can see pods, services, deployments, and their relationships at a glance. When something's broken, the UI highlights it in red and shows you exactly what failed.

How ArgoCD Actually Works

Traditional CI/CD tools push changes to your cluster - Jenkins runs kubectl apply, CircleCI hits the Kubernetes API, whatever. ArgoCD flips this around using a "pull" model. It runs inside your cluster and continuously polls Git repositories (roughly every 3 minutes by default, configurable through the timeout.reconciliation setting in the argocd-cm ConfigMap).

When ArgoCD finds changes in Git, it renders your manifests (Helm charts, Kustomize, plain YAML) and compares them with what's actually running in the cluster. If there's a difference, it syncs automatically (if you enable auto-sync) or waits for you to click the sync button.
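To make the render-and-compare step concrete, here is a toy sketch in Python. It is not ArgoCD's actual diffing code: it assumes desired and live state are plain dictionaries keyed by a made-up "Kind/name" string, and the function name compute_sync_status is hypothetical. Only the status names Synced and OutOfSync are borrowed from what ArgoCD displays.

```python
from typing import Any

Manifest = dict[str, Any]


def compute_sync_status(
    desired: dict[str, Manifest], live: dict[str, Manifest]
) -> tuple[str, list[str]]:
    """Compare rendered manifests (desired) with cluster state (live).

    Returns a status string and a sorted list of resource keys that differ.
    """
    drifted = []
    for key in desired.keys() | live.keys():
        # A resource counts as drifted if it is missing on either side
        # or its content differs. (Simplification: the real tool only
        # considers resources it tracks and ignores some fields.)
        if desired.get(key) != live.get(key):
            drifted.append(key)
    status = "Synced" if not drifted else "OutOfSync"
    return status, sorted(drifted)


# Hypothetical state: what Git says versus what the cluster is running.
desired = {
    "Deployment/web": {"replicas": 3, "image": "web:1.4.2"},
    "ConfigMap/web-config": {"LOG_LEVEL": "info"},
}
live = {
    "Deployment/web": {"replicas": 5, "image": "web:1.4.2"},  # scaled by hand
    "ConfigMap/web-config": {"LOG_LEVEL": "info"},
}

status, drifted = compute_sync_status(desired, live)
print(status, drifted)
```

In this sketch the hand-scaled Deployment is the only resource reported as drifted. Whether anything then gets changed in the cluster is a separate decision, which is what the sync policy controls.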

This means your cluster can't drift without ArgoCD noticing. Someone manually edits a Deployment? ArgoCD sees the drift and can either fix it automatically (self-heal) or alert you. Your Git history becomes your deployment audit trail - no more "who changed the replica count" mysteries.
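Auto-sync and self-heal are both switched on per application, under syncPolicy.automated in the Application resource. The sketch below builds such a manifest as a Python dict and prints it as JSON. The field names follow the Application spec, but the helper build_application, the app name, the repo URL, and the path are placeholders I made up for illustration.

```python
import json


def build_application(name: str, repo_url: str, path: str, self_heal: bool) -> dict:
    """Build an Argo CD Application manifest as a plain dict."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,       # placeholder repository
                "targetRevision": "HEAD",
                "path": path,              # directory holding the manifests
            },
            "destination": {
                # In-cluster API server address; namespace reuses the app name.
                "server": "https://kubernetes.default.svc",
                "namespace": name,
            },
            "syncPolicy": {
                "automated": {
                    "prune": True,          # delete resources removed from Git
                    "selfHeal": self_heal,  # revert manual changes in the cluster
                }
            },
        },
    }


app = build_application(
    "web", "https://example.com/org/deploy-config.git", "apps/web", self_heal=True
)
print(json.dumps(app, indent=2))
```

Leaving out the automated block entirely gives you the "wait for someone to click sync" behavior. Setting selfHeal to False keeps auto-sync on Git changes but leaves manual cluster edits alone, so they show up as OutOfSync instead of being reverted.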

Traditional CI/CD pushes changes to clusters from external systems (push model), while in GitOps an agent running inside the cluster pulls changes from Git repositories (pull model). Because no external system needs cluster credentials and every change goes through Git, this architectural difference makes GitOps more secure and auditable.

The Reality of Using ArgoCD in Production

ArgoCD v3.1 was released in June 2025 with OCI registry support (still beta - I wouldn't use it in prod yet). Multi-source applications, which first showed up back in the 2.x line, actually solve a real problem if you're splitting app code from environment configs across different Git repos.
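For the split-repo case, a multi-source Application lists its repos under spec.sources instead of a single spec.source. One source carries a ref name, and the Helm source pulls value files from it through a $name/ prefix. Here is a rough sketch, again as a Python dict. The helper name, both repo URLs, and the paths are invented for the example.

```python
import json


def build_multi_source_app(name: str, chart_repo: str, config_repo: str, env: str) -> dict:
    """Build a multi-source Application: chart from one repo, values from another."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": f"{name}-{env}", "namespace": "argocd"},
        "spec": {
            "project": "default",
            "sources": [
                {
                    # App repo: holds the Helm chart next to the code.
                    "repoURL": chart_repo,
                    "targetRevision": "HEAD",
                    "path": "chart",
                    "helm": {
                        # "$values" resolves to the source below with ref "values".
                        "valueFiles": [f"$values/envs/{env}/values.yaml"],
                    },
                },
                {
                    # Config repo: holds per-environment value files only.
                    "repoURL": config_repo,
                    "targetRevision": "HEAD",
                    "ref": "values",
                },
            ],
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": name,
            },
        },
    }


multi = build_multi_source_app(
    "web",
    "https://example.com/org/web-app.git",
    "https://example.com/org/env-config.git",
    "prod",
)
print(json.dumps(multi, indent=2))
```

The point of the layout is that a change to envs/prod/values.yaml in the config repo triggers a sync without touching the app repo, and the other way around.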

According to the latest CNCF survey, 97% of ArgoCD users run it in production, which makes sense since it's stable and battle-tested. It manages clusters at companies like Intuit, Red Hat, and thousands of others according to adoption tracking.

But here's what the adoption stats don't tell you: ArgoCD's learning curve is steeper than it looks. The basic concepts are simple - Git as source of truth, pull-based deployments - but the devil's in the details. Application health checks fail silently, sync policies are confusing until you get burned by them, and the UI gets sluggish with hundreds of applications.

I've been running ArgoCD for 2 years across multiple clusters and I still occasionally discover gotchas. The resource hooks are powerful but they fail without clear error messages. RBAC configuration is a pain if you're not already a Kubernetes RBAC expert. Multi-cluster setup works great until you hit networking edge cases.

The big benefit though? When something breaks in production, ArgoCD's web UI immediately shows you what's different from Git. Instead of debugging with kubectl, you get a visual diff of what went wrong. That alone makes it worth the learning curve for most teams managing more than a handful of applications.

ArgoCD consists of several key components: the API Server (handles UI and CLI), Application Controller (compares live cluster state with the desired state from Git and manages sync), Repository Server (clones repos and renders manifests), and Redis (caches rendered manifests and app state for performance).