When shit breaks in production, you don't have time to read documentation. Here's the exact 5-step process I use to debug any Istio issue, whether it's traffic routing, security policies, or resource problems.

Step 1: Verify Istio Health (30 seconds)

First, check if the control plane is actually working:

```shell
# Quick health check: every proxy should report SYNCED
istioctl proxy-status

# Control plane pods: anything not Running and Ready is your problem
kubectl get pods -n istio-system
```

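If `istioctl proxy-status` shows a sidecar as STALE, istiod pushed config that the proxy never acknowledged. This usually points to networking between the proxy and istiod, or to resource exhaustion. NOT SENT on its own usually just means istiod had nothing to send.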

Red flag: If istiod pods are crashlooping or using a lot of memory (more than 4 GB), the control plane is undersized for your cluster. Raise istiod's resource limits or add replicas, or you'll keep having problems.
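If you want to script the first check, here's a minimal sketch. It assumes the proxy name is the first column of `istioctl proxy-status` output and that an unsynced column contains the literal word STALE. Column layout changes between Istio releases, so treat the parsing as an assumption. `stale_proxies` is a hypothetical helper, not part of istioctl.

```shell
# Hypothetical helper: print the name of every proxy whose status row contains STALE.
# Assumes the first row is a header and the first column is the proxy name.
stale_proxies() {
  awk 'NR > 1 && /STALE/ { print $1 }'
}

# Usage against a live cluster (not run here):
#   istioctl proxy-status | stale_proxies
```

An empty result means nothing is STALE; it does not prove istiod itself is healthy, so still look at the pods.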

Step 2: Check Your Configuration (90 seconds)

Most Istio problems are configuration fuckups. Run the analyzer on the specific namespace where you're seeing issues:

```shell
# Analyze configuration - shows YAML errors before they kill traffic
istioctl analyze -n production

# Check specific resources that commonly break
kubectl get virtualservices,destinationrules,peerauthentications -n production
```

The analyzer catches obvious errors like:

- VirtualService routes that don't match any services

- DestinationRules with non-existent subsets

- mTLS policy conflicts between namespaces

- Missing ServiceEntry resources for external services

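Pro tip: If you see `IST0101` (a referenced resource such as a host, gateway, or subset doesn't exist), fix it immediately, because any routing that depends on it is broken. `IST0102` means the namespace isn't enabled for sidecar injection, so its pods may be running without a proxy at all.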
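When the analyzer output is long, a filter helps you see the blocking findings first. This is a rough sketch that assumes the default log-style output, where each finding is one line starting with a severity and a bracketed code, such as `Error [IST0101] (...)`. `analyzer_errors` is a hypothetical helper name.

```shell
# Hypothetical helper: keep only Error-level findings from analyzer output.
# Assumes each finding is one line shaped like: Error [IST0101] (Kind name) message
analyzer_errors() {
  grep -E '^Error \[IST[0-9]+\]' || true   # "|| true" keeps a clean run from failing the pipeline
}

# Usage against a live cluster (not run here):
#   istioctl analyze -n production 2>&1 | analyzer_errors
```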

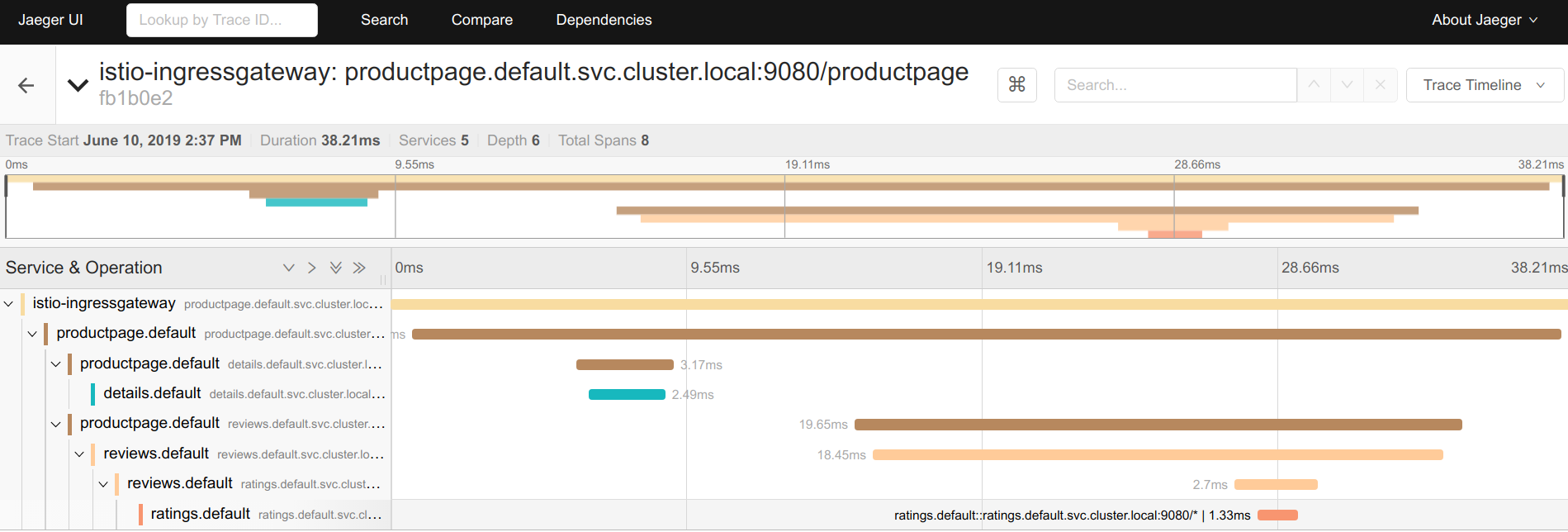

Step 3: Trace the Traffic Path (2 minutes)

Now verify that traffic is actually reaching the sidecars and following your routing rules:

```shell
# Pick a pod that's having issues
POD_NAME=$(kubectl get pods -n production -l app=your-broken-service -o jsonpath='{.items[0].metadata.name}')

# Check if Envoy is receiving the right config
istioctl proxy-config cluster "$POD_NAME" -n production | grep your-target-service
istioctl proxy-config routes "$POD_NAME" -n production --name 8080
```

If `proxy-config cluster` doesn't show your target service, the sidecar doesn't know it exists. If `proxy-config routes` shows no routes or wrong routes, your VirtualService is broken.

Common gotcha: URI matches are case-sensitive, and an `exact` match really means exact. A rule for `/api/v1/users` won't match `/api/v1/Users` or `/api/v1/users/`.
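To make the matching rules concrete, here's an illustration using plain string comparisons in shell. It is not Envoy's implementation, only the same comparison semantics; `exact_match` and `prefix_match` are hypothetical names.

```shell
# Illustration only: string comparisons that mimic exact and prefix URI matching.
# $1 is the request path, $2 is the value from the routing rule.
exact_match()  { [ "$1" = "$2" ]; }
prefix_match() { case "$1" in "$2"*) return 0 ;; *) return 1 ;; esac; }

for path in /api/v1/users /api/v1/Users /api/v1/users/; do
  if exact_match "$path" /api/v1/users; then verdict="match"; else verdict="no match"; fi
  echo "exact /api/v1/users vs $path: $verdict"
done
```

Only the first path matches. Swapping in `prefix_match` picks up the trailing-slash variant but still rejects the capitalized one. If you need case-insensitive matching, the `ignoreUriCase` field on the VirtualService HTTP match is worth checking for your Istio version.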

Step 4: Check Sidecar Logs (1 minute)

When configuration looks correct but traffic still fails, check what Envoy is actually doing:

```shell
# Get sidecar logs - look for errors, not info messages
kubectl logs "$POD_NAME" -n production -c istio-proxy --tail=50

# Enable debug logging if you need more detail (warning: verbose)
istioctl proxy-config log "$POD_NAME" -n production --level debug
```

Key log patterns to watch for:

- `upstream connect error`: Can't reach target service (networking issue)

- `no healthy upstream`: Circuit breaker tripped or all endpoints down

- `stream closed`: Usually a certificate or mTLS problem

- `no route matched`: VirtualService routing rules don't match the request
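Scanning 200 log lines by eye under pressure is error-prone, so here's a small sketch that tags each line with the likely cause from the patterns above. `classify_envoy_log` and its labels are hypothetical, and real Envoy messages may wrap these phrases in extra detail, which is why the match is a substring match.

```shell
# Hypothetical helper: tag sidecar log lines with the likely cause.
# Lines that match none of the known patterns are dropped.
classify_envoy_log() {
  while IFS= read -r line; do
    case "$line" in
      *"upstream connect error"*) echo "NETWORK: $line" ;;
      *"no healthy upstream"*)    echo "ENDPOINTS: $line" ;;
      *"stream closed"*)          echo "MTLS: $line" ;;
      *"no route matched"*)       echo "ROUTING: $line" ;;
    esac
  done
}

# Usage against a live cluster (not run here):
#   kubectl logs "$POD_NAME" -c istio-proxy --tail=200 | classify_envoy_log
```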

Step 5: Test mTLS and Certificates (30 seconds)

Certificate issues are silent killers - traffic works until certs expire, then everything breaks:

```shell
# Check certificate validity
istioctl authn tls-check $POD_NAME your-target-service.production.svc.cluster.local

# If certificates are broken, check root CA status
kubectl get configmap istio-ca-root-cert -n istio-system -o yaml
```

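`tls-check` should report a status of OK, meaning the client and server TLS settings agree. If you see PERMISSIVE when you expect STRICT, your mTLS policies aren't working. Note that `istioctl authn tls-check` was removed in Istio 1.5. On newer releases, `istioctl proxy-config secret` shows the workload certificate and its validity, and `istioctl x describe pod` reports the mTLS mode in effect.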
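Since expiry is the failure that sneaks up on you, it helps to check it directly. The sketch below wraps `openssl x509 -checkend`; the `cert_expires_within` name is hypothetical, and the commented pipeline assumes `jq` is installed and that the JSON layout of `istioctl proxy-config secret` matches your Istio release.

```shell
# Hypothetical helper: succeed if the PEM certificate in file $1 expires within $2 seconds.
# Returns 2 if the file cannot be parsed as a certificate.
cert_expires_within() {
  openssl x509 -in "$1" -noout >/dev/null 2>&1 || { echo "cannot parse $1" >&2; return 2; }
  # -checkend exits non-zero when the certificate will have expired by then
  ! openssl x509 -in "$1" -noout -checkend "$2" >/dev/null 2>&1
}

# Usage sketch against a live cluster (not run here):
#   istioctl proxy-config secret "$POD_NAME" -o json \
#     | jq -r '.dynamicActiveSecrets[0].secret.tlsCertificate.certificateChain.inlineBytes' \
#     | base64 -d > /tmp/workload-cert.pem
#   cert_expires_within /tmp/workload-cert.pem 3600 && echo "cert expires within the hour"
```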

Emergency fix: If certificates are expired and you can't rotate them quickly, temporarily switch to PERMISSIVE mode:

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: emergency-permissive
  namespace: production
spec:
  mtls:
    mode: PERMISSIVE
```
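Because this is a temporary downgrade, it's worth having both the apply and the revert ready before you touch anything. A minimal sketch, assuming the same resource name and a namespace passed as an argument; `permissive_manifest` is a hypothetical helper.

```shell
# Hypothetical helper: print the emergency PeerAuthentication for namespace $1.
permissive_manifest() {
  cat <<EOF
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: emergency-permissive
  namespace: $1
spec:
  mtls:
    mode: PERMISSIVE
EOF
}

# Apply during the incident (not run here):
#   permissive_manifest production | kubectl apply -f -
# Revert once certificates are rotated:
#   kubectl delete peerauthentication emergency-permissive -n production
```

Keeping the policy under its own name means the revert is a single delete and your existing STRICT policy is never edited.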

When This Workflow Doesn't Work

If following these 5 steps doesn't identify the problem, you're dealing with one of the nasty edge cases:

- Ambient mode issues: Different debugging tools and failure modes

- Multi-cluster problems: Cross-cluster certificate or DNS issues

- Resource exhaustion: Out of file descriptors, memory, or CPU

- Network policy conflicts: CNI or firewall rules blocking sidecar traffic

- Version incompatibilities: Mixed Istio versions or k8s API changes

These require specialized debugging techniques covered in the advanced troubleshooting section below.