If you've ever built an AI agent that worked perfectly in demos but crashed the moment real users touched it, you already understand why LangGraph exists. Most AI agents are basically fancy if/then chains that work great until they don't. I've built plenty of these, and they all shit the bed in production the moment a user does something unexpected.

LangGraph fixes this fundamental problem by letting agents think in graphs instead of straight lines. Instead of rigid step-by-step execution, your agents can adapt, backtrack, and handle the chaos that real users inevitably create. This isn't just a nice-to-have feature - it's what separates toys from production-ready systems.

Companies like Elastic, Replit, and Norwegian Cruise Line are using it in production, and having worked with similar setups, I can tell you why.

Why Linear Chains Suck in Production

Picture this: you build a customer service bot that works like step 1 → step 2 → step 3. Looks great in testing. Then a customer asks something that requires step 2.5, or wants to go back to step 1 after step 3, and your agent has a mental breakdown. I've debugged this shit at 3am more times than I care to count.

Linear chains fail because:

- They can't adapt when intermediate results change the plan

- No memory of what happened before (every interaction starts fresh)

- When something breaks, the whole chain crashes - no recovery

- Getting human approval in the middle? Good fucking luck

- Multiple agents working together? Forget about it

What LangGraph Actually Does

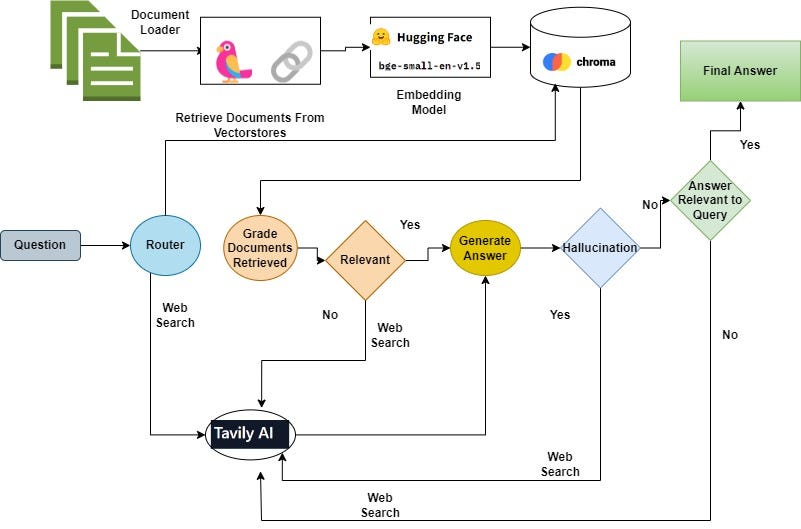

LangGraph uses graphs instead of chains, which sounds fancy but basically means your workflow is a set of nodes (plain functions) connected by edges that can branch and loop back, so your agent can backtrack like a human. Instead of "do A, then B, then C" it's "do A, check the result, maybe do B or skip to D, oh shit that failed so go back to A but with different params."

State Management: Your agent remembers shit. Instead of every step starting from scratch, LangGraph passes a shared state object through the graph: each node reads it and returns updates, and with a checkpointer attached that state persists across the whole conversation. Finally.

Conditional Routing: The agent can change direction based on what actually happened, not what you hoped would happen. Customer angry? Route to human. API call failed? Try the backup. Simple concept that took forever to implement properly.

Checkpointing: This is the killer feature - compile the graph with a checkpointer and it saves state automatically after every step. When your agent breaks (and it will), you can rewind and see exactly where it went wrong. Saved me countless hours of debugging.

Where This Actually Helps

LangGraph shines when you need agents that don't follow a script. Companies using it in production aren't building simple chatbots:

Research Assistants: Multi-step research that adapts based on what it finds. Search → analyze → decide if more research needed → synthesize → get human approval. Try doing that with a linear chain.

Code Generation: Write code → test → debug → iterate. The key word is "iterate" - a linear chain can't loop back and fix its own mistakes.

Customer Support: Start with AI → escalate to specialist → maybe back to AI → human approval. Real customer service isn't linear.

Document Processing: Parse → validate → route based on content type → maybe flag for review. Again, the routing is conditional, not predetermined.

Budget a full week to stop thinking in linear chains and start thinking in graphs. Your brain needs to rewire from "do A then B then C" to nodes, edges, and loops that can send execution backwards. The documentation makes it sound easy, but unlearning 20 years of procedural thinking takes time.

Production Gotchas I Learned the Hard Way

Memory usage explodes faster than a poorly written React app - our user documents averaged 2MB each, and we were brilliant enough to store 50+ docs in state. That's 100MB+ per workflow, which sounds fine until you have 20 concurrent workflows holding 2GB+ of raw documents and suddenly your 4GB containers are swap-thrashing themselves to death. We crashed production twice before someone suggested "maybe store just the document IDs, dipshit."
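The fix from that story, as a framework-free sketch in plain Python: keep document IDs in state and fetch bodies inside the node that needs them, returning only small results. `DOC_STORE`, `load_document` and `summarize_docs` are hypothetical, and document sizes are scaled down 100× (20KB instead of 2MB) so it runs quickly; the JSON length is just a rough proxy for how much each state shape weighs when serialized.

```python
import json
from typing import TypedDict

DOC_SIZE = 20_000  # scaled down from ~2MB per document
# Hypothetical document store; in production this would be S3, a database, etc.
DOC_STORE: dict[str, str] = {f"doc-{i}": "x" * DOC_SIZE for i in range(50)}


class HeavyState(TypedDict):
    documents: list[str]  # full bodies: all of this is serialized on every checkpoint


class LeanState(TypedDict):
    doc_ids: list[str]  # references only; bodies are fetched inside the node
    summaries: list[str]


def load_document(doc_id: str) -> str:
    """Fetch one body on demand, so it never lands in graph state."""
    return DOC_STORE[doc_id]


def summarize_docs(state: LeanState) -> dict:
    # Load, use and drop each document inside the node; return only small results.
    summaries = [f"{doc_id}: {len(load_document(doc_id))} chars" for doc_id in state["doc_ids"]]
    return {"summaries": summaries}


heavy: HeavyState = {"documents": list(DOC_STORE.values())}
lean: LeanState = {"doc_ids": list(DOC_STORE), "summaries": []}
lean.update(summarize_docs(lean))

heavy_bytes = len(json.dumps(heavy))
lean_bytes = len(json.dumps(lean))
print(f"heavy state ~{heavy_bytes / 1e6:.1f} MB, lean state ~{lean_bytes / 1e3:.1f} KB")
```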

Error messages are about as helpful as a chocolate teapot - you'll get "Node execution failed" and have to dig through 47 lines of stack trace to find the actual problem. In our case the error happened 6 nodes deep, in a conditional branch that only triggers when the API response contains emoji. Add extensive logging or you'll be debugging in production at 2 AM with a flashlight.
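A small, framework-agnostic sketch of that logging: LangGraph nodes are plain functions, so a decorator like the hypothetical `logged_node` below can wrap each one and log the node name and state keys on entry, exit and failure (with the full traceback). `parse_response` and its emoji bug are made up to mirror the story; you'd register the wrapped function with `add_node` as usual.

```python
import functools
import logging
from typing import Callable

logger = logging.getLogger("graph.nodes")


def logged_node(name: str) -> Callable:
    """Wrap a node so failures report which node ran and what state it saw."""
    def decorator(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        @functools.wraps(fn)
        def wrapper(state: dict) -> dict:
            logger.info("enter %s keys=%s", name, sorted(state))
            try:
                update = fn(state)
            except Exception:
                # logger.exception records the traceback along with our context.
                logger.exception("node %s failed; state keys=%s", name, sorted(state))
                raise
            logger.info("exit %s updated=%s", name, sorted(update or {}))
            return update
        return wrapper
    return decorator


@logged_node("parse_response")
def parse_response(state: dict) -> dict:
    # The 2 AM bug: blows up only when the payload contains an emoji.
    return {"text": state["api_response"].encode("ascii").decode()}


logging.basicConfig(level=logging.INFO)
print(parse_response({"api_response": "ok"}))
try:
    parse_response({"api_response": "ok \U0001F44D"})
except UnicodeEncodeError as exc:
    caught = exc  # the log line names the node and the state keys it saw
```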

The checkpointing DB chokes way sooner than you think - we started getting connection refused errors at 87 concurrent workflows because each one holds a database connection during execution. Our DBA was not amused when we casually mentioned we might need "a few hundred" connections. Turns out LangGraph checkpointing is chattier than a junior dev on their first PR review.
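The general fix is to stop giving every workflow its own connection. LangGraph's Postgres checkpointer can be handed a `psycopg_pool` connection pool instead of a single connection; the stdlib sketch below shows the same idea without a database. A bounded semaphore sized to your connection budget makes extra workflows wait their turn instead of opening connection #88 and getting refused. `MAX_DB_CONNECTIONS` and `run_workflow` are hypothetical, and `time.sleep` stands in for checkpoint reads and writes.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_DB_CONNECTIONS = 10  # hypothetical budget agreed with your DBA

db_slots = threading.BoundedSemaphore(MAX_DB_CONNECTIONS)
_lock = threading.Lock()
in_use = 0
peak = 0


def run_workflow(workflow_id: int) -> str:
    """Hypothetical workflow that only touches the DB while holding a slot."""
    global in_use, peak
    with db_slots:  # blocks until a slot frees up instead of failing
        with _lock:
            in_use += 1
            peak = max(peak, in_use)
        try:
            time.sleep(0.01)  # stand-in for checkpoint reads/writes
            return f"workflow {workflow_id} done"
        finally:
            with _lock:
                in_use -= 1


# 200 workflows submitted at once, but never more than 10 use the DB together.
with ThreadPoolExecutor(max_workers=100) as pool:
    results = list(pool.map(run_workflow, range(200)))

print(len(results), "finished; peak concurrent DB users:", peak)
```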

State serialization will fuck you in mysterious ways - I spent 4 hours debugging random workflow failures that happened maybe 30% of the time. Turned out some genius (me) had left a database connection object in the state dict. The error message was no better: "Object of type 'Connection' is not JSON serializable." It named the type, but not the state key, the node, or the step. Thanks, Python. Really narrowed it down.
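A cheap guard against this, sketched in plain Python: before a state dict goes anywhere near a checkpointer, try encoding each top-level value and report the offending keys. `find_unserializable` is a hypothetical helper; plain `json` is stricter than LangGraph's own checkpoint serializer (which handles extra types), so treat it as a conservative check. The real fix is keeping things like connections out of state entirely.

```python
import json
import sqlite3
from typing import Any


def find_unserializable(state: dict[str, Any]) -> list[str]:
    """Return the top-level state keys whose values json can't encode."""
    bad = []
    for key, value in state.items():
        try:
            json.dumps(value)
        except (TypeError, ValueError):  # unsupported type or circular reference
            bad.append(key)
    return bad


conn = sqlite3.connect(":memory:")  # the kind of object that sneaks into state
state = {"user_id": 7, "history": ["hi"], "db": conn}

problems = find_unserializable(state)
print(problems)  # ['db']
conn.close()
```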
