Testing multi-agent systems is a special kind of hell. Nothing works the way you expect, everything times out randomly, and Docker containers develop a personal vendetta against you. I learned this when our test environment worked perfectly but production died from network issues, timing problems, and cascade failures. Here's how to make it suck less.

Local Testing: The Sweet Lies

Local testing gives you false confidence. Everything works perfectly until you deploy to production and watch it burn. But you still need to test locally to catch the obvious bugs.

Create test_basic.py:

## test_basic.py

import pytest

import asyncio

import httpx

import time

## Test individual agents first (they'll all be broken differently)

@pytest.mark.asyncio

async def test_coordinator_doesnt_crash():

"""Test that coordinator starts without dying immediately"""

try:

async with httpx.AsyncClient(timeout=5.0) as client:

response = await client.get("http://localhost:8000/health")

assert response.status_code == 200

assert "healthy" in response.json().get("status", "")

except httpx.ConnectError:

pytest.skip("Coordinator not running. Start it first.")

@pytest.mark.asyncio

async def test_agents_can_register():

"""Test that agents don't crash when registering"""

try:

async with httpx.AsyncClient(timeout=10.0) as client:

response = await client.post(

"http://localhost:8000/register_agent",

json={

"name": "test_agent",

"endpoint": "http://localhost:9999",

"capabilities": ["test"]

}

)

# Any response is good - at least it didn't crash

assert response.status_code in [200, 201, 202]

except Exception as e:

pytest.fail(f"Registration crashed: {e}")

@pytest.mark.asyncio

async def test_simple_task_doesnt_hang():

"""Test that a simple task completes (or fails gracefully)"""

try:

async with httpx.AsyncClient(timeout=30.0) as client:

start_time = time.time()

response = await client.post(

"http://localhost:8000/execute_task",

json={"task_description": "test task", "timeout_seconds": 20}

)

duration = time.time() - start_time

# We don't care if it succeeds, just that it returns something

assert duration < 25.0 # Should not hang forever

assert response.status_code in [200, 400, 500] # Any response is better than timeout

except httpx.TimeoutException:

pytest.fail("Task hung - this is bad")

except Exception as e:

# Other errors are fine, at least it didn't hang

print(f"Task failed but didn't hang: {e}")

Run tests: pytest test_basic.py -v

What actually happens:

- Tests pass locally, fail in CI

- Agents start in wrong order, everything breaks

- Network timeouts everywhere

- Tests are flaky and fail randomly

Integration Testing: Mock Everything

Don't test against real APIs in integration tests. They'll rate-limit you, go down during your demos, and generally ruin your day. Mock everything. I use pytest-mock and responses libraries for HTTP mocking.

## test_integration.py

import pytest

import asyncio

from unittest.mock import patch, AsyncMock

@pytest.mark.asyncio

async def test_end_to_end_workflow_mocked():

"""Test the whole workflow with mocked external calls"""

# Mock all the things that break

with patch('httpx.AsyncClient.post') as mock_post:

# Mock successful agent responses

mock_response = AsyncMock()

mock_response.status_code = 200

mock_response.json.return_value = {

"results": ["mock result 1", "mock result 2"],

"status": "completed"

}

mock_post.return_value = mock_response

# Mock search API that doesn't rate limit

with patch('researcher.search_api') as mock_search:

mock_search.return_value = [{"title": "Mock result", "url": "http://test.com"}]

# Now test the workflow

async with httpx.AsyncClient(timeout=15.0) as client:

response = await client.post(

"http://localhost:8000/execute_task",

json={"task_description": "research AI safety", "timeout_seconds": 10}

)

# Test that mocked system works

assert response.status_code == 200

result = response.json()

assert "status" in result

Load Testing: How to Break Your System

Run multiple tasks simultaneously to find where it breaks:

## load_test.py

import asyncio

import httpx

import time

async def spam_coordinator():

"""Send many requests to find the breaking point"""

async def send_request(task_id):

try:

async with httpx.AsyncClient(timeout=30.0) as client:

start = time.time()

response = await client.post(

"http://localhost:8000/execute_task",

json={"task_description": f"task {task_id}", "timeout_seconds": 15}

)

duration = time.time() - start

return {"id": task_id, "status": response.status_code, "duration": duration}

except Exception as e:

return {"id": task_id, "error": str(e), "duration": 999}

# Start with 5 concurrent requests, increase until system breaks

for num_concurrent in [5, 10, 20]:

print(f"

Testing {num_concurrent} concurrent requests...")

tasks = [send_request(i) for i in range(num_concurrent)]

results = await asyncio.gather(*tasks, return_exceptions=True)

successful = sum(1 for r in results if isinstance(r, dict) and r.get("status") == 200)

avg_duration = sum(r.get("duration", 0) for r in results if isinstance(r, dict)) / len(results)

print(f"Success rate: {successful}/{num_concurrent} ({successful/num_concurrent*100:.1f}%)")

print(f"Average duration: {avg_duration:.2f}s")

if successful < num_concurrent * 0.8: # Less than 80% success

print(f"System breaks at {num_concurrent} concurrent requests")

break

if __name__ == "__main__":

asyncio.run(spam_coordinator())

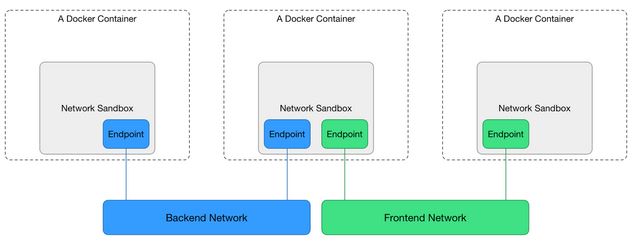

Docker Deployment: Pain and Suffering

Here's a docker-compose.yml that actually works (sometimes):

## docker-compose.yml

version: '3.8'

services:

coordinator:

build: .

command: python coordinator.py --port 8000

ports:

- "8000:8000"

environment:

- PYTHONUNBUFFERED=1 # See logs immediately

- LOG_LEVEL=DEBUG # See everything that breaks

restart: unless-stopped # Auto-restart when it crashes

healthcheck:

test: ["CMD", "curl", "-f", "http://localhost:8000/health"]

interval: 30s

timeout: 10s

retries: 3

start_period: 30s # Give it time to start

depends_on:

- researcher

- analyzer

networks:

- agent-network

researcher:

build: .

command: python researcher.py --port 8001

ports:

- "8001:8001"

environment:

- PYTHONUNBUFFERED=1

- COORDINATOR_URL=http://coordinator:8000

restart: unless-stopped

healthcheck:

test: ["CMD", "curl", "-f", "http://localhost:8001/health"]

interval: 30s

timeout: 10s

retries: 3

networks:

- agent-network

analyzer:

build: .

command: python analyzer.py --port 8002

ports:

- "8002:8002"

environment:

- PYTHONUNBUFFERED=1

restart: unless-stopped

mem_limit: 2g # Prevent memory bombs

networks:

- agent-network

reporter:

build: .

command: python reporter.py --port 8003

ports:

- "8003:8003"

environment:

- PYTHONUNBUFFERED=1

restart: unless-stopped

networks:

- agent-network

networks:

agent-network:

driver: bridge

## Don't forget volumes for persistent data if needed

Dockerfile that doesn't suck:

FROM python:3.9-slim

## Install curl for health checks

RUN apt-get update && apt-get install -y curl && rm -rf /var/lib/apt/lists/*

WORKDIR /app

## Copy requirements first for better caching

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

## Copy source code

COPY *.py .

## Create non-root user (security best practice)

RUN useradd -m appuser && chown -R appuser:appuser /app

USER appuser

## Default command (override in docker-compose)

CMD ["python", "coordinator.py"]

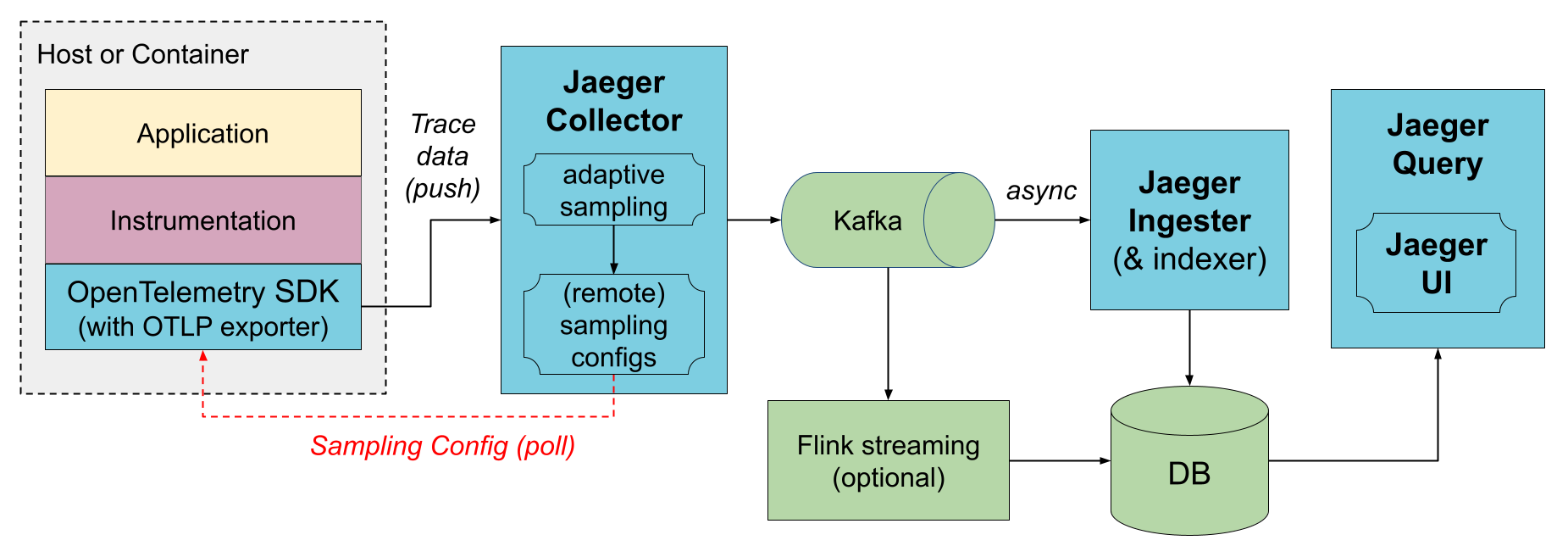

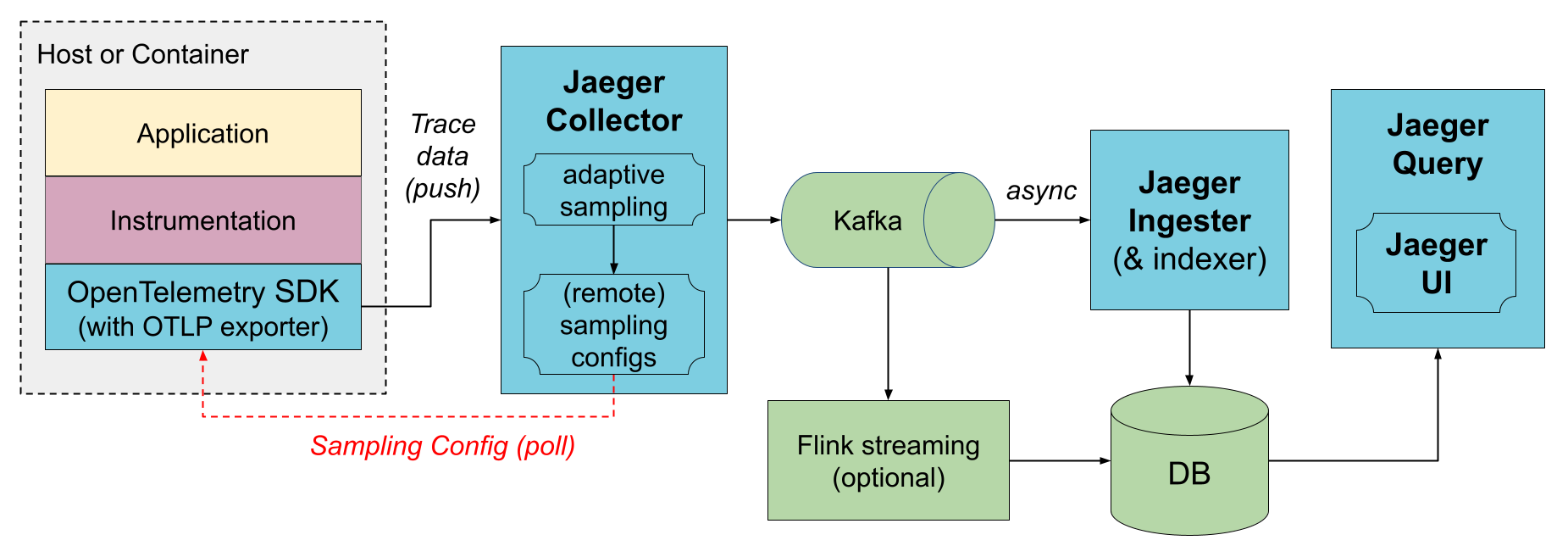

Monitoring: Know When Things Break

Add basic monitoring because you need to know when (not if) things break:

## monitoring.py

import time

import logging

from collections import defaultdict

class SimpleMetrics:

def __init__(self):

self.request_count = 0

self.error_count = 0

self.total_time = 0

self.last_error = None

self.start_time = time.time()

def record_request(self, duration, success=True):

self.request_count += 1

self.total_time += duration

if not success:

self.error_count += 1

def record_error(self, error_msg):

self.error_count += 1

self.last_error = error_msg

logging.error(f"Error recorded: {error_msg}")

def get_stats(self):

uptime = time.time() - self.start_time

avg_time = self.total_time / self.request_count if self.request_count > 0 else 0

error_rate = self.error_count / self.request_count if self.request_count > 0 else 0

return {

"uptime_seconds": uptime,

"total_requests": self.request_count,

"error_count": self.error_count,

"error_rate": f"{error_rate:.2%}",

"avg_response_time": f"{avg_time:.2f}s",

"last_error": self.last_error

}

## Use in your agents:

metrics = SimpleMetrics()

## In tool functions:

start_time = time.time()

try:

# do work

result = do_something()

metrics.record_request(time.time() - start_time, success=True)

return result

except Exception as e:

metrics.record_request(time.time() - start_time, success=False)

metrics.record_error(str(e))

raise

Environment Variables: The Sane Way

## .env.production

## Keep it simple - exotic configs break in production

LOG_LEVEL=INFO

MAX_CONCURRENT_TASKS=10 # Start low, increase carefully

REQUEST_TIMEOUT=30 # 30 seconds is enough

HEALTH_CHECK_INTERVAL=60 # Check agents every minute

## Database/Cache (if you add them later)

REDIS_URL=redis://localhost:6379

DATABASE_URL=postgresql://user:pass@localhost/agents

## API Keys (set these as environment variables, not in files)

OPENAI_API_KEY=${OPENAI_API_KEY}

SEARCH_API_KEY=${SEARCH_API_KEY}

Deployment Checklist (From Hard Experience)

Before deploying:

- Test locally with Docker -

docker-compose up and test everything

- Check health endpoints - Every service needs

/health

- Test restart behavior - Kill containers, see if they recover

- Monitor memory usage -

docker stats to see which agents are memory hogs

- Test network failure - Disconnect containers, see what breaks

- Check logs -

docker logs container_name should show useful info

Production deployment commands:

## Deploy

docker-compose -f docker-compose.prod.yml up -d

## Check status

docker-compose ps

## Watch logs

docker-compose logs -f

## Restart broken service

docker-compose restart coordinator

## Nuclear option (when everything is broken)

docker-compose down && docker-compose up -d

Common production failures:

- Agents can't reach each other → Check Docker networking

- Memory leaks crash containers → Add memory limits

- Logs fill up disk → Configure log rotation

- Health checks fail → Increase timeout values

- Everything works then stops → Check resource limits

Testing and deployment will humble you. The code that works perfectly on your laptop will find new ways to break in production. Plan accordingly.