The Multi-API Clusterfuck is Finally Over

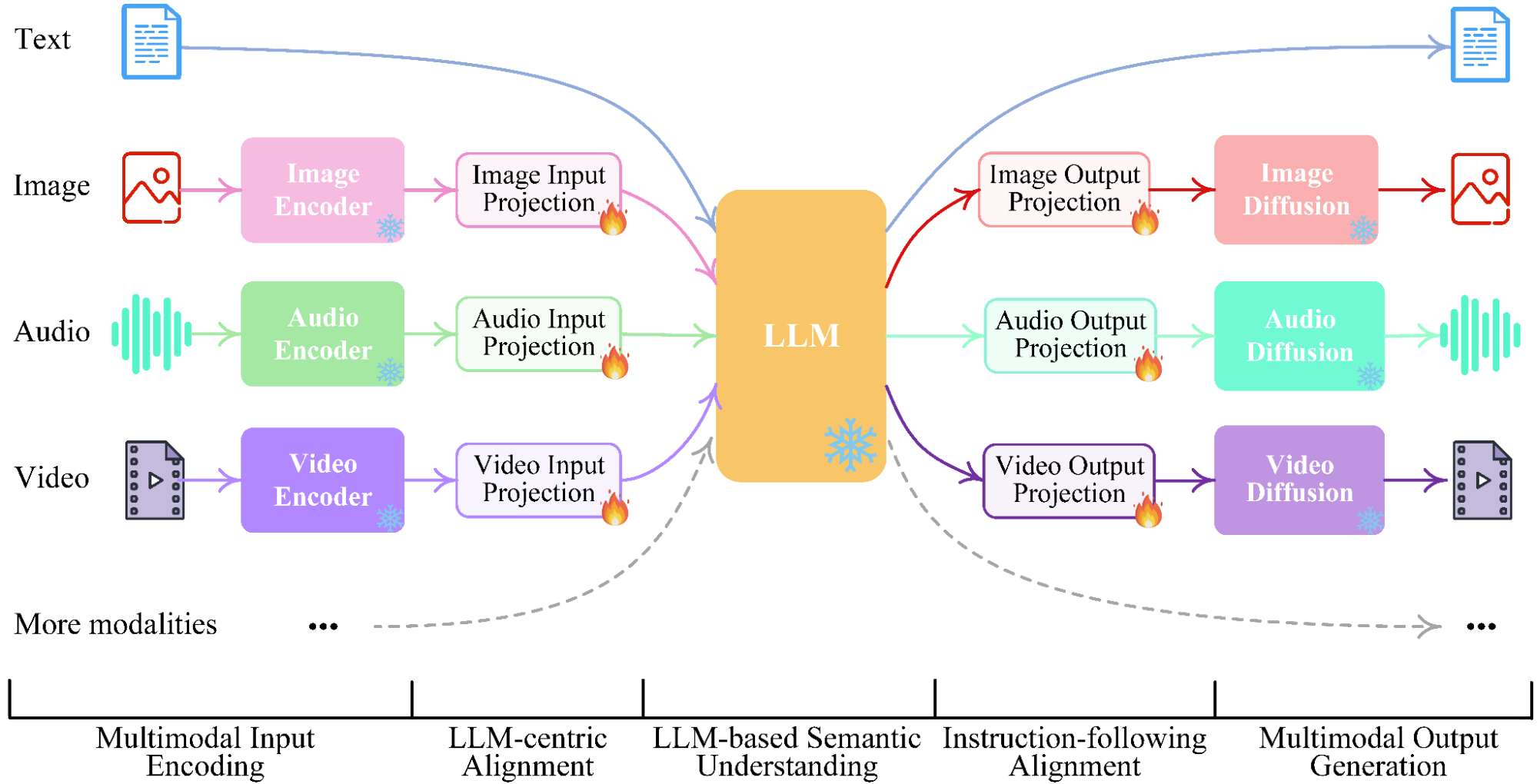

I was running this nightmare where we'd hit one API for text, another for images, then try to make sense of the results. Half the time the context vanished and users got responses that sounded like they came from different conversations entirely.

GPT-5 fixed that mess. One call now handles everything. No more trying to sync three different rate limits or debugging why the image analysis forgot what the text was about.

The integration I was dreading? Took maybe 30-45 minutes instead of the full day I'd blocked off. Just point your API calls at the new endpoint and... it actually works? I kept waiting for the other shoe to drop. Kept refreshing the logs expecting to see some catastrophic failure, but nope.

What stopped being complete garbage:

- Context doesn't vanish between calls anymore

- Response times dropped by more than half - used to be 4-5 seconds, now like 1.5-2 seconds

- One error handler instead of three different "what the hell went wrong" scenarios

- AWS bill went down maybe 15-20% from fewer roundtrips

The Context Window Actually Works Now

GPT-5 has a 400K context window and for once it doesn't forget shit halfway through. I dumped an entire Django project into it - maybe 50K lines of code - and it could still reference functions from the beginning when analyzing stuff at the end.

Before, I'd have to chunk everything up and lose context between pieces. Now I can throw whole codebases at it and it just works. Game changer for code reviews and refactoring.
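If you want a quick sanity check before dumping a whole repo into one call, something like the sketch below is enough. It's a rough estimate, not a real tokenizer: the 4-characters-per-token ratio is a rule of thumb, the 20K tokens reserved for the reply is a number I made up, and I'm assuming the 400K window has to hold both the prompt and the response. `estimate_tokens` and `fits_in_context` are hypothetical helper names, not part of any SDK.

```python
from typing import Iterable

CONTEXT_WINDOW = 400_000  # window size quoted above
CHARS_PER_TOKEN = 4       # rough rule of thumb, NOT a real tokenizer (assumption)


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(files: Iterable[str], reserve_for_output: int = 20_000) -> bool:
    """True if the estimated prompt size still leaves room for the reply.

    reserve_for_output is an arbitrary safety margin (assumption).
    """
    total = sum(estimate_tokens(source) for source in files)
    return total + reserve_for_output <= CONTEXT_WINDOW
```

Code tends to tokenize worse than prose, so treat a "fits" answer near the limit as a maybe and swap in a real tokenizer if it matters.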

The Pricing Will Hurt Your Feelings

Here's where it gets expensive: $1.25 per million input tokens and $10 per million output tokens. Sounds reasonable until you realize GPT-5 won't shut the fuck up. Where GPT-4 might give you three sentences, GPT-5 writes entire essays explaining why water is wet.

My API costs went up like 35-45% the first week because every response turned into a philosophy lecture. You can throw "be concise" or "one sentence only" in your prompts, but GPT-5 still wants to show its work like it's getting graded on participation. I swear it's like asking a junior dev a yes/no question and getting a 20-slide presentation.
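To see why the verbosity hurts so much, here's the arithmetic as a small sketch. The two prices are the ones quoted above; the token counts in the example are made up for illustration, not measurements from my traffic.

```python
INPUT_PRICE_PER_M = 1.25    # USD per million input tokens (from the pricing above)
OUTPUT_PRICE_PER_M = 10.00  # USD per million output tokens (from the pricing above)


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a single request at the prices above."""
    return (
        input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    ) / 1_000_000


# Hypothetical request: same 2,000-token prompt, short vs. long answer.
terse = request_cost(2_000, 100)   # about $0.0035
chatty = request_cost(2_000, 600)  # about $0.0085
```

Output tokens cost 8x what input tokens do, so in this made-up case a reply that is six times longer more than doubles the cost of the call even though the prompt didn't change.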

What Broke During Migration

Three things that immediately fucked up:

Rate limiting got weird - Images suddenly count as like 3-5 requests each, which nobody bothered mentioning in the docs. Burned through my quota in maybe two days and spent half an hour confused why I was getting `HTTP 429: Rate limit exceeded for requests per minute` instead of the usual daily quota message.

Response parsing exploded - Our JSON parsing couldn't handle GPT-5's verbose responses. What used to be `{"answer": "yes"}` became `{"answer": "yes", "reasoning": "Well, considering the various factors..."}`. Spent four hours thinking the API was broken when it was just our regex choking on the extra verbosity.

Error codes from hell - Error handling that worked fine with GPT-4 started throwing exceptions I'd never seen. Got hit with `content_policy_violation` on requests that worked fine in GPT-4, plus some mysterious `processing_error` that isn't even documented yet. Pro tip: add a generic catch-all because you will hit undocumented errors.
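Here's a minimal sketch of the defensive handling all three of these pushed me toward. It is not the code we actually shipped, and none of it comes from an official SDK: `parse_answer`, `classify_error`, and `backoff_delay` are hypothetical helpers, and treating `processing_error` as retryable is my guess, since that code isn't documented. The ideas are to parse the JSON properly and ignore extra keys instead of regex-matching the whole body, to send unknown error codes to a catch-all, and to back off with jitter on a 429.

```python
import json
import random
from typing import Optional


def parse_answer(raw: str) -> str:
    """Pull out the "answer" field and ignore any extra keys like "reasoning"."""
    payload = json.loads(raw)
    if not isinstance(payload, dict) or "answer" not in payload:
        raise ValueError(f"unexpected response shape: {raw[:80]!r}")
    return str(payload["answer"])


def classify_error(status: int, code: Optional[str]) -> str:
    """Map an error to an action: 'retry', 'reject', or 'unknown'."""
    if status == 429:
        return "retry"    # rate limited: wait and try again
    if code == "content_policy_violation":
        return "reject"   # retrying the same input won't help
    if code == "processing_error":
        return "retry"    # undocumented; treating it as transient is an assumption
    return "unknown"      # catch-all: log it and fail gracefully


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 30.0) -> float:
    """Exponential backoff with full jitter, in seconds (attempt starts at 0)."""
    return random.uniform(0, min(cap, base * 2 ** attempt))
```

With this, both `{"answer": "yes"}` and the chattier version with a `reasoning` key come back as `yes`, and a brand new error code lands in the `unknown` branch instead of blowing up the handler.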

Is It Actually Better?

For multimodal stuff? Absolutely. The unified API saved me weeks of integration hell. For pure text generation? Honestly, GPT-4o is probably fine unless you need the bigger context window.

The reasoning is genuinely better - I can ask complex questions and get explanations that actually make sense instead of confident bullshit. But you pay for it in tokens and response time.

Should You Migrate?

Depends if you're actually solving problems or just want to play with the shiny new toy. If you're juggling multiple APIs and losing context between calls, GPT-5 will save your sanity. If your current setup works and you don't have bandwidth for migration headaches, stick with what doesn't break.

I migrated because our multimodal stuff was held together with duct tape and hope. Three weeks later, I'm glad I suffered through the weekend debugging session, but I probably should have waited another month for other people to find the edge cases first.