kubeadm is a fucking nightmare. Install kubelet, then kubectl, then kubeadm, then containerd, then debug why they're all fighting over different socket paths. Spend an afternoon figuring out why the API server crashes on boot because some systemd unit didn't start in the right order.

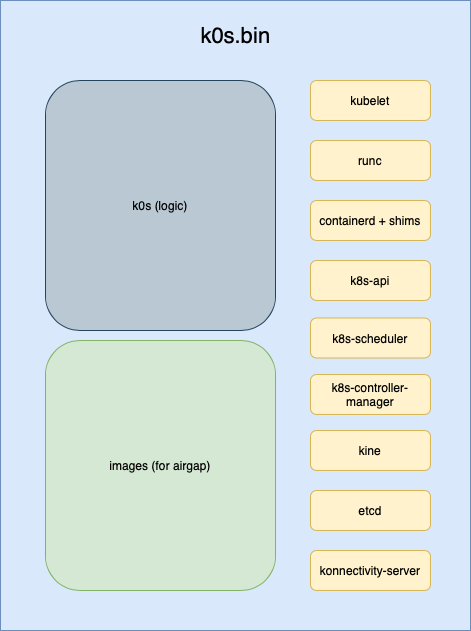

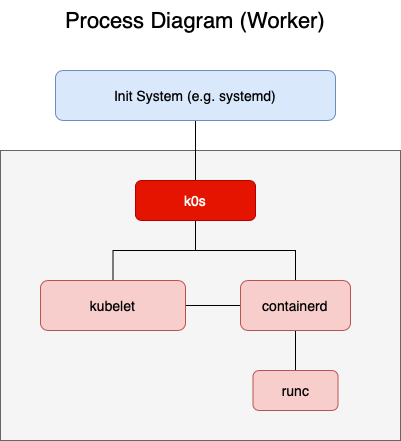

k0s throws all of that into one 165MB binary. Same Kubernetes APIs, just packaged by people who apparently understand how software distribution works. Download it, run it, cluster exists. No version compatibility matrix, no hunting down the right containerd release.
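For reference, here's roughly what "download it, run it" looks like, wrapped in a small Python helper so it can be dry-run first. The commands are the single-node quick start as we remember it from the k0s docs. Treat them as an assumption and check them against the docs for your version. The helper name `run_quickstart` is ours, not part of k0s.

```python
import subprocess

# Single-node quick start as recalled from the k0s docs (assumption: verify
# against the docs for the k0s version you install).
QUICKSTART = [
    "curl --proto '=https' --tlsv1.2 -sSf https://get.k0s.sh | sudo sh",  # fetch the single k0s binary
    "sudo k0s install controller --single",  # register a combined controller+worker service
    "sudo k0s start",                        # start that service
    "sudo k0s status",                       # confirm it came up
    "sudo k0s kubectl get nodes",            # k0s bundles kubectl, no separate install
]


def run_quickstart(steps=QUICKSTART, dry_run=True):
    """Print every step; only execute them when dry_run is False (hypothetical helper)."""
    executed = []
    for step in steps:
        print(("DRY RUN: " if dry_run else "RUN: ") + step)
        if not dry_run:
            # shell=True because the download step pipes curl into sh
            subprocess.run(step, shell=True, check=True)
        executed.append(step)
    return executed


if __name__ == "__main__":
    run_quickstart()  # dry run by default; pass dry_run=False on a throwaway VM
```

Defaulting to a dry run is deliberate: piping a script into `sudo sh` is something you want to read before you do it.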

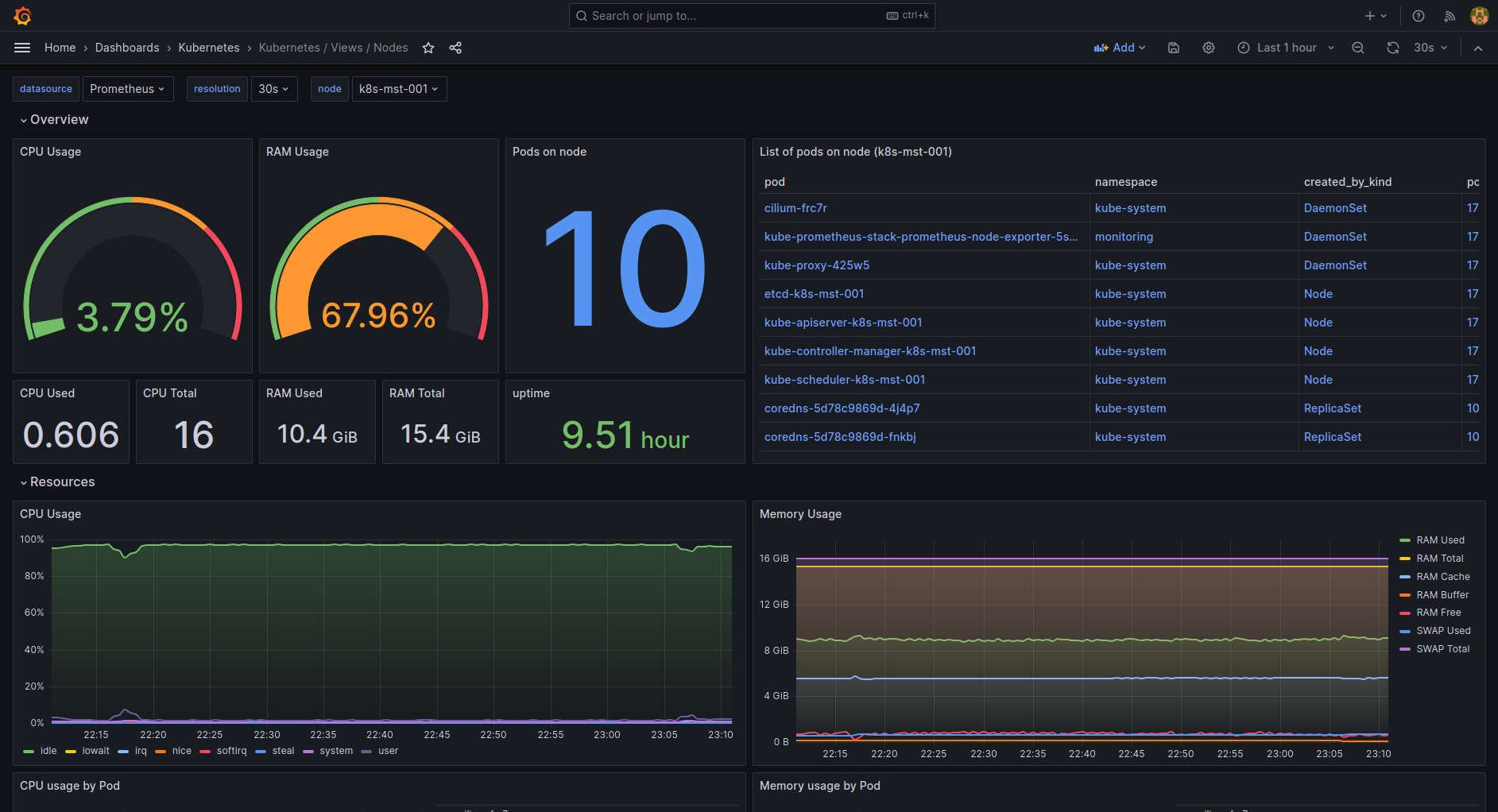

Been testing it for a few months now in staging. The release we're on (the v1.30.x series) is pretty solid, and way less painful than our old kubeadm setup. Mirantis maintains it, so it's not some random side project that'll disappear when the maintainer gets bored.

Everything crammed into 165MB

It's bigger than k3s but ships the standard Kubernetes components instead of weird Rancher replacements: kube-apiserver, etcd, kube-scheduler, kube-controller-manager, kubelet, containerd, CoreDNS. All the stuff that usually breaks when you mix versions.

containerd is way better than Docker's runtime. In our clusters, Docker would shit itself on networking edge cases or once we scaled past about 8 nodes. containerd just works without randomly dying when you restart pods.

Zero dependencies (mostly)

Works on pretty much any Linux with kernel 3.10+. That covers CentOS 7 (which ships 3.10) and everything newer, and CentOS 7 is already ancient. Tested it on:

x86-64, ARM64: Runs fine. A Raspberry Pi 4 (ARM64) handles small workloads well.

ARMv7: A Raspberry Pi 3 works but gets slow with more than a few pods.

Ubuntu/Debian: Just works.

CentOS/RHEL: Need to fuck with firewalld rules but runs fine after that.

SUSE: Haven't broken it yet.
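If you want to sanity-check a box before trying, a quick preflight covers the two things above: kernel version and architecture. This is a minimal sketch. The 3.10 floor is the figure quoted above, not something we pulled from official k0s requirements, and the architecture names are what `platform.machine()` typically reports on Linux. The function names are hypothetical.

```python
import platform
import re

# Kernel floor as stated above (3.10); an assumption, not an official k0s requirement.
MIN_KERNEL = (3, 10)

# The architectures from the list above, keyed by typical platform.machine() values on Linux.
ARCH_NOTES = {
    "x86_64": "x86-64: runs fine",
    "aarch64": "ARM64: runs fine (Pi 4 handles small workloads)",
    "armv7l": "ARMv7: works, slow past a few pods",
}


def parse_kernel(release):
    """Extract (major, minor) from a release string like '5.15.0-91-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def preflight(release=None, machine=None):
    """Return (kernel_ok, arch_note); defaults to inspecting the current host."""
    release = release or platform.release()
    machine = machine or platform.machine()
    kernel_ok = parse_kernel(release) >= MIN_KERNEL  # tuple comparison: (4, 3) >= (3, 10)
    arch_note = ARCH_NOTES.get(machine, f"{machine}: not in our tested list")
    return kernel_ok, arch_note


if __name__ == "__main__":
    print(preflight())
```

Calling `preflight("3.10.0-1160.el7.x86_64", "x86_64")` returns `(True, "x86-64: runs fine")`, so a stock CentOS 7 kernel just clears the bar.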

The main gotcha is enterprise Linux with custom security shit. Spent 3 hours debugging one company's "hardened" RHEL where SELinux blocked containerd from accessing its socket. Had to figure out the right security context rules. Ubuntu just works without the security theater.
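The first thing worth checking on one of those boxes is whether SELinux is actually enforcing and whether the containerd socket even exists. A minimal triage sketch: it reads the standard selinuxfs `enforce` file (`1` means enforcing, `0` permissive, missing means SELinux is off or selinuxfs isn't mounted). The socket path `/run/k0s/containerd.sock` is an assumption about where k0s puts its containerd socket; confirm it on your own host. Both function names are hypothetical.

```python
from pathlib import Path


def selinux_mode(selinuxfs="/sys/fs/selinux"):
    """Return 'enforcing', 'permissive', or 'disabled' from selinuxfs."""
    enforce = Path(selinuxfs) / "enforce"
    if not enforce.exists():
        # selinuxfs not mounted: SELinux is disabled or not built into this kernel
        return "disabled"
    return "enforcing" if enforce.read_text().strip() == "1" else "permissive"


def socket_present(path="/run/k0s/containerd.sock"):
    """True only if path exists and is a Unix socket (path is an assumption)."""
    return Path(path).is_socket()


if __name__ == "__main__":
    mode = selinux_mode()
    print(f"SELinux: {mode}, containerd socket present: {socket_present()}")
    if mode == "enforcing":
        print("Check the audit log for AVC denials mentioning containerd.")
```

If SELinux is enforcing and the socket is there but containerd still can't reach it, the AVC denials in the audit log are where the actual fix starts.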