Let's cut the bullshit. MCP is 10 months old, and production deployments are failing in predictable ways. June 2025 alone saw massive security breaches, data leaks at major companies like Asana, and hundreds of misconfigured servers exposing enterprise data.

The Big Three: What Kills MCP Deployments

Transport Layer Failures (60% of production issues): STDIO buffering hangs your server for hours. HTTP/SSE connections drop under load and never recover. Your server starts, health checks pass, then dies silently when real traffic hits. I've debugged this exact scenario at 2am more times than I want to count. The official troubleshooting guide covers these failure patterns in detail.

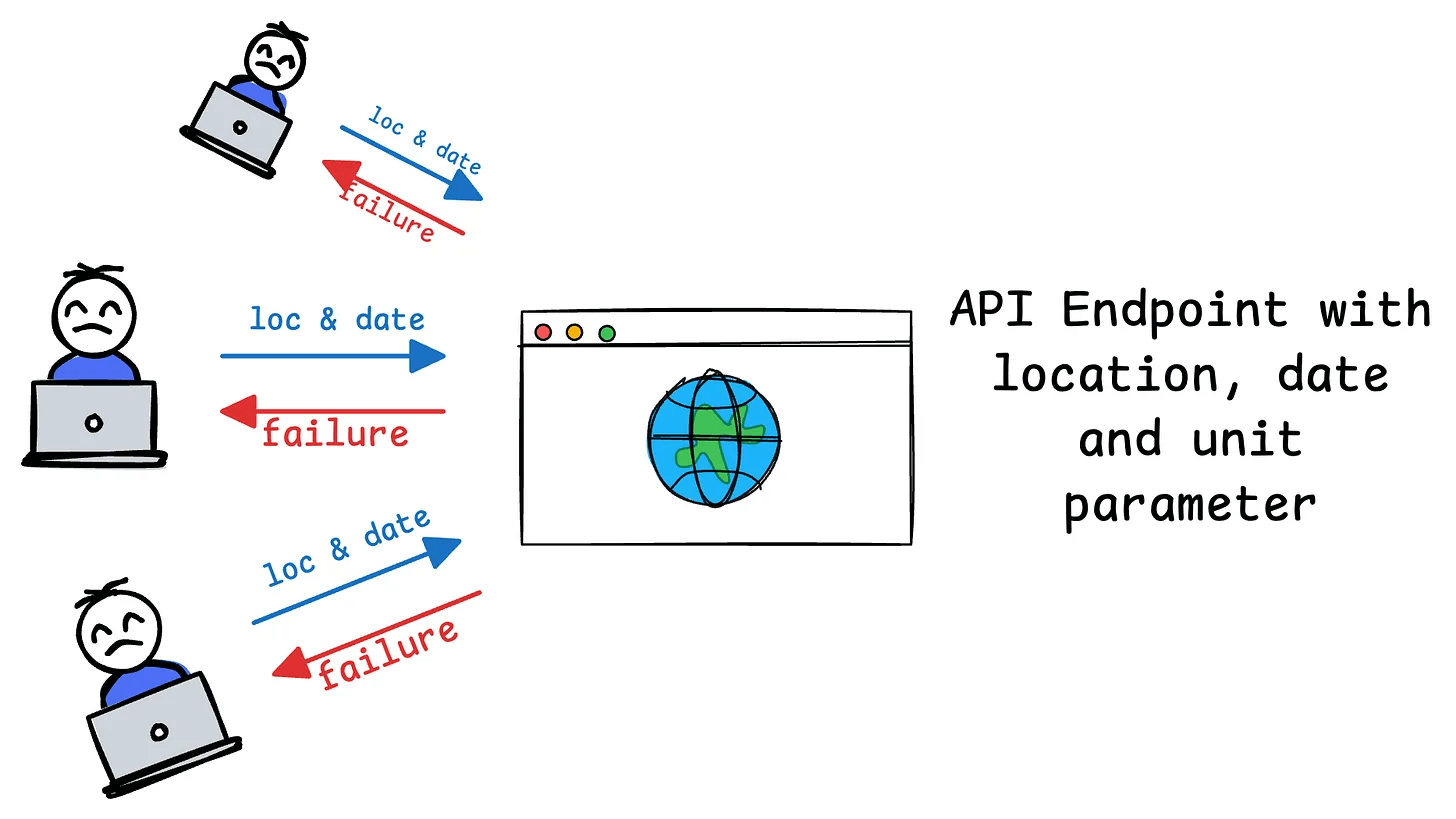

Authentication Nightmares (25% of incidents): OAuth flows break between client and server updates. API keys get rotated and nobody updates the configs. Asana's MCP server leaked customer data across organizations because of auth boundary failures. The new OAuth 2.1 spec helps, but migration is a pain.

Dependency Hell (15% of problems): Node.js version mismatches between dev and prod. Python package conflicts in containers. The TypeScript SDK works fine until you hit a specific combination of libraries that makes everything explode. MCP servers are fragmented across 7,000+ instances with no quality control.

The STDIO Transport Problem (The #1 Production Killer)

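STDIO transport works great locally, then murders you in production. The issue: buffering doesn't behave the same way across environments. Many runtimes line-buffer stdout when it's attached to a terminal but block-buffer it when it's a pipe, so messages that flush instantly on your laptop can sit in a buffer in production. On Windows WSL2, STDIO randomly hangs when log rotation kicks in. In Docker containers, stdout buffer limits cause silent failures.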

The nuclear fix: Switch to HTTP/SSE for production. Yes, it's more complex, but STDIO in production is playing Russian roulette. If you must use STDIO, implement these specific mitigations:

- Force unbuffered output (Python): `export PYTHONUNBUFFERED=1`

- Set explicit timeouts: 30 seconds max for any operation

- Monitor process health independently of the client connection

- Always run STDIO servers with process supervisors (systemd, supervisor, etc.)
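
The first two mitigations can also be applied from inside a Python server. This is a minimal sketch, not the SDK's own mechanism: `run_with_timeout` and `slow_tool_call` are hypothetical names, and the 30-second ceiling is just the number from the list above.

```python
import asyncio
import sys

# Flush stdout at every newline so a protocol message never sits in a block
# buffer. The guard covers environments where stdout has been replaced by an
# object without reconfigure().
if hasattr(sys.stdout, "reconfigure"):
    sys.stdout.reconfigure(line_buffering=True)

OPERATION_TIMEOUT_S = 30.0  # assumption: the 30-second ceiling suggested above


async def run_with_timeout(coro, timeout: float = OPERATION_TIMEOUT_S):
    """Await `coro`, but give up after `timeout` seconds instead of hanging."""
    try:
        return await asyncio.wait_for(coro, timeout=timeout)
    except asyncio.TimeoutError:
        # Log to stderr: on STDIO transport, stdout carries protocol messages.
        print(f"operation exceeded {timeout}s and was cancelled", file=sys.stderr)
        raise


async def slow_tool_call(delay: float) -> str:
    # Hypothetical stand-in for a real tool handler.
    await asyncio.sleep(delay)
    return "done"


if __name__ == "__main__":
    print(asyncio.run(run_with_timeout(slow_tool_call(0.01))))
```

Wrapping every handler this way means a stuck operation surfaces as an error the supervisor can see, rather than a server that looks alive and answers nothing.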

Security Holes That Are Currently Being Exploited

The September 2025 threat landscape is brutal. Security researchers found hundreds of MCP servers with remote code execution vulnerabilities. Here are the actual attack vectors being used right now:

SQL Injection in Official SQLite Server: Anthropic's reference SQLite server - forked 5,000+ times - has a SQL injection bug that lets attackers exfiltrate data and plant stored prompts. If you're using the SQLite server, patch immediately or your data is owned.
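
The exact bug in the reference server isn't reproduced here. The sketch below only illustrates the general class of problem, using a hypothetical `users` table: splicing input into SQL text versus binding it as a parameter.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str):
    # Vulnerable: user input becomes part of the SQL text itself.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str):
    # Parameterized: the driver binds `name` as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


# Hypothetical in-memory table, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
leaked = find_user_unsafe(conn, payload)  # the injected OR clause matches every row
blocked = find_user_safe(conn, payload)   # no user has that literal name
```

If your server builds queries from model-supplied arguments, treat those arguments exactly like untrusted web input.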

Prompt Injection via GitHub MCP: GitHub's official MCP server had a prompt injection vulnerability that let malicious issues manipulate AI responses. The attack: submit an issue with embedded prompts that hijack the AI when someone asks it to read or summarize that issue.

Mass Configuration Errors: Half of the 7,000 public MCP servers are misconfigured and externally accessible without authentication. Automated scanners are finding and compromising these daily.
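
At minimum, an externally reachable server should reject requests that carry no credentials. The following is a rough sketch of a fail-closed bearer-token check, assuming a static shared token held in a hypothetical `MCP_AUTH_TOKEN` environment variable. It is a stopgap, not a replacement for the OAuth 2.1 flow mentioned earlier.

```python
import hmac
import os


def is_authorized(headers: dict, expected_token: str) -> bool:
    """Accept only a well-formed 'Authorization: Bearer <token>' header."""
    if not expected_token:
        return False  # fail closed when no token is configured
    normalized = {key.lower(): value for key, value in headers.items()}
    scheme, _, presented = normalized.get("authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not presented:
        return False
    # Constant-time comparison avoids leaking the token through timing.
    return hmac.compare_digest(presented.encode(), expected_token.encode())


# Hypothetical variable name; an unset variable means every request is rejected.
EXPECTED_TOKEN = os.environ.get("MCP_AUTH_TOKEN", "")
```

The important property is the first branch: a missing config value locks the server down instead of opening it up.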

The "Connection Refused" Error Everyone Gets

Error code -32000 with "could not connect to MCP server" is the most common production failure. Despite the generic message, there are only six actual causes:

- Server command path wrong (40% of cases): The `npx` or `python` executable isn't in PATH in production

- Port already in use (20%): Another process grabbed your port

- Permission denied (15%): User can't execute the server binary

- Dependency missing (15%): Package not installed in production environment

- Environment variable not set (8%): Database URLs, API keys missing

- Firewall blocking connection (2%): Network policy blocking the port

The 5-minute debug process:

    # Test server command directly
    npx @your-org/mcp-server --transport stdio

    # Check if port is available
    netstat -tulpn | grep :8000

    # Verify all environment variables
    env | grep -E "(DATABASE|API|MCP)"

    # Test network connectivity (for HTTP transport)
    curl -v https://api.github.com/zen
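
The same checks can be scripted so they run before every deploy. This is a sketch under assumptions: `preflight` is a hypothetical helper, it covers only the command, port, and environment-variable causes from the list above, and the port check assumes the server listens on localhost.

```python
import os
import shutil
import socket
from typing import List, Optional, Sequence


def preflight(
    command: str,
    port: Optional[int] = None,
    required_env: Sequence[str] = (),
) -> List[str]:
    """Return a list of human-readable problems; an empty list means all clear."""
    problems = []

    # shutil.which returns None if the command is not on PATH or not executable,
    # which covers both the "wrong path" and "permission denied" cases.
    if shutil.which(command) is None:
        problems.append(f"command not found or not executable: {command}")

    # Try to bind the port ourselves; failure means something else holds it
    # (or we lack permission to use it).
    if port is not None:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
            try:
                probe.bind(("127.0.0.1", port))
            except OSError as exc:
                problems.append(f"cannot bind port {port}: {exc}")

    for name in required_env:
        if not os.environ.get(name):
            problems.append(f"environment variable not set: {name}")

    return problems


if __name__ == "__main__":
    # Hypothetical values; substitute your own command, port, and variables.
    for problem in preflight("npx", port=8000, required_env=("DATABASE_URL",)):
        print(problem)
```

Run it in the production image itself, not on your laptop, since the whole point is to catch differences between the two.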

Container Deployment Realities

Docker deployments fail in specific ways. The promise of "runs everywhere" breaks when you hit real infrastructure constraints:

Memory limits kill servers silently: MCP servers can balloon to 2GB+ when processing large resources. Set memory limits, but also implement resource cleanup, or Kubernetes will OOMKill your server without warning.
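
One form of resource cleanup is capping how many bytes of resource content the server keeps in memory. The class below is an illustrative sketch, not part of any MCP SDK: `BoundedResourceCache` is a hypothetical name, and it evicts the least recently used entries once a byte budget is exceeded.

```python
from collections import OrderedDict
from typing import Optional


class BoundedResourceCache:
    """LRU cache that evicts entries once a total byte budget is exceeded."""

    def __init__(self, max_bytes: int) -> None:
        self.max_bytes = max_bytes
        self.used_bytes = 0
        self._items: "OrderedDict[str, bytes]" = OrderedDict()

    def put(self, uri: str, data: bytes) -> None:
        # Replacing an entry first releases the bytes of the old value.
        if uri in self._items:
            self.used_bytes -= len(self._items.pop(uri))
        if len(data) > self.max_bytes:
            return  # larger than the whole budget: do not cache at all
        self._items[uri] = data
        self.used_bytes += len(data)
        # Evict least recently used entries until we are back under budget.
        while self.used_bytes > self.max_bytes:
            _, evicted = self._items.popitem(last=False)
            self.used_bytes -= len(evicted)

    def get(self, uri: str) -> Optional[bytes]:
        data = self._items.get(uri)
        if data is not None:
            self._items.move_to_end(uri)  # mark as most recently used
        return data
```

Set the budget well below the container's memory limit so the cache sheds load before the kernel does it for you.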

Health checks that lie: A server can respond to /health but be completely broken for actual MCP protocol requests. Test the actual MCP endpoints, not just HTTP responses.
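
A deeper check speaks the protocol instead of hitting `/health`. The sketch below sends an MCP `initialize` request to a STDIO server and verifies the JSON-RPC reply. Treat the details as assumptions: the `protocolVersion` string must match a revision your server supports, `probe_stdio_server` is a hypothetical helper, and it expects the server to exit once stdin closes (a server that keeps running is reported unhealthy after the timeout).

```python
import json
import subprocess

INITIALIZE_REQUEST = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2025-06-18",  # assumption: adjust to your server
        "capabilities": {},
        "clientInfo": {"name": "healthcheck", "version": "0.1.0"},
    },
}


def is_valid_initialize_response(line: str) -> bool:
    """True if `line` is a JSON-RPC result answering INITIALIZE_REQUEST."""
    try:
        msg = json.loads(line)
    except json.JSONDecodeError:
        return False
    return (
        isinstance(msg, dict)
        and msg.get("jsonrpc") == "2.0"
        and msg.get("id") == INITIALIZE_REQUEST["id"]
        and isinstance(msg.get("result"), dict)
        and "protocolVersion" in msg["result"]
    )


def probe_stdio_server(argv: list, timeout: float = 10.0) -> bool:
    """Start the server, send one initialize request, and check the reply."""
    try:
        proc = subprocess.run(
            argv,
            input=json.dumps(INITIALIZE_REQUEST) + "\n",  # newline-delimited JSON
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except (subprocess.TimeoutExpired, OSError):
        return False
    return any(is_valid_initialize_response(l) for l in proc.stdout.splitlines())
```

For HTTP transport the same request body applies, but how you post it depends on your server's endpoint layout, so that part is left out here.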

Log aggregation breaks STDIO: Centralized logging systems often don't handle STDIO transport properly. You'll lose critical debugging information right when you need it most.

The fix: Use HTTP/SSE transport with proper structured logging. The overhead is worth the operational sanity.
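
For the logging half of that fix, a minimal sketch using only the standard library: one JSON object per line, written to stderr so it never mixes with protocol traffic. The logger name `mcp_server` and the field names are assumptions, so match them to whatever your log aggregator expects.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single line of JSON."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exc"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def configure_logging(level: int = logging.INFO) -> logging.Logger:
    handler = logging.StreamHandler(sys.stderr)  # stderr, never stdout
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger("mcp_server")  # hypothetical logger name
    logger.handlers = [handler]
    logger.setLevel(level)
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


if __name__ == "__main__":
    log = configure_logging()
    log.warning("port %d already in use", 8000)
```

One line per event is what lets the aggregator parse and index the logs instead of guessing at multi-line boundaries.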