Amazon EKS is AWS's managed Kubernetes offering, and it costs $0.10/hour (about $73/month) per cluster just for the control plane, before you run a single pod. Clusters on extended-support Kubernetes versions cost $0.60/hour. EKS launched in 2018, after everyone begged AWS to stop making us choose between self-hosting Kubernetes and using their proprietary ECS bullshit.
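The $73 figure is the hourly fee multiplied by the roughly 730 hours in a month. The fee is charged per cluster, so running separate dev, staging, and prod clusters triples it. Here is a quick sketch of that arithmetic, assuming standard-support pricing. `control_plane_cost_cents` is a hypothetical helper, and it deliberately leaves out worker nodes, load balancers, and storage:

```python
# Standard-support EKS control-plane fee, in US cents per cluster-hour.
# Integer cents avoid floating-point rounding surprises.
CONTROL_PLANE_CENTS_PER_HOUR = 10

# Conventional monthly estimate: 8,760 hours per year / 12 = 730 hours.
HOURS_PER_MONTH = 730


def control_plane_cost_cents(clusters: int, hours: int = HOURS_PER_MONTH) -> int:
    """Control-plane cost in cents for `clusters` clusters over `hours` hours.

    Worker nodes, load balancers, EBS volumes, and data transfer are billed
    separately and are NOT included here.
    """
    if clusters < 0 or hours < 0:
        raise ValueError("clusters and hours must be non-negative")
    return clusters * hours * CONTROL_PLANE_CENTS_PER_HOUR


if __name__ == "__main__":
    for n in (1, 3, 5):
        cents = control_plane_cost_cents(n)
        print(f"{n} cluster(s): ${cents / 100:,.2f}/month before any pods run")
```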

The deal is simple: AWS runs the control plane (API server, etcd, scheduler, controller-manager) across multiple Availability Zones, so losing one AZ doesn't take it down. You handle everything else. It doesn't "eliminate operational complexity": you still need to understand VPCs, security groups, IAM roles, CNI plugins, and why your pods keep getting OOMKilled. But at least when the control plane breaks, you can blame AWS instead of your teammate who thought editing etcd directly was a good idea.

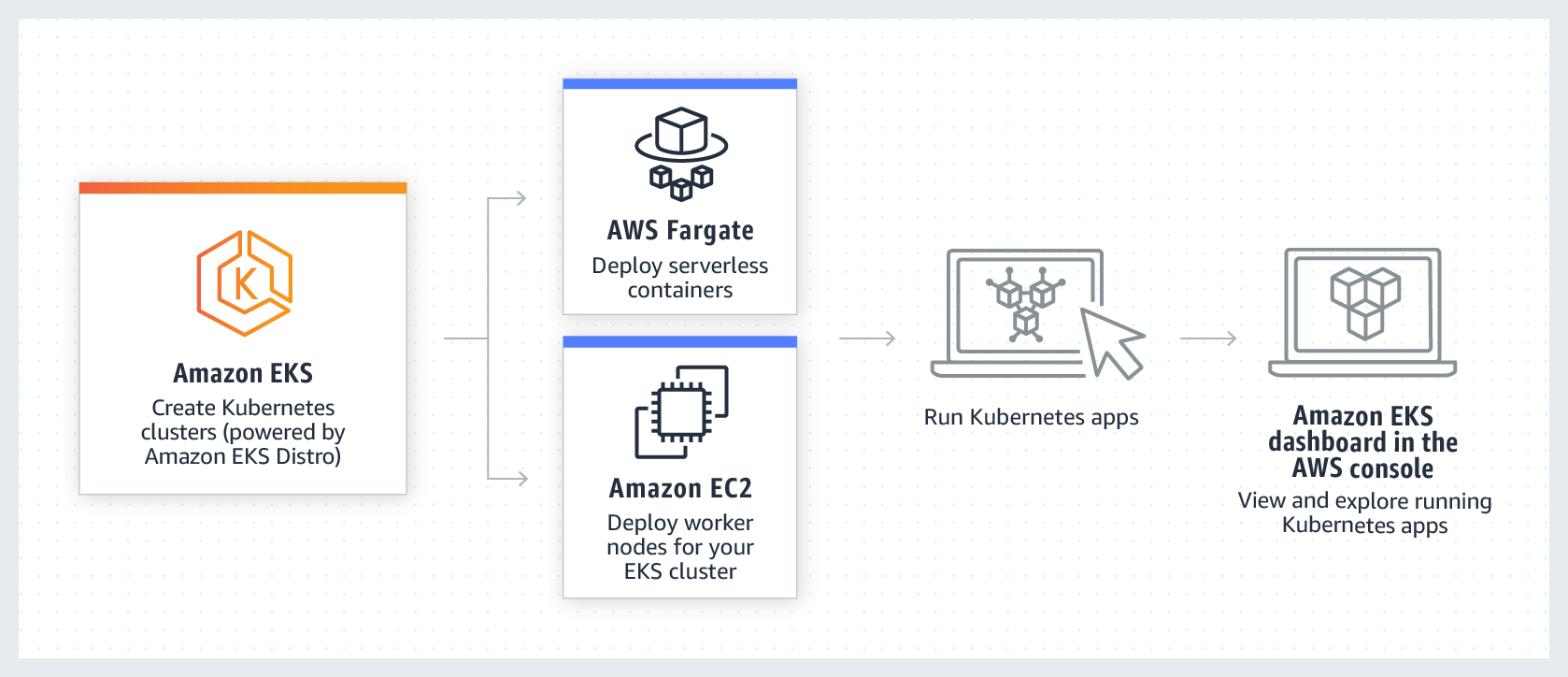

How EKS Actually Works

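EKS runs the Kubernetes control plane in an AWS-owned account and VPC that you can't access. Your worker nodes run in your own VPC, and the control plane reaches them through network interfaces that EKS places in your subnets. That's great when it works. It's a nightmare when AWS breaks something and all you can see is whatever control plane logs you remembered to send to CloudWatch (they're off by default).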

Your worker nodes can be:

- EC2 instances: You manage the OS and security patches, and you get to debug why kubelet won't start after the latest AMI update

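- Fargate: AWS manages the nodes, but you pay more per vCPU and GB than equivalent EC2 capacity (and far more than Spot). Each new pod typically waits 30+ seconds for capacity, and DaemonSets, GPUs, and privileged containers aren't supported. That makes it a poor fit for anything that has to scale out fast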

- Hybrid Nodes: Run worker nodes on your own on-premises hardware, if you enjoy dealing with the complexity of cloud and on-prem at the same time
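Whether Fargate actually costs more depends on how well you would pack pods onto EC2 nodes. Fargate bills each pod for the vCPU and memory it's sized at. On EC2, each pod effectively pays its share of the node. The rough comparison below sketches both. The prices are made-up placeholders, not current AWS rates, and `PodSize`, `fargate_hourly`, and `ec2_share_hourly` are hypothetical names, so plug in your own region's numbers:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodSize:
    vcpu: float
    memory_gib: float


def fargate_hourly(pod: PodSize, vcpu_hour: float, gib_hour: float) -> float:
    """Fargate-style billing: pay for the vCPU and memory the pod is sized at."""
    return pod.vcpu * vcpu_hour + pod.memory_gib * gib_hour


def ec2_share_hourly(pod: PodSize, node_hour: float, node_vcpu: float,
                     node_memory_gib: float, utilization: float) -> float:
    """Per-pod share of an EC2 node's hourly price.

    Pods are assumed to pack until the node's scarcer resource runs out,
    scaled by how full you actually keep the node (0 < utilization <= 1).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    pods_per_node = min(node_vcpu / pod.vcpu, node_memory_gib / pod.memory_gib)
    return node_hour / (pods_per_node * utilization)


if __name__ == "__main__":
    pod = PodSize(vcpu=0.5, memory_gib=1.0)
    # Placeholder prices in USD/hour. These are NOT real AWS rates.
    fargate = fargate_hourly(pod, vcpu_hour=0.04, gib_hour=0.0045)
    for util in (1.0, 0.75, 0.5):
        ec2 = ec2_share_hourly(pod, node_hour=0.17, node_vcpu=4,
                               node_memory_gib=16, utilization=util)
        print(f"utilization {util:.0%}: Fargate/EC2 cost ratio = {fargate / ec2:.2f}")
```

With these placeholder prices, a perfectly packed node beats Fargate, but a half-empty node loses to it. That's why quoted Fargate premiums vary so much. The sketch also ignores that Fargate rounds each pod up to the nearest supported CPU/memory combination, which pushes the real Fargate number higher.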

Pro tip: Start with EC2 unless you really hate managing servers. Fargate sounds great until you realize every new pod, whether from a rollout or a scale-out, spends 30+ seconds waiting for AWS to find it a server.

Why Use EKS Instead of DIY Kubernetes

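It's Actually Kubernetes: EKS is a CNCF-certified conformant Kubernetes distribution, so your kubectl commands work and you're not learning another AWS-specific API. Your Helm charts won't randomly break because AWS decided to "improve" the Kubernetes API. They'll only break when a version upgrade brings in an upstream API removal, the same as on any other cluster.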

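AWS Integration That Actually Helps: The IAM integration is solid once you figure out how IAM identities map to Kubernetes RBAC (plan 2-3 hours for this). EBS volumes work through the EBS CSI driver add-on. ALBs can route straight to your services through the AWS Load Balancer Controller instead of a hand-rolled ingress setup. Pods get real VPC IP addresses from the VPC CNI, so networking mostly makes sense if you already understand AWS networking.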
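The RBAC mapping that eats those hours boils down to two steps. First, the caller's IAM role is translated into a Kubernetes username and groups. Then ordinary RBAC bindings on those groups decide what the caller can do. Below is a simplified model of an `aws-auth`-style `mapRoles` lookup. It assumes callers arrive as STS assumed-role ARNs, and the table, account ID, and role names are hypothetical. Newer clusters can use EKS access entries instead of the ConfigMap for the same job:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class K8sIdentity:
    username: str
    groups: tuple


# Hypothetical mapRoles-style table: IAM role ARN -> (username template, groups).
# In aws-auth, {{SessionName}} is replaced with the caller's role session name.
MAP_ROLES = {
    "arn:aws:iam::111122223333:role/platform-admins": (
        "admin:{{SessionName}}", ("system:masters",)),
    "arn:aws:iam::111122223333:role/dev-readonly": (
        "dev:{{SessionName}}", ("dev-viewers",)),
}


def role_arn_from_assumed(caller_arn: str) -> tuple:
    """arn:aws:sts::ACCT:assumed-role/ROLE/SESSION -> (IAM role ARN, SESSION)."""
    parts = caller_arn.split(":", 5)
    if len(parts) != 6 or parts[2] != "sts":
        raise ValueError(f"not an STS ARN: {caller_arn}")
    resource = parts[5].split("/")
    if len(resource) != 3 or resource[0] != "assumed-role":
        raise ValueError(f"not an assumed-role ARN: {caller_arn}")
    partition, account = parts[1], parts[4]
    _, role, session = resource
    return f"arn:{partition}:iam::{account}:role/{role}", session


def map_identity(caller_arn: str) -> Optional[K8sIdentity]:
    """Translate an AWS caller into the Kubernetes identity that RBAC will see."""
    role_arn, session = role_arn_from_assumed(caller_arn)
    entry = MAP_ROLES.get(role_arn)
    if entry is None:
        # Valid AWS identity, but unknown to the cluster: kubectl gets Unauthorized.
        return None
    template, groups = entry
    return K8sIdentity(template.replace("{{SessionName}}", session), groups)


if __name__ == "__main__":
    print(map_identity("arn:aws:sts::111122223333:assumed-role/dev-readonly/alice"))
```

An unmapped role is the classic failure: the caller is a perfectly valid AWS identity, yet kubectl still reports `Unauthorized`. Groups like `dev-viewers` also do nothing until a RoleBinding or ClusterRoleBinding references them.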

Security You Don't Have to Think About: The control plane gets patched automatically, etcd is encrypted at rest, the API server only speaks TLS, and you can integrate AWS security theater like GuardDuty if your compliance team demands it. Pod Security Standards work out of the box. Network policies work once you enable enforcement in the VPC CNI or install an engine like Calico. The control plane stops being your security team's problem, but your nodes, workloads, and IAM setup are still yours to harden.
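Both Pod Security Standards and network policies are opted into per namespace. The sketch below emits a kubectl-appliable `List` as JSON, which `kubectl apply -f -` accepts. The list contains a namespace that enforces the `restricted` profile and a default-deny NetworkPolicy. The namespace name and the `locked_down_namespace` helper are hypothetical, and the policy only has teeth if your CNI actually enforces network policies:

```python
import json


def locked_down_namespace(name: str) -> dict:
    """Build a v1 List containing a namespace that enforces the 'restricted'
    Pod Security Standard, plus a default-deny NetworkPolicy for it."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {
                # Pod Security Admission rejects pods that violate 'restricted'.
                "pod-security.kubernetes.io/enforce": "restricted",
                # Also surface warnings at kubectl apply time.
                "pod-security.kubernetes.io/warn": "restricted",
            },
        },
    }
    deny_all = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny", "namespace": name},
        "spec": {
            # An empty podSelector selects every pod in the namespace.
            "podSelector": {},
            # Listing both types with no rules blocks all ingress and egress.
            "policyTypes": ["Ingress", "Egress"],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [namespace, deny_all]}


if __name__ == "__main__":
    # Pipe the output into: kubectl apply -f -
    print(json.dumps(locked_down_namespace("payments"), indent=2))
```

Denying all egress also blocks DNS lookups. In practice, you'd follow this with a policy that allows traffic to CoreDNS before deploying anything into the namespace.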

EKS Auto Mode: AWS Picks Your Servers

Launched in late 2024, EKS Auto Mode is AWS's attempt to manage even more of your infrastructure. AWS picks your EC2 instance types, configures your networking, and manages storage. It's basically Fargate, but with EC2 instances you can see in the console and can't touch.

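It sounds amazing until you need something Auto Mode doesn't do: a custom AMI, low-level network tuning, or custom drivers. You can steer instance selection with NodePool requirements, but the nodes run AWS's OS image on AWS's terms. Auto Mode is great for "just run my app" workloads but terrible when you need control. Savings figures like 20-40% on compute depend on how badly packed your nodes were to begin with. The savings come from bin-packing and consolidation, not cheaper instances, and Auto Mode adds a management fee on top of the EC2 price. Either way, you're trading flexibility for AWS magic.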
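Auto Mode bills a per-instance management fee on top of the normal EC2 price, so any savings have to come from running fewer, better-packed nodes. Here is a back-of-the-envelope sketch of that trade-off. The fee is a hypothetical input (check the current pricing page for the real rate by instance type). The node counts and hourly price are placeholders, and both fleets are assumed to use the same instance price:

```python
HOURS_PER_MONTH = 730


def monthly_compute_cost(node_count: int, node_hourly: float,
                         fee_fraction: float = 0.0) -> float:
    """Monthly EC2 spend for a fleet, plus an optional management fee
    expressed as a fraction of the instance price (hypothetical input)."""
    return node_count * node_hourly * (1 + fee_fraction) * HOURS_PER_MONTH


def net_savings_fraction(before_nodes: int, after_nodes: int,
                         node_hourly: float, fee_fraction: float) -> float:
    """Savings from consolidating `before_nodes` self-managed nodes into
    `after_nodes` fee-bearing nodes at the same price (negative = costs more)."""
    before = monthly_compute_cost(before_nodes, node_hourly)
    after = monthly_compute_cost(after_nodes, node_hourly, fee_fraction)
    return 1 - after / before


def break_even_fee(before_nodes: int, after_nodes: int) -> float:
    """Fee fraction at which the fee fully eats the consolidation savings."""
    return before_nodes / after_nodes - 1


if __name__ == "__main__":
    price = 0.20  # placeholder USD/hour per node, not a real AWS price
    for fee in (0.0, 0.12):
        print(f"fee {fee:.0%}: net savings {net_savings_fraction(20, 14, price, fee):.1%}")
    print(f"break-even fee: {break_even_fee(20, 14):.1%}")
```

Suppose a 20-node fleet consolidates to 14 nodes. With a hypothetical 12% fee, the 30% node reduction becomes roughly 21.6% net savings, and the fee would have to exceed about 43% before consolidation stopped paying off. If your nodes are already well packed, there's little to consolidate, and the fee is pure overhead.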

When to use it: Microservices that don't care about the underlying infrastructure

When to avoid it: Anything that needs custom AMIs, custom drivers or kernel tweaks, or non-standard networking setups