I've been running Kubernetes clusters for six years, and I can tell you that cost monitoring isn't optional anymore. It's the difference between explaining a reasonable infrastructure budget and having a very uncomfortable conversation with finance about why your "small microservices experiment" costs more than a Tesla.

The $50K Surprise Bill Problem

Here's what happens without cost monitoring: someone spins up a "quick test" with GPU nodes, forgets about it over the weekend, and Monday morning you're staring at a surprise AWS bill that can hit five figures. I've seen this exact scenario destroy entire Q4 budgets. Flexera's 2022 State of the Cloud survey had respondents estimating that 32% of their cloud spending is wasted, and Kubernetes makes visibility even worse.

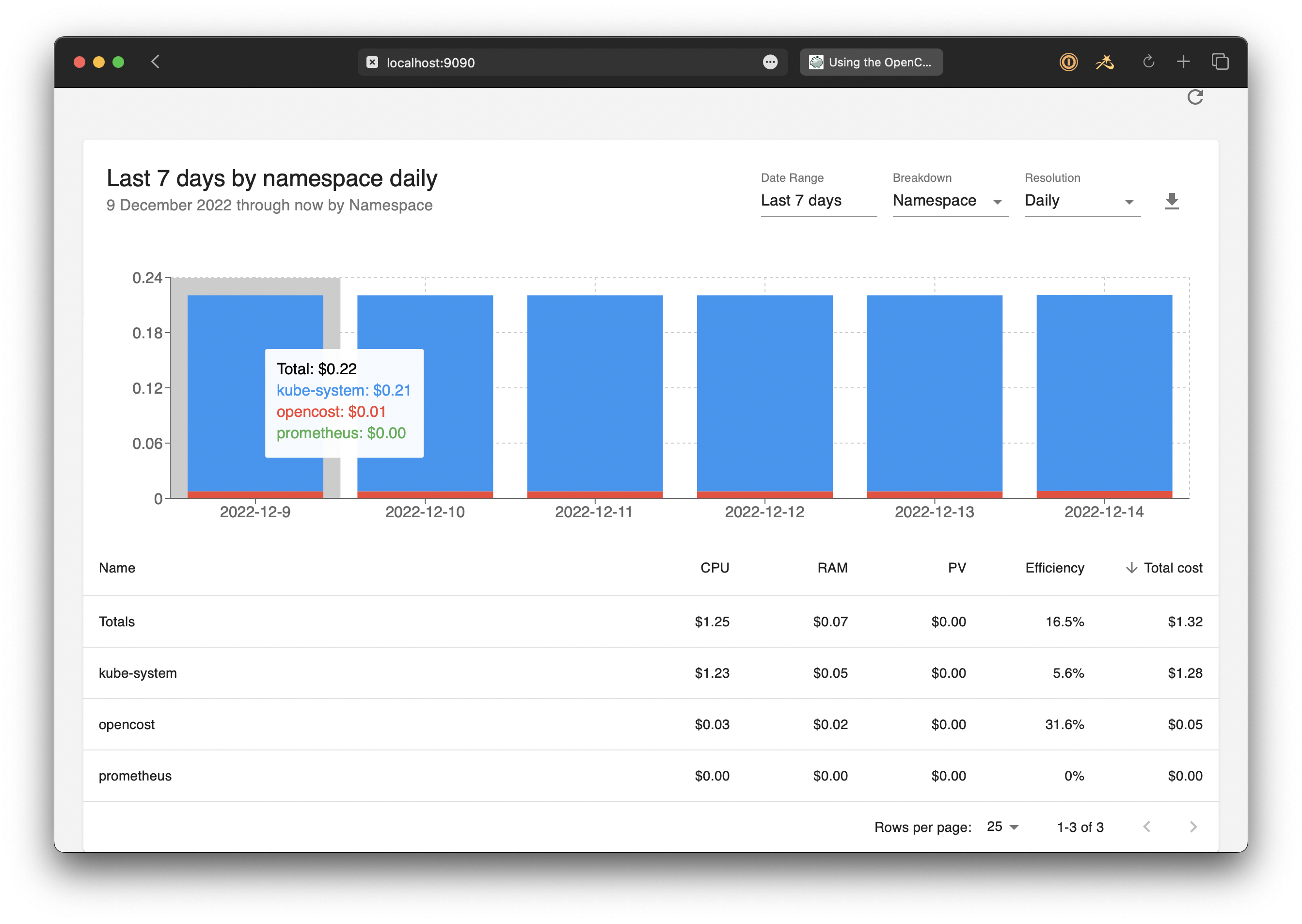

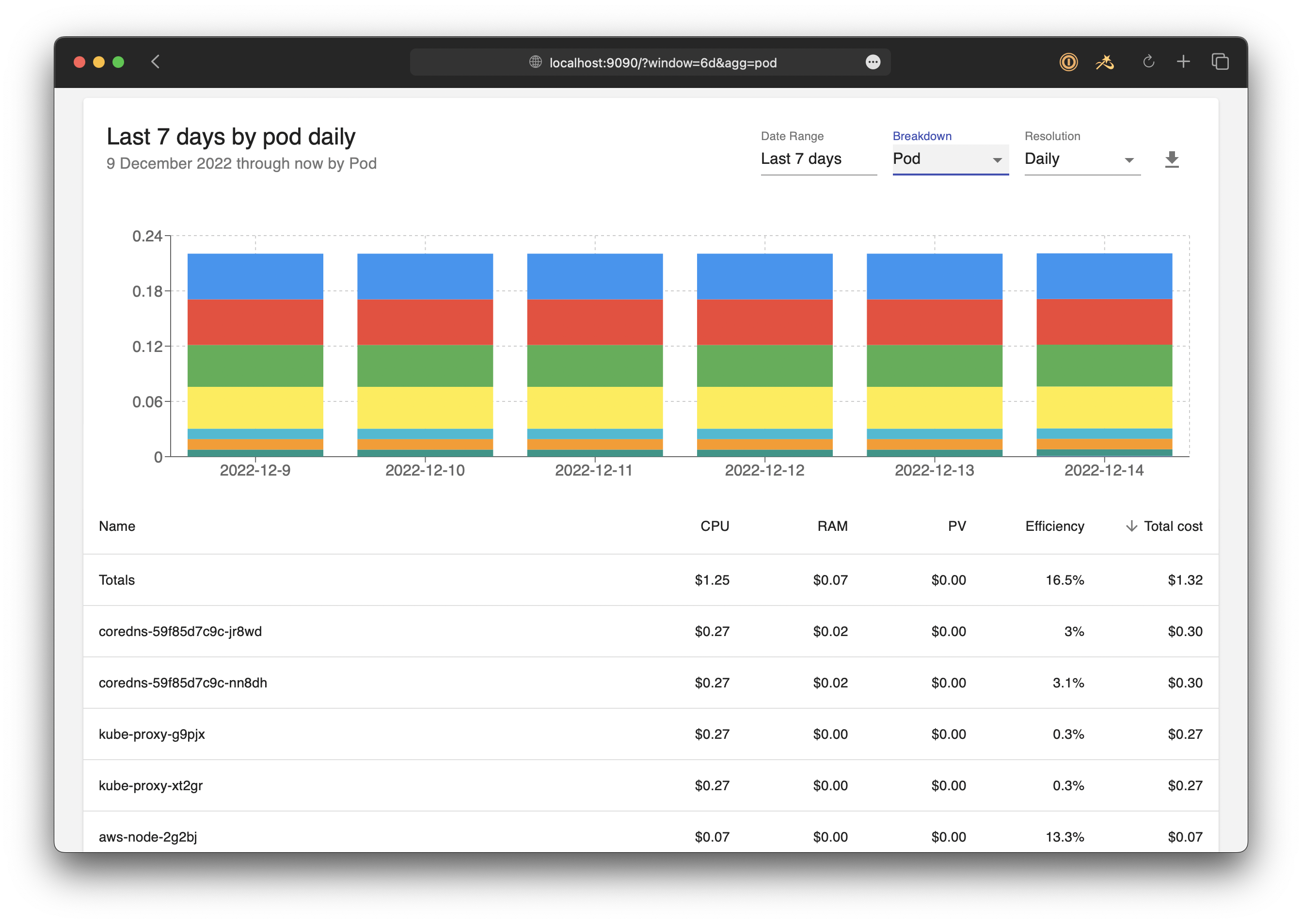

Kubernetes makes this worse because traditional cloud billing shows you're paying for "EC2 instances" but doesn't tell you that your ChatGPT API wrapper is using 80% of your cluster resources. AWS Cost Explorer is useless for K8s workloads - it'll show you node costs but not which pods are burning money. The same problem exists with Azure Cost Management and Google Cloud Billing - they're great at showing you VM costs but completely blind to application-level resource consumption.

OpenCost Actually Works (Most of the Time)

OpenCost started as the open source guts of Kubecost before becoming its own CNCF Sandbox project in June 2022 and advancing to CNCF Incubating status in October 2024. The core difference: it tracks cost allocation down to individual containers using actual Kubernetes resource requests and measured usage.

The magic happens because OpenCost integrates with cloud billing APIs to get real pricing data - reserved instances, spot pricing, enterprise discounts, all of it. No more bullshit static pricing models that are wrong by 40%. This is crucial when AWS spot instances can be 90% cheaper than on-demand, or when Azure Reserved Instances provide up to 72% savings that traditional tools completely miss.
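To see how far a static list-price model can drift from the real bill, here's a rough Python sketch. The node rates and fleet mix are made-up numbers, and `Node` and `fleet_costs` are hypothetical names for illustration, not anything from OpenCost itself.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    on_demand_rate: float  # list price in $/hour (made-up numbers)
    discount: float = 0.0  # fraction off list price, e.g. 0.90 for spot


def fleet_costs(nodes):
    """Return (list_price_total, discounted_total) in $/hour."""
    list_total = sum(n.on_demand_rate for n in nodes)
    actual_total = sum(n.on_demand_rate * (1.0 - n.discount) for n in nodes)
    return list_total, actual_total


# Hypothetical fleet: three on-demand nodes, two spot nodes at 90% off.
nodes = [
    Node("on-demand-1", 1.00),
    Node("on-demand-2", 1.00),
    Node("on-demand-3", 1.00),
    Node("spot-1", 1.00, discount=0.90),
    Node("spot-2", 1.00, discount=0.90),
]

list_total, actual_total = fleet_costs(nodes)
# How much a list-price-only model overstates the bill, as a fraction of list price.
overstated_by = (list_total - actual_total) / list_total

print(f"list price: ${list_total:.2f}/h, actual: ${actual_total:.2f}/h")
print(f"static pricing overstates this fleet by {overstated_by:.0%}")
```

In this toy fleet, two spot nodes out of five are enough to put a list-price estimate off by roughly a third, which is the kind of gap real pricing data closes.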

What Actually Gets Tracked

- Container-level costs: Finally know which pod is eating your budget

- GPU costs: Critical if you're doing any ML work (spoiler: an NVIDIA A100 can cost around $3.06/hour depending on provider and region, and GPUs are expensive as fuck)

- Persistent volume costs: That 10TB database you forgot about shows up here, including AWS EBS costs and persistent disk fees

- Network costs: Load balancer and data transfer fees that AWS loves to hide, plus NAT gateway costs that can surprise you

- Out-of-cluster resources: RDS, S3, anything your apps actually use via custom cost sources
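As a rough sketch of how those categories roll up per workload, the Python below totals cost fields from allocation-style records. The field names (`cpuCost`, `ramCost`, `gpuCost`, `pvCost`, `networkCost`, `loadBalancerCost`, `externalCost`) mirror what I'd expect from OpenCost's allocation API, but treat them as assumptions and check them against the response from your own version. The sample records and dollar amounts are invented.

```python
# Cost fields assumed to be present on each allocation record (see note above).
COST_FIELDS = (
    "cpuCost",
    "ramCost",
    "gpuCost",
    "pvCost",
    "networkCost",
    "loadBalancerCost",
    "externalCost",
)


def total_cost(record):
    """Sum every known cost field on one record; missing fields count as 0."""
    return sum(float(record.get(field, 0.0)) for field in COST_FIELDS)


def cost_by_category(records):
    """Total each cost category across all records."""
    return {
        field: sum(float(r.get(field, 0.0)) for r in records)
        for field in COST_FIELDS
    }


def top_spenders(records, n=3):
    """Return the n most expensive workloads as (name, total) pairs."""
    ranked = sorted(records, key=total_cost, reverse=True)
    return [(r["name"], total_cost(r)) for r in ranked[:n]]


# Invented sample data, shaped like one day's allocations per workload.
sample = [
    {"name": "ml-training", "cpuCost": 4.0, "ramCost": 2.0, "gpuCost": 24.0},
    {"name": "postgres", "cpuCost": 1.5, "ramCost": 1.0, "pvCost": 8.0},
    {"name": "api-gateway", "cpuCost": 0.5, "ramCost": 0.25,
     "networkCost": 1.0, "loadBalancerCost": 0.75},
]

for name, cost in top_spenders(sample):
    print(f"{name}: ${cost:.2f}")
print(cost_by_category(sample))
```

Even with fake numbers the pattern is familiar: the GPU job and the forgotten volume dominate, and neither would show up as anything but "EC2" and "EBS" on a normal cloud bill.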

The allocation model is pretty solid - it proportionally distributes node costs based on resource requests (or actual usage, when a container uses more than it requested), which means if you're not setting requests/limits properly, your cost data will be garbage. But you should be setting those anyway if you're not a complete amateur. This follows the Kubernetes resource model and aligns with FinOps best practices for cloud cost allocation.
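Here's a minimal Python sketch of request-based allocation on a single node. It's a simplification, not OpenCost's actual implementation: it looks only at requests (ignoring usage), and the 50/50 split of node price between CPU and RAM (`cpu_share`) is an assumption I picked for illustration. The function name, pod names, and prices are all hypothetical.

```python
def allocate_node_cost(node_hourly_cost, node_cores, node_ram_gib, pods,
                       cpu_share=0.5):
    """Split one node's hourly cost across pods by their resource requests.

    cpu_share is an assumed fraction of the node price attributed to CPU;
    the remainder is attributed to RAM. Whatever no pod requested is
    reported under the "__idle__" key.
    """
    core_rate = node_hourly_cost * cpu_share / node_cores
    gib_rate = node_hourly_cost * (1.0 - cpu_share) / node_ram_gib

    costs = {}
    for name, requests in pods.items():
        cpu = requests.get("cpu", 0.0)
        ram = requests.get("ram_gib", 0.0)
        costs[name] = cpu * core_rate + ram * gib_rate

    # Unrequested capacity still costs money; surface it instead of hiding it.
    costs["__idle__"] = node_hourly_cost - sum(costs.values())
    return costs


# Hypothetical node: $1.00/hour, 4 cores, 16 GiB RAM.
pods = {
    "checkout": {"cpu": 2, "ram_gib": 4},
    "search": {"cpu": 1, "ram_gib": 8},
    "no-requests": {},  # pod deployed without resource requests
}

for name, cost in allocate_node_cost(1.00, 4, 16, pods).items():
    print(f"{name}: ${cost:.3f}/h")
```

The `no-requests` pod comes out looking free, and whatever it really consumes gets lumped into idle. That's the "garbage data" failure mode in miniature.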

Production Reality Check

Version 1.117.3 is the latest as of September 2024. It's been stable in my production clusters for over a year. The main gotcha is Prometheus integration - if your Prometheus is fucked, OpenCost will be fucked too.

OpenCost needs RBAC read access to basically everything in the cluster, which makes security teams nervous. But that's the price of actually knowing where your money goes. Check the security considerations and OpenSSF scorecard if your compliance team needs convincing.

Works with Kubernetes 1.21+ but honestly, if you're running anything older than 1.26 in production, cost monitoring is the least of your problems. The latest versions support Kubernetes 1.30 and integrate well with modern CNI plugins for accurate network cost tracking.
