I've deployed Istio to production way too many times, and I'm here to save you from the pain I went through. The official docs make it sound simple - it's not. Here's what actually happens and how to survive it.

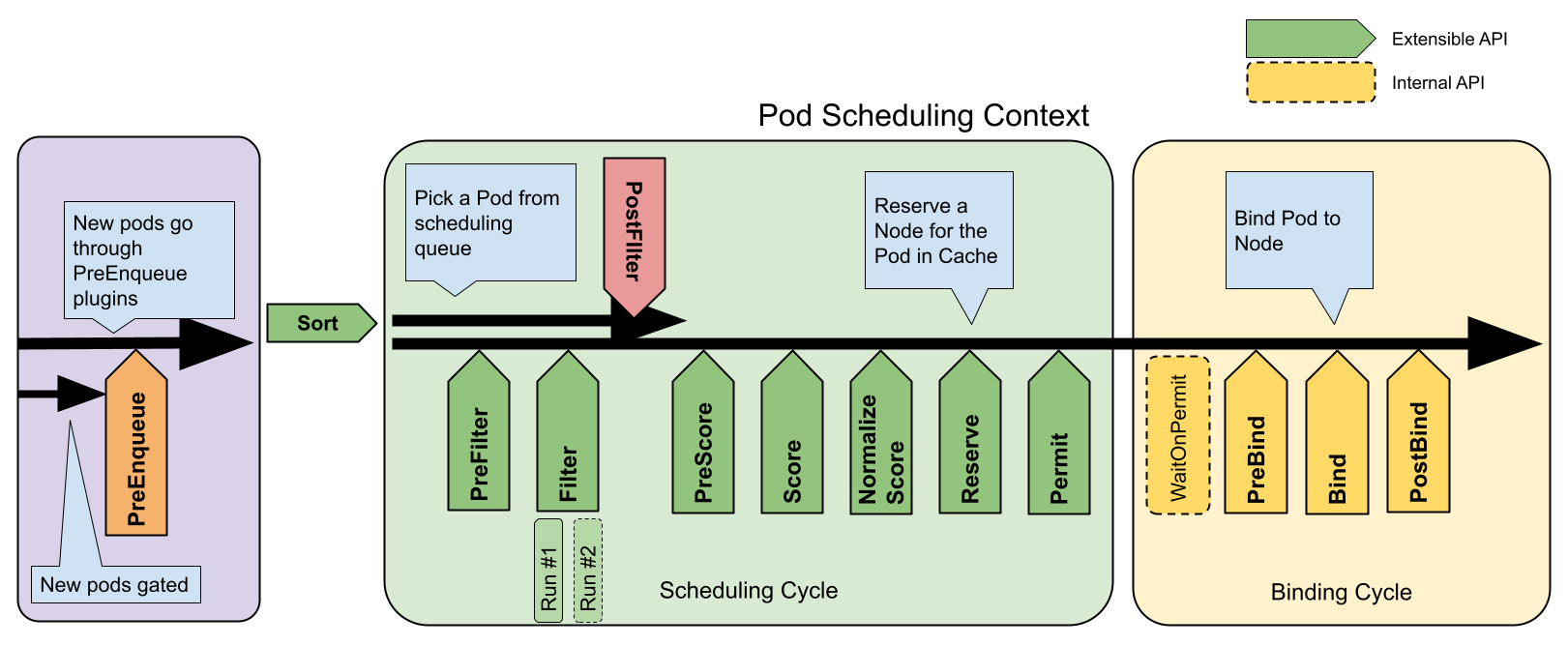

Before you get excited about that clean Istio architecture diagram, understand that each of those components will try to kill your cluster in unique and creative ways.

Memory Requirements Are Complete Lies

The Control Plane Will Eat Your Resources:

The docs say 2GB for small clusters? Bullshit. I learned this the hard way during our second production outage when istiod kept OOMing. Here's what actually happens:

- "Small" clusters (10-50 services): Start with 4GB minimum or you'll be restarting istiod every few hours. I shit you not. Check the Istio resource requirements and control plane sizing guide.

- Medium clusters (50-200 services): 8GB baseline, plan for 12GB when configuration gets complex. Read the production deployment best practices for scaling guidance.

- Large clusters (200+ services): 16GB+ and pray your networking team doesn't change routes during peak hours. The large scale deployment docs have optimization tips.
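If you want those tiers as something you can run against a cluster, here's a minimal sketch. It assumes the total Service count from `kubectl get svc` is a fair stand-in for "services". That count also includes kube-system and other infrastructure Services, so treat the result as rough. `istiod_memory_gib` is a made-up helper name. The 4/8/16 GiB thresholds are my numbers from above, not an official Istio formula.

```shell
# Maps a service count to the istiod memory baseline suggested above.
# These tiers are this article's rule of thumb, not official Istio sizing.
istiod_memory_gib() {
  local services
  # Strip whitespace so `wc -l` output like "      42" still parses
  services=$(printf '%s' "$1" | tr -d '[:space:]')
  case "$services" in
    ''|*[!0-9]*)
      echo "usage: istiod_memory_gib <service-count>" >&2
      return 2
      ;;
  esac
  if [ "$services" -le 50 ]; then
    echo 4    # "small": start with 4 GiB minimum
  elif [ "$services" -le 200 ]; then
    echo 8    # "medium": 8 GiB baseline, plan for 12 as config grows
  else
    echo 16   # "large": 16 GiB and up
  fi
}

# Usage (needs a cluster):
#   istiod_memory_gib "$(kubectl get svc --all-namespaces --no-headers | wc -l)"
```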

Real Sidecar Overhead (From My Pain):

Each Envoy sidecar's default memory request is 128Mi, but that's fantasy. Real numbers:

- Basic workloads: 200MB minimum (learned this during Black Friday when everything fell over)

- With distributed tracing: 400-600MB easily - killed our staging cluster twice

- Heavy traffic patterns: Up to 1GB per sidecar when shit hits the fan

The official performance docs lowball it. Real production with actual traffic? Double everything. Check the CNCF performance benchmarks for realistic numbers, and follow Kubernetes resource best practices for proper resource allocation. The Istio deployment models documentation covers different scaling approaches, while Envoy performance tuning provides sidecar optimization details. Check out the Kubernetes cluster autoscaler for dynamic resource scaling.
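To turn those per-sidecar numbers into a per-node figure, count injected pods per node and multiply. This is a rough estimator, not a measurement. It assumes injected pods carry the `security.istio.io/tlsMode=istio` label that the standard injector adds, and `sidecar_mib_per_node` is a hypothetical helper name. Feed it 200 for basic workloads, or something in the 400-600 range if you run tracing.

```shell
# Estimates extra memory (MiB) that sidecars add on each node.
# stdin: one node name per injected pod; blank lines (unscheduled pods) are skipped
# $1:    estimated MiB per sidecar
sidecar_mib_per_node() {
  awk -v per="$1" '
    NF  { pods[$1]++ }                               # count pods per node
    END { for (n in pods) print n, pods[n] * per }   # pods x per-sidecar estimate
  ' | sort
}

# Usage (needs a cluster):
#   kubectl get pods --all-namespaces -l security.istio.io/tlsMode=istio \
#     -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}' | sidecar_mib_per_node 200
```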

The Networking Nightmare

Ports That Will Ruin Your Day:

```shell
# These are the ports that'll fuck you over if they're blocked
kubectl get svc -n istio-system istiod   # expect 15010, 15012, 15014, and 443 (forwarded to 15017)

# Check the webhook configurations - these fail silently and drive you insane
kubectl get validatingwebhookconfiguration | grep istio
kubectl get mutatingwebhookconfiguration | grep istio
```
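Before blaming the firewall, make sure the istiod Service actually exposes what you expect. This sketch compares the Service's port list against 15010, 15012, 15014 and 443. The Service publishes the webhook on 443 and forwards it to istiod's 15017. `missing_istiod_ports` is a hypothetical name. It only checks the Service definition and can't see a firewall sitting between the API server and istiod.

```shell
# Prints each expected istiod Service port that is missing; non-zero exit if any are.
# stdin: space-separated port numbers from the istiod Service
missing_istiod_ports() {
  local ports missing p
  ports=" $(tr -s '[:space:]' ' ') "   # pad so every port is surrounded by spaces
  missing=0
  for p in 15010 15012 15014 443; do
    case "$ports" in
      *" $p "*) ;;                     # present
      *) echo "$p"; missing=1 ;;       # missing: report it
    esac
  done
  return "$missing"
}

# Usage (needs a cluster):
#   kubectl get svc istiod -n istio-system -o jsonpath='{.spec.ports[*].port}' | missing_istiod_ports
```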

Your firewall/security team will block these without telling you:

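- Port 15010 (plaintext XDS) - Only meant for trusted networks. Anything still pointed at it can't get configuration when it's blocked, and everything looks fine until it isn't

- Port 15012 (XDS and CA over TLS) - This is what sidecars use by default. Block it and proxies can't get configuration or certificates, so mTLS breaks randomly

- Port 15014 (control plane monitoring) - Metrics scraping fails, and you lose visibility into what's broken

- Port 15017 (webhooks) - The API server calls istiod on 443, which the Service forwards to 15017. Block it and sidecar injection silently stops working, so new pods don't get proxies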

I spent 6 hours debugging why new deployments weren't getting sidecars. Webhook port was firewalled. Check the Istio ports documentation and make sure your Kubernetes network policies don't block these.
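The symptom I wasted those 6 hours on was pods running without an `istio-proxy` container, and you can check for that directly. This is a minimal sketch that assumes the standard sidecar container name `istio-proxy`. When native sidecars are enabled, the proxy shows up under `initContainers` instead, so the sketch checks both lists. `has_istio_proxy` and the `my-app` namespace in the usage comment are hypothetical.

```shell
# Exit 0 if the container names on stdin include istio-proxy, 1 otherwise.
# stdin: whitespace-separated container names for one pod
has_istio_proxy() {
  tr -s '[:space:]' '\n' | grep -qx 'istio-proxy'   # whole-name match, so istio-init doesn't count
}

# Usage (needs a cluster): list pods in a namespace that came up without a sidecar
#   for pod in $(kubectl get pods -n my-app -o jsonpath='{.items[*].metadata.name}'); do
#     kubectl get pod "$pod" -n my-app \
#       -o jsonpath='{.spec.containers[*].name} {.spec.initContainers[*].name}' \
#       | has_istio_proxy || echo "no sidecar: $pod"
#   done
```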

Kubernetes Version Compatibility (Ignore at Your Peril):

The compatibility matrix isn't a suggestion - it's a hard requirement. Istio 1.20 on Kubernetes 1.29? Works fine. Istio 1.20 on Kubernetes 1.24? You'll get weird CRD errors that take days to debug.

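```shell
# Check your Kubernetes version first, save yourself pain later
# (kubectl 1.28 removed --short; the default output is already the short form)
kubectl version

# Once the Istio CRDs are installed, make sure these API groups exist or you're fucked
kubectl api-resources | grep -E "(networking.istio.io|security.istio.io|install.istio.io)"
```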
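If you'd rather have a script fail than eyeball the matrix, compare the server's minor version against the supported range. `k8s_minor_in_range` is a hypothetical helper. The `25 29` bounds in the usage comment are placeholders, so read the real range for your Istio release off the compatibility matrix.

```shell
# $1: server gitVersion, e.g. v1.29.2 or v1.29.2-gke.1060000
# $2, $3: lowest and highest supported Kubernetes minor version
# exit 0 = in range, 1 = out of range, 2 = could not parse
k8s_minor_in_range() {
  local version major rest minor
  version="${1#v}"                 # v1.29.2-gke.100 -> 1.29.2-gke.100
  major="${version%%.*}"           # -> 1
  rest="${version#*.}"             # -> 29.2-gke.100
  minor="${rest%%.*}"              # -> 29
  minor="${minor%%[!0-9]*}"        # drop any non-numeric suffix
  case "$minor" in ''|*[!0-9]*) return 2 ;; esac
  [ "$major" = "1" ] || return 2
  [ "$minor" -ge "$2" ] && [ "$minor" -le "$3" ]
}

# Usage (needs a cluster; 25 and 29 are placeholders):
#   v=$(kubectl get --raw /version | sed -n 's/.*"gitVersion": *"\([^"]*\)".*/\1/p')
#   k8s_minor_in_range "$v" 25 29 || echo "not in the supported range for this Istio release"
```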

The Shit You Need to Check First

Node-level Requirements (Skip These and Cry Later):

```shell
# Run on every node: these kernel modules better exist or traffic interception fails spectacularly
lsmod | grep -E "(ip_tables|iptable_nat|iptable_mangle)"

# Existing network policies will fuck up Istio - I guarantee it
kubectl get networkpolicies --all-namespaces

# Container runtime matters - docker vs containerd vs cri-o have different gotchas
# (see the CONTAINER-RUNTIME column)
kubectl get nodes -o wide
```
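One catch with `lsmod` is that it only lists loadable modules that are currently loaded. A module compiled into the kernel won't show up, and you'll chase a problem that doesn't exist. This sketch also checks the kernel's `modules.builtin` list. `missing_kernel_modules` is a made-up name. A module missing from both lists may still be loaded on demand the first time iptables needs it, so treat the output as something to investigate rather than a guaranteed failure. Extend the argument list if you need more, e.g. the `ip6table_*` variants for IPv6.

```shell
# Prints required modules that are neither loaded nor built into the kernel.
# $1: loaded-module list (normally /proc/modules, which lsmod reads)
# $2: built-in list (normally /lib/modules/$(uname -r)/modules.builtin)
# remaining args: module names to check
missing_kernel_modules() {
  local loaded builtin mod missing
  loaded="$1"; builtin="$2"; shift 2
  missing=0
  for mod in "$@"; do
    grep -q "^${mod} " "$loaded" 2>/dev/null && continue      # currently loaded
    grep -q "/${mod}\.ko" "$builtin" 2>/dev/null && continue  # compiled into the kernel
    echo "$mod"
    missing=1
  done
  return "$missing"
}

# Usage (on a node):
#   missing_kernel_modules /proc/modules "/lib/modules/$(uname -r)/modules.builtin" \
#     ip_tables iptable_nat iptable_mangle
```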

RBAC and Service Account Setup:

```shell
# Create a dedicated namespace (safe to re-run) and keep sidecars out of it
kubectl create namespace istio-system --dry-run=client -o yaml | kubectl apply -f -
kubectl label namespace istio-system istio-injection=disabled --overwrite

# Verify cluster-admin permissions (required for installation)
kubectl auth can-i "*" "*" --all-namespaces
```

Certificate Authority Planning:

Production Istio deployments require careful certificate management planning. Decide early:

- Self-signed CA: Simpler setup, requires root CA distribution. Read the root CA certificate management guide.

- External CA integration: More complex, integrates with existing PKI. Check external CA integration and cert-manager integration.

- Certificate rotation strategy: Automated vs manual certificate lifecycle. Review certificate lifecycle management and mTLS configuration.
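One way to tie Istio to an existing PKI is to plug in your own CA certificates. In that case istiod reads them from a secret named `cacerts` in `istio-system`, built from four files: `ca-cert.pem`, `ca-key.pem`, `root-cert.pem` and `cert-chain.pem`. The sketch below refuses to create the secret if any of those files is missing or empty. The function names and the `./certs/cluster1` path are hypothetical, and it assumes you run it before installing Istio. It doesn't validate the certificates themselves.

```shell
# Prints any of the four plug-in CA files that are missing or empty in $1.
cacerts_missing_files() {
  local dir f missing
  dir="$1"; missing=0
  for f in ca-cert.pem ca-key.pem root-cert.pem cert-chain.pem; do
    if [ ! -s "$dir/$f" ]; then
      echo "$f"
      missing=1
    fi
  done
  return "$missing"
}

# Creates istio-system/cacerts only when all four files are present.
create_cacerts_secret() {
  local dir
  dir="$1"
  if ! cacerts_missing_files "$dir" >&2; then
    echo "not creating cacerts: files missing from $dir" >&2
    return 1
  fi
  kubectl create secret generic cacerts -n istio-system \
    --from-file="$dir/ca-cert.pem" \
    --from-file="$dir/ca-key.pem" \
    --from-file="$dir/root-cert.pem" \
    --from-file="$dir/cert-chain.pem"
}

# Usage (needs a cluster):
#   create_cacerts_secret ./certs/cluster1
```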

Resource Limits and Quality of Service

Control Plane Resource Configuration:

```yaml
# Production istiod resource limits
# (in an IstioOperator this block goes under spec.components.pilot.k8s)
resources:
  requests:
    cpu: 500m
    memory: 2Gi
  limits:
    cpu: 1000m
    memory: 4Gi
```
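Those limits are only right if istiod stays under them. A quick way to find out is the last termination reason of the istiod containers. This is a minimal sketch. `count_oomkilled` is hypothetical, and it assumes the istiod pods carry the `app=istiod` label that the standard install sets. `lastState` only records the most recent termination per container, so also check `restartCount` if you suspect repeated OOMs.

```shell
# Counts OOMKilled entries in a whitespace-separated list of termination reasons.
count_oomkilled() {
  # grep -c prints 0 but exits 1 when nothing matches; || true keeps the exit status clean
  tr -s '[:space:]' '\n' | grep -c '^OOMKilled$' || true
}

# Usage (needs a cluster):
#   kubectl get pods -n istio-system -l app=istiod \
#     -o jsonpath='{.items[*].status.containerStatuses[*].lastState.terminated.reason}' \
#     | count_oomkilled
```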

Data Plane Sidecar Defaults:

```yaml
# Configure sidecar resource defaults globally
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: production-control-plane
spec:
  values:
    global:
      proxy:
        resources:
          requests:
            cpu: 100m
            memory: 128Mi
          limits:
            cpu: 200m
            memory: 256Mi
```
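A 256Mi global limit sits below the 400-600MB that the tracing-heavy sidecars earlier in this article were using. Rather than raising the default for everyone, you can override resources for specific workloads with the `sidecar.istio.io/proxyCPU`, `sidecar.istio.io/proxyMemory`, `sidecar.istio.io/proxyCPULimit` and `sidecar.istio.io/proxyMemoryLimit` pod annotations. This sketch builds a merge patch that sets them on a Deployment's pod template. `sidecar_resources_patch` and the `checkout`/`shop` names in the usage comment are hypothetical, and the values are examples, not recommendations. Because the pod template changes, the Deployment rolls out and the new pods get injected with the new resources.

```shell
# Prints a JSON merge patch setting per-workload sidecar resource annotations.
# $1 cpu request, $2 memory request, $3 cpu limit, $4 memory limit
sidecar_resources_patch() {
  if [ "$#" -ne 4 ]; then
    echo "usage: sidecar_resources_patch <cpu> <memory> <cpu-limit> <memory-limit>" >&2
    return 2
  fi
  printf '{"spec":{"template":{"metadata":{"annotations":{"sidecar.istio.io/proxyCPU":"%s","sidecar.istio.io/proxyMemory":"%s","sidecar.istio.io/proxyCPULimit":"%s","sidecar.istio.io/proxyMemoryLimit":"%s"}}}}}\n' \
    "$1" "$2" "$3" "$4"
}

# Usage (needs a cluster):
#   kubectl patch deployment checkout -n shop --type merge \
#     -p "$(sidecar_resources_patch 200m 512Mi 500m 1Gi)"
```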

Production-specific Pre-flight Checks

Cluster Health Validation:

```shell
# Node resource availability
kubectl describe nodes | grep -A5 "Allocated resources"

# Storage class configuration for persistent workloads
kubectl get storageclass

# DNS configuration verification
kubectl run test-pod --image=busybox:1.35 --rm -it --restart=Never -- nslookup kubernetes.default
```
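`grep -A5` gives you the raw numbers but not which node they belong to. Every injected pod adds its sidecar's request on top of the app's, so nodes that are already mostly reserved are where injection tips things over. This sketch parses the full `kubectl describe nodes` output and prints nodes whose memory requests exceed a threshold. `nodes_over_memory_requests` and the 70% threshold are assumptions. It also relies on the current human-readable describe layout, which isn't a stable API.

```shell
# Prints "<node> <pct>%" for nodes whose memory requests exceed $1 percent.
# stdin: output of `kubectl describe nodes`
nodes_over_memory_requests() {
  awk -v t="$1" '
    /^Name:/                { node = $2 }               # start of a node section
    /^Allocated resources:/ { in_alloc = 1; next }
    in_alloc && $1 == "memory" {
      pct = $3; gsub(/[()%]/, "", pct)                  # "(81%)" -> 81
      if (pct + 0 > t) print node, pct "%"
      in_alloc = 0
    }'
}

# Usage (needs a cluster):
#   kubectl describe nodes | nodes_over_memory_requests 70
```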

CNI Plugin Compatibility (Choose Your Pain):

Different CNI plugins will break in unique and wonderful ways:

- Calico: Works but needs specific Istio config changes. Expect weird routing issues during upgrades. Check the Tigera documentation for cloud-specific issues and Calico network policies for policy integration.

- Cilium: Has better integration but good luck finding anyone who's actually run it in prod with Istio. Read Cilium service mesh docs for configuration details and eBPF integration guide.

- Flannel: Just works. No fancy features, no weird edge cases. Boring and reliable. Follow the official Flannel guide for setup and Flannel networking concepts for backend options.

- Weave: Don't. Just don't. Performance is shit and debugging is hell. If you're stuck with it, read the Weave troubleshooting docs and Weave Net architecture.

```shell
# Figure out what CNI you're stuck with (recent Flannel and operator-based Calico
# installs run outside kube-system, so search every namespace)
kubectl get pods --all-namespaces | grep -E "(calico|cilium|flannel|weave)"

# These network policies will conflict with Istio policies - guaranteed confusion
kubectl get networkpolicies --all-namespaces -o wide
```
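The confusion usually lives in namespaces that both have NetworkPolicies and get sidecars. This sketch lists that overlap from two files of namespace names. `overlapping_namespaces` is hypothetical. The usage comment assumes injection is enabled with the `istio-injection=enabled` label; revision-based installs use `istio.io/rev` instead.

```shell
# Prints namespaces that appear in both files, once each.
# $1: namespaces that have NetworkPolicies
# $2: namespaces labeled for sidecar injection
overlapping_namespaces() {
  awk 'NR == FNR { np[$1] = 1; next }   # first file: remember policy namespaces
       NF && ($1 in np)                 # second file: print injected ones that also have policies
  ' "$1" "$2" | sort -u
}

# Usage (needs a cluster):
#   kubectl get networkpolicies --all-namespaces \
#     -o jsonpath='{range .items[*]}{.metadata.namespace}{"\n"}{end}' > np-namespaces.txt
#   kubectl get namespaces -l istio-injection=enabled \
#     -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}' > injected-namespaces.txt
#   overlapping_namespaces np-namespaces.txt injected-namespaces.txt
```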

This prep work takes 2-4 hours if you're lucky, but I've seen teams spend 2 weeks debugging certificate bullshit that could've been prevented. Don't skip the resource planning - memory pressure in production cascades into a complete shitstorm where everything fails at once and you can't figure out why.

Once you've done this prep work, you need to pick your installation method. The approach you choose determines how much control you have and how much pain you'll endure during upgrades.