PostgreSQL breaks in basically four ways, and figuring out which one you're dealing with saves you hours of random troubleshooting. After debugging the same stupid issues hundreds of times, here's what actually goes wrong and how to tell the difference.

Connection Refused - PostgreSQL's Favorite "Fuck You"

'Connection refused' is PostgreSQL's way of telling you absolutely nothing useful about what's broken. Could be the service is down, could be firewall, could be config - you get to guess!

The bullshit error messages you'll see:

- `psql: could not connect to server: Connection refused` - Service isn't running or can't be reached

- `FATAL: the database system is starting up` - Database is recovering, give it a minute

- `timeout expired` - Network is fucked or server is overloaded

- Random connection drops - Usually connection limits or network flakiness
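If you triage these a lot, the list above boils down to a lookup table. This is a minimal sketch that assumes you have the raw error text as a string. `LIKELY_CAUSES` and `likely_cause` are hypothetical names, and the matching is plain case-insensitive substring search, nothing smarter:

```python
# Hypothetical triage table built from the connection error messages above.
# Matching is a plain case-insensitive substring search.
LIKELY_CAUSES = [
    ("connection refused", "service isn't running or can't be reached"),
    ("the database system is starting up", "database is recovering, give it a minute"),
    ("timeout expired", "network problem or overloaded server"),
]


def likely_cause(error_message: str) -> str:
    """Return a short diagnosis for a PostgreSQL connection error message."""
    text = error_message.lower()
    for pattern, cause in LIKELY_CAUSES:
        if pattern in text:
            return cause
    # Anything else (random drops included) means reading the server log.
    return "unknown: check the server log"
```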

Start with the obvious shit first: Is the service actually running? `sudo systemctl status postgresql` will tell you. If it's not running, start it. If it keeps dying, check the logs in `/var/log/postgresql/` (the Debian/Ubuntu default; other distros put them elsewhere), because PostgreSQL actually puts useful info there, unlike most software.

Network issues are next. `telnet hostname 5432` or `nc -zv hostname 5432` will tell you if you can reach the damn thing. If that fails while the service is up, it's usually network or firewall, not PostgreSQL. The one PostgreSQL-side culprit is `listen_addresses` in postgresql.conf, which only listens on localhost by default. Google Cloud's troubleshooting guide covers most connectivity scenarios you'll encounter. This Medium article walks through both easy and complex connection fixes.
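Where `nc` or `telnet` isn't installed (slim containers, locked-down hosts), the same reachability test takes a few lines of Python. This is a minimal stdlib-only sketch, and `port_is_reachable` is a hypothetical helper. A `True` only proves a TCP handshake succeeded, not that PostgreSQL will accept your login:

```python
import socket


def port_is_reachable(host: str, port: int = 5432, timeout: float = 3.0) -> bool:
    """Rough equivalent of `nc -zv host port`: True if a TCP connection opens."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers ConnectionRefusedError, timeouts and DNS failures (socket.gaierror),
        # which are all OSError subclasses.
        return False
```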

Authentication Type 10 Not Supported (The SCRAM-SHA-256 Disaster)

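This one kills production regularly because PostgreSQL 14+ defaults to SCRAM-SHA-256 password hashing (`password_encryption = scram-sha-256`), but older JDBC drivers (anything before 42.2.0) don't support it. "Authentication type 10" is the SASL request the server sends to start a SCRAM exchange. The Stack Overflow thread has 268k views because everyone hits this.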

These errors mean your client is too old:

- `The authentication type 10 is not supported`

- `FATAL: password authentication failed for user` (after a SCRAM upgrade)

- `FATAL: no pg_hba.conf entry for host` (even when pg_hba.conf is correct)

Fix it by upgrading your JDBC driver to 42.2.0 or newer. Don't downgrade PostgreSQL security to MD5 unless you hate your security team.
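To audit a pile of services for this, compare their driver versions against the 42.2.0 cutoff. This minimal sketch assumes you've already pulled version strings like `42.2.27` out of jar names or build files. `parse_version` and `supports_scram` are hypothetical helpers, and suffixes such as `.jre7` are simply ignored:

```python
MIN_SCRAM_VERSION = (42, 2, 0)  # first pgJDBC release with SCRAM-SHA-256 support


def parse_version(version: str) -> tuple:
    """Turn '42.2.27' (or '42.2.5.jre7') into a comparable tuple of ints."""
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break  # stop at suffixes like 'jre7' or '0-SNAPSHOT'
        parts.append(int(piece))
    while len(parts) < 3:
        parts.append(0)  # so '42.2' compares equal to '42.2.0'
    return tuple(parts)


def supports_scram(version: str) -> bool:
    """True if this pgJDBC version can answer the server's SCRAM (type 10) request."""
    return parse_version(version) >= MIN_SCRAM_VERSION
```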

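The pg_hba.conf file will make you want to quit. Entries are processed top to bottom, and the first line matching the connection type, database, user, and client address wins. If authentication then fails, PostgreSQL does not try the next line. Case sensitivity matters, and one wrong character breaks everything. The column order is TYPE, DATABASE, USER, ADDRESS, METHOD (`local` lines have no ADDRESS column). Edits only take effect after a reload (`SELECT pg_reload_conf();` or `pg_ctl reload`). Get it wrong and nothing works.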
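The first-match rule is easier to see in code. This is a deliberately simplified sketch of the matching, not PostgreSQL's real parser:

- It assumes CIDR-style addresses.
- It treats `all` as the only wildcard.
- It ignores keywords like `samehost`, `replication`, `+group` and `@file`, plus the SSL distinction between `host` and `hostssl`.

`HbaRule`, `parse_hba` and `first_match` are hypothetical names:

```python
import ipaddress
from typing import List, NamedTuple, Optional


class HbaRule(NamedTuple):
    conn_type: str          # local, host, hostssl, ...
    database: str
    user: str
    address: Optional[str]  # None for 'local' lines (no ADDRESS column)
    method: str
    line_no: int


def parse_hba(text: str) -> List[HbaRule]:
    """Parse pg_hba.conf text into rules, in file order (simplified)."""
    rules = []
    for line_no, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        fields = line.split()
        if fields[0] == "local":
            conn_type, database, user, method = fields[:4]
            address = None
        else:
            conn_type, database, user, address, method = fields[:5]
        rules.append(HbaRule(conn_type, database, user, address, method, line_no))
    return rules


def first_match(rules: List[HbaRule], conn_type: str, database: str,
                user: str, client_ip: Optional[str] = None) -> Optional[HbaRule]:
    """Return the first matching rule; later rules are never consulted."""
    for rule in rules:
        if rule.conn_type != conn_type:
            continue
        if rule.database not in ("all", database) or rule.user not in ("all", user):
            continue
        if rule.address not in (None, "all"):
            if client_ip is None:
                continue
            network = ipaddress.ip_network(rule.address, strict=False)
            if ipaddress.ip_address(client_ip) not in network:
                continue
        return rule
    return None  # the "no pg_hba.conf entry for host" case
```

For example, put `host all all 10.0.0.0/8 reject` above `host appdb app 10.1.2.0/24 scram-sha-256`. For a client at 10.1.2.7, `first_match` returns the `reject` rule, and the narrower line below it never gets a look.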

Performance Issues (When Everything Runs Like Molasses)

Slow queries are usually missing indexes, but PostgreSQL gives you actual tools to figure out what's broken instead of guessing.

Signs your database is dying:

- Queries that used to take seconds now take minutes

- CPU usage constantly pegged at 100%

- Users complaining everything is slow

- Your monitoring dashboard looks like a red Christmas tree

- Memory usage climbing steadily without dropping after queries finish

- Active connections near max_connections limit

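EXPLAIN ANALYZE is your best friend. It actually runs the query and reports real timings and row counts for every plan node, so you can see exactly where the time goes. Because it really executes, wrap an UPDATE or DELETE in a transaction you roll back. Look for "Seq Scan" on large tables - that's usually your problem. If the scan throws away most of the rows it reads, create an index on the filtered column and watch performance magically improve.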
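Eyeballing a big plan gets old fast. This minimal sketch pulls sequential scans out of text-format `EXPLAIN ANALYZE` output (not JSON) and estimates rows read as "actual rows" plus "Rows Removed by Filter". Those numbers are per loop, so treat parallel scans as rough. `seq_scans` is a hypothetical helper, and its `min_rows` cutoff of 10,000 is an arbitrary placeholder:

```python
import re
from typing import List, Tuple

# A plan node line, e.g.
#   ->  Seq Scan on orders  (cost=0.00..1834.00 rows=500 width=8) (actual time=0.010..12.345 rows=480 loops=1)
# "time=..." is optional so plans run with TIMING OFF still match; the row
# count may carry a fractional part.
SEQ_SCAN = re.compile(
    r"Seq Scan on (?P<table>\S+).*?\(actual (?:time=[\d.]+\.\.[\d.]+ )?rows=(?P<rows>[\d.]+)"
)
REMOVED = re.compile(r"Rows Removed by Filter: (?P<removed>[\d.]+)")


def seq_scans(plan_text: str, min_rows: int = 10_000) -> List[Tuple[str, int]]:
    """Return (table, rows_scanned) for sequential scans, largest first.

    rows_scanned = actual rows returned + "Rows Removed by Filter", i.e. roughly
    how many rows the scan read per loop.
    """
    scans = []        # [table, rows] pairs in plan order
    current = None    # index into scans of the node whose detail lines we're reading
    for line in plan_text.splitlines():
        scan = SEQ_SCAN.search(line)
        if scan:
            scans.append([scan.group("table"), int(float(scan.group("rows")))])
            current = len(scans) - 1
        elif "(cost=" in line or "(actual" in line:
            current = None  # some other plan node starts here
        else:
            removed = REMOVED.search(line)
            if removed and current is not None:
                scans[current][1] += int(float(removed.group("removed")))
    hits = [(table, rows) for table, rows in scans if rows >= min_rows]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)
```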

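Lock contention happens when transactions block each other. Check `pg_stat_activity` for sessions with `wait_event_type = 'Lock'`; the old boolean `waiting` column went away in 9.6. `pg_blocking_pids(pid)` tells you who is holding them up. Try `SELECT pg_cancel_backend(pid)` first, which cancels only the current query. Kill the blocker with `SELECT pg_terminate_backend(pid)` if that isn't enough. Watch `pg_locks` to catch blocking before it kills performance. The official kernel resources documentation explains shared memory and lock table limits that cause these issues.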
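When several sessions pile up behind each other, the one to deal with is the head of the chain. This minimal sketch assumes you've fetched each blocked pid with its `pg_blocking_pids(pid)` array (PostgreSQL 9.6+), using the query in the comment, and turned the rows into a dict. `root_blockers` is a hypothetical helper:

```python
from typing import Dict, List, Set

# Input is assumed to come from a query like:
#   SELECT pid, pg_blocking_pids(pid) AS blocked_by
#   FROM pg_stat_activity
#   WHERE cardinality(pg_blocking_pids(pid)) > 0;
# turned into {pid: blocked_by}.


def root_blockers(blocked_by: Dict[int, List[int]]) -> Set[int]:
    """Return pids that block other sessions but aren't waiting on anyone themselves."""
    blockers = {pid for pids in blocked_by.values() for pid in pids}
    return {pid for pid in blockers if not blocked_by.get(pid)}
```

Each pid it returns is the candidate for `pg_cancel_backend` or `pg_terminate_backend`. An empty result while sessions are still blocked means every blocker is itself waiting, which is a cycle. The deadlock detector breaks cycles by aborting one of the transactions.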

Memory Errors (When PostgreSQL Eats Everything)

"Out of shared memory" usually means too many connections doing stupid things or locks gone wild. The OOM killer loves PostgreSQL processes because they're big, juicy targets.

Memory death symptoms:

- `ERROR: out of shared memory` - Lock table is full

- `FATAL: sorry, too many clients already` - Hit connection limit

- `ERROR: could not extend file: No space left on device` - Disk full, not memory

- PostgreSQL processes getting killed - OOM killer activated
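The "lock table is full" case is easier to reason about with the arithmetic in front of you. The PostgreSQL docs size the shared lock table at `max_locks_per_transaction` slots per server process or prepared transaction. This sketch approximates server processes by `max_connections` and ignores the extra slots for background workers. It uses the default values (64, 100, 0). `lock_table_slots` and `sessions_that_fit` are hypothetical helpers:

```python
def lock_table_slots(max_locks_per_transaction: int = 64,
                     max_connections: int = 100,
                     max_prepared_transactions: int = 0) -> int:
    """Approximate shared lock table size (defaults are PostgreSQL's defaults).

    Simplification: counts max_connections as the number of server processes,
    ignoring the extra slots for autovacuum and other background workers.
    """
    return max_locks_per_transaction * (max_connections + max_prepared_transactions)


def sessions_that_fit(objects_per_transaction: int, **settings) -> int:
    """How many concurrent transactions, each locking this many tables/indexes, fit."""
    return lock_table_slots(**settings) // objects_per_transaction
```

With defaults that's 6,400 slots. Only about a dozen concurrent transactions that each lock 500 tables and indexes fit (`sessions_that_fit(500)` is 12). Raising `max_locks_per_transaction` takes a server restart.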

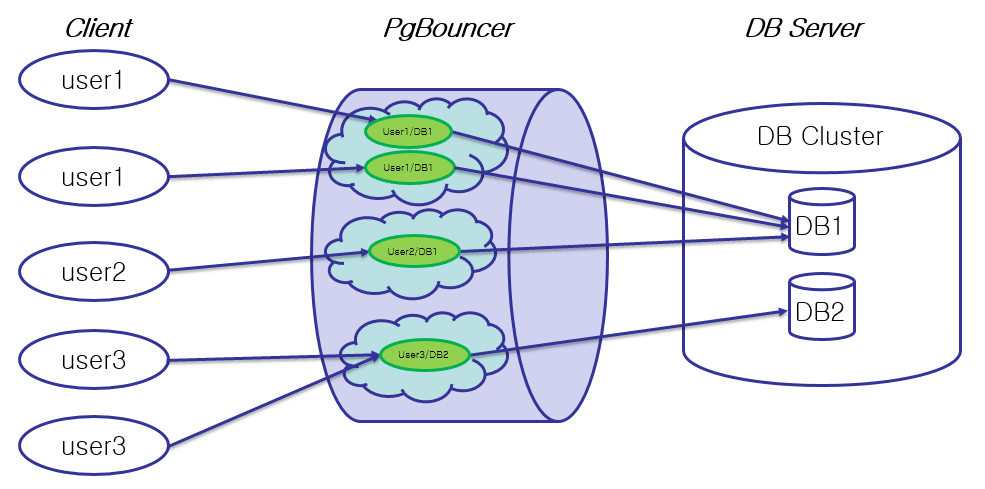

`shared_buffers` at 25% of RAM is the usual starting point. It works until you need the other 75% for something else: every connection is its own server process, and each sort or hash in a query can use up to `work_mem` on top of that. Don't set `max_connections` to 1000 unless you want to see what "thrashing" really means. The comprehensive troubleshooting guide from Dev.to explains exactly how TOO_MANY_CONNECTIONS errors happen and what to do about them. Datadog's troubleshooting guide covers monitoring setup that prevents memory disasters.
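Here's the back-of-the-envelope math behind that warning. This is a rough sketch, not a sizing tool, and it rests on these assumptions:

- `shared_buffers` at 25% of RAM
- the default `work_mem` of 4 MB
- a guessed two `work_mem`-sized sort/hash allocations per connection
- no per-process overhead, `maintenance_work_mem` or OS page cache

`memory_budget_mb` is a hypothetical helper:

```python
def memory_budget_mb(ram_mb: float, max_connections: int, work_mem_mb: float = 4,
                     shared_buffers_fraction: float = 0.25,
                     allocs_per_connection: int = 2) -> dict:
    """Rough worst case: shared_buffers plus every connection using work_mem at once.

    allocs_per_connection is a guess: one query can run several sort/hash
    nodes, and each may use up to work_mem. Per-process overhead,
    maintenance_work_mem and the OS page cache are ignored.
    """
    shared_buffers = ram_mb * shared_buffers_fraction
    work_mem_total = max_connections * work_mem_mb * allocs_per_connection
    total = shared_buffers + work_mem_total
    return {
        "shared_buffers": shared_buffers,
        "work_mem_total": work_mem_total,
        "total": total,
        "fits_in_ram": total <= ram_mb,
    }
```

On a 16 GB box, `memory_budget_mb(16384, 1000, work_mem_mb=64)` puts the worst case at 132,096 MB, about eight times the RAM. 100 connections at the default `work_mem` come to 4,896 MB.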

Start With the Dumb Shit First

Before diving into complex diagnostics, check the obvious things that waste hours:

- Is the service running? (`systemctl status postgresql`)

- Can you reach it? (`nc -zv hostname 5432`)

- Is the disk full? (`df -h`)

- Are there any recent config changes? (check git history)

- Did someone restart something? (check logs)

This catches 60% of "mysterious" PostgreSQL issues and saves you from looking stupid when the problem is a stopped service.
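The disk check is the one people skip, and a full disk is also behind `could not extend file: No space left on device`. This is a minimal stdlib-only sketch; `disk_used_percent` and `nearly_full` are hypothetical helpers. The 90% threshold is arbitrary, and the paths in the example below are Debian/Ubuntu defaults, which may not match your layout:

```python
import os
import shutil


def disk_used_percent(path: str) -> float:
    """Used space the way df computes Use%: used / (used + available to non-root)."""
    usage = shutil.disk_usage(path)
    denominator = usage.used + usage.free
    return 100.0 * usage.used / denominator if denominator else 0.0


def nearly_full(paths, threshold: float = 90.0):
    """Return (path, percent) for existing paths whose filesystem is at or above threshold."""
    hits = []
    for path in paths:
        if os.path.exists(path):  # skip paths that don't exist on this host
            pct = disk_used_percent(path)
            if pct >= threshold:
                hits.append((path, round(pct, 1)))
    return hits
```

For example, `nearly_full(["/var/lib/postgresql", "/var/log/postgresql"])` returns each path whose filesystem is at least 90% used. Paths that don't exist are skipped.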