Your storage is about to screw you over, and most performance comparisons won't tell you this. I've deployed PostgreSQL 17, MySQL 8.4 LTS, and MariaDB 11.8 LTS on everything from spinning rust to NVMe arrays, and the performance differences will surprise you.

MariaDB Wins on Slow Storage (For Now)

Here's the thing nobody talks about: MariaDB crushes PostgreSQL by 1.8x on slow storage (125 MiB/s). Put them on fast NVMe and that lead drops to 1.2x. Why? PostgreSQL's MVCC is a RAM-hungry beast that writes full row versions for every update. MariaDB only writes what changed.

I learned this hard way when we migrated from MySQL to PostgreSQL on AWS gp2 volumes. Our 4-core RDS instance with 1000 IOPS turned into a crawling disaster. Same queries that took 50ms in MySQL were hitting 500ms in PostgreSQL because of VACUUM overhead.

The real cost: 3 weeks of debugging, $8,000 in consultant fees, and upgrading to a $400/month instance with provisioned IOPS that should've cost $150/month with proper planning. That "free" migration cost more than my car.

The exact error that destroyed our weekend:

ERROR: canceling statement due to conflict with recovery

DETAIL: User query might have needed to see row versions that must be removed.

Translation: PostgreSQL's MVCC created 50GB of dead rows that VACUUM couldn't clean fast enough. Here's the configuration that saved our ass:

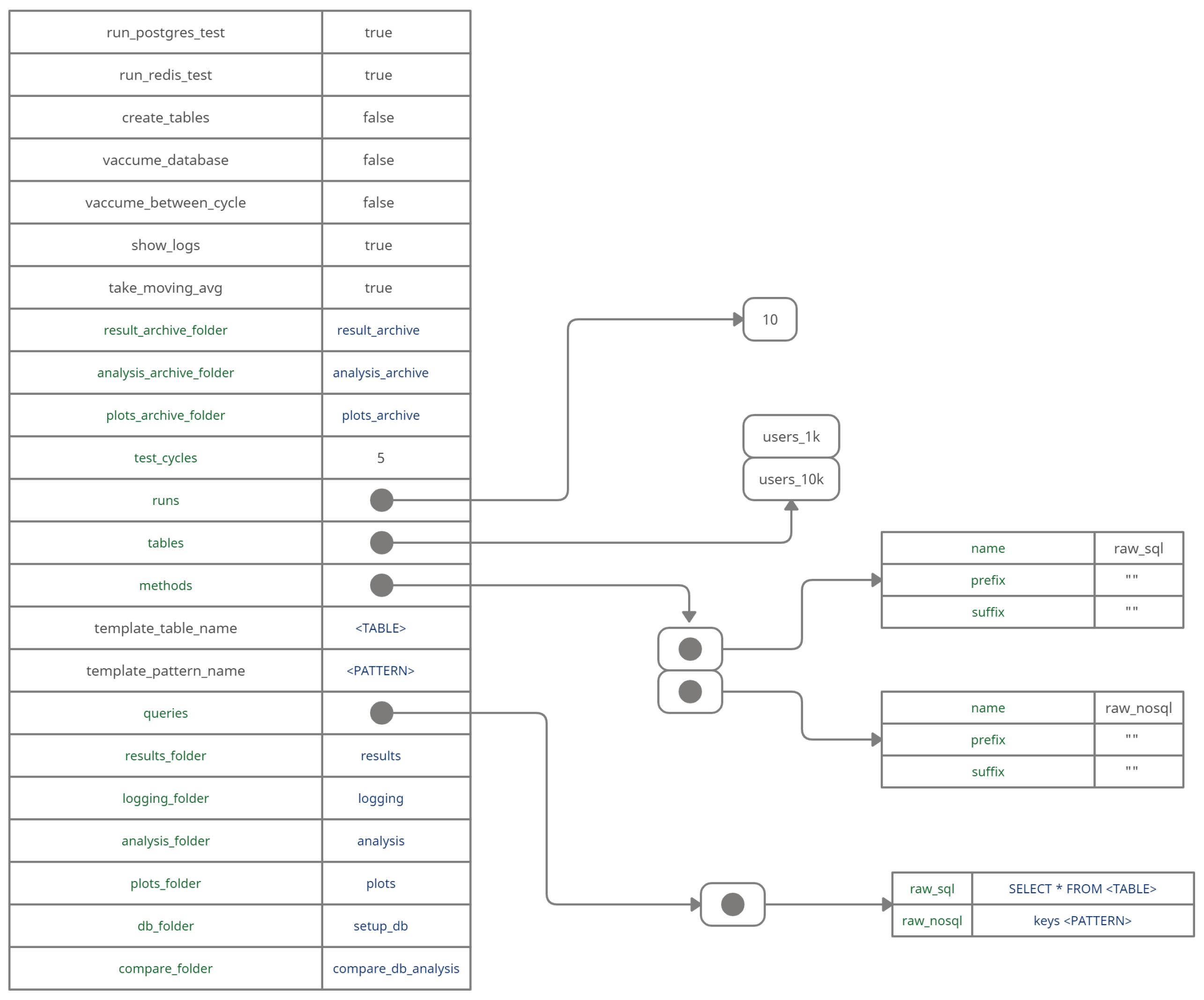

## postgresql.conf - PostgreSQL 17 production config that actually works

autovacuum_max_workers = 6 # More workers = faster cleanup

autovacuum_vacuum_scale_factor = 0.1 # VACUUM when 10% of table changes

autovacuum_analyze_scale_factor = 0.05 # ANALYZE when 5% changes

max_wal_size = 4GB # Prevents constant checkpoints

shared_buffers = 8GB # 25% of system RAM

effective_cache_size = 24GB # Tell Postgres about OS cache

work_mem = 256MB # Per connection - don't go crazy

maintenance_work_mem = 2GB # For VACUUM and CREATE INDEX

random_page_cost = 1.1 # SSD optimization (default 4.0)

Lesson learned: Test with YOUR actual storage speed, not synthetic benchmarks. AWS gp2 at 1000 IOPS is not the same as NVMe at 100,000 IOPS.

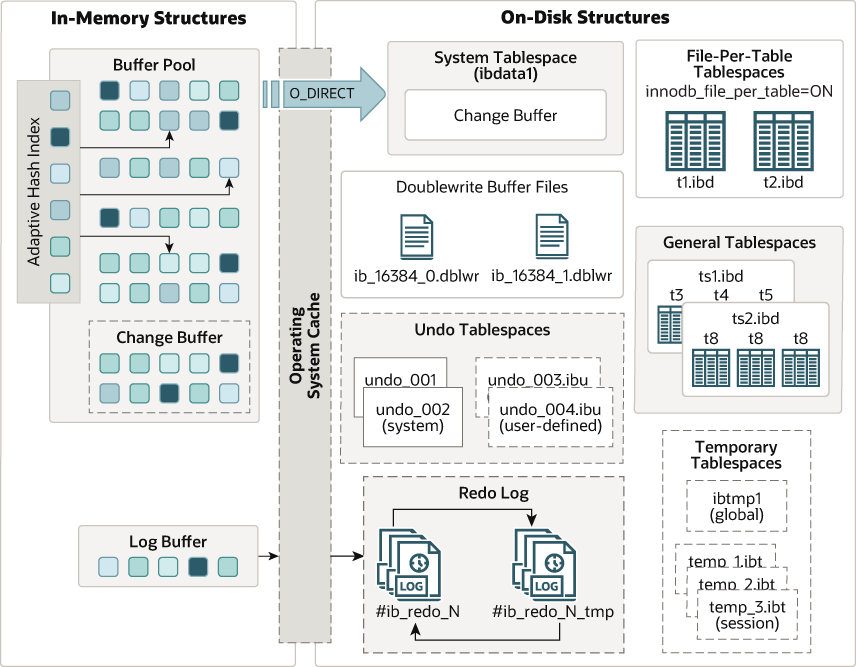

MySQL InnoDB: The Engine That Actually Works

InnoDB's buffer pool caches your hot data in RAM - size this wrong and watch MySQL crawl. The redo logs handle crash recovery, but the defaults are garbage. File-per-table keeps your data organized instead of one giant clusterfuck file.

MySQL 8.4 LTS: Finally Stable After Years of Pain

MySQL 8.4 LTS gains 15-25% performance and finally doesn't break shit during upgrades. I've been burned by MySQL version upgrades so many times that LTS felt like a joke. But 8.4 actually works.

The query cache is magic until you update one row and the entire cache gets nuked. Set query_cache_type=OFF in production and use Redis like a sane person. MySQL's pluggable storage engines sound cool but you'll use InnoDB 99% of the time unless you enjoy debugging obscure engine-specific bugs.

The real win? Thread pooling that actually works and replication that doesn't randomly break.

MySQL 8.4 LTS Production Config (Actually Tested):

## my.cnf - these settings prevent 3AM disasters

[mysqld]

innodb_buffer_pool_size = 16G # 70% of RAM (24GB server)

innodb_buffer_pool_instances = 8 # Split buffer pool for concurrency

innodb_flush_log_at_trx_commit = 2 # Faster writes, small crash risk

innodb_flush_method = O_DIRECT # Bypass OS cache on Linux

## Query performance tracking

slow_query_log = 1

slow_query_log_file = /var/log/mysql/slow.log

long_query_time = 0.1 # Log queries >100ms

log_queries_not_using_indexes = 1 # Catch missing indexes

## MySQL 8.4.3 specific fixes

binlog_expire_logs_seconds = 604800 # 7 days, prevents disk fill

innodb_redo_log_capacity = 2G # Default 100MB is garbage

binlog_format = ROW # Prevent replication corruption

MySQL will quietly handle your boring CRUD operations while you focus on actual features. But ignore this config and watch your site crawl during Black Friday traffic.

Pro tip: MySQL Workbench is where productivity goes to die. Use TablePlus or DataGrip if you value your sanity. Workbench crashes more than a Windows 95 machine and its query performance makes pgAdmin look fast.

PostgreSQL 17: The RAM-Eating Beast That Actually Delivers

PostgreSQL 17 delivers real 30% performance gains, but it's like owning a Ferrari - amazing when you know how to drive it, expensive as hell when you don't. This thing demolishes MariaDB by 13x in OLAP workloads, but you'll need a decent ops team to keep it happy.

The query planner is basically a PhD in computer science stuffed into C code. It'll analyze your shitty JOIN and somehow make it fast, but God help you if you write SELECT * on a 50-million-row table. Use EXPLAIN ANALYZE religiously or prepare for mystery performance problems.

PostgreSQL's extensibility is its superpower and its curse. Want full-text search? Built-in. JSONB indexing? Covered. PostGIS for geospatial? Chef's kiss. But each extension is another thing that can break during upgrades. Pin your versions and test extensively.

PostgreSQL MVCC: Beautiful Theory, Operational Nightmare

Every UPDATE writes a completely new row copy. Old rows pile up as "dead tuples" until VACUUM cleans them. Tune VACUUM wrong and your database turns into a graveyard of old data that slows everything to a crawl.

Shared buffers cache your working set - configure this as 25% of RAM or PostgreSQL will hit disk constantly. WAL logs every change for crash recovery, but it'll fill your disk if you don't archive properly.

EXPLAIN ANALYZE is your best friend when PostgreSQL queries start sucking. It shows exactly where your shit is breaking - use it religiously or prepare for mystery slowdowns that'll ruin your weekend.

MariaDB 11.8 LTS: MySQL's Cooler Sibling With Trust Issues

MariaDB 11.8 LTS promises MySQL compatibility plus vector search because apparently every database needs AI now. Usually delivers 15-25% better performance than MySQL, but here's the catch: "MySQL-compatible" doesn't mean "drop-in replacement."

I migrated a client from MySQL 8.0 to MariaDB 10.6 and spent a weekend rewriting queries because the optimizer makes different decisions. The JSON functions aren't identical, and some replication features behave differently. Test everything twice.

The upside? No Oracle licensing bullshit, thread pooling that actually works, and Galera clustering for multi-master setups. But hiring developers who know MariaDB's quirks costs more than just sticking with boring MySQL. Choose your battles wisely.

Now that you understand how these databases actually behave under pressure, let's address the questions that determine whether you sleep peacefully or get woken up at 3am for database emergencies.