Docker's registry system is a fucking security nightmare. Developers will pull container images from literally anywhere - sketchy GitHub repos, random Docker Hub accounts, some dude's personal registry. Registry Access Management (RAM) is Docker's answer to stopping this madness without making your team want to murder you.

The Real Problem: Developers Love Sketchy Images

Your developers are pulling container images from everywhere. That "lightweight Alpine image" from some random GitHub repo? Could have cryptocurrency miners. That popular MongoDB image that's not the official one? Might be logging your database credentials to some server in Belarus.

I've seen teams get pwned because someone pulled a malicious image that looked legit but was actually harvesting AWS credentials. The 2021 Docker Hub incident where thousands of images disappeared overnight? Teams scrambling because their builds broke when dependencies vanished.

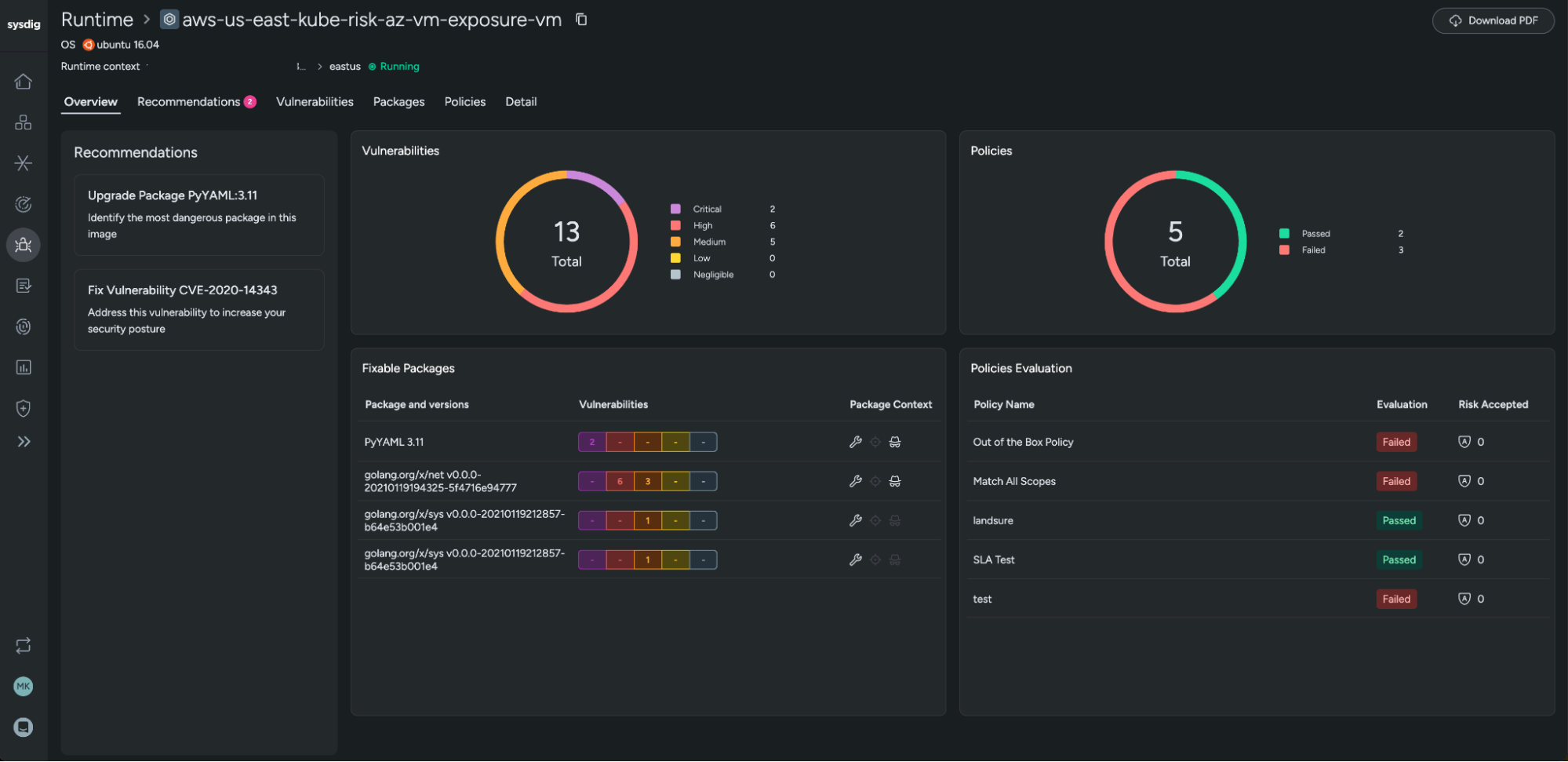

Supply chain attacks through container registries keep showing up in incident reports. Sysdig's 2022 Cloud-Native Security and Usage Report found that 75% of running containers had high or critical vulnerabilities. NIST's Application Container Security Guide (SP 800-190) treats registries as their own risk category, including weak authentication and authorization on registry access.

How RAM Actually Works (DNS-Level Blocking)

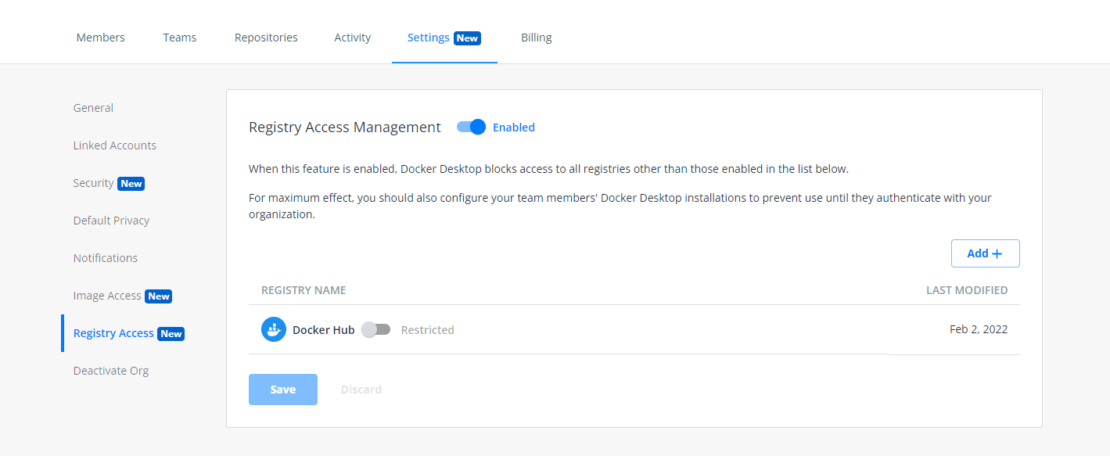

RAM works by intercepting DNS requests at the Docker Desktop level. When your developer tries to pull from sketchy-registry.com, Docker Desktop checks the allowlist first. If it's not approved, you get an immediate "access denied" error.
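To make the allowlist idea concrete, here's a minimal sketch of the decision being made. It is not Docker's implementation: `registry_host` and `is_allowed` are hypothetical helpers, and the exact-hostname match is an assumption for illustration (Docker's real matching rules may differ). The one part that mirrors real Docker behavior is how the registry host is read off an image reference: the first path component only counts as a registry if it contains a dot or a port, or is `localhost`; otherwise the image comes from Docker Hub.

```python
def registry_host(image_ref: str) -> str:
    """Return the registry hostname an image reference points at."""
    first, sep, _rest = image_ref.partition("/")
    # The first component is a registry only if it looks like a hostname:
    # it contains a dot or a port, or is literally "localhost".
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first.split(":")[0].lower()
    # Anything else (e.g. "alpine" or "library/alpine") is a Docker Hub image.
    return "docker.io"


def is_allowed(image_ref: str, allowlist: set) -> bool:
    """Assumed policy for this sketch: exact hostname match against the allowlist."""
    return registry_host(image_ref) in allowlist


if __name__ == "__main__":
    allowlist = {"docker.io", "ghcr.io"}
    for ref in ("alpine:3.19", "ghcr.io/acme/api:1.2", "sketchy-registry.com/alpine"):
        verdict = "allowed" if is_allowed(ref, allowlist) else "blocked"
        print(f"{ref}: {verdict}")
```

Running it over the image references in your compose files and CI configs is a cheap way to see what would get blocked before you flip the switch.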

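This isn't a CLI wrapper a developer can alias their way around. It's enforced inside Docker Desktop's own networking layer, so it covers everything that goes through it - docker pull, docker build, even those sneaky ADD instructions in Dockerfiles that fetch random shit from the internet. One caveat: the blocking works on hostnames, not IP addresses, so someone determined can still dodge it with a hosts file edit or a local proxy.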
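Because ADD instructions that fetch from a URL get caught too, it helps to know which hosts your Dockerfiles reach before you turn RAM on. The sketch below is a rough audit helper, not part of Docker: `add_url_hosts` is a hypothetical name, and it deliberately ignores line continuations and build-arg substitution, so treat the output as a starting list rather than the full picture.

```python
from urllib.parse import urlparse


def add_url_hosts(dockerfile_text: str) -> set:
    """Collect hostnames referenced by ADD <url> instructions in a Dockerfile."""
    hosts = set()
    for line in dockerfile_text.splitlines():
        parts = line.strip().split()
        # Dockerfile instructions are case-insensitive.
        if not parts or parts[0].upper() != "ADD":
            continue
        for token in parts[1:]:
            # Strip JSON-array punctuation so ADD ["https://...", "/dest"] works too.
            token = token.strip('[],"')
            if token.startswith(("http://", "https://")):
                host = urlparse(token).hostname
                if host:
                    hosts.add(host)
    return hosts


if __name__ == "__main__":
    sample = (
        "FROM alpine:3.19\n"
        "ADD https://example.com/tool.tar.gz /opt/\n"
        "ADD config.yaml /etc/app/\n"
    )
    print(sorted(add_url_hosts(sample)))
```

Any host this turns up that isn't on your allowlist is a build that will start failing once the policy lands.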

The genius part: developers get clear error messages instead of mysterious network timeouts. "registry access to malicious-repo.com is not allowed" beats the hell out of wondering why your build is hanging.

Real-World War Stories

We deployed RAM after a developer accidentally pulled a compromised Redis image that was silently sending our cache data to an external server. Took us three days to figure out why our response times were shit and our bandwidth usage had spiked.

Another team got fucked over by CVE-2024-21626 - a runc container breakout vulnerability. Some asshole developer was running malicious images that exploited a leaked file descriptor to escape containers. We only caught it during a routine security audit rather than after a breach, and that was pure luck.

The 24-Hour Policy Delay That'll Drive You Completely Insane

Here's the gotcha nobody talks about because it's so goddamn frustrating: policy changes take up to 24 hours to propagate. You block a registry in the admin console, and developers can still pull from it for almost a full day. Need immediate blocking? Force everyone to sign out and back into Docker Desktop. Good luck explaining that clusterfuck to your team.

What Actually Breaks (And How to Fix It)

AWS ECR is the worst offender for configuration headaches. You can't just allowlist the main ECR domain - you need amazonaws.com and s3.amazonaws.com too. Spent a weekend figuring this out when our CI pipeline started failing mysteriously.
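If you want to catch this before CI does, a tiny pre-flight check is enough. This is a sketch under assumptions: `missing_ecr_entries` is a hypothetical helper, the allowlist is just whatever list of entries you copied out of the admin console, and the two required domains are the ones named above. It doesn't talk to Docker or AWS at all.

```python
# Extra domains ECR pulls need on the allowlist, per the paragraph above.
ECR_REQUIRED = {"amazonaws.com", "s3.amazonaws.com"}


def missing_ecr_entries(allowlist) -> list:
    """Return the ECR-related domains that are absent from the allowlist."""
    normalized = {entry.strip().lower() for entry in allowlist}
    return sorted(ECR_REQUIRED - normalized)


if __name__ == "__main__":
    current = ["docker.io", "ghcr.io", "amazonaws.com"]
    print(missing_ecr_entries(current))
```

An empty list means both entries are present; anything else is what you still need to add.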

Windows containers require enabling "Use proxy for Windows Docker daemon" in Docker Desktop settings. Easy to miss, and without it RAM silently doesn't restrict Windows image pulls or builds, which will make you question your life choices. WSL2 needs Linux kernel 5.4+ or RAM restrictions don't apply to Linux containers.
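The kernel requirement is easy to verify from inside the WSL2 distro. Here's a small sketch: `kernel_at_least` is a hypothetical helper that parses the major and minor numbers out of a release string like the one `uname -r` prints, and the sample strings in the comments are illustrative, not taken from any particular machine.

```python
import platform
import re


def kernel_at_least(release: str, minimum=(5, 4)) -> bool:
    """Check whether a kernel release string meets a (major, minor) minimum."""
    # Release strings look like "5.15.90.1-microsoft-standard-WSL2";
    # only the leading major.minor pair matters here.
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return (int(match.group(1)), int(match.group(2))) >= minimum


if __name__ == "__main__":
    release = platform.release()  # same value as `uname -r` on Linux
    status = "OK" if kernel_at_least(release) else "too old for RAM on WSL2"
    print(f"{release}: {status}")
```

Run it inside the distro, not from Windows, or you'll be checking the wrong kernel.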

Check Docker's troubleshooting guide for the usual registry headaches. GitHub's container registry docs explain their domain bullshit too.