The basic devcontainer.json templates are complete garbage for anything beyond a hello world app. I've seen devs waste entire sprints fighting with broken templates that work fine in isolation but shit the bed the moment you add Postgres. These patterns survived actual production use.

Multi-Service Architecture with Docker Compose

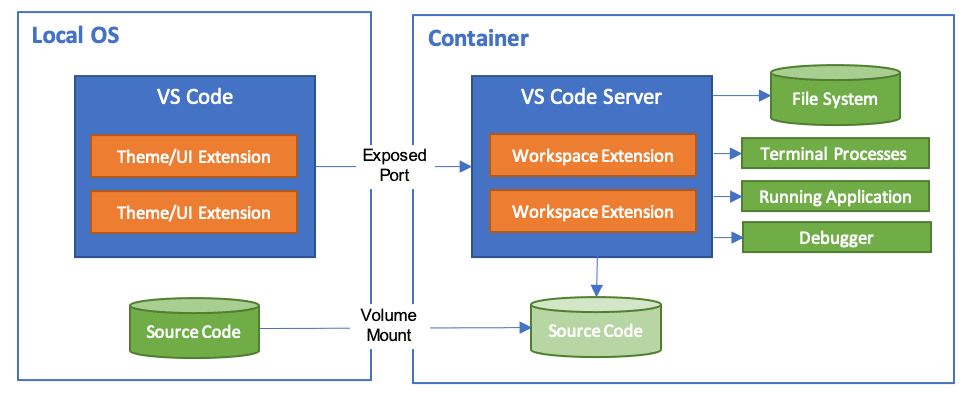

Most real applications need more than one container. Your app needs a database, maybe Redis, possibly an API gateway. The Docker Compose integration lets you define multiple services while keeping your dev environment contained.

The docs are comprehensive but completely fucking useless when Postgres won't connect and your demo is in 6 hours. Here's what actually works:

    {
      "name": "Full Stack App",
      "dockerComposeFile": ["../docker-compose.yml", "../docker-compose.dev.yml"],
      "service": "app",
      "workspaceFolder": "/workspace",
      "shutdownAction": "stopCompose",
      "customizations": {
        "vscode": {
          "extensions": [
            "ms-python.python",
            "ms-vscode.vscode-typescript-next"
          ]
        }
      },
      // Postgres and Redis run in their own containers, so forward them by service name
      "forwardPorts": [3000, 8000, "db:5432", "redis:6379"],
      "postCreateCommand": "npm install && pip install -r requirements.txt"
    }

The corresponding docker-compose.dev.yml gives you the full stack:

    version: '3.8'

    services:
      app:
        build:
          context: .
          dockerfile: .devcontainer/Dockerfile
        volumes:
          # Paths resolve relative to the project root, where this file lives
          - .:/workspace:cached
          - node_modules:/workspace/node_modules
        command: sleep infinity
        depends_on:
          - db
          - redis

      db:
        image: postgres:15-alpine
        restart: unless-stopped
        volumes:
          - postgres-data:/var/lib/postgresql/data
        environment:
          POSTGRES_PASSWORD: postgres
          POSTGRES_USER: postgres
          POSTGRES_DB: devdb

      redis:
        image: redis:7-alpine
        restart: unless-stopped
        volumes:
          - redis-data:/data

    volumes:
      postgres-data:
      redis-data:
      node_modules:

This setup gives you application isolation while maintaining service dependencies. The sleep infinity command keeps the main container running so VS Code can attach to it.
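One gap worth knowing about: depends_on in its short form only orders container startup, it does not wait until Postgres or Redis actually accept connections. Here is a minimal sketch of a readiness check, assuming the service names db and redis from the compose file above. The function name wait_for_port is made up for illustration and is not part of any dev container tooling.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Covers refused connections, timeouts, and DNS failures alike.
            time.sleep(interval)
    return False
```

Calling wait_for_port("db", 5432) at the start of a setup script, before migrations or seed jobs, turns a confusing connection error into a clear "database never came up" failure. An open port is only a rough proxy for readiness, so treat this as a first line of defense rather than a full health check.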

Features - The Right Way to Add Tools

Features are pre-built tool packages that beat writing custom Dockerfile commands. The official feature collection covers the common languages, runtimes, and CLIs, and the syntax lets you specify exact versions and configurations.

Community features exist but most are abandoned shitshows that worked once on the author's laptop in 2022. I spent 3 hours debugging a community Docker-in-Docker feature that was hardcoded for Ubuntu 18.04. Stick to the official ones unless you hate yourself.

    {
      "features": {
        "ghcr.io/devcontainers/features/node:1": {
          "version": "18",
          "nodeGypDependencies": true,
          "nvmVersion": "0.39.0"
        },
        "ghcr.io/devcontainers/features/python:1": {
          "version": "3.11",
          "installTools": true,
          "optimize": true
        },
        "ghcr.io/devcontainers/features/docker-in-docker:2": {
          "version": "24.0",
          "enableNonRootDocker": true,
          "moby": true
        },
        "ghcr.io/devcontainers/features/kubectl-helm-minikube:1": {
          "version": "1.28"
        },
        "ghcr.io/devcontainers/features/github-cli:1": {
          "installDirectlyFromGitHubRelease": true,
          "version": "2.35.0"
        }
      }
    }

Configuration reality checks:

- Pin major versions for stability ("version": "18" not "latest") - learned this the hard way when a minor Node update broke our entire CI pipeline for 6 hours
- nodeGypDependencies: true saves you from native module hell - spent 4 hours debugging bcrypt failures before I found this
- enableNonRootDocker: true prevents the Docker-in-Docker permission nightmare - trust me, the error messages are useless
- installDirectlyFromGitHubRelease: true for tools that actually update (GitHub CLI breaks weekly with package managers)
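The pinning rule is easy to enforce with a small check in CI. What follows is a rough sketch, not an official tool: unpinned_features is a hypothetical helper, and it assumes the config has already been parsed into a dict (a real devcontainer.json may contain comments, which plain json.loads rejects).

```python
import json


def unpinned_features(config: dict) -> list[str]:
    """Return feature IDs whose options omit "version" or set it to "latest"."""
    flagged = []
    for feature_id, options in config.get("features", {}).items():
        version = options.get("version") if isinstance(options, dict) else None
        if version in (None, "", "latest"):
            flagged.append(feature_id)
    return flagged


sample = json.loads("""
{
  "features": {
    "ghcr.io/devcontainers/features/node:1": {"version": "18"},
    "ghcr.io/devcontainers/features/python:1": {"version": "latest"},
    "ghcr.io/devcontainers/features/github-cli:1": {}
  }
}
""")
print(unpinned_features(sample))
# ['ghcr.io/devcontainers/features/python:1', 'ghcr.io/devcontainers/features/github-cli:1']
```

Note that the :1 suffix on the feature ID pins the feature's own major version, while the version option pins the tool it installs. This check only looks at the second.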

Advanced Lifecycle Hooks

The basic postCreateCommand runs once after container creation, but complex setups need more control. Here are all the lifecycle hooks and when to use each.

Lifecycle hooks fail silently like everything else in Docker land, and the error messages tell you jack shit about what went wrong. Here's what each hook actually does and exactly how they'll break on you:

    {
      "onCreateCommand": "git config --global user.email 'dev@company.com'",
      "updateContentCommand": ["pip", "install", "-r", "requirements.txt"],
      "postCreateCommand": "npm install && npm run build:dev",
      "postStartCommand": "npm run dev:services",
      "postAttachCommand": "echo 'Container ready for development'"
    }

When Each Hook Runs (and how they break):

- onCreateCommand: Container first created (git config, system setup) - fails if you try to access mounted volumes that aren't ready yet
- updateContentCommand: Source code changes (dependency updates) - gets skipped randomly if VS Code thinks nothing changed
- postCreateCommand: After creation and updates (build steps) - this is where 90% of container failures happen
- postStartCommand: Container starts (start background services) - don't start long-running processes here, they'll be killed
- postAttachCommand: VS Code connects (status messages, environment checks) - only use for quick checks, anything slow blocks VS Code startup
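The ordering is easier to reason about as a tiny model. This is a simplified sketch of my understanding of the dev container spec, not code from any real tool: hooks run in a fixed order, a restart skips the create-time hooks, and a failing hook stops everything after it. The names hooks_for and run_hooks are invented for illustration.

```python
HOOK_ORDER = [
    "onCreateCommand",
    "updateContentCommand",
    "postCreateCommand",
    "postStartCommand",
    "postAttachCommand",
]

# The first hook that runs for each kind of event.
FIRST_HOOK = {
    "create": "onCreateCommand",    # brand new container
    "start": "postStartCommand",    # existing container restarted
    "attach": "postAttachCommand",  # editor reconnects to a running container
}


def hooks_for(event: str) -> list[str]:
    """Return the hooks that run for an event, in order."""
    return HOOK_ORDER[HOOK_ORDER.index(FIRST_HOOK[event]):]


def run_hooks(event: str, succeeds) -> list[str]:
    """Simulate a run: stop after the first hook for which succeeds(name) is False."""
    ran = []
    for name in hooks_for(event):
        ran.append(name)
        if not succeeds(name):
            break
    return ran


print(run_hooks("create", lambda name: name != "postCreateCommand"))
# ['onCreateCommand', 'updateContentCommand', 'postCreateCommand']
```

The printed result is the practical takeaway: when postCreateCommand fails, postStartCommand and postAttachCommand never run, which is why a broken build step can look like "my services didn't start".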

Custom Dockerfile Integration

When features aren't enough, integrate your own Dockerfile. The build configuration gives you full control while maintaining dev container compatibility.

Custom Dockerfiles give you power but also enough rope to hang yourself and your entire team. Here's the least fucked approach I've found after breaking production exactly once:

    {
      "build": {
        "dockerfile": "Dockerfile.dev",
        "context": "..",
        "args": {
          "NODE_VERSION": "18",
          "PYTHON_VERSION": "3.11",
          "INSTALL_ZSH": "true",
          "USERNAME": "vscode"
        },
        "target": "development"
      }
    }

The corresponding Dockerfile.dev uses multi-stage builds for development and production:

    # ARGs declared before the first FROM are only visible to FROM lines;
    # any stage that uses them has to redeclare them.
    ARG NODE_VERSION=18
    ARG PYTHON_VERSION=3.11

    FROM mcr.microsoft.com/devcontainers/base:ubuntu AS base
    ARG USERNAME=vscode
    ARG INSTALL_ZSH=false

    # Install base development tools
    RUN apt-get update && apt-get install -y \
            git \
            curl \
            vim \
            htop \
            build-essential

    # Install ZSH if requested
    RUN if [ "$INSTALL_ZSH" = "true" ]; then \
            apt-get update \
            && apt-get install -y zsh \
            && chsh -s /bin/zsh "$USERNAME"; \
        fi

    FROM base AS development
    ARG NODE_VERSION
    ARG PYTHON_VERSION

    # Development-specific setup: debugger, tracing, and add-apt-repository
    RUN apt-get update && apt-get install -y \
            gdb \
            strace \
            software-properties-common

    # Install Node.js
    RUN curl -fsSL https://deb.nodesource.com/setup_${NODE_VERSION}.x | bash - \
        && apt-get install -y nodejs

    # Install Python
    RUN add-apt-repository -y ppa:deadsnakes/ppa \
        && apt-get update \
        && apt-get install -y python${PYTHON_VERSION} python${PYTHON_VERSION}-dev

    FROM base AS production

    # Production-optimized image
    COPY --from=development /usr/bin/node /usr/bin/node
    # npm is a symlink into /usr/lib/node_modules, so copy the tree and recreate the link
    COPY --from=development /usr/lib/node_modules /usr/lib/node_modules
    RUN ln -sf ../lib/node_modules/npm/bin/npm-cli.js /usr/bin/npm \
        && apt-get autoremove -y && apt-get clean

This gives you a development container with all the debugging tools and a lean production image from the same source.
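Outside VS Code, the same Dockerfile can be built per stage with docker build --target. Here is a small sketch that assembles those commands, assuming you run it from the project root and the Dockerfile sits at .devcontainer/Dockerfile.dev. The image name myapp and the helper build_command are placeholders, not anything the dev container tooling provides.

```python
import shlex


def build_command(
    target: str,
    tag: str,
    dockerfile: str = ".devcontainer/Dockerfile.dev",
    context: str = ".",
) -> list[str]:
    """Return the docker build argv for one stage of a multi-stage Dockerfile."""
    return ["docker", "build", "--target", target, "--file", dockerfile, "--tag", tag, context]


for target in ("development", "production"):
    # Print the command instead of running it, so this works without Docker installed.
    print(shlex.join(build_command(target, f"myapp:{target}")))
# docker build --target development --file .devcontainer/Dockerfile.dev --tag myapp:development .
# docker build --target production --file .devcontainer/Dockerfile.dev --tag myapp:production .
```

Passing the list to subprocess.run(cmd, check=True) would actually run the build. Building both targets in CI is a cheap way to catch a stage that only breaks in production.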

Environment Variable Management

Real projects need different configurations for different environments. The container environment variables support multiple patterns:

    {
      "containerEnv": {
        "NODE_ENV": "development",
        "DEBUG": "*",
        "PORT": "3000",
        "DATABASE_URL": "postgresql://postgres:postgres@db:5432/devdb",
        "REDIS_URL": "redis://redis:6379",
        "API_BASE_URL": "http://localhost:8000"
      },
      "remoteEnv": {
        "LOCAL_WORKSPACE_FOLDER": "${localWorkspaceFolder}",
        "PATH": "${containerEnv:PATH}:/workspace/node_modules/.bin"
      }
    }

Environment Variable Types:

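- containerEnv: Set on the container itself, so every process sees them (database URLs, debug flags); changing them means rebuilding the container
- remoteEnv: Set only for VS Code and the processes it starts, such as terminals, tasks, and debug sessions (workspace paths, extended PATH)
- Variables support substitution with ${localWorkspaceFolder} and ${containerEnv:VARIABLE}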
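On the application side, these variables are just ordinary environment variables. Below is a minimal sketch of reading the DATABASE_URL from the config above using only the standard library. The function name database_settings and the returned keys are my own choices, so adapt them to whatever your database driver expects.

```python
import os
from collections.abc import Mapping
from urllib.parse import urlsplit


def database_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Split DATABASE_URL into the pieces most database drivers ask for."""
    url = urlsplit(env["DATABASE_URL"])
    return {
        "host": url.hostname,
        "port": url.port or 5432,  # fall back to the Postgres default port
        "user": url.username,
        "password": url.password,
        "dbname": url.path.lstrip("/"),
    }


print(database_settings({"DATABASE_URL": "postgresql://postgres:postgres@db:5432/devdb"}))
# {'host': 'db', 'port': 5432, 'user': 'postgres', 'password': 'postgres', 'dbname': 'devdb'}
```

The host comes out as db, the compose service name, which only resolves from inside the compose network. That is the usual reason the same URL works in the container and fails from your laptop.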

Volume and Mount Optimization

File performance makes or breaks your development experience. The mount configuration offers several optimization strategies:

    {
      "mounts": [
        "source=${localWorkspaceFolder},target=/workspace,type=bind,consistency=cached",
        "source=${localWorkspaceFolderBasename}-node_modules,target=/workspace/node_modules,type=volume",
        "source=dev-container-cache,target=/root/.cache,type=volume",
        "source=${localEnv:HOME}/.ssh,target=/root/.ssh,type=bind,readonly"
      ]
    }

Mount Performance Strategies:

- Use consistency=cached for source code (faster reads, eventual consistency)
- Use named volumes for node_modules (avoids slow cross-platform file sync)
- Cache directories like .cache, .npm, .pip in volumes
- Bind mount SSH keys and Git config as readonly
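Mount strings are easy to get subtly wrong, for example giving a named volume a host path as its source or forgetting readonly on SSH keys. Here is a rough lint sketch for those two mistakes. The helpers parse_mount and mount_problems are hypothetical, and the checks are heuristics I picked for illustration, not rules enforced by Docker or VS Code.

```python
def parse_mount(spec: str) -> dict:
    """Split "key=value,key=value" into a dict; bare flags like "readonly" become True."""
    fields = {}
    for part in spec.split(","):
        key, sep, value = part.partition("=")
        fields[key.strip()] = value.strip() if sep else True
    return fields


def mount_problems(spec: str) -> list[str]:
    """Return human-readable warnings for one mount string."""
    mount = parse_mount(spec)
    source = str(mount.get("source", ""))
    target = str(mount.get("target", ""))
    problems = []
    # A named volume is identified by name, so a path separator is a red flag.
    if mount.get("type") == "volume" and ("/" in source or "\\" in source):
        problems.append("volume source should be a volume name, not a host path")
    # Credentials mounted from the host should not be writable from the container.
    if mount.get("type") == "bind" and ".ssh" in target and not mount.get("readonly"):
        problems.append("SSH bind mount should be readonly")
    return problems


print(mount_problems("source=${localWorkspaceFolder}/node_modules,target=/workspace/node_modules,type=volume"))
# ['volume source should be a volume name, not a host path']
```

Running mount_problems over every entry in the mounts array before committing a config change costs nothing and saves a container rebuild to find out.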

Platform-Specific Optimizations:

- macOS: Use VirtioFS file sharing in Docker Desktop

- Windows: Ensure WSL2 backend, store source code in WSL filesystem

- Linux: Bind mounts work well, consider user namespace mapping for permissions