Look, most container startup failures are caused by stupidly simple problems that make you feel like an idiot once you figure them out. I've spent entire afternoons debugging containers that wouldn't start because of a missing space in a command. Last month I burned 3 hours on a recent Docker version because some security change broke containers that used to work fine - kept getting "operation not permitted" errors for no obvious reason.

The Top 3 Things That Will Ruin Your Day

Your Command Is Wrong - This is usually the first place to look. Did you typo the command? Is the executable actually in the container? I once spent 4 hours debugging a container that failed because someone wrote httpd-foregroun instead of httpd-foreground. Four. Hours. The logs just said "exec: httpd-foregroun: executable file not found in $PATH" and I kept looking for missing dependencies like a moron.

Docker's official troubleshooting guide actually covers this, but it's buried in enterprise nonsense.

Check this shit first:

    # Override the entrypoint and poke around
    # (use /bin/sh instead if the image doesn't ship bash)
    docker run -it --entrypoint /bin/bash your-broken-image

    # Then see if your command actually exists
    which your-command
    ls -la /path/to/your/script
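If you'd rather not poke around by hand every time, here's a rough sketch of the same check as a shell function. `check_image_command` is a name I made up, and it assumes the image ships `/bin/sh`, which distroless and scratch images won't.

```shell
# Hypothetical helper: does CMD resolve on $PATH inside IMAGE?
# Assumes the image has /bin/sh; distroless/scratch images do not.
check_image_command() {
    image="$1"
    cmd="$2"
    # Override the entrypoint so the broken command never runs, then ask
    # the shell inside the image whether it can find the command.
    if docker run --rm --entrypoint /bin/sh "$image" \
        -c 'command -v "$1"' sh "$cmd" >/dev/null 2>&1; then
        echo "found: $cmd"
    else
        echo "missing: $cmd"
    fi
}
```

Something like `check_image_command your-broken-image httpd-foreground` would have saved me those four hours. Keep in mind it also prints "missing" when `docker run` itself fails (image can't be pulled, no shell in the image), so treat it as a first hint, not a verdict.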

Memory/Resource Issues - Docker containers are surprisingly good at running out of memory and not telling you why. The container just dies with exit code 137 and you're left wondering what the hell happened. Recent Docker Desktop versions finally show OOMKill status in the GUI, but it took them years to add something this basic.

Pro tip: Check if Docker murdered your container:

    docker inspect dead-container --format '{{.State.OOMKilled}}'

If that command returns true, the kernel's OOM killer took out your container because it ran out of memory. If false, something else killed it. Usually AWS because you forgot to pay your bill, or Kubernetes decided your container was "unhealthy" for taking 3 seconds to respond during startup.

This Stack Overflow thread about OOMKilled containers explains the whole mess. Exit code 137 is just 128 + 9, meaning the process got SIGKILL, and most of the time that's Docker's shitty way of saying "I killed your app because memory."
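Since exit codes are the only clue you get half the time, here's a small sketch that turns one into a first guess. `explain_exit_code` is a hypothetical name. The 125/126/127 meanings follow the exit statuses documented for `docker run`, and the "above 128 means a signal" rule is the usual shell convention, so treat the output as a hint.

```shell
# Hypothetical helper: translate a container exit code into a first guess.
# 125/126/127 follow the codes documented for `docker run`; anything above
# 128 is usually 128 + the number of the signal that killed the process.
explain_exit_code() {
    code="$1"
    case "$code" in
        0)   echo "clean exit" ;;
        125) echo "docker run itself failed" ;;
        126) echo "command found but not executable" ;;
        127) echo "command not found" ;;
        *)
            # Non-numeric input fails the test and lands in the else branch.
            if [ "$code" -gt 128 ] 2>/dev/null; then
                echo "killed by signal $((code - 128))"
            else
                echo "application error"
            fi
            ;;
    esac
}
```

Feed it the real code with `explain_exit_code "$(docker inspect dead-container --format '{{.State.ExitCode}}')"`. For 137 you get signal 9, which is SIGKILL, and then the `OOMKilled` check tells you whether memory was the reason.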

Permission Problems - Oh, the classic "permission denied" that makes no sense because you're running as root in the container anyway. Some recent Docker version introduced new security bullshit that breaks containers that used to work fine. This happens when:

- Your entrypoint script isn't executable (`chmod +x` fixes this)

- Volume mounts have the wrong ownership

- SELinux is being a pain in the ass (temporarily switch it to permissive with `setenforce 0` and try again) - this particularly fucks over RHEL 9 users

This Stack Overflow thread about permission denied issues has thousands of answers because this breaks constantly.
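The non-executable entrypoint is the easiest of these to catch before you even build. Below is a minimal sketch of a host-side check. `check_entrypoint_script` is a made-up name, and it assumes you build on Linux or macOS, where the executable bit generally travels with the file when it gets copied into the image.

```shell
# Hypothetical pre-build check for an entrypoint script on the host.
check_entrypoint_script() {
    script="$1"
    if [ ! -f "$script" ]; then
        echo "missing: $script"
    elif [ ! -x "$script" ]; then
        echo "not executable: $script (try chmod +x)"
    else
        echo "ok: $script"
    fi
}
```

Run it against whatever your Dockerfile copies in as the entrypoint, for example `check_entrypoint_script ./entrypoint.sh` (the path is just an example).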

When Things Go Wrong (Spoiler: It's Always During Demo)

Here's when your containers will decide to break, in order of how much it will ruin your day:

Image Pull Fails - Your container can't even start because Docker can't download the image. Docker Hub goes down pretty regularly (I think it was down for hours sometime in early 2024 but can't remember exactly when). Usually because:

- You typo'd the image name (docker.io/my-ap instead of docker.io/my-app)

- Your internet connection sucks

- The registry is down (looking at you, Docker Hub)

- Authentication failed because you forgot to `docker login`

- Rate limiting - Docker Hub limits free accounts to 200 pulls per 6 hours, and anonymous pulls get even less
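Most of the causes above leave a recognizable string in the pull error. Here's a rough sketch that sorts the error text into those buckets. `classify_pull_error` is hypothetical, and the substrings are ones that commonly show up in pull errors; the exact wording shifts between Docker versions and registries, so expect to tweak the patterns.

```shell
# Hypothetical helper: map `docker pull` error text to a likely cause.
# The patterns are substrings commonly seen in pull errors; exact
# messages vary by Docker version and registry.
classify_pull_error() {
    msg="$1"
    case "$msg" in
        *toomanyrequests*)          echo "rate limited" ;;
        *"pull access denied"*)     echo "wrong image name or missing docker login" ;;
        *unauthorized*)             echo "authentication failed" ;;
        *"manifest unknown"*)       echo "tag does not exist" ;;
        *"no such host"*|*timeout*) echo "network or registry problem" ;;
        *)                          echo "unknown" ;;
    esac
}
```

Usage is along the lines of `classify_pull_error "$(docker pull docker.io/my-ap 2>&1)"`.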

Creation Phase - Docker downloaded the image but can't create the container. I hit this constantly when switching between projects. Common culprits:

- Port 8080 is already taken (check with `netstat -tulpn | grep :8080`) - some abandoned container is hogging it

- Your volumes point to directories that don't exist (`/home/user/data` vs `/Users/user/data` on Mac)

- You've run out of disk space (again) - Docker images are huge and `/var/lib/docker` fills up fast
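The first two culprits can be checked in one go before you hit enter on `docker run`. This is a sketch under assumptions: `preflight` is a name I invented, and the port check only sees other containers (via the `publish` filter of `docker ps`), so a non-Docker process hogging the port still needs `netstat`.

```shell
# Hypothetical preflight: run before `docker run -p PORT:... -v HOST_DIR:...`
preflight() {
    port="$1"
    host_dir="$2"
    # Bind-mount sources should exist before Docker sees them.
    if [ -d "$host_dir" ]; then
        echo "mount source ok: $host_dir"
    else
        echo "mount source missing: $host_dir"
    fi
    # List running containers that already publish the port, if any.
    holders="$(docker ps --filter "publish=$port" --format '{{.Names}}')"
    if [ -n "$holders" ]; then
        echo "port $port held by: $holders"
    else
        echo "port $port not published by any running container"
    fi
}
```

For example, `preflight 8080 /home/user/data`. For the disk space problem, `docker system df` shows how much images, containers, and volumes are eating.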

Startup Phase - The container starts but immediately crashes. This is where 90% of your debugging time goes. The command is wrong, dependencies are missing, or permissions are fucked.

Runtime Crashes - Container starts fine but dies randomly. Could be memory leaks, external dependencies going away, or your code just sucks.

Why Docker Debugging Is Different (And Annoying)

Docker containers are like black boxes that explode and disappear, taking all the evidence with them. Traditional debugging doesn't work because:

Everything Is Ephemeral - The container gets removed (or it ran with --rm), its logs and filesystem go with it, and you're left with nothing. Always mount a volume for logs or you'll hate yourself later.

Isolation Works Too Well - You can't just SSH into a container to poke around. Well, you can docker exec, but the container has to be running first.

Layer Upon Layer Of Complexity - Your app runs in Docker, on Kubernetes, on AWS - when it breaks, good fucking luck figuring out which layer is the problem. Each layer has its own logs, configs, and ways to fail.
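On the ephemeral point: a crashed container that hasn't been removed yet still has its logs and metadata, so grab them before something cleans up. Here's a minimal sketch. `save_evidence` and the `./evidence` default directory are my own inventions, and it assumes a logging driver that `docker logs` can actually read back.

```shell
# Hypothetical helper: dump what Docker still knows about a dead container.
# Only works while the container exists (stopped is fine, removed is not).
save_evidence() {
    name="$1"
    out_dir="${2:-./evidence}"
    mkdir -p "$out_dir" || return 1
    # docker logs replays both stdout and stderr, so capture both streams.
    docker logs --timestamps "$name" >"$out_dir/$name.log" 2>&1
    docker inspect "$name" >"$out_dir/$name.inspect.json" 2>&1
    echo "saved to $out_dir"
}
```

Call it as `save_evidence broken-container` right after the crash, before anyone runs `docker rm` or a prune.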

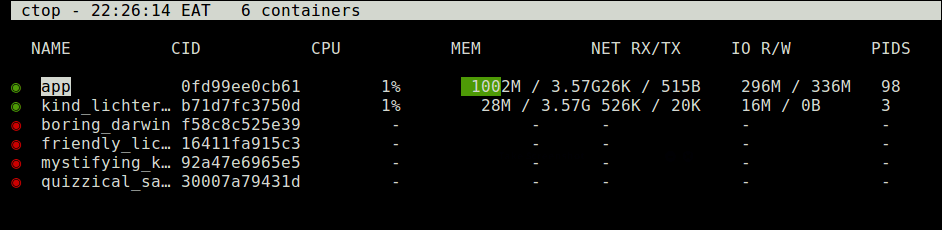

Tools That Actually Help

Skip the fancy enterprise tools and use these:

Docker logs - First thing to check, though they're often useless:

    docker logs -f broken-container

    # Add timestamps to see when things break
    docker logs -f --timestamps broken-container

Docker inspect - Shows you everything about the container:

    docker inspect broken-container | grep -i error
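Grepping the full JSON works, but the bits you usually care about live under `.State`. Here's a small sketch that prints just those on one line. `container_state` is a made-up wrapper name; the fields themselves (`Status`, `ExitCode`, `OOMKilled`, `Error`) are part of the inspect output.

```shell
# Hypothetical wrapper: one-line summary of how a container ended up.
container_state() {
    docker inspect "$1" --format \
        'status={{.State.Status}} exit={{.State.ExitCode}} oom={{.State.OOMKilled}} error={{.State.Error}}'
}
```

Run it as `container_state broken-container`. The `error` field is often empty, in which case the exit code and logs are your next stop.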

Override the entrypoint - When all else fails:

    docker run -it --entrypoint /bin/bash broken-image

The Docker Desktop extensions in Desktop 4.33+ are actually pretty useful if you're into clicking buttons instead of typing commands. The logs explorer saves me from scrolling through thousands of log lines.

For more debugging techniques that actually work, check out this comprehensive Docker troubleshooting guide from DigitalOcean. They know what they're talking about, unlike most Docker tutorials.