Last month I watched a new hire spend four days trying to get our app running locally. First it was the wrong Python version. Then missing system libraries that somehow got installed on my machine six months ago. Then our frontend needed Node 18.16.1 specifically because 18.17.0 has a memory leak that breaks the build process after 20 minutes.

By day four, we were debugging why his macOS installation of libxml2 was conflicting with our parsing library. I realized we were idiots for not using containers.

VMs vs Containers: One Wastes Your RAM, One Wastes Your Sanity

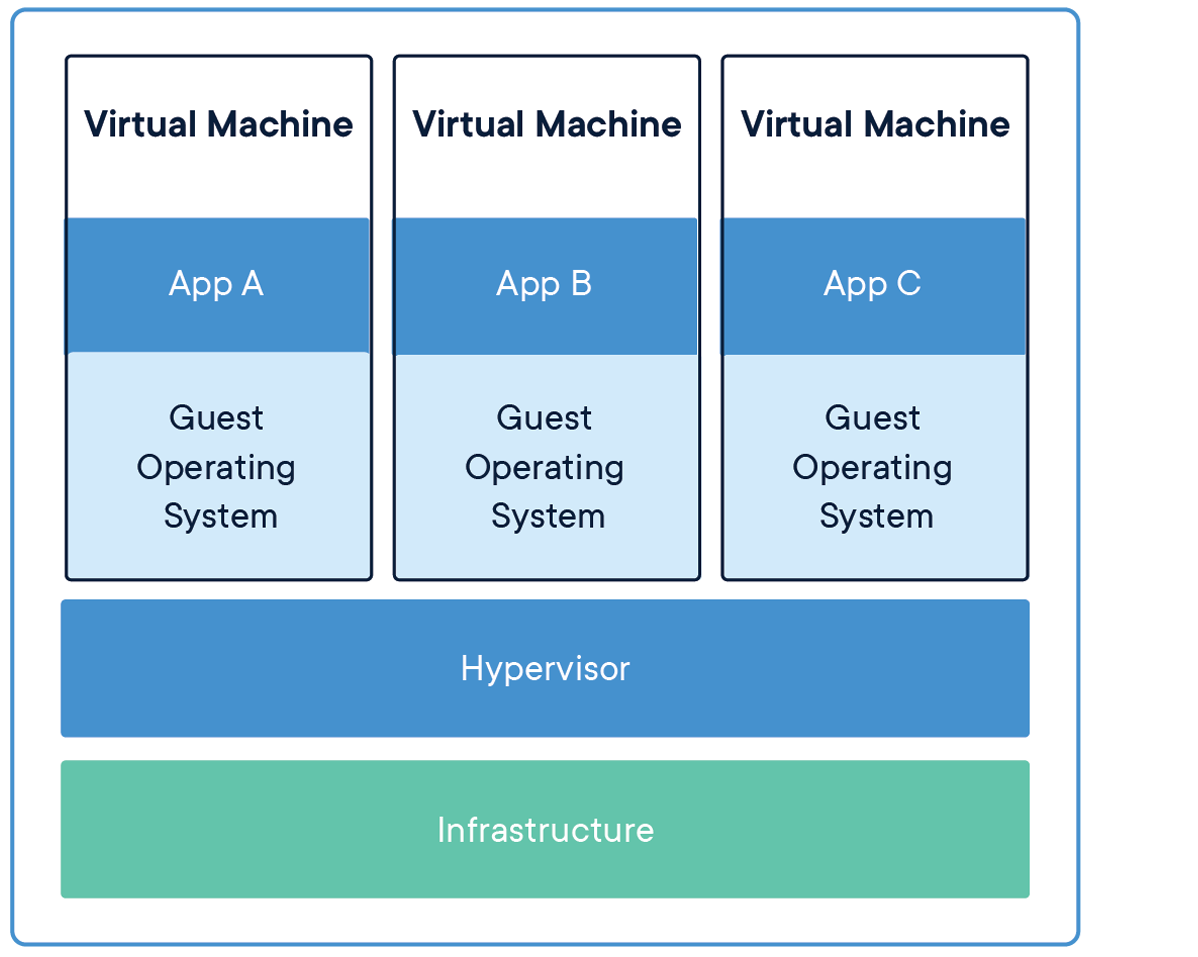

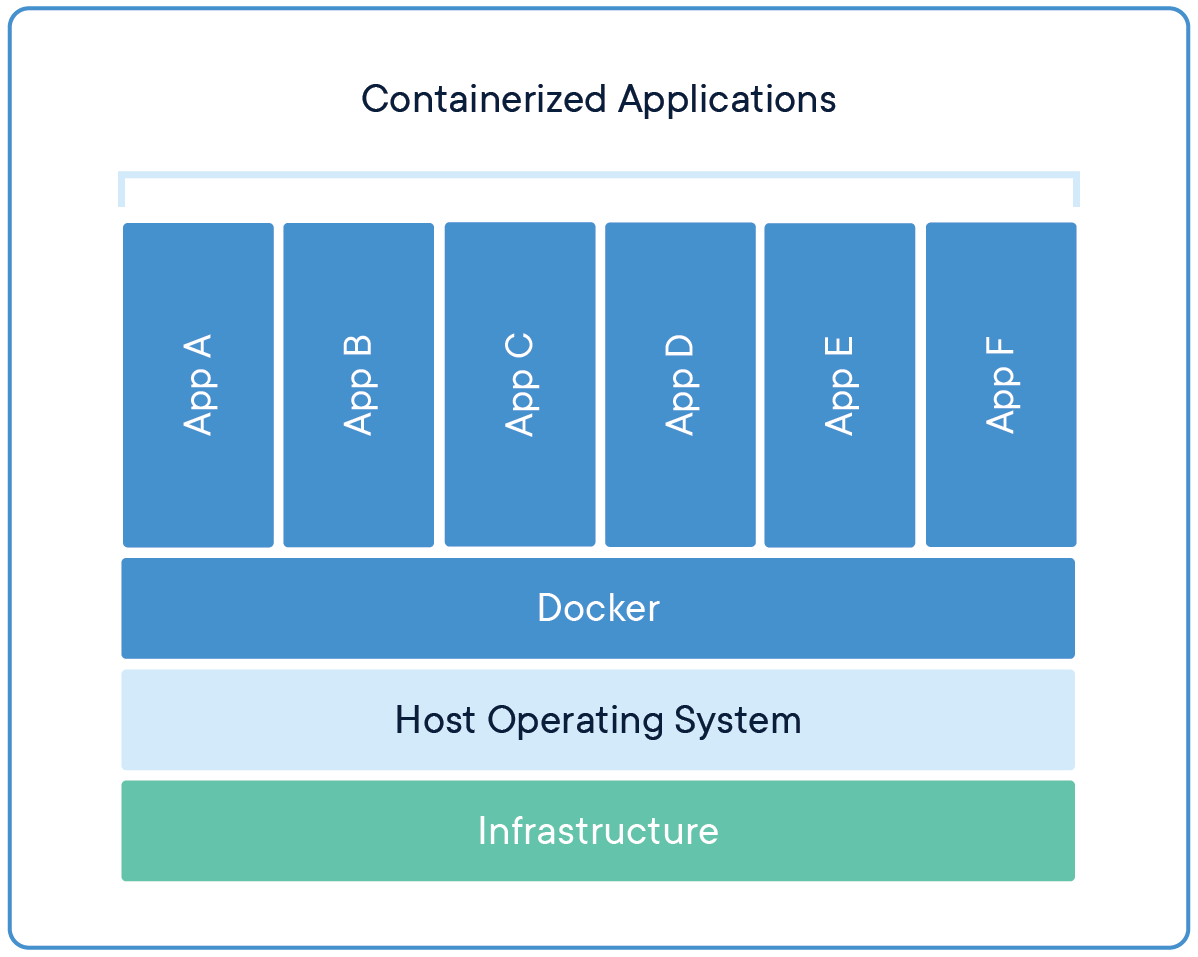

Here's what I learned running both in production: VMs virtualize entire machines, so you're running a full Ubuntu installation just to serve a simple API. I've seen VMs using 2GB of RAM when the actual application needs 200MB.

Containers share the host OS kernel but isolate everything else. You get most of the isolation with way less overhead. The downside? When the host kernel has issues, every container feels it. I learned that during a memory pressure event where the OOM killer took out 15 containers (all marked OOMKilled) because we hadn't set proper memory limits.
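If you want to know whether a container is actually running under a memory limit, you can ask the kernel from inside it. Here's a minimal sketch, assuming a cgroup v2 host where the limit shows up at /sys/fs/cgroup/memory.max (cgroup v1 uses a different path, and the function names are mine, not part of any Docker tooling):

```python
from pathlib import Path
from typing import Optional

# Assumption: cgroup v2 layout, where the limit is exposed at this path
# inside the container. cgroup v1 hosts use a different file.
CGROUP_V2_MEMORY_MAX = Path("/sys/fs/cgroup/memory.max")


def parse_memory_max(raw: str) -> Optional[int]:
    """Return the limit in bytes, or None when the cgroup is unlimited ("max")."""
    value = raw.strip()
    if value == "max":
        return None
    return int(value)


def describe_memory_limit(path: Path = CGROUP_V2_MEMORY_MAX) -> str:
    """Summarize the memory limit this process is running under."""
    try:
        limit = parse_memory_max(path.read_text())
    except (OSError, ValueError):
        return "memory limit unknown (no readable cgroup v2 memory.max)"
    if limit is None:
        return "no memory limit set: this container can starve its neighbors"
    return f"memory limit: {limit / 1024 ** 2:.0f} MiB"


if __name__ == "__main__":
    print(describe_memory_limit())
```

Run it inside a container as a quick sanity check. If it reports no limit, that container is a candidate for the same kind of incident described above.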

Docker Desktop Licensing: August 2021 Killed the Free Lunch

Docker Desktop Pricing Structure: Individual developers get Docker Desktop free, but companies with 250+ employees or $10M+ revenue pay $21/month per seat for Professional or $31/month for Team licensing.

In August 2021, Docker Inc. changed its licensing terms, and suddenly companies with more than 250 employees or more than $10M in revenue had to pay $21/month per developer. Classic digital heroin dealer move: get everyone addicted, then charge for the fix. Our legal team panicked. Our DevOps team started looking at alternatives while muttering about "vendor lock-in bullshit."

We tried Podman Desktop first. It worked for basic stuff but broke our GitHub Actions because the socket path is different. Spent two weeks fixing CI scripts only to discover Podman can't handle our multi-architecture builds properly.

Rancher Desktop was next. Free, supports ARM64, but the networking stack has issues with our VPN. Some containers would randomly lose connectivity and we'd get timeout errors during builds.

What Actually Works in August 2025:

- Docker Compose v2.39+ finally has watch mode that doesn't restart containers constantly

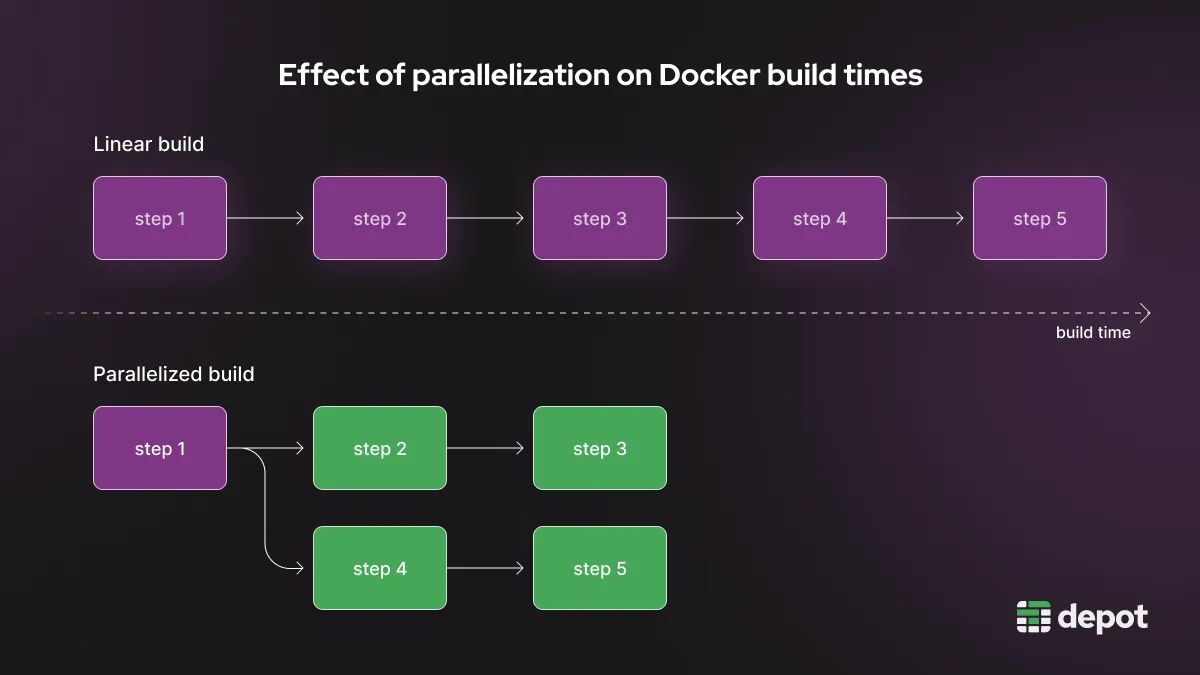

- BuildKit 0.23+ cache invalidation actually works (learned this fixing 45-minute builds)

- Docker Desktop 4.44.3+ (released Aug 20, 2025) stopped randomly resetting memory limits to 2GB

- Docker Engine 28.0+ includes vulnerability scanning that caught 12 critical CVEs in our base images

- Dev Containers extension 0.324+ for VS Code doesn't break when you have spaces in folder names

Three Ways to Structure Your Dev Environment (Two Are Wrong)

Everything in One Container: I tried this once. Postgres, Redis, Node.js app, and nginx all in the same container. Worked great until I needed to debug a database issue and had to restart the entire stack, losing 20 minutes of work. Pure masochism.

Proper Multi-Container Setup: Separate containers for each service. Database gets its own container, cache gets its own, app server gets its own. When your app crashes (and it will), the database keeps running. When you need to update Redis, your app doesn't care. This is how we run 150+ microservices in production.

Half-Assed Hybrid: Database in Docker, everything else on the host machine. I've seen teams do this because they're scared of "complexity." You still get environment inconsistencies, plus now you have Docker AND local tooling to maintain. Pick a side.

Volume Mounts Will Destroy Your Soul (Especially on Windows)

Bind Mounts: Your source code maps directly into the container. File changes show up immediately, which sounds great until you realize Windows file I/O through Docker Desktop is slower than my first dial-up connection. Watched our CI builds go from 3 minutes on Linux to 45 minutes on Windows because of bind mount overhead.

Named Volumes: Docker manages the storage location. Lightning fast for database files, but your code changes don't appear until you rebuild. Perfect for node_modules that you never need to edit directly anyway.

The Solution That Took Me 6 Months to Figure Out: Use a multi-stage Dockerfile with a development target, then have Compose bind mount your source code into it and keep dependencies in named volumes. Bind mount ./src but never ./node_modules. Your SSD will thank you.
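Here's roughly what that split looks like as a Compose project. This is a sketch, not our actual config: the service name "app", the "development" build target, and the /app paths are all placeholders. I'm building it as a Python dict and printing JSON, on the assumption that Compose will read JSON because it is a subset of YAML:

```python
import json


def dev_compose_config() -> dict:
    """Compose project for one hypothetical Node.js service called "app"."""
    return {
        "services": {
            "app": {
                # Build the (hypothetical) "development" stage of a
                # multi-stage Dockerfile in the current directory.
                "build": {"context": ".", "target": "development"},
                "volumes": [
                    # Bind mount: edits to ./src on the host appear in the
                    # container immediately.
                    "./src:/app/src",
                    # Named volume: dependencies live on Docker-managed
                    # storage instead of crossing the host file-sharing layer.
                    "node_modules:/app/node_modules",
                ],
            }
        },
        # Named volumes must be declared at the top level.
        "volumes": {"node_modules": {}},
    }


if __name__ == "__main__":
    print(json.dumps(dev_compose_config(), indent=2))
```

Most teams would just write the same structure by hand in YAML. The important part is the two entries under volumes: one host path for the code you edit, one named volume for the directory you never touch.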

The Reality of Docker Development (When Stars Align)

When Docker Development Actually Works: You write a Dockerfile, build an image, run containers, and use Docker Compose to orchestrate services like your database, cache, and application.

Here's how Docker development works when everything goes right:

- New developer clones repo: git clone, docker compose up, and they're coding in 10 minutes

- Code changes reflect immediately: Thanks to bind mounts (that work 80% of the time)

- Database migrations just work: Same Postgres version, same data, same schema

- Tests pass locally and in CI: Because the environment is actually identical

- No more "missing dependency" tickets: Everything's in the container

What Actually Happens 50% of the Time:

Exit code 137 means your container was killed with SIGKILL (128 + 9), usually by the kernel's OOM killer because it used too much memory. File watching breaks when you exceed inotify limits (the default is as low as 8192 watches on many Linux systems). Networking randomly breaks after macOS updates because Docker Desktop has to rebuild its VM. Windows Defender will flag random Docker processes as malware because it doesn't understand containers.
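The 137 isn't magic. Exit codes above 128 conventionally mean "killed by signal (code - 128)", and signal 9 is SIGKILL. A small sketch for decoding them (the function name is mine):

```python
import signal


def describe_exit_code(code: int) -> str:
    """Translate a container exit code into something a human can act on."""
    if code == 0:
        return "exited cleanly"
    if code > 128:
        # Convention: 128 + N means the process was terminated by signal N.
        signum = code - 128
        try:
            name = signal.Signals(signum).name
        except ValueError:
            name = f"signal {signum}"
        return f"killed by {name}"
    return f"exited with error status {code}"


if __name__ == "__main__":
    for code in (0, 1, 137, 143):
        print(code, "->", describe_exit_code(code))
```

A SIGKILL alone doesn't prove the OOM killer did it, since anything that force-kills the container produces the same code. To confirm, check the OOMKilled field in the container state reported by docker inspect.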

- Docker Desktop randomly decides it needs 8GB of RAM for a 200MB app

- File watching stops working and you spend 2 hours debugging nodemon

- Container networking breaks after macOS update and localhost:3000 returns connection refused

- Windows Defender flags Docker Desktop as malware and quarantines the installer during updates
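For the file-watching failure, it's worth checking the inotify limit before you blame nodemon. A minimal sketch, assuming a Linux host (or Linux VM) where the limit is readable under /proc. The "needed" number is a rough estimate you supply yourself, not something this code measures:

```python
from pathlib import Path
from typing import Optional

# Kernel setting behind the sysctl name fs.inotify.max_user_watches.
INOTIFY_WATCH_LIMIT = Path("/proc/sys/fs/inotify/max_user_watches")


def read_watch_limit(path: Path = INOTIFY_WATCH_LIMIT) -> Optional[int]:
    """Return the per-user inotify watch limit, or None if it can't be read."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


def check_watch_limit(needed: int, path: Path = INOTIFY_WATCH_LIMIT) -> str:
    """Compare the limit against a caller-supplied estimate of watches needed."""
    limit = read_watch_limit(path)
    if limit is None:
        return "inotify limit not readable (not Linux, or /proc is unavailable)"
    if limit < needed:
        return (
            f"limit {limit} is below the {needed} watches needed; "
            "raise fs.inotify.max_user_watches"
        )
    return f"limit {limit} covers {needed} watches"


if __name__ == "__main__":
    # 20000 is an arbitrary example figure for a large JavaScript project.
    print(check_watch_limit(needed=20000))
```

If the limit comes back lower than what your project plausibly needs, raising the sysctl is a much faster fix than two hours of debugging the watcher.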

Tools That Actually Improved My Life:

- LazyDocker - TUI for managing containers when the GUI breaks

- Docker Scout - Found 23 vulnerabilities in our "trusted" base images

- VS Code Dev Containers - Works when you configure it correctly

- Testcontainers - Integration tests with real databases instead of mocks

After two years of using Docker for development, our team onboarding went from "3 days of environment setup" to "30 minutes of waiting for images to download." Worth the learning curve, despite the emotional trauma.

Set aside 2 hours/month for Docker maintenance - clearing old images, updating Docker Desktop, and fixing whatever randomly broke overnight. It's like owning a car: regular maintenance prevents catastrophic failures, but something will still break at the worst possible moment.

The Point of No Return: Why Docker Development Is Worth the Pain

Here's the moment you'll realize Docker was worth it: your new hire joins on Monday, runs docker compose up, and has the entire development environment working before their first meeting. No Slack messages about missing dependencies. No "it works on my machine" debugging sessions. No three-day setup process that ends with "just install this random Python library globally."

That's when you'll understand why Docker adoption went from startup toy to enterprise necessity in less than a decade. It's not about the technology - it's about solving the fundamental consistency problem that's fucked up software development since we moved beyond single-machine deployments.
