I've spent more weekends than I care to count sitting in a data center watching pg_dump crawl through 500GB databases. pg_upgrade saved my sanity.

The Traditional Upgrade Nightmare

Before pg_upgrade, upgrading PostgreSQL major versions meant:

- Dumping your entire database with `pg_dump` (6 hours for our main DB)

- Installing the new PostgreSQL version

- Restoring everything with `pg_restore` (another 8 hours if you're lucky)

- Praying nothing broke during the 14-hour maintenance window

I learned this the hard way during a PostgreSQL 9.6 to 11 upgrade that took 16 hours and had to be rolled back at 3 AM because we hit extension compatibility issues nobody had tested.

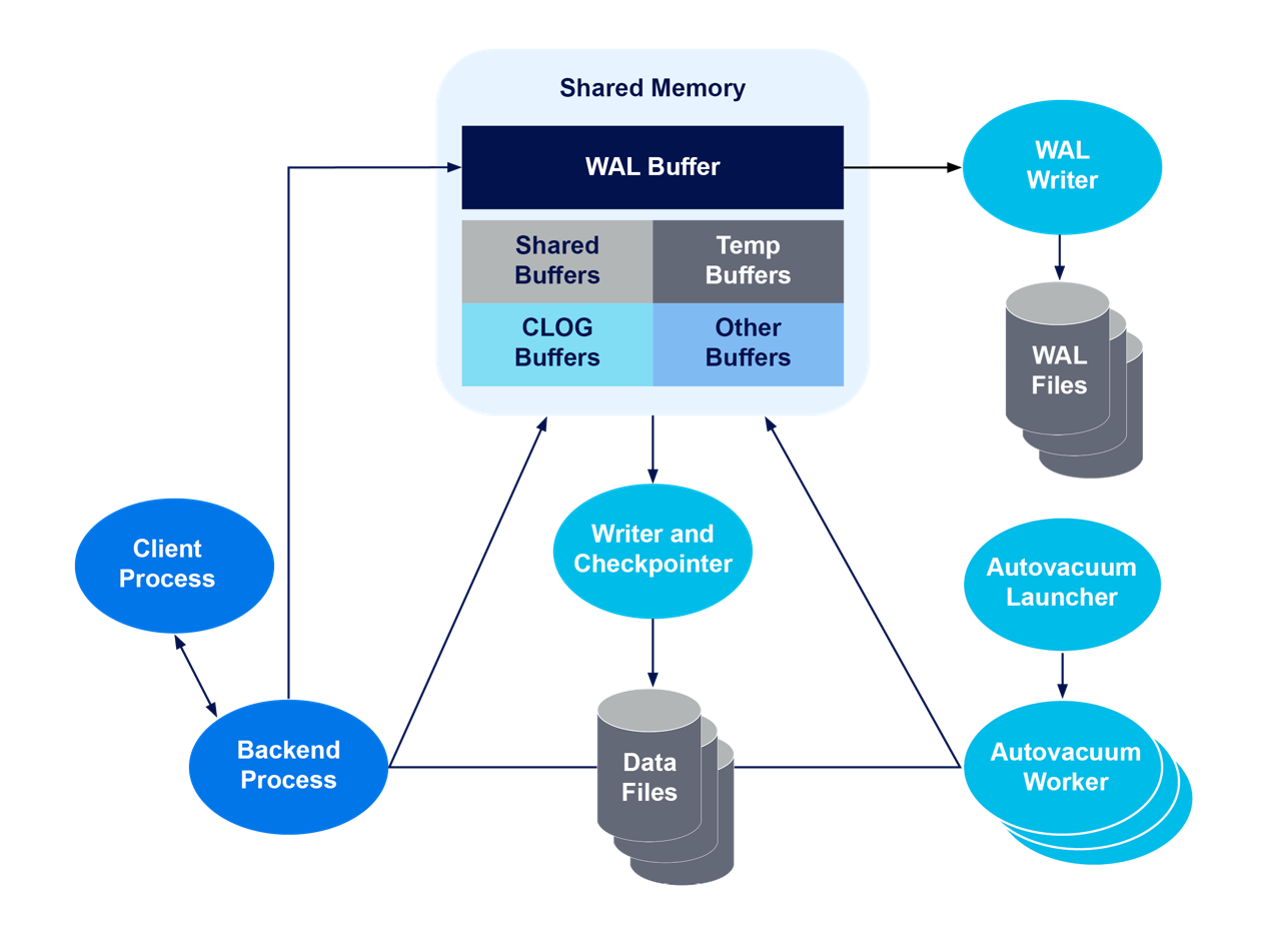

How pg_upgrade Actually Works

pg_upgrade works at the file-system level instead of dumping and reloading your data. It dumps and restores only the schema, then copies, hard-links, or clones your data files from the old PostgreSQL cluster into the new one.

Three modes that matter:

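- Copy mode: Copies all data files (safe but slow, 2x disk space needed)

- Link mode: Hard links files (fast but scary - once the new cluster has been started, the old one is unusable and your only way back is a backup)

- Clone mode: File system clones (reflinks) where supported, e.g. Btrfs, reflink-enabled XFS, or APFS; available since PostgreSQL 12 (best of both worlds)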
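To make the three modes concrete, here is a minimal Python sketch that only builds the pg_upgrade command line for each mode; it runs nothing. The option names (`--old-bindir`, `--new-bindir`, `--old-datadir`, `--new-datadir`, `--link`, `--clone`, `--jobs`, `--check`) are real pg_upgrade options. The helper name `build_pg_upgrade_cmd` and the example paths are hypothetical. Copy mode gets no flag because it is pg_upgrade's default.

```python
from pathlib import Path
from typing import Optional

# Transfer mode -> pg_upgrade flag. Copy is pg_upgrade's default, so it needs
# no flag (older releases have no explicit --copy option anyway).
MODE_FLAGS: dict[str, Optional[str]] = {
    "copy": None,
    "link": "--link",
    "clone": "--clone",
}


def build_pg_upgrade_cmd(
    old_bindir: str,
    new_bindir: str,
    old_datadir: str,
    new_datadir: str,
    mode: str = "copy",
    jobs: int = 1,
    check_only: bool = False,
) -> list[str]:
    """Build (but do not run) a pg_upgrade argv for the chosen transfer mode."""
    if mode not in MODE_FLAGS:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(MODE_FLAGS)}")
    if jobs < 1:
        raise ValueError("jobs must be at least 1")

    cmd = [
        str(Path(new_bindir) / "pg_upgrade"),  # always use the NEW version's pg_upgrade
        "--old-bindir", old_bindir,
        "--new-bindir", new_bindir,
        "--old-datadir", old_datadir,
        "--new-datadir", new_datadir,
        "--jobs", str(jobs),
    ]
    flag = MODE_FLAGS[mode]
    if flag is not None:
        cmd.append(flag)
    if check_only:
        cmd.append("--check")  # run the compatibility checks only; no data is transferred
    return cmd
```

A sensible habit is to build the `check_only=True` variant first and run it (for example with `subprocess.run(cmd, check=True)` as the postgres user, both servers stopped) before doing the real thing.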

Real-World Performance

Our 500GB production database that used to take 14+ hours with dump/restore? pg_upgrade in copy mode: 45 minutes. Link mode: 8 minutes.

Small databases under 50GB typically upgrade in 10-20 minutes with copy mode. Medium databases (50-500GB) take 30 minutes to 2 hours. Large databases benefit massively from link mode but carry the risk.
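One rough way to read those numbers: assume link mode's 8 minutes is mostly fixed overhead (checks plus schema dump/restore), and that copy mode adds time proportional to data size. That's an assumption, not how pg_upgrade documents itself, and real runtimes also depend on object count and storage. Under that model the 500GB figures imply roughly 13.5 GB/min (about 225 MB/s) of copy throughput, and a 50GB database comes out near 11.7 minutes, which sits inside the 10-20 minute range above. The function names are hypothetical.

```python
def fit_overhead_model(size_gb: float, copy_minutes: float, link_minutes: float) -> tuple[float, float]:
    """Return (fixed_overhead_minutes, copy_rate_gb_per_minute).

    Assumes link mode's runtime approximates the fixed overhead and that
    copy mode adds time linear in data size.
    """
    if copy_minutes <= link_minutes:
        raise ValueError("copy mode should take longer than link mode")
    return link_minutes, size_gb / (copy_minutes - link_minutes)


def predict_copy_minutes(size_gb: float, overhead_minutes: float, gb_per_minute: float) -> float:
    """Predicted copy-mode runtime under the same linear model."""
    return overhead_minutes + size_gb / gb_per_minute


overhead, rate = fit_overhead_model(500, copy_minutes=45, link_minutes=8)
print(f"{rate:.1f} GB/min (~{rate * 1000 / 60:.0f} MB/s)")
print(f"50 GB estimate: {predict_copy_minutes(50, overhead, rate):.1f} min")
```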

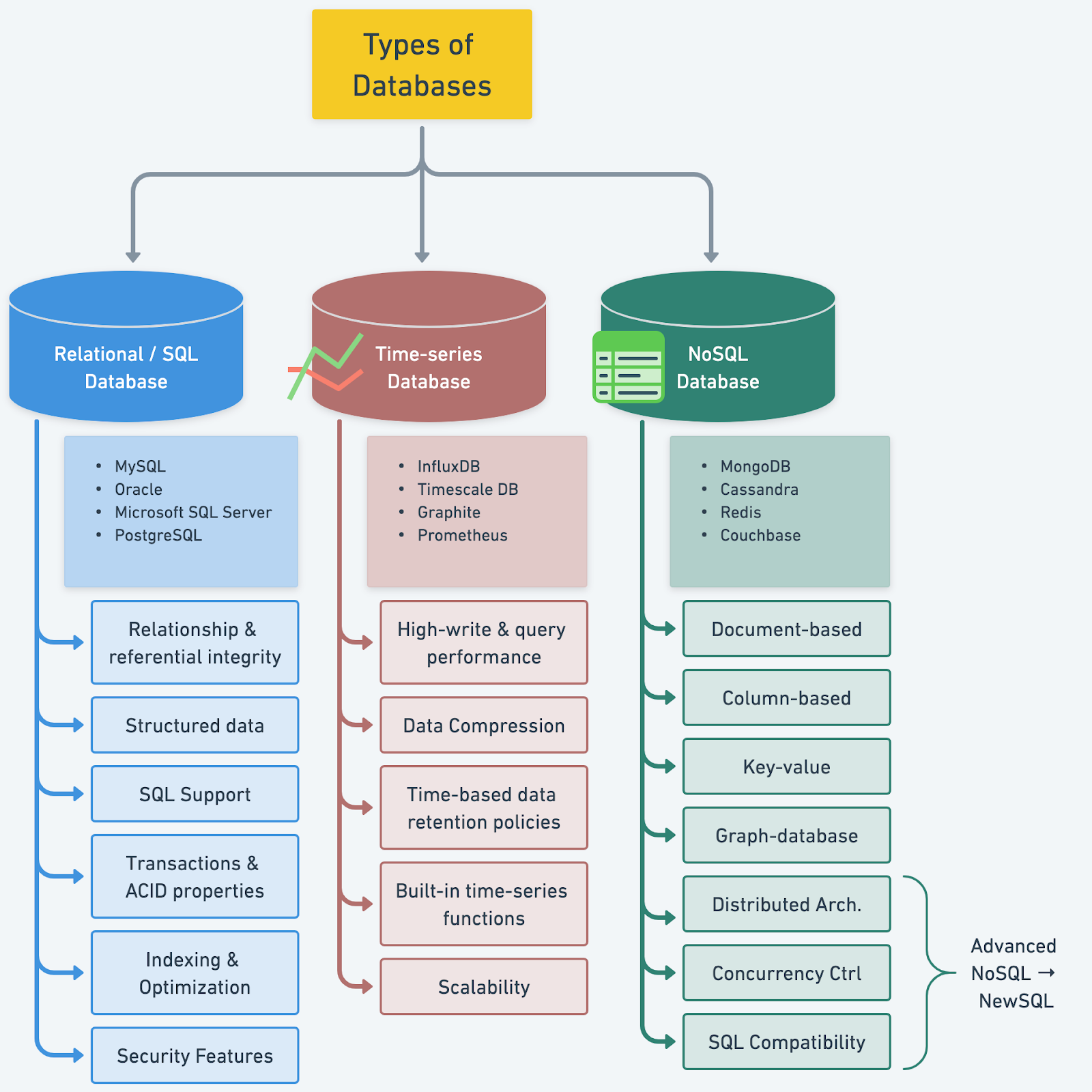

Version Support Reality Check

pg_upgrade supports PostgreSQL 9.2+ to current versions. You can jump multiple major versions in one go - I've successfully upgraded 9.6 directly to 14 without intermediate steps.

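PostgreSQL 18 went GA on September 25, 2025. The `--jobs` flag (available since 9.3, so not exactly new) lets pg_upgrade dump and restore multiple databases and copy or link multiple tablespaces in parallel, which can cut upgrade time roughly in half on clusters with several databases or tablespaces.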
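The pg_upgrade docs suggest starting `--jobs` at the larger of your CPU core count and your tablespace count. A tiny sketch of that rule of thumb, with a hypothetical helper name; how you count tablespaces is up to you:

```python
import os
from typing import Optional


def suggested_jobs(num_tablespaces: int, cpu_count: Optional[int] = None) -> int:
    """Starting value for pg_upgrade --jobs: max(CPU cores, tablespaces)."""
    cores = cpu_count if cpu_count is not None else (os.cpu_count() or 1)
    return max(cores, num_tablespaces, 1)
```

Treat the result as a starting point: parallel copying can still saturate a single disk, so measure in staging.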

Things That Will Bite You

- Extension hell: Third-party extensions are where upgrades go to die. PostGIS upgrades have ruined more of my weekends than I want to admit.

- Authentication failures: pg_upgrade connects to the old and new servers many times. Set up peer authentication in `pg_hba.conf` (or use a `~/.pgpass` file) or you'll be typing passwords for an hour.

- Disk space: Copy mode needs 2x your database size. I've seen upgrades fail at 90% completion because `/var` filled up.

- Replication: Standby servers need special handling. The docs make it sound simple - it's not.
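The disk space trap is cheap to check before a copy-mode run. Here is a minimal Python pre-flight sketch that compares the old data directory's size against free space where the new cluster will live. The function names and the 10% headroom are assumptions of this sketch, not pg_upgrade behavior. It does not follow symlinks, so tablespaces that live outside the data directory (linked from `pg_tblspc`) need their own check on their own file systems.

```python
import os
import shutil


def dir_size_bytes(path: str) -> int:
    """Sum of regular-file sizes under path; symlinks are not followed."""
    total = 0
    for root, _dirs, files in os.walk(path):  # os.walk does not descend into symlinked dirs
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):
                total += os.path.getsize(full)
    return total


def has_room_for_copy_mode(old_datadir: str, new_datadir_parent: str, headroom: float = 0.10) -> bool:
    """True if the target file system can hold a full copy plus some headroom.

    The 10% default headroom is an arbitrary safety margin, not a pg_upgrade rule.
    """
    needed = dir_size_bytes(old_datadir) * (1 + headroom)
    free = shutil.disk_usage(new_datadir_parent).free
    return free >= needed
```

Run it with both servers stopped, since files can appear and disappear under a running cluster.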

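Link mode is fast but unforgiving. Once linking starts, the old and new clusters share the same data files, and as soon as the new cluster has been started, the old one can no longer be used safely. If the upgrade dies before the new cluster has ever been started, the old cluster can usually still be revived. Always test in staging first and have backups. I've had to restore from backups twice when link mode upgrades failed spectacularly.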
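For the "died before the new cluster ever started" case, the pg_upgrade docs describe the manual fix: when linking starts, pg_upgrade appends `.old` to `global/pg_control` in the old data directory so the old cluster can't be started by accident, and removing that suffix makes it usable again. A minimal sketch of that one step (the function name is hypothetical). If the new cluster has been started even once, this does not help; restore from backup.

```python
from pathlib import Path


def reenable_old_cluster(old_datadir: str) -> bool:
    """Undo the pg_control rename pg_upgrade makes when link mode starts linking.

    ONLY safe if the new cluster has never been started. Returns True if the
    rename was undone, False if global/pg_control is already in place.
    """
    global_dir = Path(old_datadir) / "global"
    control = global_dir / "pg_control"
    renamed = global_dir / "pg_control.old"
    if control.exists():
        return False  # linking never started, or this was already undone
    if not renamed.exists():
        raise FileNotFoundError(f"neither {control} nor {renamed} exists")
    renamed.rename(control)
    return True
```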