PowerCenter has been around since 1993, which means it's older than most of the developers who'll be forced to maintain it. The latest version, 10.5.8, released in March 2025, continues this legacy with incremental improvements and security patches. It was born in the era when client-server architectures ruled the enterprise, and it shows.

Architecture That Makes Sense (Sort Of)

The architecture splits into three main pieces: Repository Service, Integration Service, and the client tools. This separation actually works well until one piece decides to shit the bed and take everything else down with it.

Repository Service is where all your metadata lives. It manages the repository, a relational database that holds your mappings, workflows, and version history. When it gets corrupted (and it will), you'll discover your backup strategy wasn't as bulletproof as you believed. The repository grows like a weed and needs regular maintenance, or it'll slow to a crawl.
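Whatever produces your backups (for example, a scheduled `pmrep backup`), the unglamorous part is retention. Here's a minimal sketch of a pruning rule. It assumes backup files follow a made-up naming convention, `repo_<name>_YYYYmmdd_HHMMSS.rep`, which is not an Informatica default, and it only decides which files to delete; it never touches the disk.

```python
from __future__ import annotations

from datetime import datetime
from pathlib import Path
from typing import Optional

# Hypothetical naming convention (an assumption, not an Informatica default):
#   repo_<name>_YYYYmmdd_HHMMSS.rep
TIMESTAMP_FORMAT = "%Y%m%d_%H%M%S"


def parse_backup_time(filename: str) -> Optional[datetime]:
    """Return the timestamp embedded in a backup filename, or None if it doesn't match."""
    path = Path(filename)
    if path.suffix != ".rep":
        return None
    parts = path.stem.split("_")
    if len(parts) < 4 or parts[0] != "repo":
        return None
    try:
        # The last two underscore-separated chunks are the date and the time.
        return datetime.strptime("_".join(parts[-2:]), TIMESTAMP_FORMAT)
    except ValueError:
        return None


def backups_to_delete(filenames: list[str], keep: int) -> list[str]:
    """Keep the `keep` newest well-formed backups; return the rest, oldest first."""
    if keep < 0:
        raise ValueError("keep must be >= 0")
    dated = []
    for name in filenames:
        ts = parse_backup_time(name)
        if ts is not None:  # unrecognised files are never selected for deletion
            dated.append((ts, name))
    dated.sort(reverse=True)  # newest first
    return [name for _, name in sorted(dated[keep:])]


if __name__ == "__main__":
    files = [
        "repo_dev_20250101_020000.rep",
        "repo_dev_20250102_020000.rep",
        "repo_dev_20250103_020000.rep",
        "notes.txt",
    ]
    print(backups_to_delete(files, keep=2))  # ['repo_dev_20250101_020000.rep']
```

Files that don't match the pattern are never selected, so a typo in the naming scheme fails safe. And a backup you've never restored is a hope, not a strategy.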

Integration Service runs your actual ETL jobs. It's where the real work happens and where you'll spend most of your time debugging why Job X that worked fine in dev is now eating all your production memory. The service supports parallel processing, which sounds great until you realize you're limited by database connections and suddenly your "parallel" job is running single-threaded.
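To see why "parallel" collapses into serial, here's a toy model, not PowerCenter's actual scheduler: eight hypothetical partitions each need a database connection, and a `threading.Semaphore` stands in for the database's connection limit. The function name and parameters are invented for illustration.

```python
from __future__ import annotations

import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_partitions(partitions: int, db_connections: int,
                   work_seconds: float = 0.05) -> tuple[int, float]:
    """Toy model: `partitions` pipelines competing for `db_connections` connections.

    Returns (peak number of partitions working at once, elapsed wall-clock seconds).
    """
    connections = threading.Semaphore(db_connections)  # stand-in for the DB's limit
    counter_lock = threading.Lock()
    active = 0
    peak = 0

    def partition_job(_: int) -> None:
        nonlocal active, peak
        with connections:  # blocks until a "connection" is free
            with counter_lock:
                active += 1
                peak = max(peak, active)
            time.sleep(work_seconds)  # pretend to move rows
            with counter_lock:
                active -= 1

    start = time.perf_counter()
    # One thread per partition, so any waiting comes from the semaphore, not the pool.
    with ThreadPoolExecutor(max_workers=partitions) as executor:
        list(executor.map(partition_job, range(partitions)))
    return peak, time.perf_counter() - start


if __name__ == "__main__":
    for conns in (8, 2, 1):
        peak, elapsed = run_partitions(partitions=8, db_connections=conns)
        print(f"{conns} connection(s): peak {peak} partitions at once, {elapsed:.2f}s")
```

With one connection, the peak is one partition and elapsed time is roughly `partitions × work_seconds`, no matter how many threads you throw at it.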

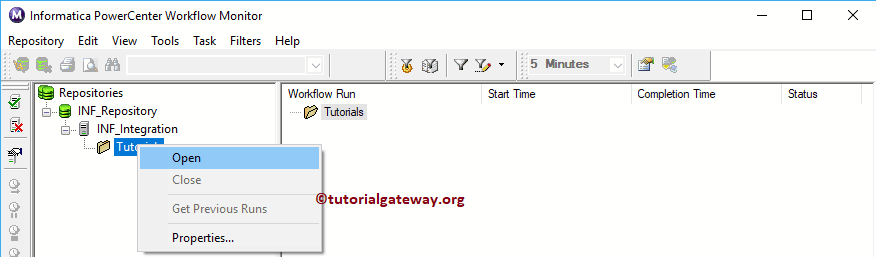

Client Tools include PowerCenter Designer (which crashes if you have too many mappings open), Workflow Manager for orchestration, and Workflow Monitor for watching your jobs fail. These tools were clearly designed by people who never had to use them at 3am during a production issue.

What PowerCenter Actually Does Well

Despite its age and quirks, PowerCenter handles complex transformations that would make modern ETL tools cry. Need to join data from a mainframe, an Oracle database from 2003, and a modern REST API? PowerCenter probably has connectors for all of them.

The 300+ connector claim is real, though half of them are for systems you forgot existed. But when you need to extract data from that AS/400 system running your payroll, PowerCenter's your friend.

Metadata management is actually solid. PowerCenter tracks lineage and dependencies, and supports impact analysis, better than most modern tools. This becomes crucial when business users ask "where does this field come from?" and you can answer without digging through code.
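Lineage and impact analysis are the same graph walked in opposite directions. The sketch below is not PowerCenter's repository model or API; it uses a small, invented list of field-to-field edges to show that "where does this come from?" is an upstream walk and "what breaks if I change this?" is a downstream walk.

```python
from __future__ import annotations

from collections import defaultdict, deque

# Hypothetical field-level lineage edges: (source_field, target_field).
# The names are invented; a real tool would export these from repository metadata.
EDGES = [
    ("AS400.PAYROLL.EMP_ID", "STG_EMP.EMP_ID"),
    ("ORA.HR_EMPLOYEES.EMPLOYEE_ID", "STG_EMP.EMP_ID"),
    ("STG_EMP.EMP_ID", "DW.DIM_EMPLOYEE.EMPLOYEE_KEY"),
    ("DW.DIM_EMPLOYEE.EMPLOYEE_KEY", "DW.FACT_PAY.EMPLOYEE_KEY"),
]


def build_graphs(edges):
    """Index edges both ways: upstream answers lineage, downstream answers impact."""
    upstream: dict[str, set[str]] = defaultdict(set)
    downstream: dict[str, set[str]] = defaultdict(set)
    for src, dst in edges:
        downstream[src].add(dst)
        upstream[dst].add(src)
    return upstream, downstream


def reachable(graph: dict[str, set[str]], start: str) -> set[str]:
    """Breadth-first walk returning every field reachable from `start` (excluding it)."""
    seen: set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)  # guard against cycles that loop back to the start
    return seen


if __name__ == "__main__":
    upstream, downstream = build_graphs(EDGES)
    # Lineage: "where does this field come from?"
    print(sorted(reachable(upstream, "DW.FACT_PAY.EMPLOYEE_KEY")))
    # Impact analysis: "what breaks if I change this staging column?"
    print(sorted(reachable(downstream, "STG_EMP.EMP_ID")))
```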

The Performance Reality Check

PowerCenter's performance depends entirely on understanding its quirks:

- Pushdown optimization works great when it works, but PowerCenter sometimes decides your optimized query needs "improvement"

- Session logs grow forever unless you configure rotation properly

- Lookup transformations can eat all your memory if you're not careful with cache sizing

- Parallel processing hits database connection limits faster than you'd expect
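For the lookup cache point, a back-of-the-envelope estimate before the session runs beats finding out in production. A cached lookup keeps the condition columns in the index cache and the other connected output columns in the data cache; the sketch below multiplies row counts by assumed column widths. The `per_row_overhead` default is a placeholder assumption, not Informatica's published figure, and all names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LookupColumn:
    name: str
    width_bytes: int     # assumed average stored width of one value
    in_condition: bool   # True if the column is used in the lookup condition


def estimate_lookup_cache(rows: int, columns: list[LookupColumn],
                          per_row_overhead: int = 16) -> tuple[int, int]:
    """Back-of-the-envelope (index_bytes, data_bytes) for a cached lookup.

    Condition columns go to the index cache; the remaining output columns go to
    the data cache. `per_row_overhead` is a placeholder assumption, not
    Informatica's published figure.
    """
    index_width = sum(c.width_bytes for c in columns if c.in_condition)
    data_width = sum(c.width_bytes for c in columns if not c.in_condition)
    index_bytes = rows * (index_width + per_row_overhead)
    data_bytes = rows * (data_width + per_row_overhead) if data_width else 0
    return index_bytes, data_bytes


def fits_in_memory(estimate: tuple[int, int], index_cache_bytes: int,
                   data_cache_bytes: int) -> bool:
    """True if both estimated caches fit in the cache sizes you've configured."""
    index_bytes, data_bytes = estimate
    return index_bytes <= index_cache_bytes and data_bytes <= data_cache_bytes


if __name__ == "__main__":
    cols = [
        LookupColumn("EMP_ID", 8, in_condition=True),
        LookupColumn("EMP_NAME", 50, in_condition=False),
        LookupColumn("DEPT_CODE", 10, in_condition=False),
    ]
    est = estimate_lookup_cache(1_000_000, cols)
    print(est)  # (24000000, 76000000) under these assumptions
    print(fits_in_memory(est, index_cache_bytes=16 * 2**20, data_cache_bytes=64 * 2**20))  # False
```

When a cache outgrows its configured size, PowerCenter pages the overflow to cache files on disk, which is where "fast lookup" quietly turns into "slow lookup".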

Migration to Cloud (Good Luck)

Informatica keeps pushing cloud migration to its Intelligent Data Management Cloud (IDMC) platform. The automated migration tools work for simple mappings, but anything complex needs manual rework. That "100% conversion" rate they advertise? It means the tool can parse your mappings, not that they'll actually work in the cloud.
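Parsing isn't running, and running isn't matching. One low-tech check is to feed the same input through the PowerCenter mapping and its converted version, export both outputs, and compare them as multisets of rows. This sketch assumes you already have both outputs as rows in Python (how you export them is up to you); it is not an Informatica tool, and the function name is hypothetical.

```python
from __future__ import annotations

from collections import Counter
from typing import Iterable, Sequence


def reconcile(legacy_rows: Iterable[Sequence], migrated_rows: Iterable[Sequence]) -> dict:
    """Compare two result sets as multisets of rows; row order is ignored.

    Duplicates matter: a row emitted twice on one side and once on the other
    shows up as a difference.
    """
    legacy = Counter(tuple(row) for row in legacy_rows)
    migrated = Counter(tuple(row) for row in migrated_rows)
    return {
        "legacy_count": sum(legacy.values()),
        "migrated_count": sum(migrated.values()),
        # Counter subtraction keeps only positive counts, i.e. true surpluses.
        "missing_in_migrated": legacy - migrated,
        "unexpected_in_migrated": migrated - legacy,
        "match": legacy == migrated,
    }


if __name__ == "__main__":
    legacy = [(1, "ALICE", "100.00"), (2, "BOB", "250.50"), (2, "BOB", "250.50")]
    migrated = [(2, "BOB", "250.50"), (1, "ALICE", "100.00"), (3, "CAROL", "75.00")]
    report = reconcile(legacy, migrated)
    print(report["match"])                   # False
    print(report["missing_in_migrated"])     # one extra BOB row on the legacy side
    print(report["unexpected_in_migrated"])  # CAROL only exists after migration
```

Normalize before you compare (trailing spaces in CHAR columns, decimal precision, date formats), or every row will "mismatch" and the report tells you nothing.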

Many enterprises end up running hybrid deployments: PowerCenter stays on-premises for legacy system integration while newer workloads slowly move to cloud-native tools. This works until you're maintaining two different ETL platforms and explaining to management why your data integration costs doubled.

Given these realities, many organizations start evaluating alternatives. But switching from PowerCenter isn't straightforward: the migration complexity depends heavily on your specific use case, data volumes, and tolerance for risk.