So you read the marketing bullshit about "seamless integration" and "enterprise-grade scalability." Cool. Let me tell you what actually happens when you try to connect these two beasts in production.

The Setup That Actually Works (After 47 Failed Attempts)

First, forget everything you read about Cassandra 5.0. It's barely out of beta and will eat your data. Stick with Cassandra 4.1.6 - anything below 4.1.4 has memory leaks that'll crash your containers. I learned this after burning through $8,000 in AWS compute credits debugging phantom OOM errors.

For Kafka, 3.6.1 is your safest bet. The 4.x series looks shiny but breaks randomly with connection pooling issues that took down our prod environment for 6 hours on a Tuesday. The Confluent performance tuning guide covers production deployment best practices.

Cassandra's Ring Architecture: The Foundation of Everything

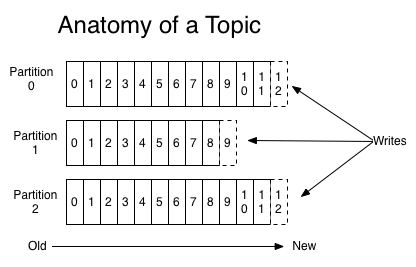

Cassandra organizes nodes in a ring topology where each node owns a range of data based on consistent hashing. This architecture is why Cassandra scales horizontally - add more nodes, get more capacity. It's also why everything can break in interesting ways when nodes go down.
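If the ring idea feels abstract, here is a minimal sketch of how ownership falls out of consistent hashing. It is a toy, not Cassandra's implementation: it assumes one token per node (real clusters use many virtual nodes), uses MD5 instead of Cassandra's default Murmur3 partitioner, and the `Ring` class, `token_for` helper, and node names are made up for illustration.

```python
import bisect
import hashlib


def token_for(key: str) -> int:
    """Map a key to a ring position. MD5 is a stand-in, not Cassandra's partitioner."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:8], "big")


class Ring:
    def __init__(self, nodes):
        # Assumption: one token per node. Real clusters assign many vnodes per node.
        self._entries = sorted((token_for(n), n) for n in nodes)
        self._tokens = [t for t, _ in self._entries]

    def replicas(self, key: str, rf: int = 3):
        """Walk clockwise from the key's token and collect up to rf nodes."""
        # First node whose token is >= the key's token owns the key.
        start = bisect.bisect_left(self._tokens, token_for(key))
        count = min(rf, len(self._entries))
        # The modulo wraps around the end of the ring.
        return [self._entries[(start + i) % len(self._entries)][1] for i in range(count)]


ring = Ring(["node-a", "node-b", "node-c", "node-d"])
owners = ring.replicas("order:1234", rf=3)
```

The same walk explains the failure mode: when a node dies, its neighbours on the ring are the ones that pick up the slack, so load does not spread evenly across the survivors.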

The Three Patterns That Don't Completely Suck

Event Sourcing (The One That Actually Works)

Store everything as events in Kafka, replay to rebuild Cassandra state. Sounds simple, right? Wrong. The gotcha: Kafka Connect memory limits. Give each Connect worker at least 4GB of heap (KAFKA_HEAP_OPTS="-Xms4g -Xmx4g" is the usual knob) or watch java.lang.OutOfMemoryError: Java heap space kill your connectors every 3 hours.
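The replay idea itself fits in a few lines. This is a rough sketch under assumptions, not a connector: the `Event` shape, the `sku` and `delta` fields, and the `replay` function are hypothetical, the events are assumed to come from a single Kafka partition (offsets only order events within one partition), and the resulting dict stands in for whatever you would actually write to Cassandra.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    offset: int  # position in the Kafka partition
    sku: str     # hypothetical partition key
    delta: int   # stock change: positive for restock, negative for sale


def replay(events, state=None, last_offset=-1):
    """Fold events into a {sku: quantity} view, skipping offsets already applied."""
    state = dict(state or {})
    for ev in sorted(events, key=lambda e: e.offset):
        if ev.offset <= last_offset:
            continue  # already applied, so redelivery is harmless
        state[ev.sku] = state.get(ev.sku, 0) + ev.delta
        last_offset = ev.offset
    return state, last_offset


log = [Event(0, "widget", 50), Event(1, "widget", -3), Event(2, "gadget", 10)]
state, offset = replay(log)

# Redelivering the same events after a connector restart changes nothing.
state_again, offset_again = replay(log, state, offset)
```

Tracking the last applied offset next to the state is the design choice that matters here: connectors restart (see the OOM above), and without it every restart double-counts.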

CQRS with Separate Read/Write Models

This works if you enjoy debugging eventual consistency issues at 2AM. Pro tip: your read model will always be behind your write model. Plan for it or your customers will hate you. I learned this when our inventory system showed 47 items in stock while we actually had zero.
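Here is a toy version of that inventory bug, assuming an in-memory log instead of Kafka and a dict instead of a Cassandra table. The `WriteModel` and `ReadModel` classes are illustrative only; in a real system the read side cannot peek at the write log and you would measure lag from consumer offsets instead.

```python
class WriteModel:
    def __init__(self):
        self.log = []  # append-only list of accepted (sku, delta) changes

    def record(self, sku, delta):
        self.log.append((sku, delta))
        return len(self.log)  # version after this write


class ReadModel:
    def __init__(self):
        self.version = 0  # how many log entries have been projected so far
        self.stock = {}

    def catch_up(self, write_model, max_entries=None):
        """Project up to max_entries new log entries (None means all of them)."""
        end = len(write_model.log)
        if max_entries is not None:
            end = min(end, self.version + max_entries)
        for sku, delta in write_model.log[self.version:end]:
            self.stock[sku] = self.stock.get(sku, 0) + delta
        self.version = end

    def query(self, sku, write_model):
        """Return the quantity plus how many writes the answer is missing."""
        return self.stock.get(sku, 0), len(write_model.log) - self.version


writes, reads = WriteModel(), ReadModel()
writes.record("widget", 47)
reads.catch_up(writes)
writes.record("widget", -47)  # sold out, but not projected yet

stale_qty, lag = reads.query("widget", writes)
reads.catch_up(writes)
fresh_qty, lag_after = reads.query("widget", writes)
```

Returning the lag alongside the answer is the "plan for it" part: the caller can decide whether a stale count is acceptable or whether checkout should confirm against the write side.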

Change Data Capture (The Nightmare)

Cassandra's CDC is broken by design. It drops files randomly, especially under memory pressure. The official CDC documentation won't tell you this, but DataStax's CDC patterns guide hints at the problems. Don't hand-roll a reader for the raw CDC commit logs; use Debezium's Cassandra connector instead. It reads the same CDC logs under the hood, and yes, it's another moving part, but at least it has actual error handling. The Instaclustr folks agree - CDC is where projects go to die.

Resource Reality Check

Forget what the documentation says. Here's what you actually need:

- 8GB RAM minimum per Cassandra node (16GB if you don't want to debug GC pauses) - see the hardware choices guide

- 4GB heap for each Kafka Connect worker - memory tuning strategies explain why

- At least 3 nodes for Cassandra (replication factor 3, because single points of failure are career-ending)
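Why 3 and not 2? The quorum arithmetic makes it obvious. A small sketch, with hypothetical helper names:

```python
def quorum(replication_factor: int) -> int:
    """Replicas that must respond for a QUORUM read or write."""
    return replication_factor // 2 + 1


def tolerated_failures(replication_factor: int) -> int:
    """Replicas that can be down while QUORUM requests still succeed."""
    return replication_factor - quorum(replication_factor)


for rf in (1, 2, 3, 5):
    print(f"RF={rf}: quorum={quorum(rf)}, can lose {tolerated_failures(rf)} replica(s)")
```

With replication factor 2 a quorum is still 2, so losing a single replica blocks QUORUM requests. Replication factor 3 is the smallest setting that survives one node going down.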

AWS cost? About $2,500/month for a basic 3-node Cassandra cluster with decent instances (r5.xlarge). Add Kafka and you're looking at $4,000+/month. Your manager will love that.

The Gotchas Nobody Mentions (But Will Ruin Your Weekend)

Docker Memory Limits: Set container memory to 1.5x your heap size or the kernel OOM killer will take out your containers the moment they cross the limit. This bit me hard - containers showing "healthy" in orchestrator dashboards while randomly dying with exit code 137 (128 + 9, meaning SIGKILL). The extra 50% accounts for off-heap structures, code cache, and native memory allocations that heap metrics don't show.
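The sizing rule is plain arithmetic. A minimal sketch, assuming the 1.5x factor from above is a rule of thumb and not a guarantee; the helper names are made up, and `--memory` is the standard `docker run` flag:

```python
import math


def container_limit_mib(heap_gib: float, overhead_factor: float = 1.5) -> int:
    """Container memory limit in MiB for a given JVM heap size in GiB."""
    return math.ceil(heap_gib * 1024 * overhead_factor)


def killed_by_signal(exit_code: int):
    """Exit codes above 128 mean the process died from signal (exit_code - 128)."""
    return exit_code - 128 if exit_code > 128 else None


limit = container_limit_mib(4)       # 4 GiB heap for a Kafka Connect worker
docker_flag = f"--memory={limit}m"   # pass this to docker run
signal_number = killed_by_signal(137)  # 9 is SIGKILL on Linux
```

If your workload leans heavily on off-heap memory, treat 1.5x as a starting point and measure actual resident memory before settling on a limit.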

Network Partitions: When AWS has "intermittent connectivity issues" (their euphemism for shit breaking), Cassandra doesn't recover gracefully. You'll spend hours running nodetool repair across your cluster.

Compaction Storms: Your disk I/O will spike randomly when Cassandra decides to compact everything at once. Set concurrent_compactors: 2 in cassandra.yaml or your alerts will go off like Christmas lights. The compaction documentation explains the strategies, while Strimzi's broker tuning guide covers similar issues on the Kafka side.

Look, this setup can work. Companies like Netflix and LinkedIn run it successfully. But they have teams of engineers whose entire job is keeping this shit running. If you're a 5-person startup, maybe just use PostgreSQL and Redis.

The bottom line: understand what you're getting into before you commit. This integration will test your monitoring, alerting, and incident response capabilities. Make sure you have the team and budget to support it, or you'll end up as another cautionary tale about premature optimization.

Once you've absorbed this reality check and still want to proceed (masochist), the next section covers the actual implementation details - the configuration settings, deployment patterns, and operational practices that separate working systems from expensive disasters.