Three separate line items mean three different ways your bill can explode: compute credits, storage, and data transfer. Snowflake bills them separately, so when costs blow up, it takes forever to figure out which one is actually killing you.

Credits: Snowflake's Monopoly Money

Credits are how Snowflake charges for compute. Every warehouse, every Snowpipe job, every materialized view refresh burns credits. The price per credit changes based on which edition you picked and where your data lives:

What Credits Cost (ballpark, varies by region)

- Standard: Around $2-2.50 per credit (cheapest option, pretty basic features)

- Enterprise: Usually $2.80-3.20 per credit (most teams end up here)

- Business Critical: Something like $3.80-4.20 per credit (when compliance is non-negotiable)

- VPS (Virtual Private Snowflake): "Contact sales" (translation: they'll quote whatever they think you'll pay)

US regions are cheapest. International deployments cost maybe 40-60% more depending on where you are because Snowflake knows you're trapped once you've migrated. Check the official consumption table for exact regional pricing.

Here's the math that'll hurt: a Medium warehouse burns 4 credits per hour - let's say you're paying around $3 per credit in Enterprise - so roughly $12 hourly. Left running 24/7 (8,760 hours a year), that's roughly $105K annually just for compute. And that's before storage, before any extra features, before someone accidentally runs a query that scans your entire fact table.
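If you want to rerun that math with your own rate, here's a minimal Python sketch. The $3.00 per credit is the same illustrative Enterprise figure used above, not a quoted price, and the year is assumed to be 8,760 hours (non-leap, warehouse never suspending).

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours: non-leap year, warehouse never suspends

def annual_compute_cost(credits_per_hour: float, price_per_credit: float) -> float:
    """Compute-only cost of running one warehouse nonstop for a year."""
    return credits_per_hour * price_per_credit * HOURS_PER_YEAR

# Medium warehouse: 4 credits/hour at an assumed ~$3.00 per credit
medium = annual_compute_cost(credits_per_hour=4, price_per_credit=3.00)
print(f"Medium, 24/7: ${medium:,.0f} per year")  # Medium, 24/7: $105,120 per year
```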

The 60-Second Minimum Billing Thing

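Here's where Snowflake really gets you: they bill per second, but every time a warehouse starts or resumes, you pay for at least 60 seconds. Run a 5-second query on a suspended warehouse? You pay for 60 seconds. Let it auto-suspend and have another query wake it back up? Another full minute charge.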

I've seen teams burn maybe 35-45% of their budget on this. Dashboard refreshes, health checks, schema queries - anything that wakes a warehouse gets rounded up to a full minute. That can easily add thousands a month. Their billing docs actually explain this pretty clearly, which is... something.

Pro tip: Batch your quick queries so they share one warehouse session, or you'll get destroyed by the 60-second minimum. That SELECT COUNT(*) FROM users that takes 2 seconds? If it's the only thing waking the warehouse, you're paying for 60 seconds of Large warehouse time.
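A rough way to see what batching buys you, as a Python sketch. It assumes each session that wakes a suspended warehouse is billed for at least 60 seconds and, to keep things simple, ignores the idle time a warehouse racks up before auto-suspend kicks in, so a real bill would be higher. The query runtimes are made up.

```python
MIN_BILLED_SECONDS = 60  # minimum charged each time a warehouse starts or resumes

def billed_seconds(sessions):
    """Total billed seconds when each session wakes a suspended warehouse.

    Simplification: ignores idle time before auto-suspend.
    """
    return sum(max(MIN_BILLED_SECONDS, runtime) for runtime in sessions)

def credits(seconds, credits_per_hour):
    """Convert billed seconds into credits for a given warehouse size."""
    return seconds / 3600 * credits_per_hour

queries = [2, 5, 3, 4, 6]  # hypothetical quick queries, runtimes in seconds
LARGE = 8                  # Large warehouse: 8 credits/hour

# Every query wakes the warehouse on its own: 5 x 60 s minimum
separate = billed_seconds(queries)
# All five run back to back in one session: 20 s of work, rounded up to 60 s
batched = billed_seconds([sum(queries)])

print(separate, batched)  # 300 60
print(f"{credits(separate, LARGE):.3f} vs {credits(batched, LARGE):.3f} credits")  # 0.667 vs 0.133 credits
```

Same work, one fifth of the bill, which is the whole argument for batching.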

Warehouse Sizing: Where Everyone Screws Up

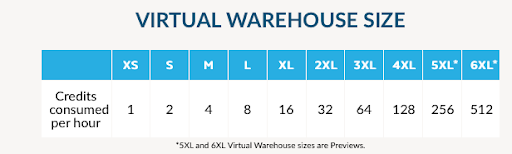

Each warehouse size doubles the credits of the one below it:

| Size | Credits/Hour | Monthly Cost (24/7) @ ~$3/credit |
|---|---|---|
| X-Small | 1 | ~$2,190 |
| Small | 2 | ~$4,380 |
| Medium | 4 | ~$8,760 |
| Large | 8 | ~$17,520 |
| X-Large | 16 | ~$35,040 |
| 2X-Large | 32 | ~$70,080 |
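The table follows a simple doubling rule, sketched below in Python. The $3.00 credit price and the 730-hour month (8,760 hours / 12, running nonstop) are assumptions for illustration, not Snowflake's published figures for your region.

```python
SIZES = ["X-Small", "Small", "Medium", "Large", "X-Large", "2X-Large"]
HOURS_PER_MONTH = 730    # 8,760 hours / 12, warehouse running nonstop
PRICE_PER_CREDIT = 3.00  # illustrative Enterprise-ish rate, not a quote

def credits_per_hour(size: str) -> int:
    """Each step up the size ladder doubles the hourly credit burn."""
    return 2 ** SIZES.index(size)

def monthly_cost(size: str) -> float:
    """Dollar cost of running one warehouse of this size all month."""
    return credits_per_hour(size) * HOURS_PER_MONTH * PRICE_PER_CREDIT

for size in SIZES:
    print(f"{size:<9} {credits_per_hour(size):>3} credits/hr  ~${monthly_cost(size):,.0f}/month")
```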

The "better safe than sorry" tax: Everyone picks Medium or Large because nobody wants to be the person whose queries are slow. I worked with this one company that had a dashboard warehouse running on Large for like 8 months - queries took maybe 12-15 seconds. Downsized to Small and they went to around 18-22 seconds but costs dropped something like 70-75%. Users barely noticed the difference.

Nobody wants to get blamed for slow dashboards, so teams just throw money at the problem. Shaving off those few seconds can cost thousands annually.
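Here's that downsizing story as arithmetic, in a short Python sketch. It takes the upper ends of the runtimes above (15 s on Large, 22 s on Small) as assumptions. If the warehouse is up for the same hours on either size, savings track the hourly credit rate; counting only the time each query actually runs, the slower Small still comes out well ahead.

```python
def rate_savings_pct(old_credits_per_hour, new_credits_per_hour):
    """Savings when the warehouse runs the same number of hours on either size."""
    return (1 - new_credits_per_hour / old_credits_per_hour) * 100

def query_credits(credits_per_hour, runtime_seconds):
    """Credits consumed while a single query is executing."""
    return credits_per_hour * runtime_seconds / 3600

LARGE, SMALL = 8, 2  # credits per hour

always_on = rate_savings_pct(LARGE, SMALL)
per_query = (1 - query_credits(SMALL, 22) / query_credits(LARGE, 15)) * 100

print(f"always-on: {always_on:.0f}% cheaper")  # always-on: 75% cheaper
print(f"per query: {per_query:.0f}% cheaper")  # per query: 63% cheaper
```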

Gen2 Warehouses: Worth the 25% Markup

Gen2 warehouses cost 25% more but run faster for most analytics workloads - maybe 1.5x faster. Sometimes the math works:

- Gen1 Large: 8 credits/hour × $3.00 = $24.00/hour, so a 20-minute query costs about $8.00

- Gen2 Large: 10 credits/hour × $3.00 = $30.00/hour, but the same query finishes in 12 minutes for about $6.00

You save time and your users stop complaining about slow dashboards.
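Warehouse credit figures are hourly burn rates, so what a single query costs depends on how long it runs. This Python sketch works that out, assuming Gen2 Large burns 10 credits/hour (the 25% markup described above) and the illustrative $3.00 credit price. It also shows the break-even point: with a 25% higher rate, Gen2 has to be at least 1.25x faster just to cost the same.

```python
PRICE_PER_CREDIT = 3.00  # illustrative rate, not a quote

def query_cost(credits_per_hour, runtime_minutes, price=PRICE_PER_CREDIT):
    """Dollar cost of one query that keeps a warehouse busy for runtime_minutes."""
    return credits_per_hour * price * runtime_minutes / 60

GEN1_LARGE = 8   # credits/hour
GEN2_LARGE = 10  # credits/hour, assuming the 25% markup

gen1 = query_cost(GEN1_LARGE, 20)  # 20-minute run on Gen1
gen2 = query_cost(GEN2_LARGE, 12)  # same job, 12 minutes on Gen2

# Gen2 only saves money if its speedup beats its price markup
break_even_speedup = GEN2_LARGE / GEN1_LARGE

print(f"Gen1 ${gen1:.2f}, Gen2 ${gen2:.2f}, break-even speedup {break_even_speedup}x")
# Gen1 $8.00, Gen2 $6.00, break-even speedup 1.25x
```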

The catch: Gen2 memory allocation is weird compared to Gen1. Had this ETL job that worked fine on Gen1 Medium - switched to Gen2 and it started throwing Out of memory: Warehouse ran out of memory during execution. Had to bump it up to Large to make it work, which basically ate all the performance savings. Definitely test your workloads before migrating or you'll regret it. Gen2 docs explain the memory differences if you're into reading technical specs.

Storage: The $23/TB Lie

Storage starts at $23/TB per month, but that's just the beginning. Here's what actually counts toward your bill:

Everything That Eats Storage

- Your actual tables (compressed, so that's nice)

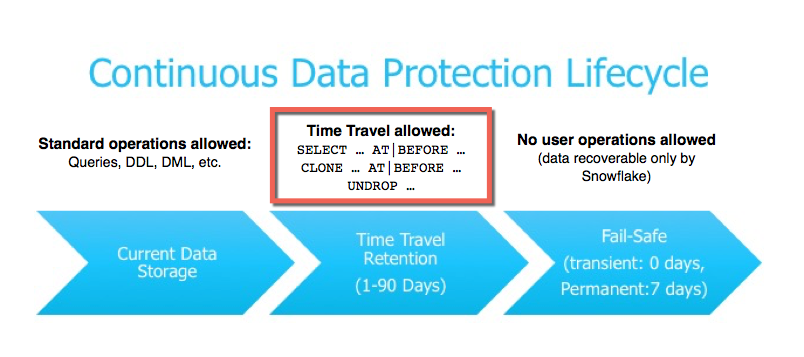

- Time Travel (historical versions for 1-90 days)

- Fail-safe (7 days of disaster recovery after Time Travel)

- Clones (zero-copy until you modify data, then it's real storage)

- Staged files (data sitting in Snowflake before loading)

- Materialized views (yeah, they take space too)

The good news: Snowflake compresses data 3:1 to 5:1. Your 30TB raw dataset might only cost you for 6-10TB.

The bad news: Time Travel can triple your storage costs. Some genius set 90-day Time Travel on dev tables "for safety." It burned $8-9K monthly for 6 months before anyone noticed. Nobody ever used the Time Travel data. Not once. Check storage pricing and Time Travel config before this happens to you.
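To see how retention settings move the bill, here's a deliberately simplified Python model. It assumes a steady daily churn (data updated or deleted each day, measured after compression) that sits in Time Travel for the configured number of days and then in Fail-safe for 7 more on permanent tables, priced at the $23/TB headline rate. Real churn is lumpier, and the 30 TB / 3:1 / 0.2 TB-per-day inputs are invented for illustration.

```python
PRICE_PER_TB_MONTH = 23.00  # headline storage rate quoted above
FAIL_SAFE_DAYS = 7          # applies to permanent tables only

def billed_tb(raw_tb, compression_ratio, daily_churn_tb, time_travel_days, permanent=True):
    """Steady-state billed storage (hypothetical model): active + Time Travel + Fail-safe."""
    active = raw_tb / compression_ratio
    time_travel = daily_churn_tb * time_travel_days
    fail_safe = daily_churn_tb * FAIL_SAFE_DAYS if permanent else 0.0
    return active + time_travel + fail_safe

# Hypothetical table: 30 TB raw, 3:1 compression, 0.2 TB of changed data per day
for days in (1, 90):
    tb = billed_tb(30, 3, 0.2, days)
    print(f"{days:>2}-day Time Travel: {tb:.1f} TB, ~${tb * PRICE_PER_TB_MONTH:,.0f}/month")
```

Same data, but 90-day retention on a table with steady churn comes out to roughly 2.5x the storage bill in this model.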

Serverless Features: The Silent Budget Killers

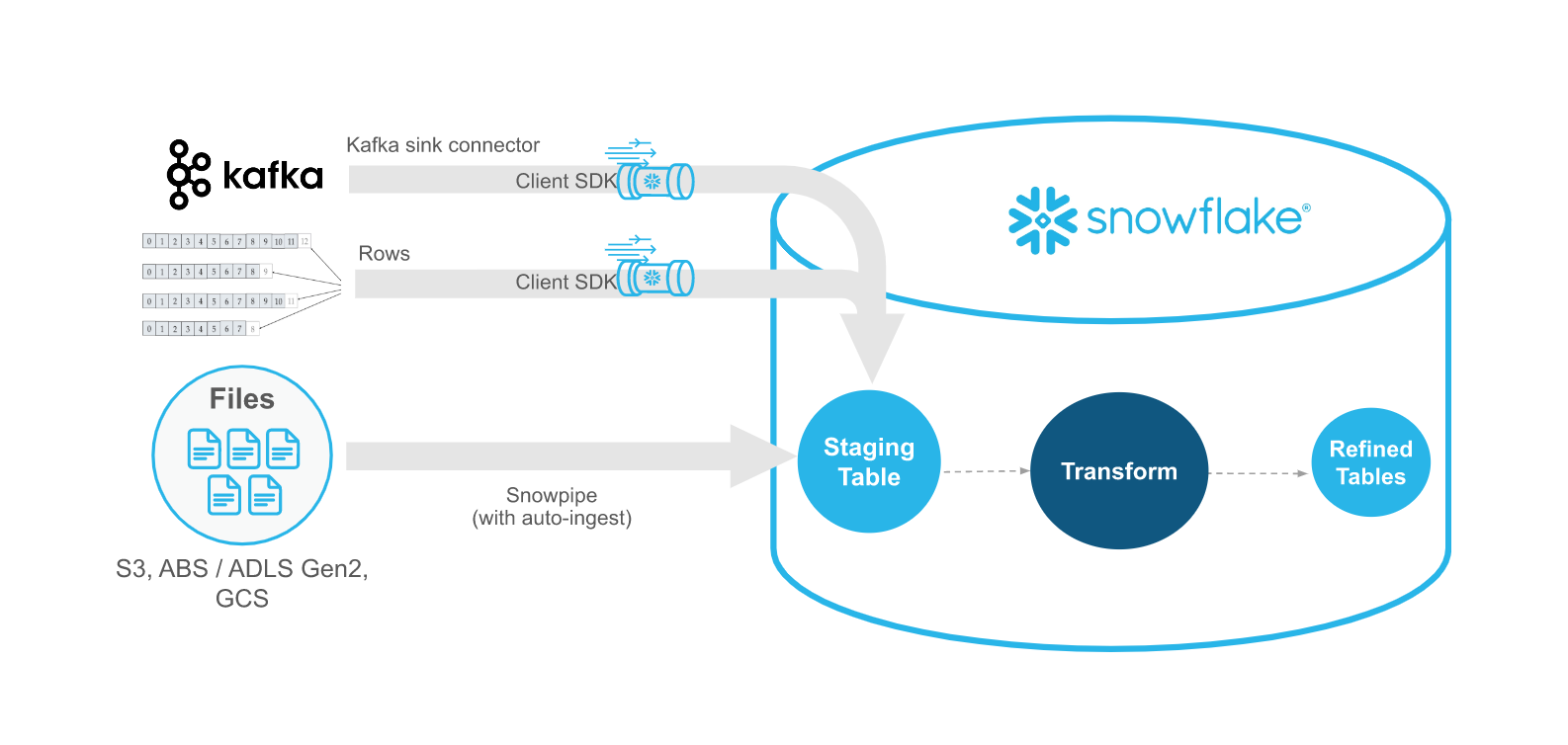

Unlike warehouses, which shut off when idle, serverless features run in the background around the clock once you enable them, burning credits whenever there's work to do:

What These Features Actually Cost

- Snowpipe: 1.25x credit multiplier + 0.06 credits per 1,000 files

- Auto-clustering: 2x credit multiplier (runs constantly on clustered tables)

- Materialized views: 2x credit multiplier for refreshes

- Search optimization: 2x credit multiplier (indexes everything continuously)

- Replication: 2x credit multiplier for copying data around
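A quick Python sketch of how those multipliers stack up, taking the list above at face value and treating each multiplier as applying to the compute credits the background work would otherwise need. The function name and inputs are hypothetical, and Snowflake revises its consumption table, so check current rates before budgeting with this.

```python
# Multipliers as listed above; verify against the current consumption table
MULTIPLIERS = {
    "snowpipe": 1.25,
    "auto_clustering": 2.0,
    "materialized_views": 2.0,
    "search_optimization": 2.0,
    "replication": 2.0,
}
SNOWPIPE_CREDITS_PER_1000_FILES = 0.06

def serverless_credits(feature, compute_credits, files=0):
    """Hypothetical estimate: base compute credits times the feature's multiplier,
    plus Snowpipe's per-file overhead."""
    total = compute_credits * MULTIPLIERS[feature]
    if feature == "snowpipe":
        total += files / 1000 * SNOWPIPE_CREDITS_PER_1000_FILES
    return total

# 100 credits of loading work spread across 2 million small files
print(serverless_credits("snowpipe", 100, files=2_000_000))
# 100 credits of reclustering work
print(serverless_credits("auto_clustering", 100))
```

With millions of tiny files, the per-file charge can rival the compute itself.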

Real example: Worked with a team that turned on auto-clustering for a 2TB fact table that got queried maybe twice a week. Thing was reclustering nonstop and cost them something like $28-32K annually to optimize queries worth maybe $200. When I tried turning it off, their deployment pipeline broke because someone had hardcoded clustering keys into the schema migrations. Took like 3 days to fix the deployments just to save money on a feature they barely used.

These features easily eat 15-25% of your total bill, and most teams have zero visibility into what's running in the background. Resource monitors only cover warehouses, so use the ACCOUNT_USAGE views (METERING_HISTORY breaks credits down by service type) to track what's actually consuming credits. Snowpipe pricing and clustering costs are documented if you want the gory details.
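Once you've pulled credit totals per service type (SNOWFLAKE.ACCOUNT_USAGE.METERING_HISTORY has SERVICE_TYPE and CREDITS_USED columns), working out how much goes to background features is simple arithmetic. This Python sketch runs on made-up rows; the service type labels are assumed to match what that view reports, and treating everything except WAREHOUSE_METERING as background spend is a simplification.

```python
from collections import defaultdict

# Hypothetical (service_type, credits_used) rows; the numbers are invented
rows = [
    ("WAREHOUSE_METERING", 1200.0),
    ("AUTO_CLUSTERING", 180.0),
    ("PIPE", 95.0),
    ("MATERIALIZED_VIEW", 40.0),
    ("WAREHOUSE_METERING", 300.0),
]

def credits_by_service(rows):
    """Sum credits per service type."""
    totals = defaultdict(float)
    for service_type, credits_used in rows:
        totals[service_type] += credits_used
    return dict(totals)

def background_share(totals):
    """Fraction of all credits spent outside user-managed warehouses."""
    total = sum(totals.values())
    background = total - totals.get("WAREHOUSE_METERING", 0.0)
    return background / total

totals = credits_by_service(rows)
print(f"background features: {background_share(totals):.1%} of credits")
```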

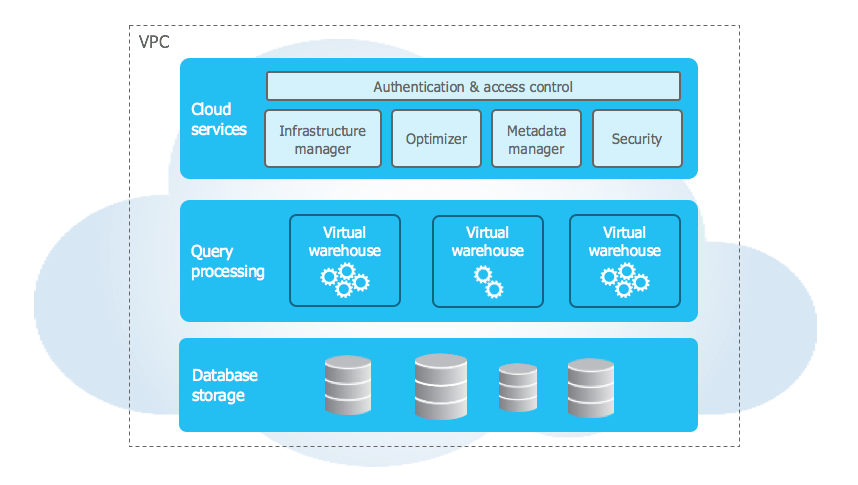

Cloud Services: Free Until It's Not

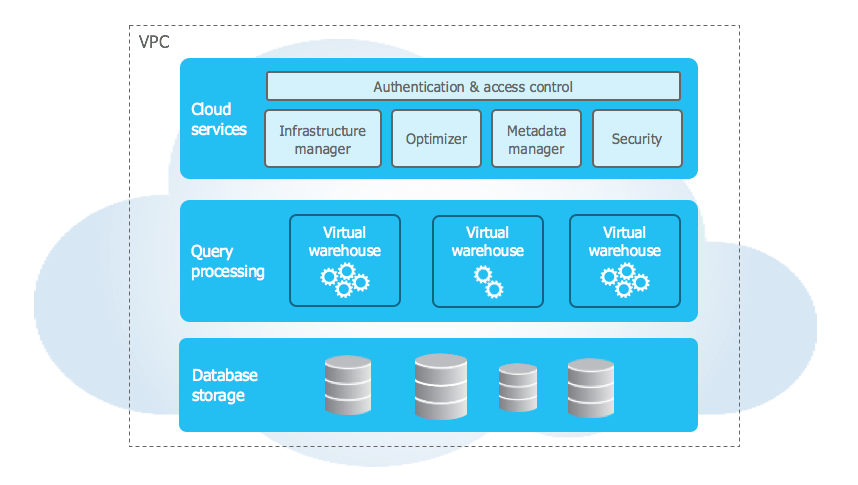

The cloud services layer handles auth, metadata, query compilation - basically the stuff that makes Snowflake work. It's free as long as its daily usage stays under 10% of your daily warehouse compute credits.

When it bites you: Lots of schema changes, heavy cloning, or tons of tiny queries can push you over 10%. Then you pay full credit rates for every cloud services credit above that 10% allowance.
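The daily adjustment works out like this in Python: cloud services usage up to 10% of the day's warehouse compute credits is free, and only the excess is billed. The inputs are hypothetical daily totals.

```python
ALLOWANCE = 0.10  # cloud services free up to 10% of daily warehouse credits

def billed_cloud_services(warehouse_credits, cloud_services_credits):
    """Cloud services credits actually billed for one day."""
    free = ALLOWANCE * warehouse_credits
    return max(0.0, cloud_services_credits - free)

# Normal day: 100 warehouse credits, 8 cloud services credits -> nothing billed
print(billed_cloud_services(100, 8))   # 0.0
# Clone-heavy day full of tiny queries: 25 cloud services credits
print(billed_cloud_services(100, 25))  # 15.0
```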

Data Transfer: The Multi-Cloud Penalty

Getting data INTO Snowflake is free. Getting it OUT costs money:

- Unloading to external storage

- Replicating between regions or cloud providers

- External functions and data sharing

Moving data between AWS and Azure can cost $0.02-$0.12 per GB. That's roughly $20-120 per terabyte, so moving tens of terabytes a month can add thousands to your bill. Review Snowflake's data transfer pricing for specific regional rates and cross-cloud data sharing alternatives that can reduce transfer costs.
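A back-of-the-envelope transfer estimate in Python, using the $0.02-$0.12 per GB range above and assuming 1 TB = 1,000 GB; the 20 TB monthly volume is hypothetical.

```python
GB_PER_TB = 1000  # decimal terabytes, an assumption for the estimate

def monthly_transfer_cost(tb_per_month, price_per_gb):
    """Egress cost for moving tb_per_month out at a flat per-GB rate."""
    return tb_per_month * GB_PER_TB * price_per_gb

volume_tb = 20  # hypothetical cross-cloud volume per month
low = monthly_transfer_cost(volume_tb, 0.02)
high = monthly_transfer_cost(volume_tb, 0.12)
print(f"${low:,.0f} to ${high:,.0f} per month")
```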

On-Demand vs Capacity: The $25K Gamble

On-Demand: Pay as you go, costs more per credit but totally flexible.

Capacity: Buy credits upfront for 15-40% discounts. Minimum $25K commitment. Unused credits expire, overages cost full price.

The math: If you'll spend $25K+ annually, capacity pricing might save you money. But guess wrong and you're screwed either way - pay for unused credits or pay overage rates on what you actually use.

A SaaS company I worked with committed to around 4,000 credits monthly at $2.40 each and ended up using closer to 4,800. Those 800 overage credits cost something like $3.50 each instead of the $2.40 committed rate. Buying 5,000 upfront would've been cheaper (about $12,000 versus $12,400), but nobody predicted usage would spike like that.
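Here's that commitment math as a Python sketch, using the numbers from the story (overages at ~$3.50 against a $2.40 committed rate). It assumes unused committed credits are simply lost and every credit past the commitment is billed at the overage rate, as described above.

```python
def monthly_bill(committed, used, committed_price=2.40, overage_price=3.50):
    """Committed credits are paid in full; anything beyond them is billed as overage."""
    overage = max(0, used - committed)
    return committed * committed_price + overage * overage_price

USED = 4_800  # credits actually consumed

print(f"Commit 4,000: ${monthly_bill(4_000, USED):,.0f}")  # Commit 4,000: $12,400
print(f"Commit 5,000: ${monthly_bill(5_000, USED):,.0f}")  # Commit 5,000: $12,000
```

Committing to 5,000 wastes 200 credits and still comes out $400 ahead, because each overage credit costs nearly 1.5x the committed rate.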

Reality check: Snowflake scales with usage, which sounds great until usage spikes unexpectedly. These pricing mechanics extract maximum revenue while appearing "flexible." Compare with BigQuery or Databricks to see how others handle this. Snowflake's calculator helps with estimates, but real usage always differs.

For cost analysis, check Snowflake's cost docs and resource monitoring. Third-party tools like SELECT and Keebo handle automated cost control when manual monitoring isn't enough.

Now that you understand how billing works and where money disappears, let's break down what different scenarios actually cost across editions and regions.