Don't fall for the demos. Every CDC (change data capture) tool looks great until your data gets weird and your database starts sweating. I've been through enough vendor pitches and production incidents to know what questions actually matter.

The Stuff That Will Actually Bite You

Will This Work With Your Janky Database Setup?

First question: does it actually work with your database version? Not the shiny new one in the demo, but the PostgreSQL 11.2 instance that IT won't let you upgrade because "it's working fine."

I learned this the hard way when Debezium worked perfectly on Postgres 14 in staging, then couldn't handle the ancient logical replication setup on our production 11.x cluster. Three days of debugging later, we found out logical replication slots work differently between major versions.

PostgreSQL CDC limitations by version matter more than whatever the sales engineer promised. Check the Debezium PostgreSQL connector docs for version-specific gotchas.
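Before any vendor call, it's worth checking what the production server actually reports. Here's a minimal preflight sketch. It assumes you've already run `SHOW server_version_num`, `SHOW wal_level`, `SHOW max_replication_slots` and `SHOW max_wal_senders` against the real instance. `PgSettings`, `cdc_preflight` and the version floor are hypothetical, so take the real minimum from your connector's docs rather than trusting the default here.

```python
from dataclasses import dataclass

# Hypothetical floor: PostgreSQL 10 (100000) is the first release with the
# built-in pgoutput plugin. Take the real minimum from your connector's docs.
MIN_SERVER_VERSION_NUM = 100000


@dataclass
class PgSettings:
    server_version_num: int     # SHOW server_version_num, e.g. 110002 for 11.2
    wal_level: str              # SHOW wal_level
    max_replication_slots: int  # SHOW max_replication_slots
    max_wal_senders: int        # SHOW max_wal_senders


def cdc_preflight(s: PgSettings, min_version_num: int = MIN_SERVER_VERSION_NUM) -> list[str]:
    """Return human-readable problems; an empty list means no blockers found."""
    problems = []
    if s.server_version_num < min_version_num:
        problems.append(f"server_version_num {s.server_version_num} is below {min_version_num}")
    if s.wal_level != "logical":
        problems.append(f"wal_level is '{s.wal_level}', logical decoding needs 'logical'")
    if s.max_replication_slots < 1:
        problems.append("max_replication_slots is 0, so no slot can be created")
    if s.max_wal_senders < 1:
        problems.append("max_wal_senders is 0, so nothing can stream WAL")
    return problems


if __name__ == "__main__":
    prod = PgSettings(server_version_num=110002, wal_level="replica",
                      max_replication_slots=10, max_wal_senders=10)
    for issue in cdc_preflight(prod):
        print(issue)
```

A server left at `wal_level = replica` fails this check, because logical decoding needs `logical`. Changing that setting requires a restart, which is exactly the kind of thing IT will push back on.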

What Happens When Things Go Wrong?

Here's what no one tells you about CDC: it's not if it breaks, it's when. Schema changes will fuck up your pipeline. Network partitions will cause lag spikes. That batch job someone runs monthly will max out your database connections.
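Schema changes are the most common of these, and you can at least catch them before they hit downstream tables. Here's a minimal sketch. It assumes you can get a column-name-to-type map both for the schema your pipeline expects and for the one arriving in change events. `diff_schema` and `is_breaking` are hypothetical helpers. Treating added columns as non-breaking also assumes your consumers ignore unknown fields.

```python
def diff_schema(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list]:
    """Compare expected vs observed column->type maps from change events."""
    added = sorted(set(observed) - set(expected))
    removed = sorted(set(expected) - set(observed))
    retyped = sorted(
        (col, expected[col], observed[col])
        for col in set(expected) & set(observed)
        if expected[col] != observed[col]
    )
    return {"added": added, "removed": removed, "retyped": retyped}


def is_breaking(diff: dict[str, list]) -> bool:
    # Assumption: dropped or retyped columns break consumers; new columns don't.
    return bool(diff["removed"] or diff["retyped"])


if __name__ == "__main__":
    expected = {"id": "int8", "email": "text", "total": "numeric"}
    observed = {"id": "int8", "email": "text", "total": "float8", "coupon": "text"}
    d = diff_schema(expected, observed)
    print(d, "breaking" if is_breaking(d) else "safe")
```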

The real question isn't "does it work" - it's "how fast can I fix it when it doesn't?"

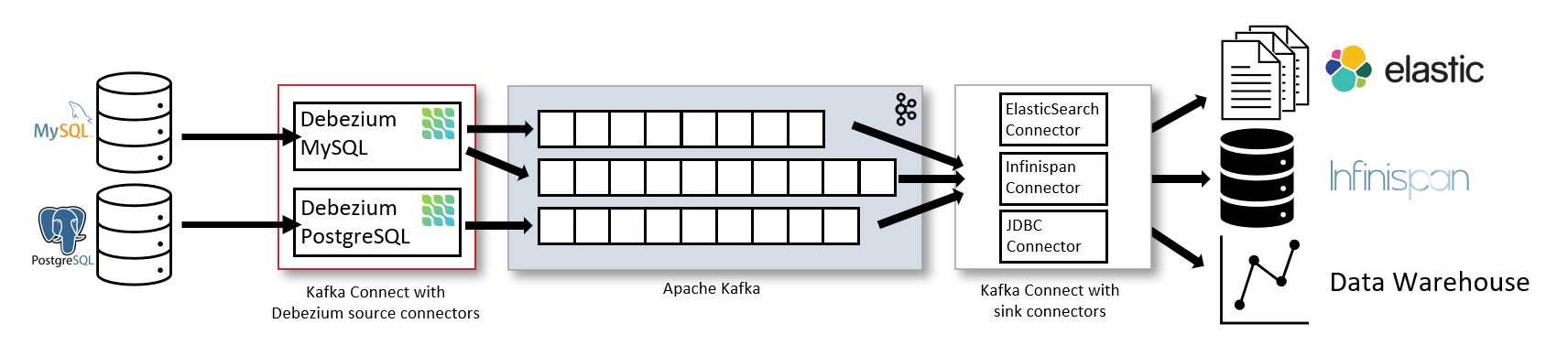

AWS DMS has decent monitoring, but AWS support is hit-or-miss. Debezium usually runs on Kafka Connect, which means you need someone who can debug Kafka consumer lag at 3AM.
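Consumer lag itself is simple arithmetic. For each partition, it's the log-end offset minus the group's committed offset. Here's a sketch that assumes you've already pulled both numbers, for example from the describe output of `kafka-consumer-groups.sh` or from your client library. `partition_lag`, `lag_report` and the threshold are hypothetical. Treating a missing commit as offset 0 assumes the log still starts at 0, which retention may have changed.

```python
def partition_lag(end_offsets: dict[int, int], committed: dict[int, int]) -> dict[int, int]:
    """Lag per partition = log-end offset - committed offset (never negative)."""
    # A partition with no commit yet is treated as starting from offset 0.
    return {p: max(end - committed.get(p, 0), 0) for p, end in end_offsets.items()}


def lag_report(end_offsets: dict[int, int], committed: dict[int, int],
               max_lag: int) -> tuple[int, list[int]]:
    """Return total lag and the partitions whose lag exceeds max_lag."""
    lags = partition_lag(end_offsets, committed)
    hot = sorted(p for p, lag in lags.items() if lag > max_lag)
    return sum(lags.values()), hot


if __name__ == "__main__":
    total, hot = lag_report({0: 1000, 1: 5000, 2: 200}, {0: 990, 1: 1000}, max_lag=100)
    print(f"total lag {total}, hot partitions {hot}")
```

Total lag tells you how far behind you are. The per-partition breakdown tells you whether it's one stuck consumer or the whole group, and at 3AM that's usually the first question.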

Confluent can cost 5x as much as running Kafka yourself, but their support actually picks up the phone.

What It Actually Costs

Nobody talks about the real costs. Sure, Debezium is "free" until you factor in:

- A couple of engineers spending half their time babysitting Kafka (~$300K/year)

- AWS infrastructure costs (probably $40-60K a year, depending on your usage)

- The poor ops engineer who gets paged at 3AM (another $80K+ a year, if you can find one)

- Downtime when a Kafka broker dies during Black Friday (priceless)

Meanwhile Fivetran might run you $2K/month at your volume (their pricing is usage-based), but it actually works. Do the math yourself. Fivetran has a calculator if you need it.
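If you want to do that math in code, here's a rough sketch that uses this post's own ballpark numbers as placeholders. `CostLine` and `annual_range` are hypothetical. The downtime line is left out because nobody can price it ahead of time. The managed side also assumes zero extra engineering time, which is optimistic.

```python
from dataclasses import dataclass


@dataclass
class CostLine:
    name: str
    low: int   # USD per year, optimistic estimate
    high: int  # USD per year, pessimistic estimate


def annual_range(items: list[CostLine]) -> tuple[int, int]:
    """Sum the low and high estimates across cost lines."""
    return sum(i.low for i in items), sum(i.high for i in items)


# Placeholder figures from this post; replace with your own salaries and quotes.
SELF_MANAGED = [
    CostLine("engineers babysitting Kafka", 300_000, 300_000),
    CostLine("AWS infrastructure", 40_000, 60_000),
    CostLine("on-call ops engineer", 80_000, 80_000),
]
MANAGED = [CostLine("Fivetran at $2K/month", 2_000 * 12, 2_000 * 12)]

if __name__ == "__main__":
    for label, items in (("self-managed", SELF_MANAGED), ("managed", MANAGED)):
        low, high = annual_range(items)
        print(f"{label}: ${low:,} to ${high:,} per year")
```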

The Scale Problem Nobody Talks About

Here's the thing about CDC scale: it's not linear. You can handle 1M events/hour just fine, then hit 10M and everything falls apart. Network buffers fill up and producers start timing out. Consumers fall so far behind that retention can delete messages before anyone reads them. And your database connection pool gets exhausted.
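A toy queue model shows why the cliff is so sharp. It assumes a fixed drain capacity, and the 2,500 events/sec used here is made up. While arrivals stay below capacity, the backlog stays at zero. Once arrivals go above capacity, the backlog grows every single second. Real pipelines are worse, because capacity itself drops when buffers fill and the JVM starts garbage-collecting, and this sketch doesn't model that.

```python
def simulate_backlog(arrivals_per_sec: list[int], capacity_per_sec: int) -> list[int]:
    """Return the backlog (events waiting) at the end of each simulated second."""
    backlog = 0
    history = []
    for arriving in arrivals_per_sec:
        # Whatever can't be drained this second carries over to the next.
        backlog = max(backlog + arriving - capacity_per_sec, 0)
        history.append(backlog)
    return history


def lag_seconds(backlog: int, capacity_per_sec: int) -> float:
    """How long the current backlog takes to drain at full capacity."""
    return backlog / capacity_per_sec


if __name__ == "__main__":
    capacity = 2_500                 # hypothetical drain rate, events/sec
    steady = [278] * 3600            # ~1M events/hour
    peak = [2_778] * 3600            # ~10M events/hour
    for label, load in (("1M/hour", steady), ("10M/hour", peak)):
        end = simulate_backlog(load, capacity)[-1]
        print(f"{label}: backlog {end:,}, lag {lag_seconds(end, capacity):.0f}s")
```

At that capacity, 1M events/hour never queues. 10M events/hour builds a backlog of roughly a million events, or about 400 seconds of lag, within the hour, and the lag keeps growing for as long as the peak lasts.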

I saw this firsthand at a fintech where everything worked great until market open. Market open was a complete shitshow. Volume would spike from maybe 10K/hour to 500K/hour in like 30 seconds and our CDC setup just fucking died. Kafka lag went completely nuts - started at 20-30 minutes, then I think it hit an hour before we gave up monitoring it. Network buffers were maxed, JVM was throwing OutOfMemoryError left and right, and our database connection pool was completely exhausted. Everything downstream started breaking and the trading desk was losing their minds because their risk calculations were based on data from yesterday.

We ended up switching to Confluent Cloud because the self-managed Kafka cluster became a full-time job for two engineers.

The Vendor Roulette

The CDC market is consolidating fast. IBM bought StreamSets (along with webMethods, from Software AG, in a deal worth roughly $2.3B), and Qlik acquired Talend (which Thoma Bravo had taken private for about $2.4B in 2021). Half the smaller players will probably get acquired or shut down in the next two years.

This matters because CDC isn't a "set it and forget it" tool. You'll need upgrades, bug fixes, and feature updates. That cool startup with the amazing demo might not exist when you need support.

The Mistakes That Will Cost You

Picking Tools Based on Demos

Every demo is perfect. The data is clean, the network is fast, and nothing ever goes wrong. Real CDC deals with schema changes, network partitions, and databases that run out of disk space during a backup.

Ask for a demo with realistic data volumes and watch what happens when you simulate a network failure. Most vendors will make excuses.

Ignoring Your Team's Skills

Debezium is powerful but you need to understand Kafka internals. If your team doesn't know what a consumer lag spike means or how to debug partition assignment, you'll be learning at 3AM when things break.

Managed services like Airbyte Cloud cost more, but someone else deals with the ops headaches.

Underestimating Integration Hell

CDC doesn't exist in a vacuum. You need monitoring, alerting, data validation, schema evolution, and error handling. Half the work isn't the CDC tool itself - it's everything around it.

Count on spending 3-6 months integrating with your existing monitoring and deployment pipelines, even with "easy" tools.
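Data validation is one of the pieces you'll end up building yourself. One common approach is to periodically reconcile source and target by primary key plus a per-row hash. Here's a minimal sketch. It assumes both sides are fetched as lists of dicts, with values already normalized to the same Python types. `row_digest` and `reconcile` are hypothetical names. For big tables you'd compare per-range aggregates instead of every row.

```python
import hashlib


def row_digest(row: dict) -> str:
    """Hash a row independent of column order (values must be normalized first)."""
    canonical = "|".join(f"{k}={row[k]!r}" for k in sorted(row))
    return hashlib.sha256(canonical.encode()).hexdigest()


def reconcile(source_rows: list[dict], target_rows: list[dict], key: str = "id") -> dict[str, list]:
    """Find rows missing from the target, extra in the target, or different."""
    src = {r[key]: row_digest(r) for r in source_rows}
    tgt = {r[key]: row_digest(r) for r in target_rows}
    return {
        "missing": sorted(src.keys() - tgt.keys()),
        "extra": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


if __name__ == "__main__":
    source = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}, {"id": 3, "v": "c"}]
    target = [{"id": 1, "v": "a"}, {"id": 2, "v": "B"}, {"id": 4, "v": "d"}]
    print(reconcile(source, target))
```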

What Industry You're In Matters

If You're in Fintech

Everything needs audit trails or the regulators will come for you. Oracle GoldenGate can easily run $50K+ a year, but your compliance team will sleep better. Compliance documentation isn't optional.

If You're E-commerce

Black Friday will kill your CDC pipeline if it can't auto-scale. I've seen too many retailers lose sales because their real-time inventory updates broke under load. Cloud-native tools handle traffic spikes better than anything you'll manage yourself.
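Auto-scaling a Kafka-based pipeline has a hard ceiling worth knowing before Black Friday. Within a consumer group, each partition goes to at most one consumer, so any consumers beyond the partition count sit idle. Here's a back-of-the-envelope sketch. Per-consumer throughput and the 1.5x headroom are assumptions you'd measure in your own load test, and `consumers_needed` is a hypothetical helper.

```python
import math


def consumers_needed(peak_events_per_sec: float, per_consumer_events_per_sec: float,
                     partitions: int, headroom: float = 1.5) -> tuple[int, bool]:
    """Return (consumers worth running, whether partitions are the bottleneck)."""
    wanted = math.ceil(peak_events_per_sec * headroom / per_consumer_events_per_sec)
    # Consumers beyond the partition count get no assignment in a consumer group.
    usable = min(wanted, partitions)
    return usable, wanted > partitions


if __name__ == "__main__":
    usable, partition_bound = consumers_needed(50_000, 4_000, partitions=12)
    print(usable, "consumers;", "add partitions first" if partition_bound else "ok")
```

If the second value comes back `True`, scaling out consumers won't save you. You need more partitions, and that's much easier to sort out in October than in the middle of the sale.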

If You're Healthcare

HIPAA compliance eliminates half your options. Data residency rules eliminate half of what's left. You'll probably end up with an on-premises solution that costs 3x more than the cloud version.

If You're a Startup

Pick the managed solution. You don't have time to become Kafka experts. Fivetran or Airbyte will cost more upfront but save months of engineering time.

How to Actually Evaluate This Stuff

Skip the formal RFP bullshit. Here's what actually works:

- Test with your real data (not the clean demo dataset) for 2-4 weeks

- Break things on purpose - kill network connections, max out CPU, run schema changes

- Calculate what it actually costs including the engineers who'll maintain it

- Talk to existing customers who aren't on the vendor reference list

- Have a rollback plan because your first choice might be wrong
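The "break things on purpose" step needs a pass/fail criterion, not just a vibe. For an at-least-once pipeline, the usual criterion is that after random kills nothing is missing and duplicates are bounded by the number of crashes. Here's a toy simulation of that check. It doesn't model any specific tool. `run_with_crashes` and `check_delivery` are hypothetical, and the sketch assumes events carry unique IDs.

```python
import random


def run_with_crashes(events: list, crash_prob: float, seed: int = 0) -> tuple[list, int]:
    """Deliver events to a sink, crashing randomly between write and commit.

    The sink write happens before the offset commit, so a crash in between
    replays that event on restart (at-least-once delivery).
    """
    if not 0 <= crash_prob < 1:
        raise ValueError("crash_prob must be in [0, 1) or the run never finishes")
    rng = random.Random(seed)
    committed = 0   # next offset to read; survives crashes
    sink = []
    crashes = 0
    while committed < len(events):
        offset = committed                  # restart from the last commit
        while offset < len(events):
            sink.append(events[offset])     # write downstream
            if rng.random() < crash_prob:   # simulated kill before commit
                crashes += 1
                break
            offset += 1
            committed = offset              # commit only after the write
    return sink, crashes


def check_delivery(events: list, sink: list) -> tuple[set, int]:
    """Return (events never delivered, number of duplicate deliveries)."""
    return set(events) - set(sink), len(sink) - len(set(sink))


if __name__ == "__main__":
    events = list(range(1000))
    sink, crashes = run_with_crashes(events, crash_prob=0.05, seed=42)
    missing, dups = check_delivery(events, sink)
    print(f"crashes={crashes} missing={len(missing)} duplicates={dups}")
```

Swap the toy loop for your real pipeline and a `kill -9` script, and keep the same two checks. Any missing event is a fail. Duplicates that far exceed the number of kills mean something replays more than it should.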

Most tools work fine until they don't. Test the failure scenarios because that's where you'll live when things go wrong.