Airflow is a Python-based workflow orchestrator that lets you define data pipelines as code. It was born out of necessity at Airbnb, where work on it started in 2014 and it was publicly announced in 2015, after their data team got tired of managing hundreds of brittle cron jobs that would fail silently and leave everyone wondering why reports were broken.

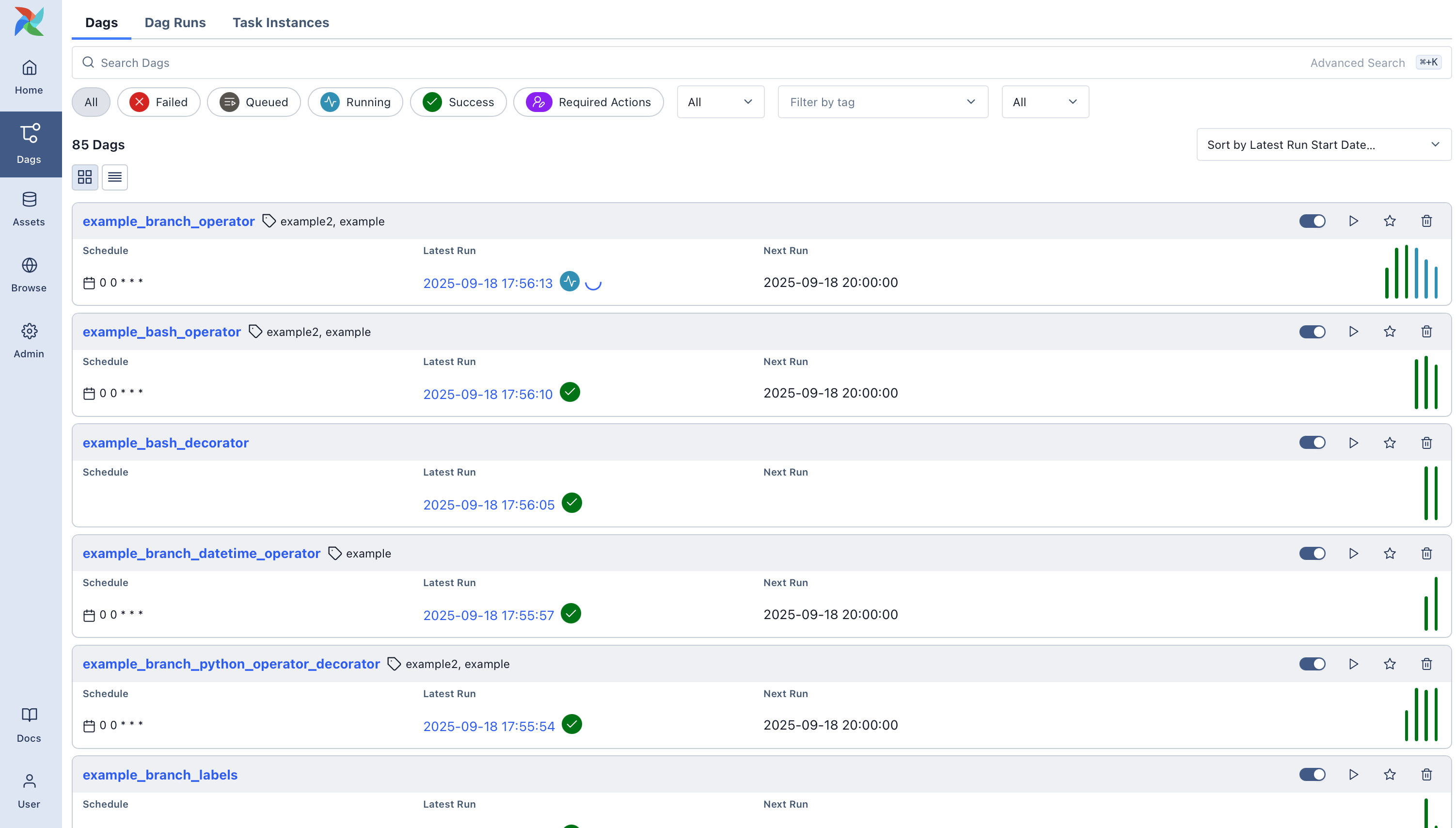

Airflow Web Interface: The UI shows your DAGs as interactive graphs where you can see which tasks are running, failed, or completed. It's actually pretty useful once you figure out where everything is.

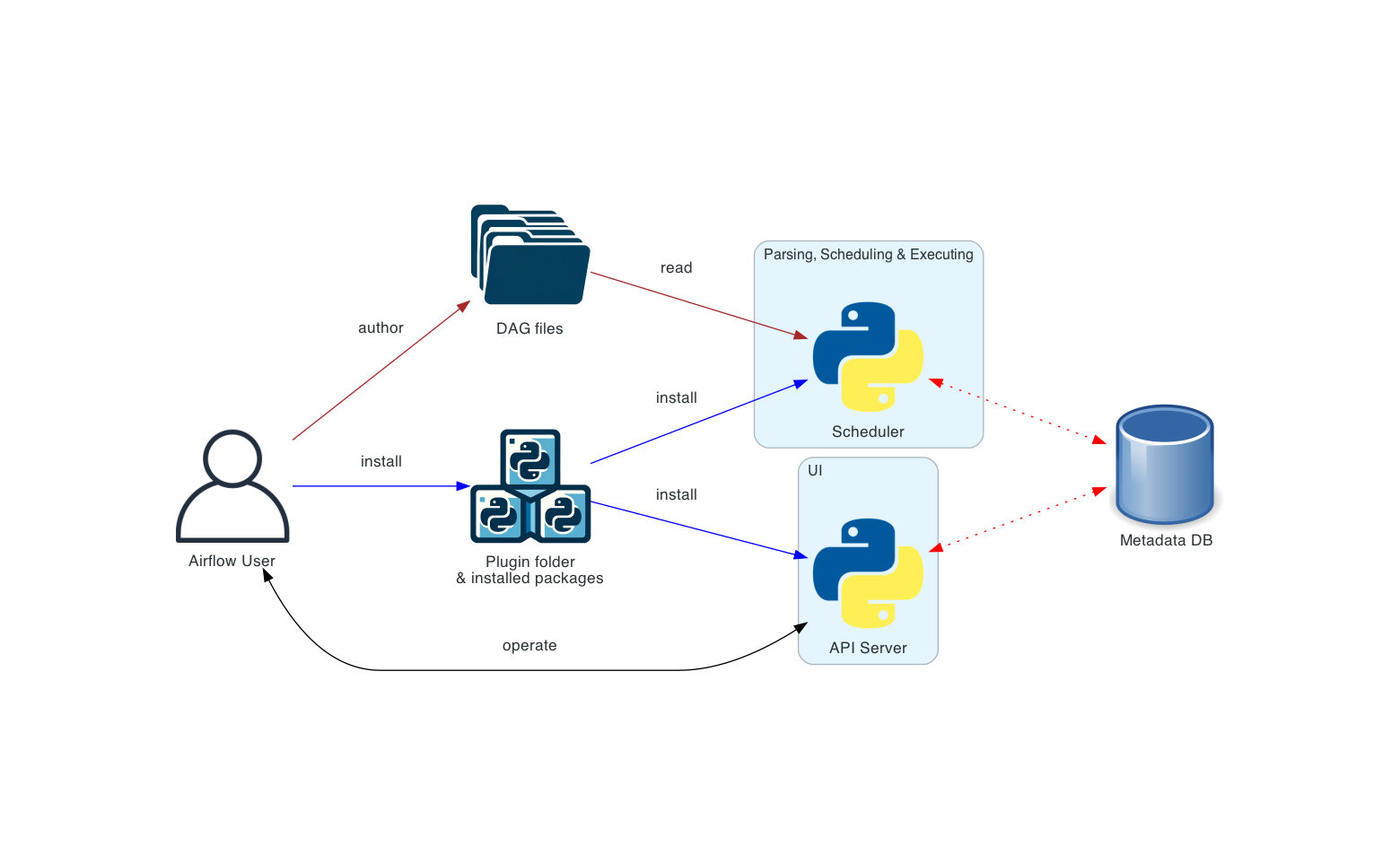

How This Thing Actually Works

Here's what you're dealing with when you deploy Airflow:

Scheduler: The thing that decides which tasks run and when, then hands them off to the executor. It's supposed to be reliable, but in our experience it stalls at around 300 DAGs unless you tune it properly. We learned this during a Black Friday deployment. Scale early.

Web Server: The UI where you'll spend most of your time trying to figure out why tasks failed. It's actually pretty decent - you can see logs, trigger reruns, and pretend you understand why the scheduler decided to skip your task.

Executor: Defines where tasks run. LocalExecutor works for single machines, CeleryExecutor works great until Redis crashes at 2am, and KubernetesExecutor is what you use when you want to make your infrastructure team hate you.

Metadata Database: PostgreSQL is your best bet. MySQL works but has weird edge cases. SQLite is fine for testing until you have more than one user.

Workers: The things that actually run your code. They'll work fine until you run out of memory, then they'll die silently and your tasks will hang forever.

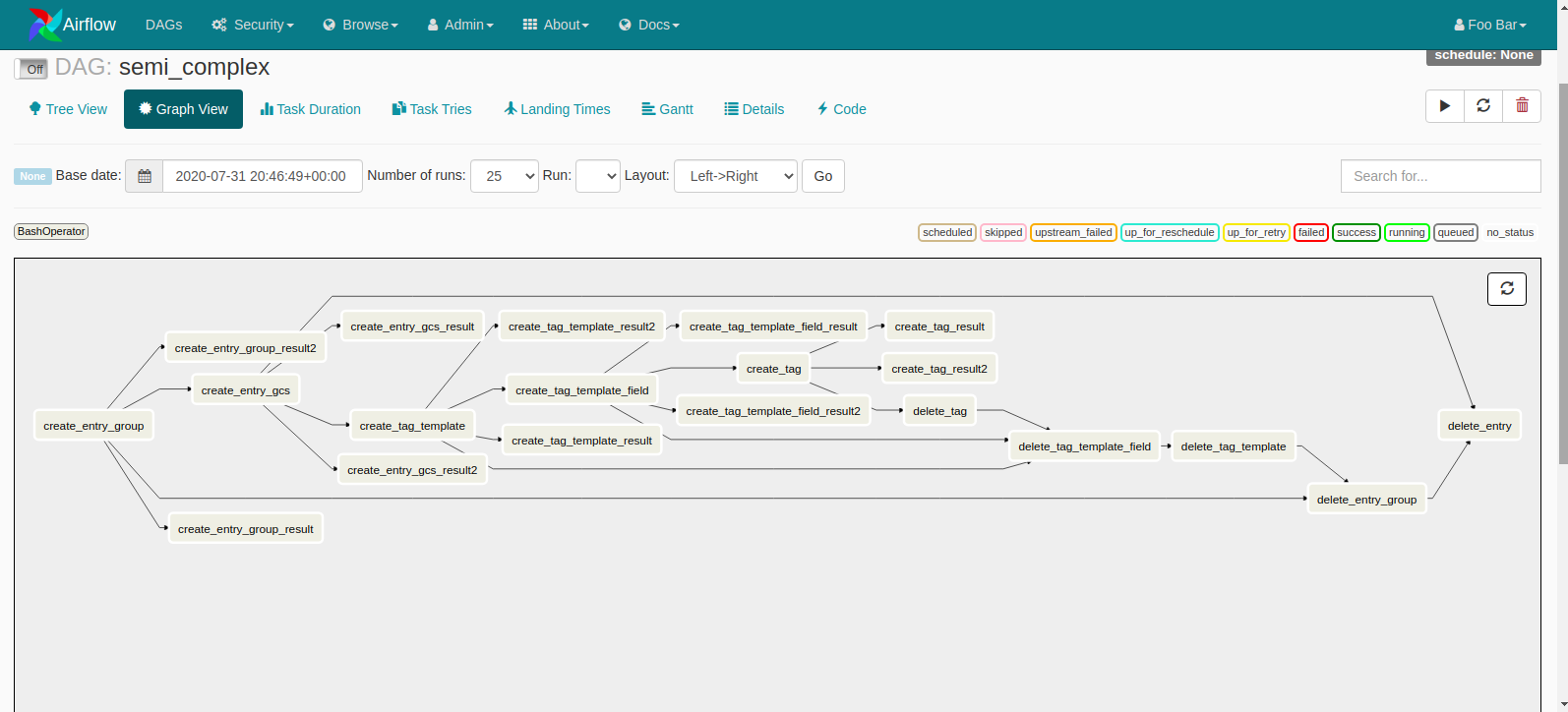

DAGs (Directed Acyclic Graphs)

Workflow Structure: DAGs represent your data pipeline as a graph of connected tasks, each with dependencies and retry logic.

This is Airflow's fancy name for "workflow." You write Python code that defines:

- What tasks to run (PythonOperator, BashOperator, etc.)

- When to run them (scheduling and dependencies)

- What to do when they fail (retry 3 times then wake up the on-call engineer)

- How long to wait before giving up (task timeouts)
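In a real DAG the failure handling above is configured through operator arguments such as `retries`, `retry_delay`, `on_failure_callback` and `execution_timeout`. The snippet below is not Airflow code. It's a minimal plain-Python sketch of what "retry 3 times, then alert" means, with a hypothetical `flaky_extract` task and `run_with_retries` helper invented for illustration.

```python
import time
from typing import Callable, Optional


def run_with_retries(
    task: Callable[[], object],
    retries: int = 3,
    retry_delay: float = 0.0,
    on_failure: Optional[Callable[[Exception], None]] = None,
) -> object:
    """Run task once, then retry up to `retries` more times on failure."""
    attempts = retries + 1  # the first try plus the retries
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == attempts:
                # Out of retries: fire the alert hook, then re-raise.
                if on_failure is not None:
                    on_failure(exc)
                raise
            time.sleep(retry_delay)


# Hypothetical flaky task: fails twice, then succeeds on the third call.
calls = {"count": 0}


def flaky_extract() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("upstream not ready")
    return "ok"


result = run_with_retries(flaky_extract, retries=3)
```

Note that `retries=3` means up to four attempts in total, which matches how Airflow counts retries. The `on_failure` hook is where the "wake up the on-call engineer" part would go.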

Dependencies look like this: task_a >> task_b >> task_c. Simple enough that even your product manager can understand it.
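If you're wondering how `>>` can mean "runs before", it's ordinary Python operator overloading. Here's a toy sketch of the idea, assuming a made-up `Task` class and `run_order` helper. This is not Airflow's actual implementation, just the same trick in a few lines.

```python
from graphlib import TopologicalSorter


class Task:
    """Toy stand-in for an Airflow task; not Airflow's real class."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id
        self.upstream: set[str] = set()

    def __rshift__(self, other: "Task") -> "Task":
        # `a >> b` records that b depends on a, then returns b
        # so a chain like `a >> b >> c` keeps going left to right.
        other.upstream.add(self.task_id)
        return other


def run_order(tasks: list[Task]) -> list[str]:
    """Return task ids in an order that respects every dependency."""
    graph = {t.task_id: t.upstream for t in tasks}
    # static_order() raises graphlib.CycleError on a cycle,
    # which is the "acyclic" part of "directed acyclic graph".
    return list(TopologicalSorter(graph).static_order())


task_a, task_b, task_c = Task("task_a"), Task("task_b"), Task("task_c")
task_a >> task_b >> task_c

order = run_order([task_a, task_b, task_c])
```

For this linear chain, `order` comes out as `task_a`, `task_b`, `task_c`. Add `task_c >> task_a` and `run_order` raises instead, which is roughly why a DAG with a loop in it can't be scheduled.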

DAG Visualization: Tasks show up as colored boxes (green = success, red = failed, yellow = running). You can click on any task to see logs, retry failed tasks, or mark them as successful if you're feeling dangerous.

Production Reality Check

High Availability: You can run multiple schedulers, but the first one will do most of the work anyway. The metadata database is still a single point of failure.

Security: Has RBAC, OAuth, SSL, and encrypted connections. Basically enterprise-ready if you spend the time configuring it properly.

Monitoring: Built-in StatsD metrics (which you can export to Prometheus) and email alerts. You'll still need external monitoring because the scheduler can fail without any alerts.
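One cheap form of external monitoring is to poll Airflow's health endpoint and alert when the scheduler heartbeat goes stale. The sketch below only covers the "is it stale?" decision. The payload shape is an assumption modeled on that endpoint's JSON (a `scheduler` object with `status` and `latest_scheduler_heartbeat`), the endpoint URL differs between versions so fetching is left out, and `scheduler_is_alive` is a hypothetical helper name.

```python
from datetime import datetime, timedelta, timezone


def scheduler_is_alive(health: dict, now: datetime, max_age: timedelta) -> bool:
    """Return True if the scheduler reports healthy and its heartbeat is fresh."""
    scheduler = health.get("scheduler", {})
    heartbeat = scheduler.get("latest_scheduler_heartbeat")
    if scheduler.get("status") != "healthy" or not heartbeat:
        return False
    # Assumes an ISO 8601 timestamp that includes a UTC offset.
    last_beat = datetime.fromisoformat(heartbeat)
    return now - last_beat <= max_age


# Assumed payload shape; check it against your Airflow version.
payload = {
    "metadatabase": {"status": "healthy"},
    "scheduler": {
        "status": "healthy",
        "latest_scheduler_heartbeat": "2025-09-01T12:00:00+00:00",
    },
}

now = datetime(2025, 9, 1, 12, 5, tzinfo=timezone.utc)
stale = not scheduler_is_alive(payload, now, max_age=timedelta(minutes=1))
```

Here the heartbeat is five minutes old against a one-minute budget, so `stale` is true even though the reported status still says healthy. Checking heartbeat age separately from the status field is a deliberate choice in this sketch, since a stuck scheduler is the case you most want to catch.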

Scaling: Works until it doesn't. The scheduler becomes a bottleneck at around 1,000 DAGs. You'll need to tune scheduler performance or split into multiple Airflow instances.

Version Reality (September 2025)

Apache Airflow 3.0.6 is the current version as of September 2025. Airflow 3.0 was a major release in April 2025 with breaking changes. Don't use anything before 2.7 - too many security issues.

Major 3.0 changes include:

- New execution model with better task isolation

- Improved scheduler performance (finally)

- Modern web UI that doesn't look like it was designed in 2010

- Better Kubernetes integration for cloud-native deployments

Netflix runs 100k+ workflows daily on Airflow, but they also have an army of engineers keeping it running. Adobe manages thousands of pipelines, and both companies contribute heavily to making it less terrible for the rest of us.

Now that you know what you're getting into, let's see how Airflow stacks up against the competition - because there are definitely easier alternatives if your use case doesn't demand Airflow's complexity.