DNS servers used to be simple. Then containers happened and everything got complicated. CoreDNS replaced kube-dns because kube-dns was a hot mess of three different containers that barely worked together. CoreDNS does the same job with one binary that doesn't randomly break when Kubernetes updates.

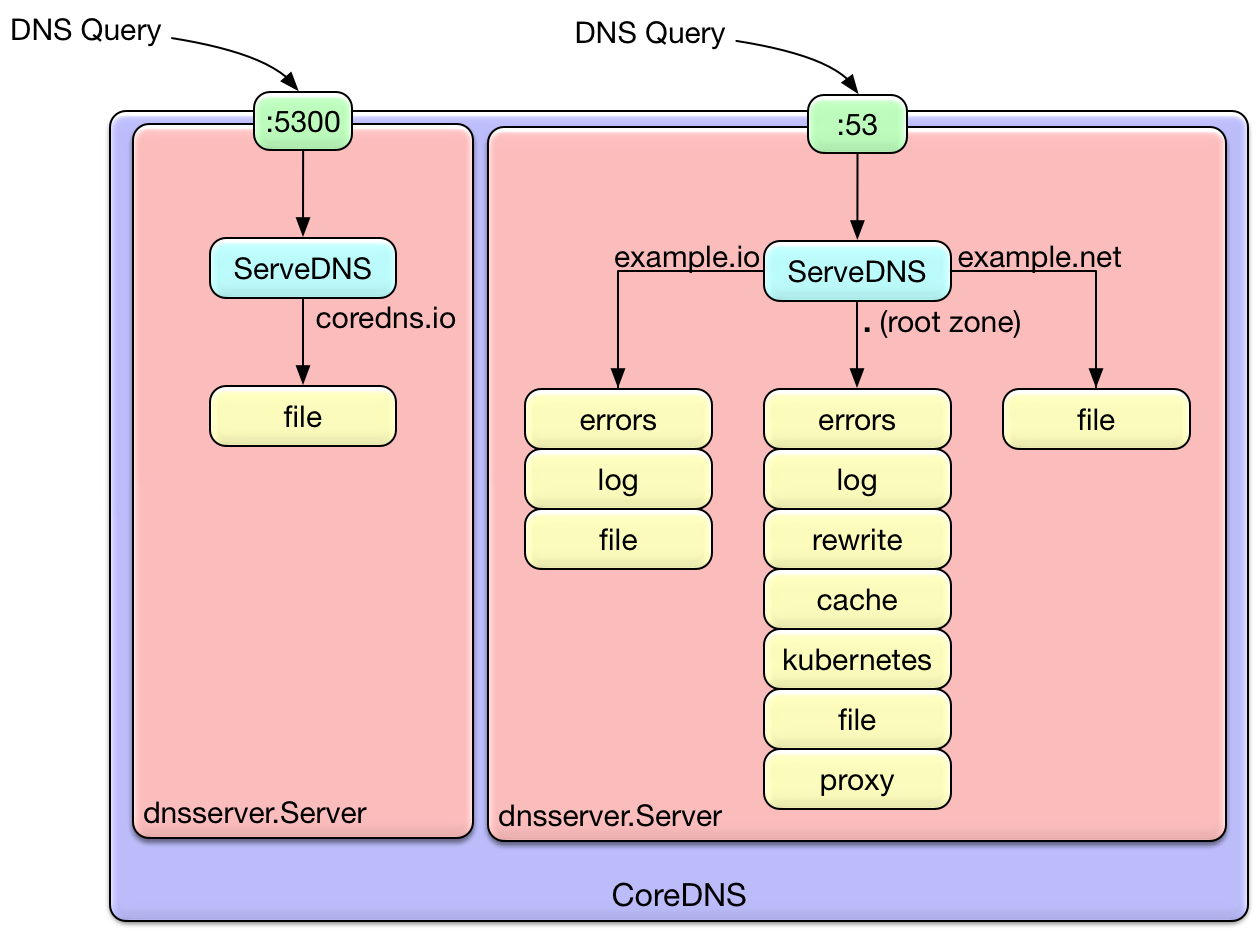

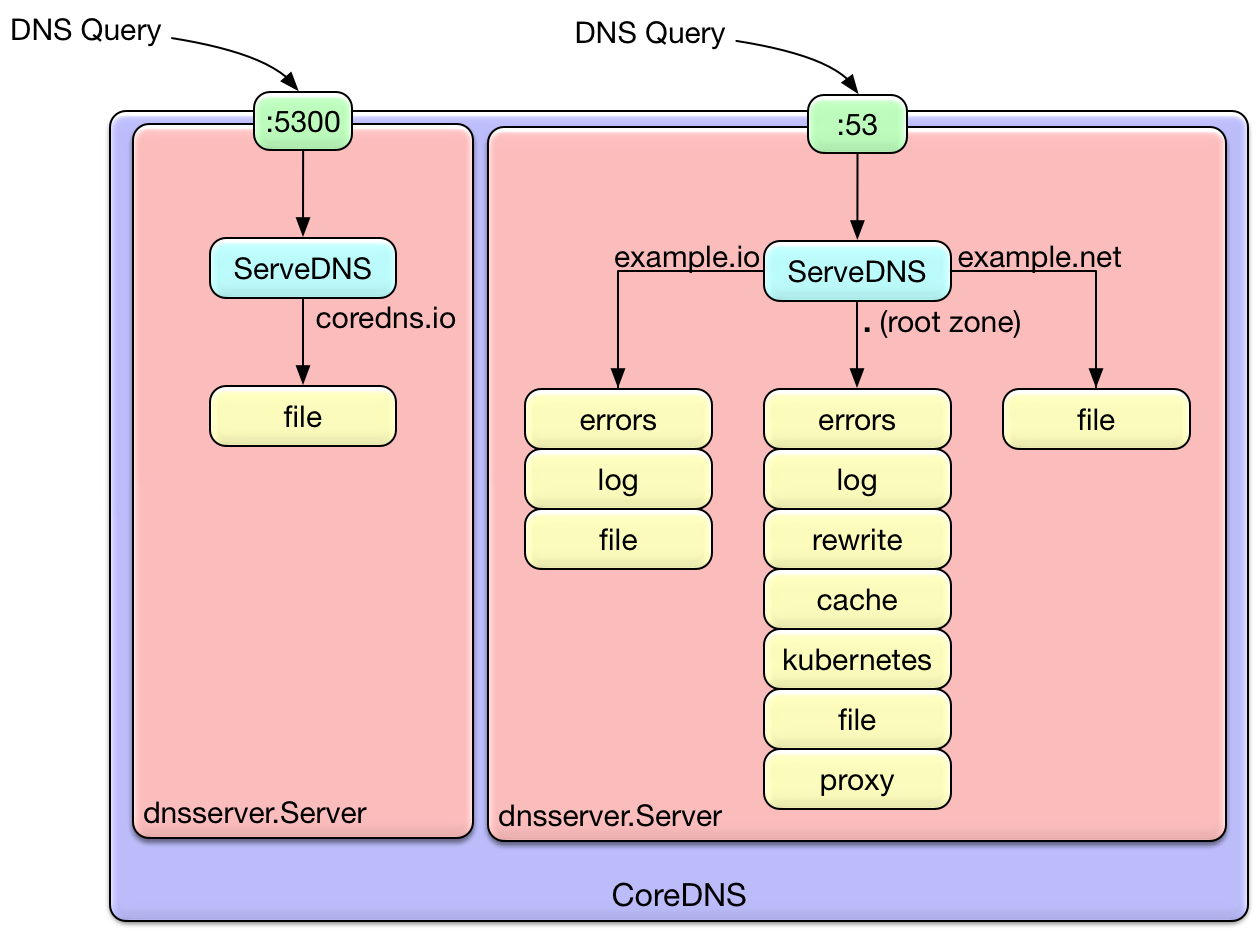

Why Everything is a Plugin

BIND is a monolithic beast from the 1980s that does everything and nothing well. CoreDNS said "fuck that" and made everything a plugin. Want to serve a zone from a zone file? Use the file plugin. Need Kubernetes integration? There's the kubernetes plugin. Want caching? Enable cache. The best part? Plugin order matters, and if you get it wrong, nothing works and the error messages are about as helpful as a chocolate teapot.

Here's what'll fuck you over: plugins execute in a fixed order that's baked in at compile time, not in the order you list them in your Corefile. So even if you put cache at the end of a server block, it still runs wherever the build put it, which in the stock build is ahead of forward. Check plugin.cfg in the CoreDNS source to see the actual order, or you'll be debugging DNS issues at 3am wondering why your cache isn't working.
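To make that concrete, here's a minimal sketch of a server block written "backwards". The upstream address is just a placeholder I picked, and the execution order in the comments assumes the stock plugin.cfg; a custom build can reorder things.

```text
# Listed order: forward, cache, errors.
# Execution order in a stock build (per plugin.cfg): errors -> cache -> forward.
. {
    forward . 8.8.8.8   # placeholder upstream; runs last, only on a cache miss
    cache 30            # runs before forward even though it is listed after it
    errors              # runs first, so it can log errors from everything below it
}
```

Reordering those three lines changes nothing about how queries flow. It only changes how confused the next person reading the file will be.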

Why Go Actually Matters

CoreDNS is written in Go, which means cheap goroutines do the heavy lifting and it's actually good at handling thousands of concurrent queries without shitting itself. It also ships as a single static binary, so the Docker images are tiny compared to BIND's bloated containers.

Performance depends heavily on which plugins you enable and how you configure them. The moment you add too many plugins or misconfigure caching, performance goes to hell just like any other DNS server.
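Since caching is usually the first thing people misconfigure, here's a rough sketch of a cache block with the knobs spelled out. The capacities and TTLs are illustrative numbers, not recommendations; tune them against your own traffic.

```text
. {
    cache {
        success 9984 300        # up to 9984 positive answers, TTL capped at 300s
        denial 9984 60          # up to 9984 negative answers (NXDOMAIN/NODATA), capped at 60s
        prefetch 10 1m 10%      # refresh names seen 10+ times, with no gap of 1m or more, once 10% of TTL remains
    }
    forward . /etc/resolv.conf  # cache misses go to whatever resolvers the host uses
}
```

Capping denial TTLs lower than success TTLs is a common choice, because a cached NXDOMAIN for a service that just came up is exactly the kind of thing that ruins an afternoon.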

How CoreDNS Fixed Kubernetes DNS

CoreDNS went GA in Kubernetes v1.11, where kubeadm started installing it by default, and it has been the default cluster DNS since v1.13, because kube-dns was an absolute nightmare to debug. Three separate containers (kubedns, dnsmasq, and sidecar) that had to talk to each other? What genius thought that was a good idea?

CoreDNS fixed this clusterfuck by being one binary with the kubernetes plugin that actually works. It reads from the API server and creates DNS records for your services and pods automatically. The Corefile configuration is way cleaner than whatever kube-dns was using.
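For reference, this is roughly what the Corefile in a kubeadm-provisioned cluster looks like. Treat it as a sketch: the exact contents vary by Kubernetes version and distro, so check your own ConfigMap before assuming anything.

```text
.:53 {
    errors
    health {
        lameduck 5s
    }
    ready
    # Answers for services and pods under cluster.local, plus reverse lookups
    kubernetes cluster.local in-addr.arpa ip6.arpa {
        pods insecure
        fallthrough in-addr.arpa ip6.arpa
        ttl 30
    }
    prometheus :9153
    # Everything that isn't a cluster name goes to the node's resolvers
    forward . /etc/resolv.conf {
        max_concurrent 1000
    }
    cache 30
    loop
    reload
    loadbalance
}
```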

But here's where it gets tricky: if you need custom domains or stub zones, you have to edit the CoreDNS ConfigMap in the kube-system namespace. And if you screw up the syntax, DNS breaks for your entire cluster. Pro tip: always test your Corefile changes in a dev cluster first, because I learned this the hard way when I took down production DNS for... I think it was around 2 hours? Maybe closer to 3. Time moves slowly when your phone won't stop ringing.
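A stub zone is just an extra server block in that same Corefile. Here's a minimal sketch; the domain corp.example and the resolver address 10.0.0.10 are made up for illustration, so swap in your own.

```text
# Hypothetical internal domain, forwarded to a hypothetical internal resolver.
corp.example:53 {
    errors
    cache 30
    forward . 10.0.0.10
}
```

Queries for anything under corp.example hit this block; everything else still falls through to the main `.:53` block. Add it alongside the existing block rather than inside it, because server blocks don't nest.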

Protocol Support That Actually Works

CoreDNS supports DNS over UDP/TCP (obviously), DNS over TLS, and DNS over gRPC. DNS over HTTPS works too, despite what a lot of older guides say: you don't need an external proxy, you just give the server block an https:// scheme and point the tls plugin at a certificate and key.
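The protocol is picked by the scheme in front of the server block name. A rough sketch of DNS over TLS and DNS over gRPC listeners follows; the certificate paths, the gRPC port, and the upstream address are all placeholders I made up.

```text
# DNS over TLS on the standard port 853
tls://.:853 {
    tls /etc/coredns/tls/cert.pem /etc/coredns/tls/key.pem   # hypothetical paths
    forward . 9.9.9.9                                        # placeholder upstream
    errors
}

# DNS over gRPC; the port here is an arbitrary choice
grpc://.:8443 {
    tls /etc/coredns/tls/cert.pem /etc/coredns/tls/key.pem   # hypothetical paths
    forward . 9.9.9.9                                        # placeholder upstream
    errors
}
```

Plain `.:53` (or an explicit `dns://`) stays on regular UDP/TCP, so you can run the encrypted listeners next to your normal one in the same Corefile.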

The forward plugin is where CoreDNS shines for upstream queries. It can load balance between multiple upstream servers, health check them, and fail over when one goes down. This actually works pretty well, unlike some DNS servers where failover takes forever or doesn't work at all.
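Here's a sketch of what that looks like in a Corefile. The three upstream addresses are just well-known public resolvers used as placeholders, and the timings are illustrative rather than tuned.

```text
. {
    forward . 1.1.1.1 8.8.8.8 9.9.9.9 {
        policy round_robin   # rotate through upstreams; random and sequential also exist
        health_check 5s      # probe each upstream every 5 seconds
        max_fails 2          # mark an upstream down after 2 failed health checks in a row
    }
    cache 30
    errors
}
```

If you want a strict primary/backup setup instead of load balancing, `policy sequential` tries the upstreams in the order listed.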

Configuration Simplicity

The Corefile syntax is way simpler than BIND's clusterfuck of a config format. Here's a basic zone:

    example.org {
        file db.example.org
        log
        errors
    }

This actually makes sense compared to BIND's named.conf which looks like it was designed by someone who hates humans. The best part? Hot reload actually works with kill -SIGUSR1 or the reload plugin, though sometimes you just have to restart the damn thing anyway when configs get complex.
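If you'd rather not send signals by hand, the reload plugin polls the Corefile for changes. A minimal sketch follows, with an interval and jitter picked for illustration and a zone block mirroring the one above.

```text
example.org {
    reload 10s 5s        # check the Corefile every 10s, plus up to 5s of random jitter
    file db.example.org
    log
    errors
}
```

The jitter exists so a fleet of CoreDNS instances sharing one config doesn't reload in lockstep. Reload is process-wide, so enabling it in one server block is enough.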

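Word of warning: whitespace will bite you, just not where you'd expect. The Corefile parser itself doesn't care about indentation or tabs versus spaces. What it does care about is braces: the opening { has to sit on the same line as the zone or plugin name. Where tabs really hurt is Kubernetes, because the Corefile lives inside a YAML ConfigMap and YAML doesn't allow tabs for indentation, so a bad edit never lands and CoreDNS keeps happily serving the old config. Ask me how I know - spent what felt like half my weekend debugging a "working" config that was being completely ignored because of a single fucking tab character somewhere. At the time I was pretty sure it was a v1.11.1 bug that silently failed on mixed indentation, but I never confirmed that, and honestly all the CoreDNS versions blur together when you're debugging at 2am.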