CDC security failures don't give you second chances. When your replication pipeline leaks customer data, you don't get to debug it for a week - you get fired, your company gets fined, and your users find out about it on TechCrunch.

The Healthcare Startup That Almost Lost Everything

The Setup: Series A health tech startup, smart engineers who knew their shit, solid product, growing user base. They had a custom Debezium setup that worked great... until it didn't.

The Fuck-Up: Their CDC pipeline was replicating patient data from PostgreSQL to their analytics warehouse. Everything looked secure on paper - SSL encryption, VPC networks, proper authentication. Problem was, nobody thought about field-level encryption for PII columns because "the transport is already encrypted."

The Discovery: During Series B due diligence, some investor's security guy was poking around their Kafka cluster and found patient SSNs, birthdates, medical record numbers - all just sitting there in plain text in the topics. Transport layer was encrypted, sure, but once you're inside Kafka you could read everything. Took him maybe 10 minutes to pull up a console consumer and show them live patient data scrolling by.

The Damage:

- Fundraising got pushed back 6 months while they unfucked everything

- Burned through something like $180K on consultants (I saw the invoices, it was fucking expensive)

- Had to send "we might have leaked your medical data" letters to 50,000 people

- Lead investor noped out, next round valued them 40% lower because "security risk"

What They Should Have Done: Field-level encryption in the Schema Registry. PostgreSQL transparent data encryption. And basic fucking HIPAA compliance - PII gets encrypted everywhere, not just during transport. But they figured "SSL is encryption" and called it a day.

The E-commerce Company That Got GDPR'd

The Nightmare: Mid-size e-commerce company with EU customers. Their CDC setup replicated user behavior data from their main database to marketing systems and analytics platforms in real-time.

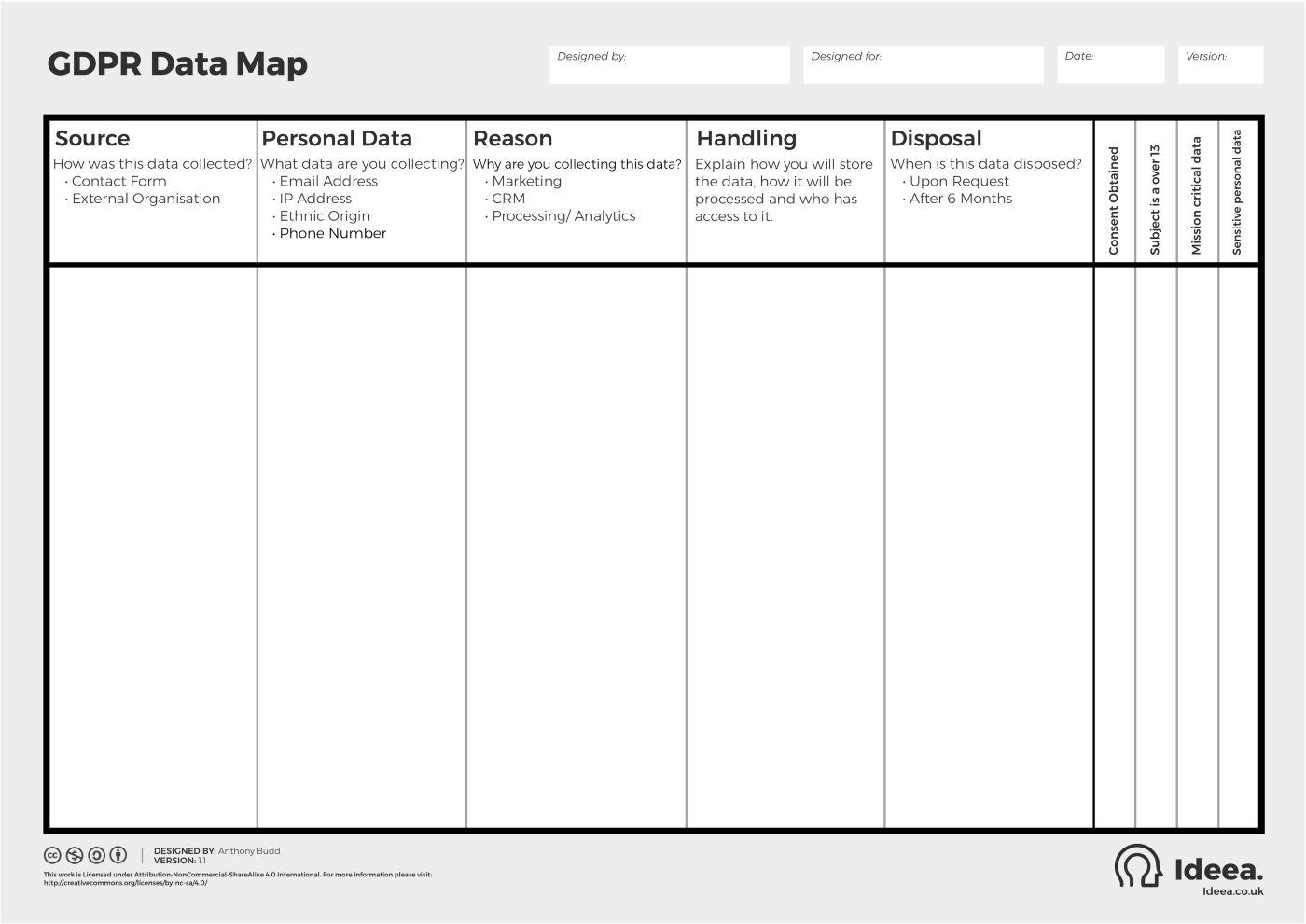

The Problem: Under GDPR, users can request data deletion ("right to be forgotten"). But their CDC setup had already replicated personal data to 12 different downstream systems across 3 countries. When users requested deletion, the company couldn't track or delete all the copies.

The Fine: €2.1M GDPR fine. Yeah, that's not a typo - over 2 million euros. Because they couldn't prove they could delete user data from all their systems.

The Lesson: GDPR's "right to be forgotten" doesn't give a shit about your real-time pipeline complexity. You need to track where every piece of data goes and be able to delete it on demand. They thought they could figure this out later. Spoiler: you can't.

The Fintech That Learned About CVE Vulnerabilities the Hard Way

The Setup: Fintech company using Debezium 1.9.0 to replicate transaction data for risk analysis and fraud detection.

The Security Alert: CVE-2024-1597 - SQL injection vulnerability in pgjdbc, the PostgreSQL JDBC driver that the Debezium PostgreSQL connector ships with. CVSS rating: Critical. Remote attackers could potentially execute arbitrary SQL queries.

The Response: Security team freaked out and demanded an immediate upgrade. Of course, this happened during their "production freeze" period before a major product launch. Worse, Debezium 2.x had breaking schema changes, so upgrading meant rebuilding half their connectors and probably 2-3 days of downtime to test everything.

The Choice: Risk getting hacked with the old vulnerable version, or risk missing their product launch with upgrade downtime. Spoiler: there's no good answer here.

The Outcome: They burned like $80K (maybe $85K? I wasn't tracking receipts) on emergency consulting to do the upgrade over a weekend. Learned that security patch management for CDC needs to be planned way in advance. Also learned that their "production freeze" policy was complete bullshit when compliance is breathing down your neck.

Why CDC Security Is Different From Regular Database Security

Data in Motion vs. Data at Rest

Traditional database security focuses on data at rest - encryption, access controls, audit logs. CDC creates new attack surfaces because data is constantly moving between systems.

Your data might be secure in PostgreSQL but vulnerable in:

- Kafka topics (TLS and disk encryption don't help here: anyone with read access to a topic sees plaintext records)

- Network transmission (SSL misconfigurations are common)

- Downstream systems (analytics warehouses often have weaker security)

- Log files (CDC errors can leak data into application logs)

- Monitoring systems (metrics and alerts can expose data patterns)

The Replication Lag Window

During CDC replication lag, your security posture becomes inconsistent. A user gets deleted from the source database, but their data still exists in downstream systems for minutes or hours. During that window:

- Access control checks might pass in some systems, fail in others

- Regulatory compliance is technically violated

- Audit trails become inaccurate

- Data lineage tracking breaks down

Third-Party Component Risks

CDC typically involves multiple components with different security models:

- Apache Kafka: Built for performance, security was an afterthought

- Debezium: Open source with limited security focus until recently

- Schema Registry: Stores schema definitions that can reveal data structure

- Kafka Connect: Runs with broad database permissions

- Monitoring tools: Often have access to data samples for troubleshooting

The Attack Vectors Nobody Talks About

Schema Evolution as Data Leakage

Schema Registry stores complete table schemas, including column names, data types, and constraints. This metadata can reveal business logic, data relationships, and sensitive field names to anyone with access.

I've seen schema registries that exposed:

- `credit_card_number` column definitions

- `ssn_encrypted` field names (revealing that SSNs exist)

- Foreign key relationships showing data connections

- Historical schema versions showing deleted sensitive columns
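If you want a rough idea of how much your registry gives away, you can scan the schemas for field names that look sensitive. The sketch below assumes Avro record schemas as JSON strings; the pattern list, the function name `flag_sensitive_fields`, and the example schema are all made up for illustration, and simple name matching like this will miss things and throw false positives.

```python
import json
import re

# Hypothetical patterns; tune these to your own naming conventions.
SENSITIVE_PATTERNS = [
    re.compile(p, re.IGNORECASE)
    for p in (r"ssn", r"social_security", r"credit_card", r"card_number",
              r"birth", r"medical_record", r"passport")
]


def flag_sensitive_fields(schema_json: str) -> list[str]:
    """Return field names in an Avro schema that match a sensitive pattern."""
    flagged: list[str] = []

    def walk(node) -> None:
        if isinstance(node, list):          # Avro union, e.g. ["null", "string"]
            for member in node:
                walk(member)
        elif isinstance(node, dict):
            if node.get("type") == "record":
                for field in node.get("fields", []):
                    name = field.get("name", "")
                    if any(p.search(name) for p in SENSITIVE_PATTERNS):
                        flagged.append(name)
                    walk(field.get("type"))  # descend into nested records
            else:
                # arrays, maps, or a wrapped type definition
                walk(node.get("type"))
                walk(node.get("items"))
                walk(node.get("values"))

    walk(json.loads(schema_json))
    return flagged


# Made-up schema, shaped like what a CDC connector might register.
EXAMPLE_SCHEMA = json.dumps({
    "type": "record",
    "name": "users",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "ssn_encrypted", "type": ["null", "string"]},
        {"name": "billing", "type": {
            "type": "record",
            "name": "billing",
            "fields": [{"name": "credit_card_number", "type": "string"}],
        }},
    ],
})

print(flag_sensitive_fields(EXAMPLE_SCHEMA))
# → ['ssn_encrypted', 'credit_card_number']
```

Run something like this against every subject and every historical version, not just the latest one, since old versions are where the "deleted" columns live.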

CDC Error Messages Containing Data

When CDC fails, error messages often include data samples for debugging. These logs get stored in centralized logging systems, monitoring platforms, and support tickets.

Example error message from production:

Failed to process record: {"user_id": 12345, "email": "john.doe@company.com", "ssn": "123-45-6789", "credit_score": 750}

That single error message just leaked PII to whoever has access to application logs.
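One cheap mitigation is to scrub log messages before they leave the process. Here's a minimal sketch using Python's standard `logging` module. The two regexes and the `RedactingFilter` name are my own assumptions, they only catch US-style SSNs and email addresses, and a regex filter is a safety net, not a substitute for keeping record contents out of error messages in the first place.

```python
import logging
import re

# Assumed patterns: US-style SSNs and email addresses only.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(text: str) -> str:
    """Replace anything that looks like an SSN or email with a placeholder."""
    text = SSN_RE.sub("[REDACTED-SSN]", text)
    return EMAIL_RE.sub("[REDACTED-EMAIL]", text)


class RedactingFilter(logging.Filter):
    """Scrub the fully formatted message before any handler writes it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())  # format first, then scrub
        record.args = None                        # args are already merged in
        return True                               # never drop the record


# Attach to the handler so records from every logger pass through it.
handler = logging.StreamHandler()
handler.addFilter(RedactingFilter())
log = logging.getLogger("cdc")
log.addHandler(handler)

log.error('Failed to process record: {"email": "%s", "ssn": "%s"}',
          "john.doe@company.com", "123-45-6789")
```

The filter goes on the handler rather than the logger because logger-level filters don't see records that propagate up from child loggers.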

Kafka Consumer Group Persistence

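Kafka keeps consumer offsets and group metadata in the internal __consumer_offsets topic for as long as a group is active, and for offsets.retention.minutes (7 days by default) after it goes empty. This data can reveal: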

- Which systems consume which data streams

- Processing patterns and delays

- System architecture and data flow topology

- When security incidents occurred (offset resets)

Debugging and Development Exposure

Developers debugging CDC issues often:

- Copy production Kafka topics to development environments

- Extract data samples for schema testing

- Enable verbose logging that includes record contents

- Create test consumers that process real data

Without proper data governance, production PII ends up in development systems, developer laptops, and test databases.

What Actually Works for CDC Security

Start with Data Classification

Before implementing CDC, classify your data:

- Public: Can be replicated anywhere (product catalogs, marketing content)

- Internal: Requires access controls but not encryption (employee directories)

- Confidential: Requires encryption and strict access (financial records)

- Restricted: Heavily regulated with specific requirements (PII, PHI, PCI data)

Don't replicate restricted data unless you absolutely need it. Every downstream system multiplies your compliance burden.
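A classification is only useful if something enforces it. As a rough sketch, you could keep a column catalog and generate the connector's exclusion list from it. The catalog contents and function names below are hypothetical. The output is meant for Debezium's `column.exclude.list` property, which takes comma-separated regular expressions matching fully-qualified column names (schema.table.column for PostgreSQL).

```python
import re
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical catalog: fully-qualified column name -> classification.
CATALOG = {
    "public.products.name": Sensitivity.PUBLIC,
    "public.employees.email": Sensitivity.INTERNAL,
    "public.payments.amount": Sensitivity.CONFIDENTIAL,
    "public.patients.ssn": Sensitivity.RESTRICTED,
    "public.patients.medical_record_number": Sensitivity.RESTRICTED,
}


def columns_to_exclude(catalog: dict[str, Sensitivity],
                       max_allowed: Sensitivity) -> list[str]:
    """Columns classified above what this pipeline is cleared to carry."""
    return sorted(col for col, level in catalog.items() if level > max_allowed)


def exclude_list_value(catalog: dict[str, Sensitivity],
                       max_allowed: Sensitivity) -> str:
    """Build a comma-separated regex list; dots are escaped to match literally."""
    return ",".join(re.escape(col) for col in columns_to_exclude(catalog, max_allowed))


print(exclude_list_value(CATALOG, Sensitivity.CONFIDENTIAL))
```

Note the gap: a column that isn't in the catalog never gets excluded. In practice you'd probably want the opposite default, where anything unclassified is treated as restricted until someone says otherwise.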

Implement Defense in Depth

- Network isolation: VPCs, security groups, private subnets

- Encryption everywhere: TLS for transit, encryption at rest for storage

- Authentication and authorization: SASL/SCRAM for Kafka, role-based access

- Field-level encryption: Encrypt PII columns before they enter CDC pipelines

- Data masking: Replace sensitive values with pseudonymous identifiers

- Audit logging: Track who accessed what data when
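To make the data masking item concrete, here's a minimal sketch of keyed pseudonymization with HMAC-SHA256 from the standard library. The function names, the field set, and the hard-coded key are assumptions for illustration; a real key belongs in a KMS or secrets manager. This is masking, not field-level encryption: the tokens can't be reversed, which is fine for analytics joins but useless if a downstream system needs the original value back.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic keyed token: same input and key always give the same output."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record: dict, pii_fields: set[str], key: bytes) -> dict:
    """Return a copy of the record with PII fields replaced by tokens."""
    return {
        name: pseudonymize(str(value), key)
        if name in pii_fields and value is not None
        else value
        for name, value in record.items()
    }


# Illustration only: never hard-code a real key.
DEMO_KEY = b"demo-key-do-not-use-in-production"
PII_FIELDS = {"email", "ssn"}

row = {"user_id": 12345, "email": "john.doe@company.com",
       "ssn": "123-45-6789", "credit_score": 750}
masked = mask_record(row, PII_FIELDS, DEMO_KEY)

print(masked["user_id"], masked["credit_score"])  # untouched: 12345 750
print(len(masked["ssn"]))                         # 64 hex characters
```

Using a keyed HMAC instead of a plain hash matters here. SSNs have so little entropy that an unkeyed SHA-256 of one can be brute-forced by anyone with a laptop.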

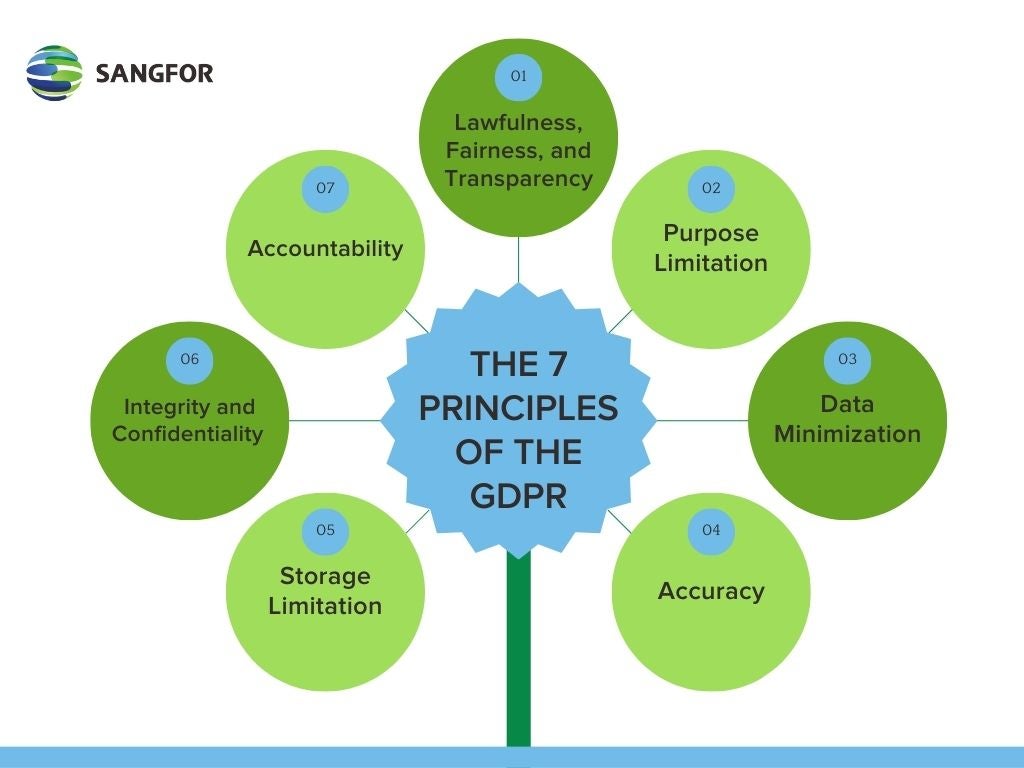

Plan for Regulatory Compliance from Day One

- Data lineage tracking: Know where every piece of data gets replicated

- Retention policies: Automatically delete data after compliance periods

- Right to deletion: Implement cascading deletes across all downstream systems

- Access controls: Principle of least privilege for all CDC components

- Incident response: Procedures for data breaches in real-time systems
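Lineage tracking and right to deletion are really the same mechanism: a registry of where each dataset goes, plus a delete hook per destination. A bare-bones sketch follows, with every name in it (`LineageRegistry`, the sink names, the in-memory "warehouse") invented for illustration. A real version would need persistence, retries, and an audit trail.

```python
from dataclasses import dataclass, field
from typing import Callable

# A delete hook takes a user id and returns True once deletion is confirmed.
DeleteFn = Callable[[str], bool]


@dataclass
class LineageRegistry:
    # dataset name -> {sink name -> delete hook}
    sinks: dict[str, dict[str, DeleteFn]] = field(default_factory=dict)

    def register(self, dataset: str, sink_name: str, delete_fn: DeleteFn) -> None:
        """Record that `dataset` is replicated to `sink_name`."""
        self.sinks.setdefault(dataset, {})[sink_name] = delete_fn

    def erase_user(self, user_id: str) -> dict[str, bool]:
        """Fan the deletion out to every sink and report what was confirmed."""
        report: dict[str, bool] = {}
        for dataset, sinks in self.sinks.items():
            for sink_name, delete_fn in sinks.items():
                try:
                    ok = bool(delete_fn(user_id))
                except Exception:
                    ok = False  # an unreachable sink counts as NOT deleted
                report[f"{dataset}->{sink_name}"] = ok
        return report


# Stand-ins for real downstream systems.
warehouse = {"u1": {"email": "a@example.com"}, "u2": {"email": "b@example.com"}}


def delete_from_warehouse(user_id: str) -> bool:
    warehouse.pop(user_id, None)
    return user_id not in warehouse


def delete_from_crm(user_id: str) -> bool:
    raise ConnectionError("CRM unreachable")


registry = LineageRegistry()
registry.register("users", "warehouse", delete_from_warehouse)
registry.register("users", "crm", delete_from_crm)

print(registry.erase_user("u1"))
# → {'users->warehouse': True, 'users->crm': False}
```

The report is the important part. Any `False` in it is a copy you can't prove you deleted, which is exactly what got the e-commerce company fined.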

The companies that get CDC security right treat it as a regulatory compliance problem, not a technical problem. They involve legal, compliance, and security teams from the architecture phase, not after the first audit failure.

Look, CDC security isn't about following security theater checklists. It's about not being the engineer who has to explain to the board why customer SSNs are trending on Twitter.

The companies that get this right start with the assumption that their CDC pipeline will be attacked. They build security controls that work when everything is on fire, not just during the demo.