DMS is AWS's database migration service. Sometimes it works great, sometimes you'll want to throw your laptop out the window. The marketing says it's migrated "1.5 million databases" but they don't mention how many of those took 3x longer than expected or made developers cry over data transfer bills.

DMS is basically a fancy ETL tool running on EC2 instances you pay for by the hour. Reads from your old database, transforms shit if needed, writes to your new database. Simple concept, but the devil's in the 47 different configuration parameters AWS didn't bother explaining properly.

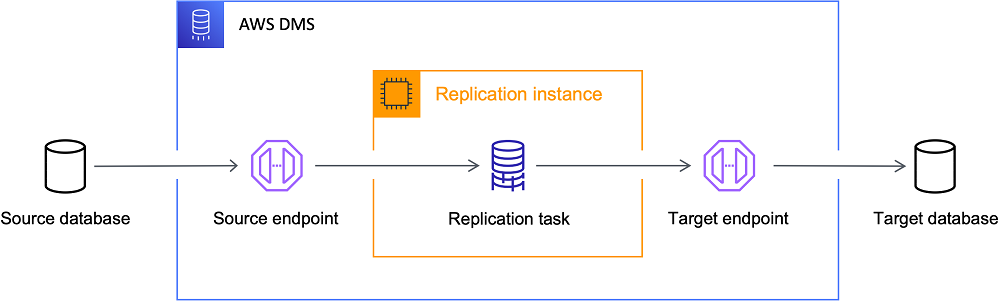

How It Actually Works

Setup's pretty straightforward: create endpoints for the source and target databases, spin up a replication instance, then create a migration task that ties them together. The replication instance is just an EC2 box running DMS software that does the actual work.
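To make those setup pieces concrete, here's a rough sketch of the endpoint and replication instance requests as plain Python dicts, shaped like the keyword arguments boto3's `create_endpoint` and `create_replication_instance` accept. Every identifier, hostname, and size is a made-up placeholder, and the helper functions are mine, not part of any AWS SDK.

```python
# Sketch: the endpoint and instance requests as boto3-style payloads.
# All identifiers, hostnames, and sizes are hypothetical placeholders.

def endpoint_request(identifier, endpoint_type, engine, host, port, database=None):
    """Build keyword arguments for the DMS CreateEndpoint call."""
    if endpoint_type not in ("source", "target"):
        raise ValueError("endpoint_type must be 'source' or 'target'")
    request = {
        "EndpointIdentifier": identifier,
        "EndpointType": endpoint_type,
        "EngineName": engine,
        "ServerName": host,
        "Port": port,
        # Username/Password left out on purpose; load them from a secrets store.
    }
    if database is not None:
        request["DatabaseName"] = database
    return request


def replication_instance_request(identifier, instance_class="dms.t3.medium", storage_gb=50):
    """Build keyword arguments for the DMS CreateReplicationInstance call."""
    return {
        "ReplicationInstanceIdentifier": identifier,
        "ReplicationInstanceClass": instance_class,
        "AllocatedStorage": storage_gb,  # in GB
        "PubliclyAccessible": False,
    }


source = endpoint_request("legacy-mysql", "source", "mysql", "db.internal.example", 3306)
target = endpoint_request("new-postgres", "target", "postgres", "pg.internal.example", 5432, "shop")
instance = replication_instance_request("migration-box")

# With boto3 installed and credentials configured, these would be sent as:
#   dms = boto3.client("dms")
#   dms.create_endpoint(**source)
#   dms.create_endpoint(**target)
#   dms.create_replication_instance(**instance)
```

Building the payloads separately from the API calls means you can review or diff them before anything billable gets created.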

Full Load: Copies all your existing data. Works fine for small databases under 100GB. Anything bigger and you're looking at hours or days depending on your network.
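For a feel of what "hours or days" means, here's back-of-the-envelope arithmetic. It assumes the network is the only bottleneck, which is optimistic: LOB columns, target write speed, and the replication instance size can all be slower than the wire. The `efficiency` factor is a guess, not a measured DMS number.

```python
def estimate_full_load_hours(size_gb, link_mbps, efficiency=0.7):
    """Rough wall-clock estimate for copying size_gb over a link_mbps link.

    efficiency is an assumed fraction of the link the load actually sustains.
    """
    if size_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("size must be >= 0, link > 0, efficiency in (0, 1]")
    megabits = size_gb * 8 * 1000  # decimal GB -> megabits
    seconds = megabits / (link_mbps * efficiency)
    return seconds / 3600


for size_gb in (100, 1000, 5000):
    hours = estimate_full_load_hours(size_gb, link_mbps=100)
    print(f"{size_gb} GB over 100 Mbps: roughly {hours:.0f} h")
```

At a perfectly used 100 Mbps, 1 TB takes about 22 hours, so a skinny VPN link pushes a multi-terabyte database into days before anything else goes wrong.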

CDC (Change Data Capture): Keeps reading transaction logs from your source database and applying changes to the target. This is where it gets tricky - CDC lag can spike during high transaction periods, and you'll need to watch CloudWatch metrics like CDCLatencySource and CDCLatencyTarget like a hawk.
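Here's a minimal sketch of what watching the lag can look like. It builds a CloudWatch `GetMetricStatistics` query for the `AWS/DMS` namespace and classifies the worst datapoint. The thresholds are arbitrary assumptions, the instance and task identifiers are placeholders, and the sample datapoints are invented to show the shape of the response.

```python
from datetime import datetime, timedelta, timezone


def latency_query(metric_name, instance_id, task_id, minutes=15):
    """Build kwargs for CloudWatch GetMetricStatistics on a DMS task metric."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/DMS",
        "MetricName": metric_name,  # e.g. CDCLatencySource or CDCLatencyTarget
        "Dimensions": [
            {"Name": "ReplicationInstanceIdentifier", "Value": instance_id},
            {"Name": "ReplicationTaskIdentifier", "Value": task_id},
        ],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 60,
        "Statistics": ["Maximum"],
    }


def lag_status(datapoints, warn_seconds=60, critical_seconds=300):
    """Classify the worst lag (in seconds) across CloudWatch datapoints."""
    if not datapoints:
        return "no-data"
    worst = max(point["Maximum"] for point in datapoints)
    if worst >= critical_seconds:
        return "critical"
    if worst >= warn_seconds:
        return "warn"
    return "ok"


# Invented datapoints shaped like the "Datapoints" list CloudWatch returns.
sample = [{"Maximum": 4.0}, {"Maximum": 75.0}, {"Maximum": 12.0}]
query = latency_query("CDCLatencySource", "migration-box", "my-task-id")
print(query["MetricName"], lag_status(sample))

# With boto3: boto3.client("cloudwatch").get_metric_statistics(**query)["Datapoints"]
```

Treating "no-data" as its own state matters: a stopped or failed task reports nothing, and that shouldn't read as zero lag.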

Full Load + CDC: What you'll actually end up doing. Bulk copy first, then flip on CDC to keep things in sync while you test and eventually cut over.
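The three modes map to the `MigrationType` values `full-load`, `cdc`, and `full-load-and-cdc` on a replication task. A minimal sketch of a combined task request follows, with table mappings that include every table in the listed schemas. The ARNs, task name, and schema names are placeholders, and the helper functions are hypothetical.

```python
import json


def selection_rule(rule_id, schema, table="%"):
    """One table-mapping selection rule; % is the wildcard."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": f"include-{rule_id}",
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": "include",
    }


def task_request(task_id, source_arn, target_arn, instance_arn, schemas):
    """Build kwargs for the DMS CreateReplicationTask call."""
    rules = [selection_rule(i, schema) for i, schema in enumerate(schemas, start=1)]
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": "full-load-and-cdc",  # bulk copy, then keep syncing
        "TableMappings": json.dumps({"rules": rules}),  # the API expects a JSON string
    }


request = task_request(
    "shop-cutover",
    "<source-endpoint-arn>",
    "<target-endpoint-arn>",
    "<replication-instance-arn>",
    schemas=["shop", "billing"],
)
print(json.dumps(json.loads(request["TableMappings"]), indent=2))

# With boto3: boto3.client("dms").create_replication_task(**request)
```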

What Works (And What Doesn't)

Homogeneous migrations work well: MySQL to MySQL, Oracle to Oracle. The Schema Conversion Tool handles basic stuff automatically.

Heterogeneous migrations are where things get expensive: Oracle to PostgreSQL sounds simple until you hit edge cases with stored procedures, custom data types, or triggers. The Schema Conversion Tool claims "90% automation" - that's complete bullshit unless your database is vanilla as unsalted crackers.
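One small example of the friction: Oracle stores unquoted identifiers in uppercase, while PostgreSQL folds them to lowercase, so an unmodified migration can leave you quoting `"ORDERS"` everywhere. DMS table mappings have a `convert-lowercase` transformation for this. The sketch below generates those rules for schemas, tables, and columns. The helper and the rule IDs are hypothetical, and it only covers naming, not stored procedures or custom types.

```python
import json


def lowercase_rule(rule_id, target, schema="%"):
    """Transformation rule that lowercases schema, table, or column names."""
    if target not in ("schema", "table", "column"):
        raise ValueError("target must be 'schema', 'table', or 'column'")
    locator = {"schema-name": schema}
    if target in ("table", "column"):
        locator["table-name"] = "%"
    if target == "column":
        locator["column-name"] = "%"
    return {
        "rule-type": "transformation",
        "rule-id": str(rule_id),
        "rule-name": f"lowercase-{target}",
        "rule-target": target,
        "object-locator": locator,
        "rule-action": "convert-lowercase",
    }


# IDs start at 100 so they won't collide with selection rules numbered from 1.
rules = [
    lowercase_rule(100 + i, target)
    for i, target in enumerate(("schema", "table", "column"))
]
print(json.dumps({"rules": rules}, indent=2))
```

These rules go in the same `rules` array as your selection rules, and rule IDs have to be unique across the whole mapping document.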

Network connectivity will make you want to quit tech and raise goats: Getting DMS to talk through corporate firewalls, VPNs, and security groups is half the battle. I've seen grown developers cry trying to get this shit working through a corporate proxy.
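Before blaming DMS, it helps to rule out plain TCP reachability. The check below uses only the standard library. It only tells you something if you run it from a host in the same subnet and security group as the replication instance, since your laptop's route to the database proves nothing about the instance's route. The hostnames in the comment are placeholders.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port opens within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused, timed out, unreachable, DNS failure
        return False


# Self-contained demo against a throwaway local listener.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
listener.listen(1)
demo_port = listener.getsockname()[1]
print("local listener reachable:", can_connect("127.0.0.1", demo_port))
listener.close()

# Real use would look like (hypothetical hosts):
#   for host, port in [("db.internal.example", 1521), ("pg.internal.example", 5432)]:
#       print(host, port, can_connect(host, port))
```

A passing TCP check doesn't prove credentials or TLS settings are right, but a failing one tells you to go fight with firewalls and security groups, not DMS settings.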

Version-Specific Gotchas

DMS 3.6.1 (May 2025) finally added PostgreSQL 17 support and IAM database auth for MySQL/PostgreSQL. DMS 3.5.4 introduced data masking, which is actually useful for compliance, but it also fucked up some existing transformations for Oracle → PostgreSQL migrations. Learned that one the hard way during a weekend cutover.

Data Resync capability got added recently - saves your ass when you need to re-sync specific tables without starting over. Virtual Target Mode in Schema Conversion lets you start migration planning without spinning up target databases first - actually saves money during the "will this work?" phase.

The current architecture supports about 20 database engines, but "supports" and "works reliably in production" are different things. MySQL, PostgreSQL, and Oracle tend to be the most stable. MongoDB support exists but has quirks with complex document structures.

Useful Resources for Getting Started: