Our builds were 8 minutes in January. By March they were 45 minutes. What the hell happened?

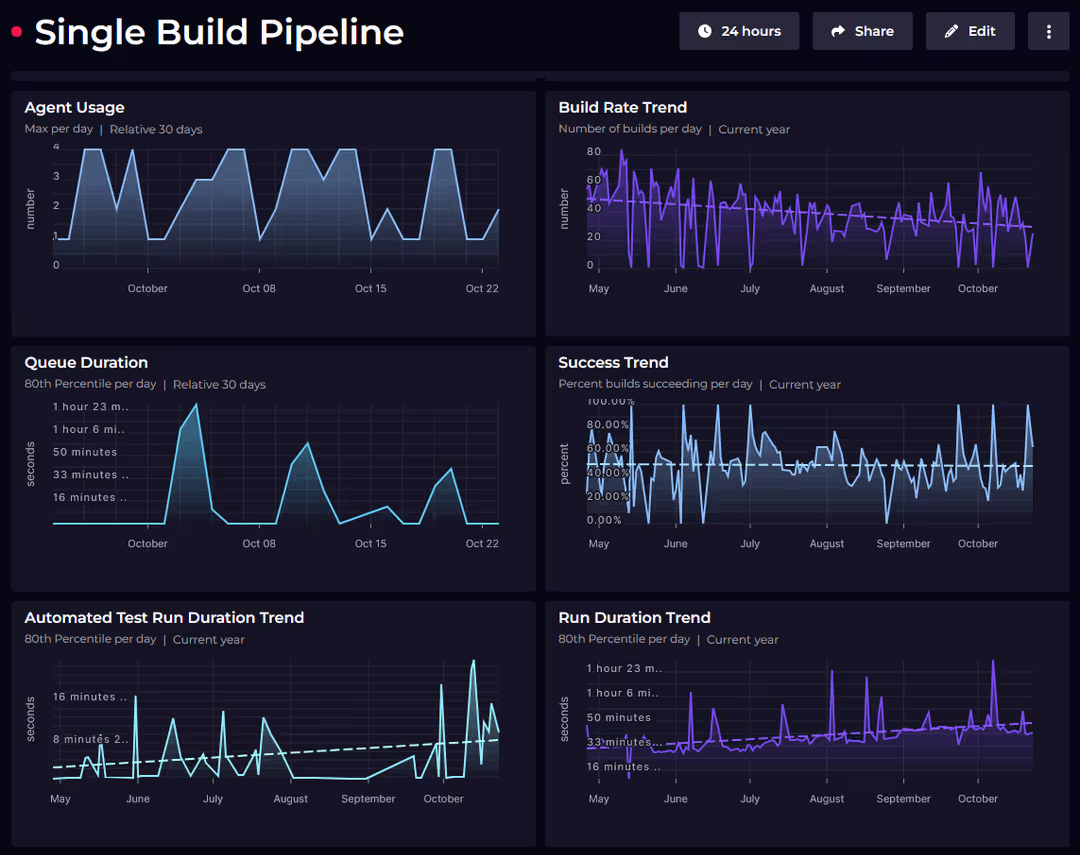

I've watched teams go from 5-minute builds to 45-minute time sinks after migrating to Azure DevOps. The free 1,800 build minutes disappear by mid-month, and on top of that half your wall-clock time is spent waiting in queues. Microsoft-hosted agents turn into molasses during peak hours. Monday at 9 AM? Good luck getting an agent before lunch.

The Real Cost of Slow Pipelines

Let's do the math that Microsoft doesn't want you to see. Your team of 10 developers commits code 3 times per day. Each build takes 30 minutes (including queue time). That's 30 builds × 30 minutes = 15 developer-hours per day spent just waiting for builds to complete.

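At around $100/hour developer cost (varies by location), that's $1,500 per working day, or about $375k a year over 250 working days if every minute of waiting is lost. Even if people claw some of it back by context-switching, you're burning somewhere around $250-300k annually (I did the math on a napkin) on pipeline inefficiency alone. I tracked this for 6 weeks in Q2 2024 after our builds became unusable. The free 1,800 minutes ran out by the 15th, because every retry, every hung step, and every minute a job spends crawling on an overloaded agent counts toward the limit.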
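If you want to redo the napkin math with your own numbers, here's a minimal Python sketch. The 250 working days and the `lost_fraction` knob (how much of the waiting is truly lost rather than recovered by switching to other work) are my assumptions, not figures from the tracking.

```python
# Back-of-the-envelope cost model for time spent waiting on CI.
# All inputs are assumptions; replace them with your own numbers.

def daily_wait_hours(developers: int, commits_per_day: int, minutes_per_build: float) -> float:
    """Developer-hours per day spent waiting on builds (queue + run time)."""
    return developers * commits_per_day * minutes_per_build / 60


def annual_wait_cost(hours_per_day: float, hourly_rate: float,
                     working_days: int = 250, lost_fraction: float = 1.0) -> float:
    """Yearly cost of that waiting.

    lost_fraction = 1.0 means none of the waiting is recovered by
    context-switching; 0.75 means a quarter of it is.
    """
    return hours_per_day * hourly_rate * working_days * lost_fraction


if __name__ == "__main__":
    hours = daily_wait_hours(developers=10, commits_per_day=3, minutes_per_build=30)
    print(f"{hours:.0f} developer-hours per day")                          # 15 developer-hours per day
    print(f"${annual_wait_cost(hours, 100):,.0f} per year")                # $375,000 per year
    print(f"${annual_wait_cost(hours, 100, lost_fraction=0.75):,.0f} per year")  # $281,250 per year
```

With `lost_fraction=0.75`, the same inputs land at $281,250, inside the $250-300k range.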

What Actually Causes the Slowdown

Microsoft-Hosted Agent Limitations: Shared agents with 2 cores and 7GB RAM get overloaded during US business hours. Peak performance varies by 20-40% based on which VM generation you get assigned. There's no SLA for queue times because fuck you, that's why.

Dependency Hell: I've seen .NET builds waste 15 minutes restoring NuGet packages that haven't changed in weeks. Node.js projects download the same npm modules every single run. Docker base images get pulled over and over because every Microsoft-hosted job starts on a fresh VM, and Azure's caching does nothing unless you wire it up yourself. This dependency management overhead kills productivity.
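The fix is to key a cache on your lockfile, so packages are only downloaded when the lockfile actually changes. Here's a conceptual Python sketch of that idea. `cache_key`, `restore`, and the `nuget|Linux|<hash>` key layout are illustrative names of mine, not the internals of any Azure task, and the in-memory dict stands in for real cache storage.

```python
# Conceptual sketch of lockfile-keyed dependency caching.
# Hypothetical helpers, not the Azure Pipelines Cache task's implementation.
import hashlib
import tempfile
from pathlib import Path


def cache_key(prefix: str, os_name: str, lockfile: Path) -> str:
    """Build a key that changes only when the lockfile's contents change."""
    digest = hashlib.sha256(lockfile.read_bytes()).hexdigest()
    return f"{prefix}|{os_name}|{digest}"


def restore(key: str, cache: dict) -> str:
    """Return 'hit' if this key was cached before; otherwise record it and return 'miss'."""
    if key in cache:
        return "hit"    # reuse previously restored packages
    cache[key] = True   # stand-in for downloading the packages and saving them
    return "miss"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        lock = Path(tmp) / "packages.lock.json"
        cache = {}
        lock.write_text("stand-in lockfile v1")
        print(restore(cache_key("nuget", "Linux", lock), cache))  # miss (first run)
        print(restore(cache_key("nuget", "Linux", lock), cache))  # hit (lockfile unchanged)
        lock.write_text("stand-in lockfile v2")
        print(restore(cache_key("nuget", "Linux", lock), cache))  # miss (lockfile changed)
```

Azure Pipelines' `Cache@2` task is built around the same idea of a key derived from files like a lockfile; check its documentation for the exact key syntax before putting anything in your YAML.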

Sequential Build Steps: Default pipeline templates run everything sequentially. Your tests could run in parallel but Microsoft's examples don't show you how. Build → Test → Deploy in series when Deploy could start as soon as Build finishes.
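To see what running stages by dependency buys you, here's a small Python sketch that compares the all-in-series time with the critical path (the longest chain of dependencies). The stage names and durations are made up for illustration, and it assumes there are enough agents to run independent stages at once.

```python
# Compare running stages one after another with running each stage as soon
# as its dependencies finish (the critical path). Durations are hypothetical.
from functools import lru_cache

STAGES = {
    # name: (duration_minutes, dependencies)
    "build": (10, []),
    "unit_tests": (8, ["build"]),
    "integration_tests": (12, ["build"]),
    "deploy_staging": (5, ["build"]),
}


def sequential_minutes(stages):
    """Wall-clock time if every stage runs in series."""
    return sum(duration for duration, _ in stages.values())


def parallel_minutes(stages):
    """Length of the longest dependency chain, assuming unlimited agents."""
    @lru_cache(maxsize=None)
    def finish(name):
        duration, deps = stages[name]
        return duration + max((finish(dep) for dep in deps), default=0)

    return max(finish(name) for name in stages)


if __name__ == "__main__":
    print(sequential_minutes(STAGES))  # 35
    print(parallel_minutes(STAGES))    # 22
```

With these numbers the pipeline drops from 35 to 22 minutes. In Azure Pipelines YAML you express the dependencies with `dependsOn` on jobs or stages, but independent jobs still wait for free agents, which is the next problem.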

Poor Resource Allocation: Parallel jobs cost $40/month each for Microsoft-hosted agents. Most teams stick with the free single job and wonder why everything takes forever.
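How much does a second or third parallel job actually help? Here's a simple first-come, first-served queue model in Python. It assumes every build takes the same time and uses a made-up Monday-morning burst of six builds arriving five minutes apart; real arrivals are messier.

```python
# First-come, first-served queue model for CI builds.
# Assumes identical build durations; arrival times are hypothetical.
import heapq


def average_wait(arrivals, build_minutes, parallel_jobs):
    """Average minutes a build sits in the queue before a job slot picks it up."""
    if not arrivals:
        return 0.0
    free_at = [0.0] * parallel_jobs  # time at which each job slot is next free
    heapq.heapify(free_at)
    total_wait = 0.0
    for arrival in sorted(arrivals):
        slot_free = heapq.heappop(free_at)  # earliest available slot
        start = max(arrival, slot_free)
        total_wait += start - arrival
        heapq.heappush(free_at, start + build_minutes)
    return total_wait / len(arrivals)


if __name__ == "__main__":
    monday_burst = [0, 5, 10, 15, 20, 25]  # minutes after 9:00
    for jobs in (1, 2, 3):
        print(jobs, average_wait(monday_burst, build_minutes=20, parallel_jobs=jobs))
    # 1 37.5
    # 2 10.0
    # 3 2.5
```

With 20-minute builds, the average queue wait goes from 37.5 minutes with one job to 10 with two and 2.5 with three, which is why medium-sized teams end up needing 2-3 parallel jobs.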

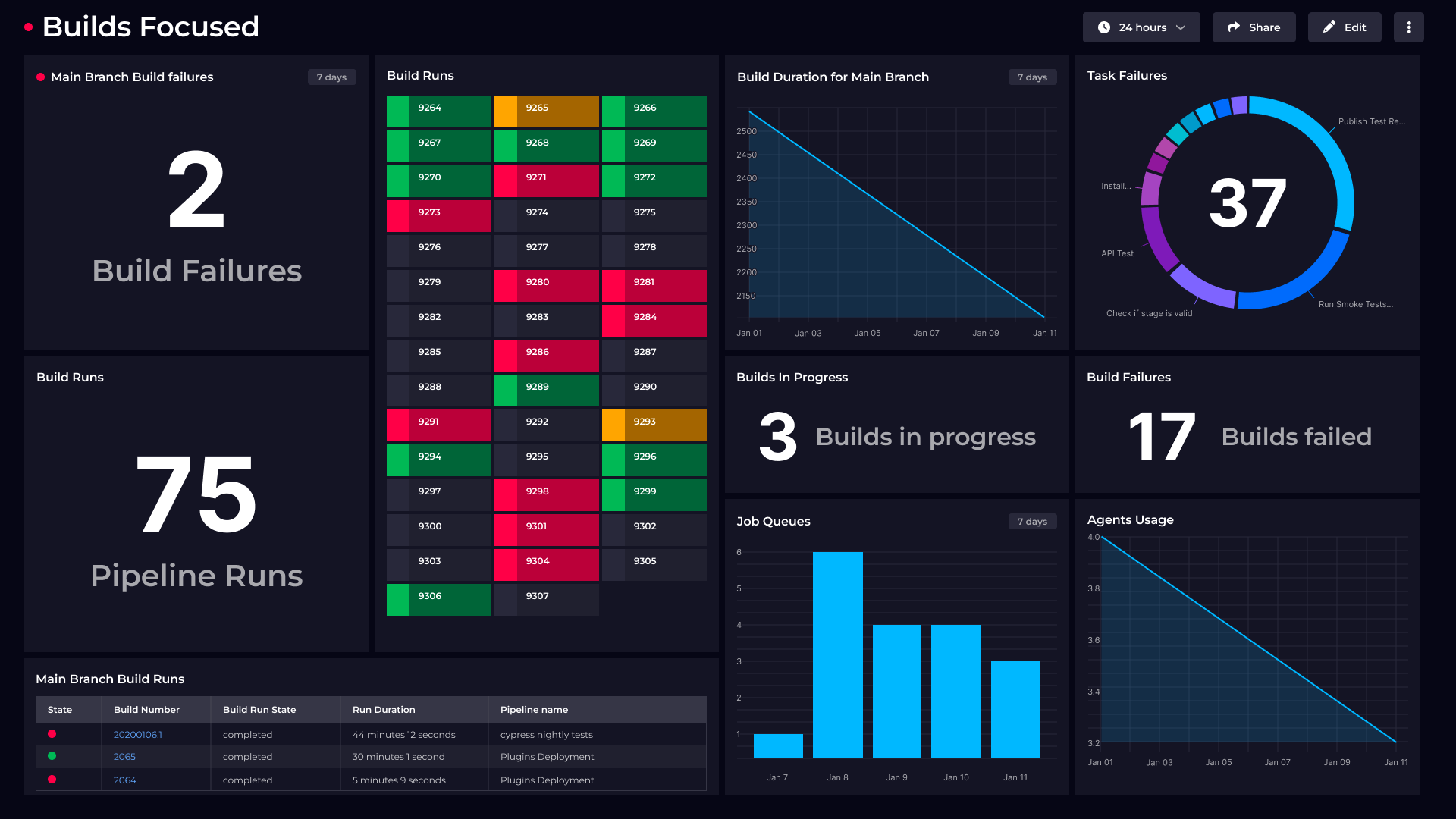

Performance Reality Check

Here's what actually happens when you scale:

- Small teams (1-5 developers): Free tier works fine until you hit business hours

- Medium teams (10-20 developers): Queue times become unbearable, need 2-3 parallel jobs minimum

- Large teams (50+ developers): Microsoft-hosted agents are unusable, self-hosted agents become mandatory

Break-even is somewhere around 20-25 builds daily, depending on how much you value your weekends. Below that, pay the $40/month. Above that, self-hosted agents save money and sanity.

The Hidden Costs Microsoft Doesn't Mention

Agent Utilization: You're paying for agents that sit idle 60% of the time because Microsoft's load balancing is inefficient. Premium agents promised "faster performance" but cost 3x more with marginal improvements.

Storage Costs: Azure Artifacts storage starts at 2 GiB free; past that you're on tiered per-GiB billing, with no warning before you cross the line. Big artifacts like Docker image tarballs eat the free tier in days.

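Timeout Penalties: Builds that time out still consume your minutes. Jobs default to a 60-minute timeout, so a misconfigured step that hangs burns a full hour: one thirtieth of the free tier's 1,800 monthly minutes, or roughly $8 in wasted agent time by our estimate.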

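Your 1,800 "free" minutes become more like 900 effective minutes once retries, hung steps, and slow agents take their cut. Plan accordingly, or get fucked when your pipelines stop running mid-month: the free tier doesn't bill overage, the jobs just don't run until next month or until you buy a parallel job.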
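To estimate when your month's budget dies, here's a tiny Python sketch. `builds_per_day`, `minutes_per_build`, and `retry_rate` are hypothetical inputs you supply; the model counts only time jobs spend running on an agent and assumes a steady daily load.

```python
# Estimate the working day on which the free tier's monthly minutes run out.
# Inputs are hypothetical; minutes_per_build means time running on the agent.
import math

FREE_MINUTES_PER_MONTH = 1_800


def day_budget_runs_out(builds_per_day, minutes_per_build, retry_rate=0.0,
                        free_minutes=FREE_MINUTES_PER_MONTH):
    """Return the 1-based working day on which the minute budget is used up."""
    minutes_per_day = builds_per_day * minutes_per_build * (1 + retry_rate)
    return math.ceil(free_minutes / minutes_per_day)


if __name__ == "__main__":
    print(day_budget_runs_out(8, 20))                   # 12
    print(day_budget_runs_out(8, 20, retry_rate=0.25))  # 9
```

A 25% retry rate pulls the cutoff from working day 12 to working day 9, which puts you in the same mid-month territory as the "out by the 15th" pattern.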

This isn't about Microsoft being evil. It's about understanding what you're actually paying for versus what the marketing promises.