Most people think running Kafka + Redis + RabbitMQ together is over-engineering. And 90% of the time, they're right. But if you're dealing with the kind of system where you need real-time user updates, massive event streams, and reliable task processing all in one architecture, welcome to the club.

The Reality Check

I've been running this combo for about 8 months in production, and here's the honest truth: Apache Kafka handles the firehose of events (user clicks, IoT data, whatever), Redis keeps frequently-accessed stuff fast (user sessions, real-time leaderboards), and RabbitMQ makes sure important workflows don't get lost (payment processing, notifications that actually matter).

Performance Numbers That Actually Matter

Forget the marketing specs. Here's what I see in production:

- Kafka: 2-3 million events/hour on a normal day. Black Friday hit us with 4M+ and everything was on fire

- Redis: Sub-5ms response times on cache hits, which cover about 95% of requests

- RabbitMQ: Around 30k messages/second, with zero lost messages for payment workflows
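Those figures mix per-hour and per-second units, so it helps to put them on one scale. A quick back-of-the-envelope sketch, assuming the Black Friday 4M+ figure is also per hour (the text doesn't say so explicitly):

```python
# Normalize the throughput figures quoted above to events per second.
SECONDS_PER_HOUR = 3600

def per_second(events_per_hour: float) -> float:
    """Convert an hourly event count to an average per-second rate."""
    return events_per_hour / SECONDS_PER_HOUR

kafka_normal_low = per_second(2_000_000)    # ~556 events/s
kafka_normal_high = per_second(3_000_000)   # ~833 events/s
kafka_peak = per_second(4_000_000)          # ~1,111 events/s (assumed per hour)
rabbitmq_rate = 30_000                      # already per second, as quoted

print(f"Kafka normal: {kafka_normal_low:.0f}-{kafka_normal_high:.0f} events/s")
print(f"Kafka peak:   {kafka_peak:.0f}+ events/s")
print(f"RabbitMQ:     {rabbitmq_rate} messages/s")
```

Keep in mind these are hourly averages. They say nothing about short bursts inside the hour, which is usually where things catch fire.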

Version numbers actually matter here (usually they don't). Kafka 3.x finally marked KRaft as production-ready, and 4.0 drops ZooKeeper completely. RabbitMQ 4.0.x doesn't randomly crash on us like the 3.x versions did. Redis 8.0, which just went GA in July, is way faster than Redis 7.x and cut our latency almost in half.

When This Actually Makes Sense

You need this unholy trinity when you've got conflicting requirements that no single system can handle:

Real-time user features need Redis - session lookups, feature flags, live leaderboards. Anything that has to respond in under 10ms or users get pissed.

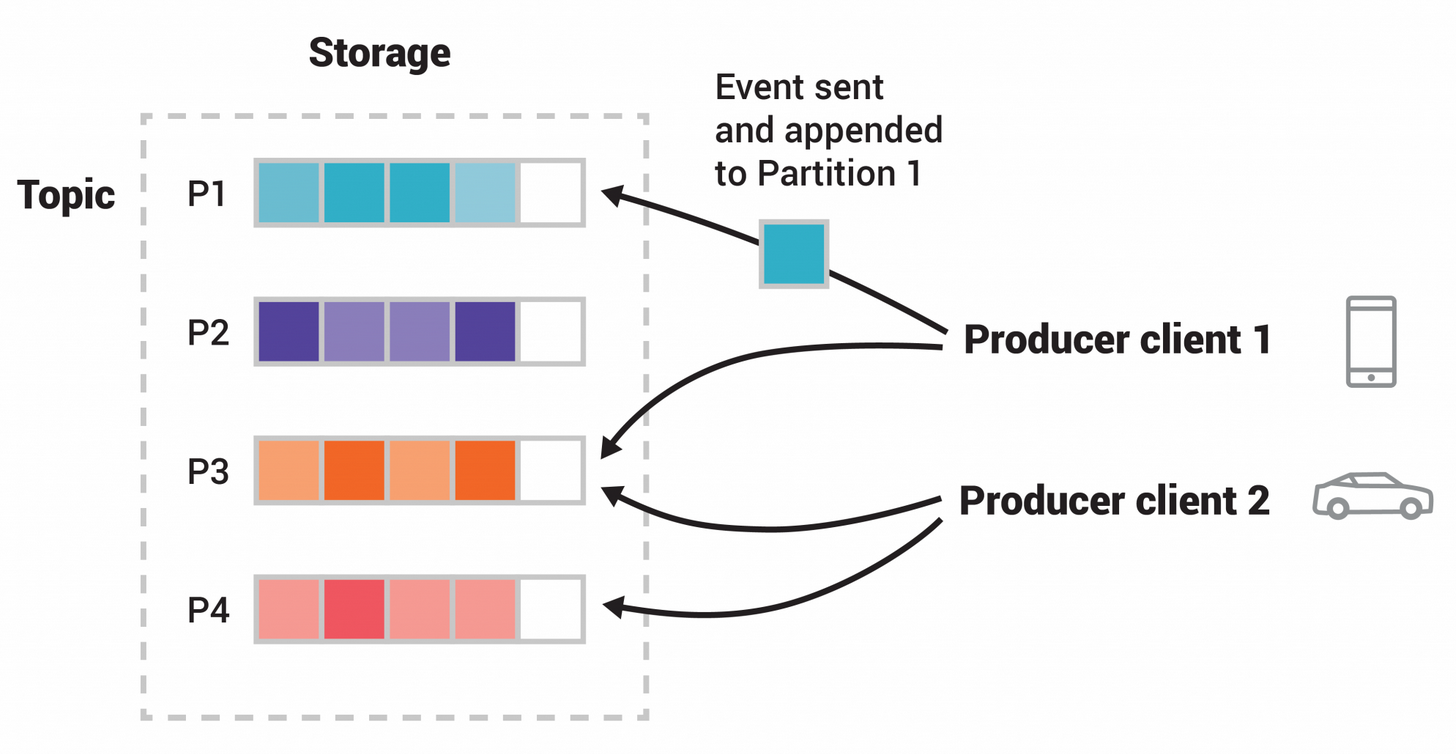

Event streaming at scale needs Kafka - audit logs, user behavior tracking, system metrics. The stuff that needs to be durable and replayable when you inevitably screw up processing.
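Replayability is the property doing the work here: consumers track an offset into an append-only log, so a buggy processor can be fixed and rerun from an earlier offset. A toy in-memory sketch of that idea (this is not Kafka's API; the `EventLog` class is made up for illustration):

```python
class EventLog:
    """Toy append-only log: records are never removed, only read by offset."""

    def __init__(self) -> None:
        self._records: list[str] = []

    def append(self, record: str) -> int:
        self._records.append(record)
        return len(self._records) - 1      # offset of the new record

    def read_from(self, offset: int) -> list[str]:
        return self._records[offset:]

log = EventLog()
for event in ["click:home", "click:cart", "click:checkout"]:
    log.append(event)

# First pass: a buggy processor that drops everything after the colon.
first_pass = [e.split(":")[0] for e in log.read_from(0)]

# After fixing the bug, replay from offset 0; the log still has every event.
replayed = [e.split(":")[1] for e in log.read_from(0)]
print(first_pass, replayed)
```

With a real Kafka consumer, the equivalent move is seeking back to an earlier offset or resetting the consumer group's offsets, within whatever retention the topic is configured for.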

Critical workflows need RabbitMQ - payment processing, order fulfillment, anything that legally can't get lost. The boring but important stuff that keeps the business running.
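What "can't get lost" means in practice is that a message leaves the queue only after the consumer acknowledges it, and a failed handler puts it back. A toy sketch of that acknowledge-or-requeue loop, using an in-memory deque rather than a real broker (the handler and its failure are contrived):

```python
from collections import deque

queue = deque(["payment:1001", "payment:1002"])
processed: list[str] = []
attempts: dict[str, int] = {}

def handle(message: str) -> None:
    """Contrived handler: payment 1002 fails on its first attempt only."""
    attempts[message] = attempts.get(message, 0) + 1
    if message == "payment:1002" and attempts[message] == 1:
        raise RuntimeError("downstream timeout")
    processed.append(message)

while queue:
    message = queue.popleft()        # delivered, but not yet acknowledged
    try:
        handle(message)              # success acts as the ack: message is gone
    except RuntimeError:
        queue.append(message)        # nack: requeue so it is retried later

print(processed, attempts)
```

With RabbitMQ this roughly corresponds to manual acknowledgements on a durable queue with persistent messages. Redelivery also means a handler can see the same message twice, so payment handlers need to be idempotent.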

The Gotchas That Will Bite You

Message routing is where dreams die. You need to decide upfront what goes where, or you'll end up with a mess like we had initially - audit logs scattered across two systems, payment confirmations sometimes going to Redis (facepalm).
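One way to decide upfront is a single routing table that every producer goes through, so an unknown message type fails loudly instead of landing in the wrong system. A sketch with made-up message type names:

```python
from enum import Enum

class Backend(Enum):
    KAFKA = "kafka"
    REDIS = "redis"
    RABBITMQ = "rabbitmq"

# Hypothetical message types; the split follows the roles described earlier.
ROUTES: dict[str, Backend] = {
    "audit_log": Backend.KAFKA,
    "user_click": Backend.KAFKA,
    "session_update": Backend.REDIS,
    "leaderboard_score": Backend.REDIS,
    "payment_confirmation": Backend.RABBITMQ,
    "order_fulfillment": Backend.RABBITMQ,
}

def route(message_type: str) -> Backend:
    """Return the one backend a message type belongs to, or fail loudly."""
    try:
        return ROUTES[message_type]
    except KeyError:
        raise ValueError(f"no route defined for {message_type!r}") from None

print(route("payment_confirmation"))   # Backend.RABBITMQ
```

Because each type maps to exactly one backend, "audit logs scattered across two systems" can't happen by accident. Someone has to edit the table.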

Monitoring becomes a shitshow. You'll have Kafka metrics in one place, Redis stuff somewhere else, RabbitMQ in a third dashboard. Good luck correlating issues at 3am when everything's on fire.
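Short of a unified dashboard, one cheap trick is merging alerts from all three systems into a single timeline and looking at what fired around the same moment. A sketch with invented alert data and a made-up `Alert` type:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Alert:
    ts: float        # seconds since epoch
    system: str
    message: str

def around(alerts: list[Alert], anchor: Alert, window_s: float) -> list[Alert]:
    """Alerts from other systems within window_s seconds of the anchor, in time order."""
    nearby = [
        a for a in alerts
        if a.system != anchor.system and abs(a.ts - anchor.ts) <= window_s
    ]
    return sorted(nearby, key=lambda a: a.ts)

# Invented sample data for illustration.
alerts = [
    Alert(1000.0, "kafka", "consumer lag rising"),
    Alert(1004.0, "redis", "memory above 90%"),
    Alert(1007.0, "rabbitmq", "queue depth growing"),
    Alert(1300.0, "redis", "evictions spiking"),
]

for a in around(alerts, alerts[2], window_s=10):
    print(a.ts, a.system, a.message)
```

Starting from the RabbitMQ alert, this surfaces the Kafka and Redis alerts that fired in the preceding seconds and ignores the unrelated one five minutes later.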

Deployment coordination sucks. Three systems means three different config formats, three different scaling patterns, three different ways for your deployment to fail halfway through.
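One way to make halfway failures less painful is to roll out in a fixed order and undo completed steps in reverse when one fails. A sketch with placeholder deploy functions (the step names and the simulated failure are hypothetical):

```python
from typing import Callable

Step = tuple[str, Callable[[], None], Callable[[], None]]  # name, apply, rollback

def rollout(steps: list[Step]) -> list[str]:
    """Apply steps in order; on failure, roll back the completed ones in reverse."""
    log: list[str] = []
    done: list[Step] = []
    for step in steps:
        name, apply, _ = step
        try:
            apply()
        except Exception as exc:
            log.append(f"failed {name}: {exc}")
            for prev_name, _, rollback in reversed(done):
                rollback()
                log.append(f"rolled back {prev_name}")
            return log
        done.append(step)
        log.append(f"applied {name}")
    return log

def ok() -> None:
    pass

def broken() -> None:
    raise RuntimeError("config rejected")

result = rollout([("redis", ok, ok), ("kafka", ok, ok), ("rabbitmq", broken, ok)])
print(result)
```

In this run the RabbitMQ step fails, so the Kafka and Redis steps are rolled back in that order and nothing is left half-deployed.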