Django wasn't built for background work. Try processing a 10MB file upload inline and watch your server burn. I learned this when our report generation endpoint regularly hit 30-second timeouts during lunch rush - turns out accounting people all export reports at exactly 12:15 PM.

What Goes Wrong With Synchronous Django

The stuff that breaks everything:

- Sending emails - blocks HTTP thread for 2-8 seconds depending on SMTP

- Image resizing - 50MB photos will eat your CPU alive

- PDF generation - memory usage spikes to 2GB+ for complex reports

- Data exports - CSV with 100k rows takes 45 seconds to build

- Third-party API calls - external services go down, your requests hang

Real failure story: Our customer uploaded a 47MB product photo during peak traffic. Django tried to resize it inline, consumed 3GB RAM, triggered OOMKilled, took down the whole container. 200 users got 502 errors because one person uploaded a massive image.

Error log looked like this:

[2025-08-15 12:23:45] ERROR django.request: Internal Server Error: /upload/

[2025-08-15 12:23:47] CRITICAL gunicorn.error: WORKER TIMEOUT (pid:1847)

[2025-08-15 12:23:48] WARNING kernel: [15234.567890] Memory cgroup out of memory: Killed process 1847 (gunicorn: worker) score 1000 or total-vm:3145728kB, anon-rss:2097152kB

Why Django Async Views Don't Fix This

Django has had async views since 3.1 (4.1 added the async ORM interface), but they're useless for CPU-intensive work. `async def` only helps with I/O waiting - database queries, HTTP requests, file reads. But image processing, PDF generation, data crunching? Still blocks the event loop.

Plus async Django is a pain in the ass to debug. Stack traces get weird, database connections act funny, and most third-party packages don't support it anyway.
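If you want to see the event-loop problem for yourself, here's a rough stdlib-only sketch. It doesn't touch Django at all: `cpu_bound` is a made-up busy loop standing in for image or PDF work, and `measure_max_gap` is a hypothetical helper that runs a 10 ms ticker next to the work and reports the longest stall the ticker saw.

```python
import asyncio
import time


def cpu_bound(duration: float) -> None:
    """Busy-loop for `duration` seconds; a stand-in for resizing or PDF work."""
    deadline = time.perf_counter() + duration
    while time.perf_counter() < deadline:
        pass


async def inline_work(duration: float) -> None:
    # CPU work inside a coroutine never yields, so nothing else can run.
    cpu_bound(duration)


async def io_work(duration: float) -> None:
    # Awaiting I/O (simulated with sleep) hands control back to the loop.
    await asyncio.sleep(duration)


async def measure_max_gap(work, duration: float = 0.3) -> float:
    """Run `work` next to a 10 ms ticker; return the longest gap between ticks."""
    gaps = []

    async def ticker() -> None:
        last = time.perf_counter()
        while True:
            await asyncio.sleep(0.01)
            now = time.perf_counter()
            gaps.append(now - last)
            last = now

    task = asyncio.create_task(ticker())
    await asyncio.sleep(0.05)  # let the ticker record a few normal ticks
    await work(duration)
    await asyncio.sleep(0.05)  # let the ticker observe the stall, if any
    task.cancel()
    try:
        await task
    except asyncio.CancelledError:
        pass
    return max(gaps)


if __name__ == "__main__":
    print(f"CPU-bound coroutine: {asyncio.run(measure_max_gap(inline_work)):.3f}s")
    print(f"I/O-bound coroutine: {asyncio.run(measure_max_gap(io_work)):.3f}s")
```

The CPU-bound run should show a stall about as long as the work itself, while the I/O-bound run stays near the 10 ms tick. In an async view that stall is every other request waiting on the same loop.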

The Background Task Solution That Actually Works

Split your work into two phases:

- Web request: Accept the job, return immediately

- Background worker: Process the job separately, update database when done

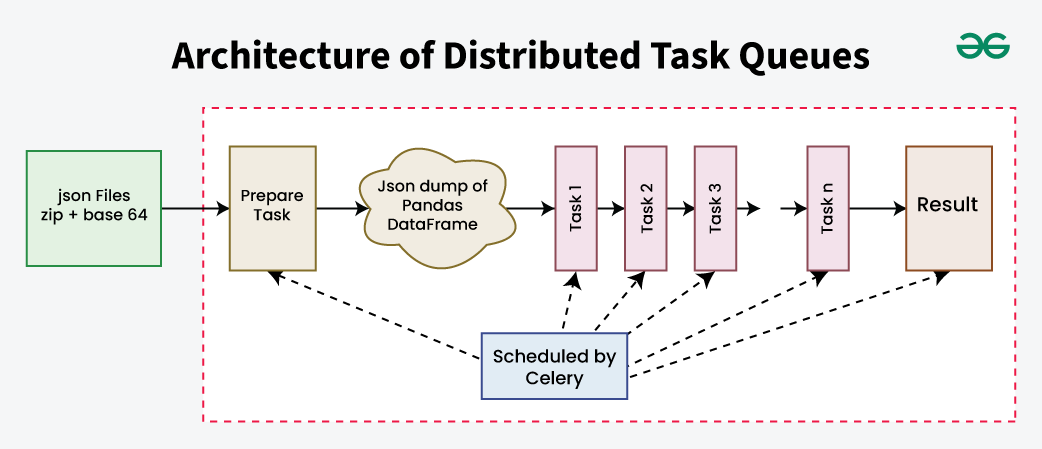

User uploads file → Django saves it, queues a task, returns "Processing..." → Celery worker handles resize → Updates database → User gets notification.

Architecture Overview: Django web servers handle HTTP requests while Celery workers process background tasks through a Redis message broker.
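To make the two phases concrete, here's a minimal sketch of the pattern using only the standard library. This is not the Celery setup itself: `queue.Queue` stands in for the Redis broker, the `job_status` dict stands in for a status column in PostgreSQL, and `handle_upload`, `resize`, and `worker` are hypothetical names I made up for illustration.

```python
import queue
import threading
import uuid

jobs = queue.Queue()            # stand-in for the Redis broker
job_status = {}                 # stand-in for a status column in PostgreSQL
status_lock = threading.Lock()


def resize(payload: bytes) -> int:
    """Hypothetical heavy step; here it just returns the payload size."""
    return len(payload)


def handle_upload(payload: bytes) -> dict:
    """Phase 1 (web request): record the job, queue it, return immediately."""
    job_id = str(uuid.uuid4())
    with status_lock:
        job_status[job_id] = "processing"
    jobs.put((job_id, payload))  # with Celery this would be some_task.delay(...)
    return {"job_id": job_id, "status": "processing"}


def worker() -> None:
    """Phase 2 (background worker): do the heavy work, then update the status."""
    while True:
        item = jobs.get()
        if item is None:         # sentinel: shut the worker down
            jobs.task_done()
            break
        job_id, payload = item
        try:
            outcome = f"done:{resize(payload)}"
        except Exception:
            outcome = "failed"
        with status_lock:
            job_status[job_id] = outcome
        jobs.task_done()


if __name__ == "__main__":
    threading.Thread(target=worker, daemon=True).start()
    response = handle_upload(b"fake image bytes")
    print(response["status"])    # the "request" has already returned here
    jobs.join()                  # wait only so the demo can show the result
    print(job_status[response["job_id"]])
    jobs.put(None)
```

The important part is that `handle_upload` never calls `resize`. It only writes a status row and queues the job, which is why the web side stays fast no matter how ugly the upload is.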

Tech stack I use:

- Redis: Message queue (because it's simple and we already use it for caching)

- Celery: Task runner (despite its networking bullshit, it works)

- PostgreSQL: Database (shared between web and workers)

- Docker: Because deployment without containers is suffering

Why Redis Instead of RabbitMQ

I tried RabbitMQ first. Spent two days fighting Erlang dependencies, cluster configuration, management UI permissions, and memory management issues. Said fuck it and went with Redis after reading the Redis vs RabbitMQ comparison.

Redis advantages:

- Already running it for Django cache, sessions, etc.

- One `docker run` command and it works

- Easy to debug with `redis-cli`

- Uses way less memory than RabbitMQ

Redis gotchas:

- Messages disappear if Redis crashes (enable AOF persistence)

- No fancy routing like RabbitMQ (we don't need it anyway)

- Memory usage can get weird with long queues

Celery Integration Hell

Celery workers import the same Django settings and models as the web process and open their own database connections. Works great until it doesn't.

Connection problems: Workers can't find Django models, import errors everywhere, database connections time out. Fixed by making sure `PYTHONPATH` and `DJANGO_SETTINGS_MODULE` are identical between web and worker containers. Check the Celery Django integration docs and Django deployment checklist for common configuration issues.
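One way to compare the two containers is to run the same small diagnostic in each and diff the output. This is a stdlib-only sketch, not part of Celery or Django; `import_environment` and `settings_importable` are hypothetical helper names.

```python
import importlib.util
import json
import os


def settings_importable(module_path: str) -> bool:
    """Return True if `module_path` can be located on the current import path."""
    if not module_path:
        return False
    try:
        return importlib.util.find_spec(module_path) is not None
    except (ImportError, ValueError):
        # A missing parent package raises instead of returning None.
        return False


def import_environment(environ=None) -> dict:
    """Collect the values that must match between web and worker containers."""
    env = os.environ if environ is None else environ
    module_path = env.get("DJANGO_SETTINGS_MODULE", "")
    return {
        "DJANGO_SETTINGS_MODULE": module_path,
        "PYTHONPATH": env.get("PYTHONPATH", ""),
        "settings_importable": settings_importable(module_path),
    }


if __name__ == "__main__":
    print(json.dumps(import_environment(), indent=2, sort_keys=True))
```

If the output differs between containers, or `settings_importable` is false in the worker, that's probably where the import errors are coming from.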

Database connection limits: PostgreSQL defaults to 100 connections. Every web worker thread and every Celery worker process holds its own connection, so with 8 web workers + 6 Celery workers + monitoring, you hit the limit fast. Had to bump to `max_connections = 200` and add connection pooling.
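The arithmetic is worth doing before you deploy. Here's a back-of-the-envelope sketch: the thread count, Celery concurrency, and "other" connections below are made-up illustrative numbers, not our production values, and the default of 3 reserved connections assumes PostgreSQL's default `superuser_reserved_connections`.

```python
def estimate_connections(web_processes, threads_per_web,
                         celery_workers, celery_concurrency, other=0):
    """Worst case: one connection per web thread and per Celery child process."""
    web = web_processes * threads_per_web
    background = celery_workers * celery_concurrency
    return web + background + other


def headroom(max_connections, needed, reserved=3):
    """Connections left over after the reserved superuser slots are set aside."""
    return max_connections - reserved - needed


if __name__ == "__main__":
    # Illustrative assumptions: 4 threads per web worker, concurrency of 8
    # per Celery worker, 5 connections for monitoring and admin shells.
    needed = estimate_connections(8, 4, 6, 8, other=5)
    print("needed:", needed)
    print("headroom at 100:", headroom(100, needed))
    print("headroom at 200:", headroom(200, needed))
```

If the headroom goes negative, or gets close to zero once you scale containers, that's the point where pooling or a higher `max_connections` stops being optional.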

Memory leaks: Celery workers grow memory over time. Set `CELERY_WORKER_MAX_TASKS_PER_CHILD = 1000` to recycle them before they eat all your RAM.
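In settings that looks roughly like the fragment below. It assumes your Celery app loads Django settings with `namespace="CELERY"`. The memory cap is an extra I'm adding for illustration; the number is an assumption, so tune it to your own workers.

```python
# settings.py (fragment). Assumes the Celery app reads Django settings with
# namespace="CELERY", so each name maps to the lowercase Celery setting.

# Recycle a worker child process after it has run this many tasks.
CELERY_WORKER_MAX_TASKS_PER_CHILD = 1000

# Assumed addition: also recycle a child once its resident memory passes this
# limit, in kilobytes. 400_000 is an illustrative value, not a recommendation.
CELERY_WORKER_MAX_MEMORY_PER_CHILD = 400_000
```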

Docker Networking Pain Points

Docker networking will fuck you. Use service names, not localhost: inside a container, localhost is that container itself, not the one running Redis. This took me 4 hours to figure out because local development worked fine but Docker containers couldn't talk to each other.

Wrong:

CELERY_BROKER_URL = 'redis://localhost:6379/1'

Right:

CELERY_BROKER_URL = 'redis://redis:6379/1' # 'redis' is the service name
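To stop hardcoding either value, you can build the URL from the environment so local development and Docker share one settings file. A minimal sketch: `broker_url` is a hypothetical helper and `REDIS_HOST` is an environment variable name I'm assuming, not something Celery or Django reads on its own.

```python
import os


def broker_url(environ=None, default_host="redis", port=6379, db=1):
    """Prefer an explicit CELERY_BROKER_URL, else build one from REDIS_HOST."""
    env = os.environ if environ is None else environ
    explicit = env.get("CELERY_BROKER_URL")
    if explicit:
        return explicit
    # Falls back to the Docker service name when REDIS_HOST is not set.
    host = env.get("REDIS_HOST", default_host)
    return f"redis://{host}:{port}/{db}"


# settings.py would then use:
CELERY_BROKER_URL = broker_url()
```

Locally you'd export `REDIS_HOST=localhost`. In Docker you leave it unset and the service name wins.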

Also, mount volumes consistently or workers can't access uploaded files. Both web and worker containers need the same volume mounts for media files.

Performance Reality Check

Before: Report generation blocked web requests for 30+ seconds, users got timeout errors, server CPU spiked to 90%+ during peak hours.

After: Report requests return instantly with "Processing..." message, background workers handle the heavy lifting, web server stays responsive even during export rushes.

Actual numbers from production:

- Response time for report requests: 850ms → 45ms

- Peak CPU usage: 85% → 35% (work spread over time)

- User timeout errors: ~50/day → 0

- Concurrent user capacity: roughly doubled

When You Don't Need This

Don't over-engineer simple apps. If your Django app handles basic CRUD operations and nothing takes longer than 200ms, stick with synchronous code.

Skip background tasks for:

- Basic blogs, portfolios, simple CMSes

- Apps with <1000 daily active users

- Operations that complete in under 1 second

- Prototypes and MVPs (add complexity later)

The setup overhead isn't worth it unless you're actually hitting performance walls.