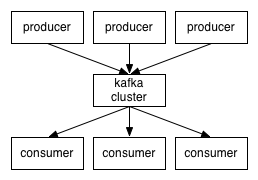

So you've decided to connect Kafka to ClickHouse. Smart choice for real-time analytics, but buckle the fuck up. Look, Kafka and ClickHouse are both solid at what they do. Kafka handles millions of events per second without breaking a sweat, and ClickHouse can run analytical queries stupid fast. But getting them to play nice together? That's where your weekend disappears.

The Real Problem Nobody Tells You About

Here's what the docs don't mention: these systems were designed for completely different workloads. Kafka wants to stream individual events as fast as possible. ClickHouse wants to ingest data in batches and compress the hell out of it. It's like trying to connect a fire hose to a funnel.

Kafka pushes individual events. ClickHouse wants batches. This mismatch will bite you in the ass repeatedly.

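We learned this the hard way when our first attempt to stream click events directly into ClickHouse brought our analytics cluster to its knees. Turns out that inserting events one at a time makes ClickHouse create a new data part for every single event. The background merges can't keep up, and eventually inserts start failing with "Too many parts" errors. Who knew?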
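The usual fix for the events-versus-batches mismatch is to buffer on the consumer side and flush in bulk. Here's a minimal Python sketch of that idea. It assumes a hypothetical `flush_fn` callback that stands in for one bulk INSERT through whatever ClickHouse client you use. The 10,000-row and 1-second defaults are illustrative, not tuned numbers.

```python
import time
from typing import Any, Callable, Dict, List, Optional


class MicroBatcher:
    """Buffer individual events and hand them off as one batch.

    Flushes when the buffer hits `max_rows` or when the oldest buffered
    event is `max_wait_s` seconds old. `flush_fn` is a hypothetical
    callback standing in for a single bulk INSERT into ClickHouse.
    """

    def __init__(self, flush_fn: Callable[[List[Dict[str, Any]]], None],
                 max_rows: int = 10_000, max_wait_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.flush_fn = flush_fn
        self.max_rows = max_rows
        self.max_wait_s = max_wait_s
        self.clock = clock  # injectable so tests can fake time
        self.buffer: List[Dict[str, Any]] = []
        self.first_ts: Optional[float] = None

    def add(self, event: Dict[str, Any]) -> None:
        if not self.buffer:
            self.first_ts = self.clock()  # start the age timer on the first event
        self.buffer.append(event)
        if len(self.buffer) >= self.max_rows or self._expired():
            self.flush()

    def _expired(self) -> bool:
        return (self.first_ts is not None
                and self.clock() - self.first_ts >= self.max_wait_s)

    def poll(self) -> None:
        """Call on a timer so a quiet stream still flushes on time."""
        if self.buffer and self._expired():
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.flush_fn(self.buffer)  # one batch -> one insert -> one part
            self.buffer = []
            self.first_ts = None
```

The batcher flushes on whichever comes first, row count or age. A busy stream gets big batches, and a quiet one still lands within `max_wait_s`. The age check only runs when `add` or `poll` is called, so call `poll()` periodically. ClickHouse's server-side `async_insert` setting is another way to get similar buffering without writing it yourself.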

Your Three Integration Options (And What Goes Wrong)

ClickPipes - ClickHouse Cloud's managed connector. Costs money but actually works, which is fucking refreshing. The setup is dead simple: point it at your Kafka cluster, configure the schema mapping, done. We handle maybe 40-60k events/sec this way and it just works. The downside? Our AWS bill went from about 2 grand a month to over 7 grand real quick, since ClickPipes charges $0.30 per million events plus compute time. Your CFO won't be thrilled.

The ClickPipes documentation is actually decent, which is rare.

Kafka Connect - the open-source connector that promises to be free. Yeah, right. It's not free; you pay with your sanity and your weekends instead. Getting exactly-once semantics working took us three weeks and two production outages. But once it's running, it's actually pretty solid. We process maybe 25-35k events/sec through our Connect cluster running version 7.4.0. Avoid 7.2.x if you can: it has a nasty memory leak with large consumer groups.

Connect runs worker processes that grab messages from Kafka and shove them into ClickHouse. Simple concept, nightmare implementation.

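The connector has a habit of silently dropping messages when your schema changes. Always, ALWAYS monitor your consumer lag. A message that gets dropped but still committed won't show up as lag, though, so also reconcile row counts in ClickHouse against what was produced.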
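Consumer lag is just the log-end offset minus the committed offset, per partition. The sketch below computes it and flags trouble. It assumes you've already fetched both sets of offsets, for example from your Kafka client or from the CURRENT-OFFSET and LOG-END-OFFSET columns that `kafka-consumer-groups.sh --describe` prints. The function names and thresholds are illustrative.

```python
from typing import Dict, List, Tuple

TP = Tuple[str, int]  # (topic, partition)


def consumer_lag(end_offsets: Dict[TP, int], committed: Dict[TP, int]) -> Dict[TP, int]:
    """Per-partition lag: log-end offset minus the group's committed offset.

    Simplification: a partition with no committed offset is treated as if
    the group had committed offset 0, so its whole log counts as lag.
    """
    return {tp: max(end - committed.get(tp, 0), 0) for tp, end in end_offsets.items()}


def partitions_over(lag: Dict[TP, int], threshold: int) -> List[TP]:
    """Partitions whose lag exceeds `threshold` messages, sorted for stable alerts."""
    return sorted(tp for tp, n in lag.items() if n > threshold)


def growing_partitions(prev_lag: Dict[TP, int], curr_lag: Dict[TP, int]) -> List[TP]:
    """Partitions whose lag rose between two samples (a likely stalled consumer)."""
    # Partitions missing from the previous sample are not counted as growing.
    return sorted(tp for tp, n in curr_lag.items() if n > prev_lag.get(tp, n))
```

Lag that keeps climbing from one sample to the next is a better alert signal than a single spike. A stuck connector shows up as growth on every check.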

Kafka Table Engine - ClickHouse's native integration. Fastest option at like 5ms latency, but fragile as fuck. Works great until someone restarts the ClickHouse service and forgets to recreate the materialized views. Then your data just... stops flowing. No errors, no warnings, just silence. Pro tip: ClickHouse 23.8+ finally added proper error logging for this, but earlier versions will just fail silently and leave you wondering why your dashboards went flat.

We use this for low-volume streams where sub-10ms latency matters more than delivery guarantees. Anything mission-critical goes through Connect instead.
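A stalled Table Engine pipeline fails silently, so it's worth adding an external freshness check. Alert only when Kafka is still receiving messages but the target table has stopped growing. Otherwise a genuinely quiet, low-volume topic looks exactly like a broken one. The minimal Python sketch below takes two inputs: the newest ingest timestamp from the target table (e.g. a hypothetical `max(ingested_at)` column), and how many messages landed on the topic over the same window. The names and the 5-minute default are made up for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_silent_stall(last_row_at: Optional[datetime],
                    new_kafka_messages: int,
                    max_silence: timedelta = timedelta(minutes=5),
                    now: Optional[datetime] = None) -> bool:
    """True if Kafka kept receiving messages but the table stopped growing.

    last_row_at: newest ingest timestamp in the target table (None if empty);
        must be timezone-aware, since it's compared against an aware `now`.
    new_kafka_messages: messages produced to the topic during the same window,
        e.g. the change in log-end offsets since the last check.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    if new_kafka_messages == 0:
        return False  # quiet topic: nothing to ingest, so silence is expected
    if last_row_at is None:
        return True  # messages are arriving but the table has no rows at all
    return now - last_row_at > max_silence
```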

Performance Reality Check

Forget those "230k events/sec" benchmarks you see online. In production with real data, real schemas, and real network conditions, here's what you can actually expect:

- ClickPipes: Maybe 40-70k events/sec depending on message size

- Kafka Connect: 20-45k events/sec with proper tuning

- Kafka Table Engine: 50-120k events/sec when it's working

Your mileage will vary dramatically based on message size. Those benchmarks usually assume tiny JSON messages. Try streaming 2KB events and watch your throughput drop by something like 60-70%.
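Some back-of-the-envelope arithmetic shows why events/sec numbers mislead. This sketch converts events/sec into payload bytes/sec and back. The 200-byte "tiny JSON" size and the 65% drop are illustrative assumptions picked from the range above, not measurements.

```python
def throughput_mb_per_s(events_per_s: float, avg_message_bytes: int) -> float:
    """Payload megabytes/sec for a given event rate (1 MB = 10**6 bytes)."""
    return events_per_s * avg_message_bytes / 1_000_000


def events_per_s_at(budget_mb_per_s: float, avg_message_bytes: int) -> float:
    """Max event rate if some stage of the pipeline tops out at `budget_mb_per_s`."""
    return budget_mb_per_s * 1_000_000 / avg_message_bytes


tiny = throughput_mb_per_s(100_000, 200)   # "benchmark" traffic
real = throughput_mb_per_s(35_000, 2_048)  # 65% fewer events, 2KB each
print(f"{tiny:.2f} MB/s vs {real:.2f} MB/s")
```

With those numbers, 100k events/sec of 200-byte messages is 20 MB/s, while 35k events/sec of 2KB messages is about 72 MB/s. Events/sec fell by 65%, but you're moving over 3.5 times as many bytes. Going the other way, if some stage of your pipeline tops out at 20 MB/s, 2,000-byte messages cap you at 10k events/sec.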

Hardware matters. Message size matters more. Network conditions will fuck you over. Test with your actual data or prepare for surprises.

Security That Actually Works in Production

If you're dealing with sensitive data, Kafka Connect is your only real option. ClickPipes doesn't support field-level encryption (yet), and the Table Engine treats security as an afterthought.

We encrypt PII fields at the application level before sending to Kafka. It's extra work, but it saves you from compliance nightmares when someone inevitably dumps your analytics database to S3 without thinking.
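The text above describes encrypting PII before it hits Kafka. The minimal sketch below swaps in a different, stdlib-only technique: keyed hashing (HMAC-SHA256). That is one-way pseudonymization, not encryption. You can still GROUP BY or join on the hashed column in ClickHouse, but you can't recover the original value. If you need to decrypt later, use an authenticated cipher from a vetted crypto library instead. The field names and helper functions are hypothetical, and the key should come from your secrets manager, never from source code.

```python
import hashlib
import hmac
import json
from typing import Any, Dict, Iterable


def pseudonymize_fields(event: Dict[str, Any], pii_fields: Iterable[str],
                        key: bytes) -> Dict[str, Any]:
    """Copy `event`, replacing each listed PII field with an HMAC-SHA256 hex digest.

    Same key + same value gives the same digest, so the column stays
    groupable/joinable downstream, but the raw value never reaches Kafka.
    """
    out = dict(event)  # don't mutate the caller's event
    for field in pii_fields:
        value = out.get(field)
        if value is not None:  # leave missing/null fields alone
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            out[field] = digest.hexdigest()
    return out


def to_kafka_payload(event: Dict[str, Any], pii_fields: Iterable[str],
                     key: bytes) -> bytes:
    """Serialize the protected event as JSON bytes, ready to hand to a producer."""
    protected = pseudonymize_fields(event, pii_fields, key)
    return json.dumps(protected, sort_keys=True).encode("utf-8")
```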