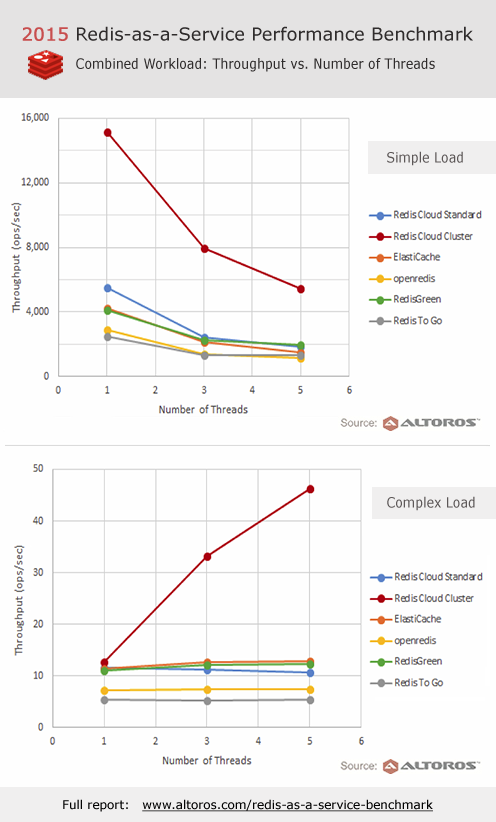

Redis created the modern in-memory database category, but performance demands have outgrown its single-threaded architecture. The licensing changes in 2024 accelerated innovation across multiple fronts, producing alternatives that challenge Redis's dominance with superior performance, better resource utilization, and architectural improvements designed for modern cloud workloads.

Valkey: The Community-Backed Fork

Valkey emerged when Redis decided to fuck around with licensing in 2024, and AWS said "fine, we'll do this ourselves." Hosted by the Linux Foundation and backed by AWS, Google Cloud, Oracle, Ericsson, and Snap Inc., this fork of Redis 7.2.4 keeps API compatibility with Redis 7.2 while fixing performance issues that Redis ignored for years.

Performance Characteristics:

- Multi-threading: Valkey 8 overhauled I/O threading so network reads and writes spread across cores; command execution still runs on one main thread, same as Redis

- Memory efficiency: 15-20% better memory utilization through improved data structure packing

- Throughput gains: Benchmarks show 2-3x throughput improvements on multi-core systems

- Latency consistency: Better P99 latency under high concurrency loads

The project benefits from enterprise backing and active development by former Redis core developers. Valkey represents the conservative choice for organizations seeking Redis's familiar API with proven performance improvements.

DragonflyDB: The Architectural Revolution

DragonflyDB said "fuck Redis's architecture limitations" and rebuilt everything from scratch. Built with modern C++, this thing actually delivers on the benchmark promises that make you roll your eyes - I've seen it handle 3 million ops/sec on a single c5.24xlarge instance where Redis barely pushes 120K.

Why This Actually Works:

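- Shared-nothing, thread-per-core design: Each thread owns its own slice of the keyspace inside one process, so there's no lock contention and no stupid cross-process hops like running a Redis Cluster on one box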

- Real multi-core utilization: Finally uses all 48 cores instead of Redis sitting on one

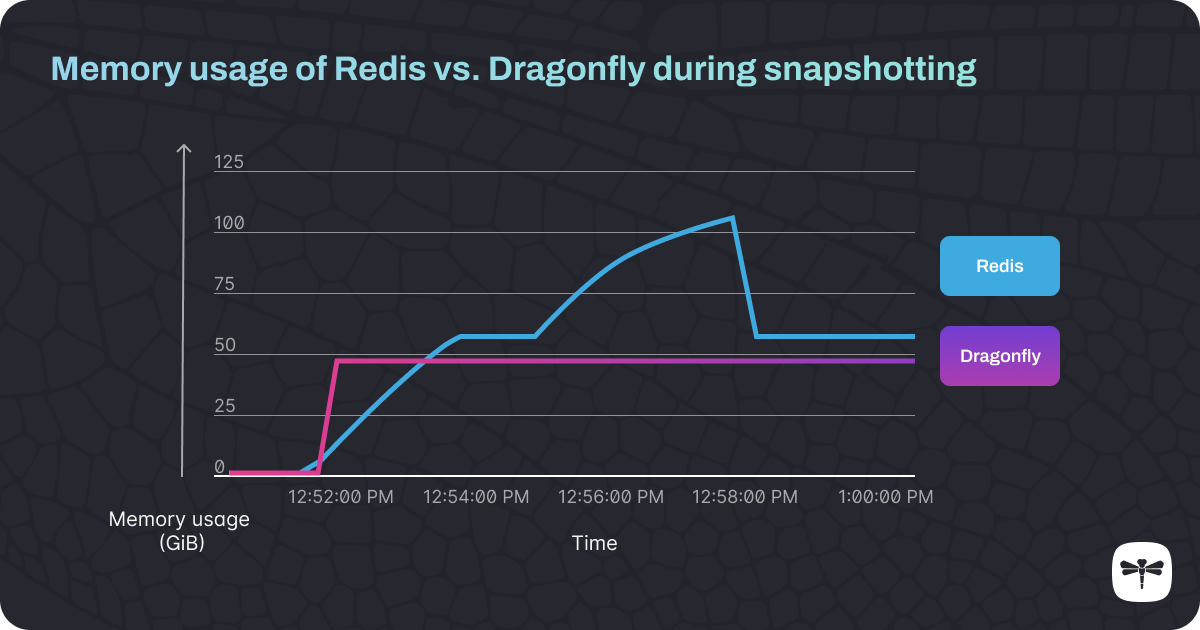

- Memory efficiency: Their snapshotting doesn't fork the entire process (looking at you, Redis RDB)

- 1TB single instance: No more clustering nightmares for workloads under 1TB

The Catch: It's newer, so expect some rough edges. I've hit edge cases with Lua scripts that work fine in Redis 6.2 but break in Dragonfly 1.15. But for pure cache workloads? This thing absolutely destroys Redis.

The independent benchmarks aren't marketing bullshit - one Rails app went from 200ms P99 to 45ms P99 just by swapping Redis for Dragonfly.

Microsoft Garnet: Enterprise-Grade Performance

Microsoft's Garnet is what happens when Microsoft Research looks at Redis and goes "we can do this better." Built on .NET 8 and Tsavorite, a storage engine forked from their FASTER key-value store, this thing actually runs faster on Linux than Windows (which surprised the hell out of everyone).

What Makes It Actually Good:

- Tsavorite storage engine: No more Redis RDB snapshots eating 50% of your memory during saves

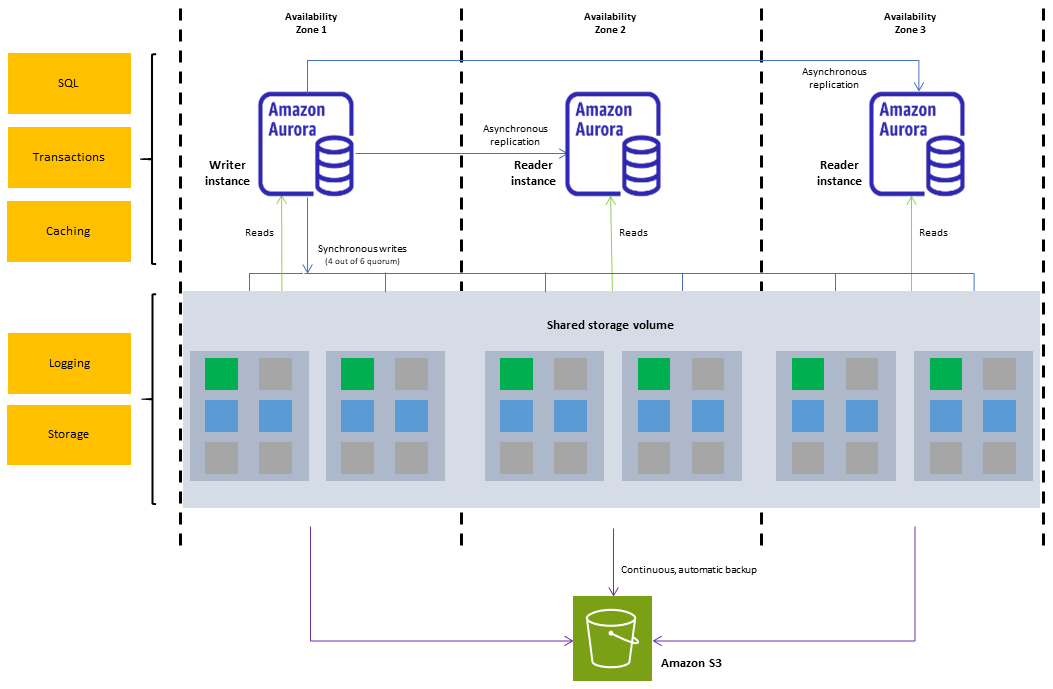

- Real tiered storage: Hot data in RAM, warm on NVMe, cold in S3 - automatic tier management that actually works

- C# extensibility: Write custom Redis commands in C# instead of debugging Lua scripts at 3am

- Windows performance: Finally, a cache that doesn't suck on Windows Server environments

Real Production Numbers:

Azure Resource Manager switched from Redis to Garnet and saw 40% better P99 latency with 60% less memory usage. The Microsoft Research benchmarks aren't just lab conditions - this is running their production workloads.

The Windows Angle: If you're stuck in a Windows shop, this is your only real option that doesn't involve cursing at Redis's Windows "support" every day.

KeyDB: The Multithreaded Evolution

KeyDB took the obvious approach: "Why the fuck is Redis still single-threaded in 2025?" Acquired by Snap Inc. in 2022, KeyDB just adds multithreading to Redis without breaking anything. It's the pragmatic choice when you need better performance but can't afford architectural experiments.

What You Actually Get:

- 4-6 worker threads: Configurable, but don't go crazy - diminishing returns after 6

- Same Redis bullshit, faster: All the Redis quirks you know, just with better CPU utilization

- Active replication: No more Sentinel complexity - built-in HA that actually works

- Real numbers: 800K ops/sec on a c5.4xlarge vs 120K with Redis 7.2

The Reality Check: KeyDB fixes Redis's biggest bottleneck without the operational risk of newer alternatives. I've migrated three production systems from Redis to KeyDB with zero downtime and immediately saw 3-4x throughput improvements.

Gotcha: Memory usage is slightly higher due to thread overhead, and you'll need to tune server-threads based on your core count. Start with 4 threads, monitor CPU utilization, adjust from there.
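Here's that "start with 4, monitor, adjust" loop as a rough Python sketch. The function names and the 80%/30% CPU thresholds are my own assumptions for illustration, not anything KeyDB ships; the only real setting involved is `server-threads`, and the cap of 6 comes from the diminishing-returns point above.

```python
def starting_threads(cores: int) -> int:
    """Initial server-threads guess: 4, or fewer on small boxes."""
    return max(1, min(4, cores))


def adjust_threads(current: int, cpu_util: float, cores: int, cap: int = 6) -> int:
    """Nudge the thread count by one based on observed worker CPU utilization.

    cpu_util is a 0.0-1.0 average across KeyDB worker threads.
    The 0.8 and 0.3 thresholds are assumed values; tune them to your workload.
    """
    if cpu_util > 0.8 and current < min(cap, cores):
        return current + 1  # workers are saturated, add a thread
    if cpu_util < 0.3 and current > 1:
        return current - 1  # workers are idle, give the cores back
    return current


# Example: 16-core box, workers running hot after the first deploy
threads = starting_threads(16)
threads = adjust_threads(threads, cpu_util=0.9, cores=16)
```

Change one thread at a time and re-measure; jumping straight to the cap hides whether the extra threads bought you anything.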

Traditional Alternatives Still Matter

Memcached: The Persistent Performer

Despite being older than dirt, Memcached is still the king of "just fucking cache this data." While everyone chases features, Memcached does one thing perfectly: key-value caching at stupid speeds.

Why It Still Wins:

- Predictable performance: 200K+ ops/sec with P99 latency under 0.1ms - every single time

- Memory efficiency: 5% overhead vs Redis's 20-50% depending on your data types

- Zero surprises: No random AOF corruption, no cluster split-brain, no OOM kills during snapshots

- Battle-tested: Facebook, Twitter, Wikipedia - if it was good enough for their scale, it's good enough for yours

The Use Cases Where It Destroys Everything Else:

- Session storage (just a blob, who cares about Redis data types?)

- API response caching (store the JSON, retrieve the JSON, done)

- Page fragment caching (HTML chunks don't need Redis pipelines)

Memcached is boring technology that works. Sometimes boring beats shiny.
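The "store the JSON, retrieve the JSON" case is plain cache-aside. A minimal sketch, assuming a client object with `get(key)` and `set(key, value, expire=...)` methods; `DictCache` and `cached_json` are hypothetical names, and `DictCache` is an in-process stand-in so the example runs without a server (it ignores expiry entirely).

```python
import json


class DictCache:
    """In-process stand-in for a Memcached client (demo only, no expiry)."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value, expire=0):
        self._data[key] = value


def cached_json(cache, key, loader, ttl=60):
    """Return the cached JSON value for key, calling loader() only on a miss."""
    raw = cache.get(key)
    if raw is not None:
        return json.loads(raw)  # hit: decode the stored blob
    value = loader()  # miss: do the expensive work once
    cache.set(key, json.dumps(value).encode("utf-8"), expire=ttl)
    return value


# Example: the second call is served from the cache
cache = DictCache()
calls = []

def load_user():
    calls.append(1)
    return {"id": 42, "name": "ada"}

first = cached_json(cache, "user:42", load_user)
second = cached_json(cache, "user:42", load_user)
```

Nothing here needs data types, scripting, or persistence, which is exactly why a blob cache is enough.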

Hazelcast: The Distributed Computing Platform

Hazelcast transcends simple caching to provide a distributed computing platform. While heavier than pure cache-stores, it solves problems that Redis cannot address.

Enterprise Capabilities:

- Native clustering: Automatic partitioning, replication, and fault tolerance

- Distributed processing: Stream processing and distributed computing capabilities

- Java ecosystem: Deep integration with Java applications and frameworks

- Enterprise features: WAN replication, security, management tools

Hazelcast makes sense for applications requiring distributed computing capabilities beyond simple data storage. The Community Edition limitations (2 cluster members) push serious users toward commercial licensing.

Emerging Alternatives Worth Watching

DiceDB: Real-Time Database Innovation

DiceDB introduces real-time reactivity to the Redis-compatible space, allowing SQL queries and reactive updates. While still early-stage, it represents an interesting evolution of the in-memory database concept.

Garnet: Microsoft's Growing Ecosystem

Beyond performance, Garnet's .NET foundation opens possibilities for rich integrations with Microsoft's ecosystem. The project demonstrates how enterprise database expertise translates to the cache-store domain.

Performance Reality Check

Every vendor claims "10x faster than Redis" but here's what actually matters in production:

Connection Pooling Matters More Than Raw Speed:

- DragonflyDB handles 10K concurrent connections per node vs Redis's 2K before performance degrades

- Valkey improved Redis's connection handling but still struggles around 5K active connections

- Memcached handles 50K connections without breaking a sweat while maintaining sub-millisecond responses
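Those connection ceilings are why you cap connections on the client side instead of opening one per request. A minimal pool sketch, assuming you pass in your own `factory` that opens a connection; `ConnectionPool` here is a hypothetical illustration, not the pool from any real client library, and it skips health checks and reconnects.

```python
import queue
import threading
from contextlib import contextmanager


class ConnectionPool:
    """Caps concurrent connections and reuses idle ones."""

    def __init__(self, factory, max_size=10):
        self._factory = factory  # callable that opens a new connection
        self._idle = queue.LifoQueue()  # most recently used connection first
        self._slots = threading.BoundedSemaphore(max_size)

    @contextmanager
    def connection(self, timeout=5.0):
        # Wait for a free slot instead of opening connection number max_size + 1
        if not self._slots.acquire(timeout=timeout):
            raise TimeoutError("no free connection in pool")
        try:
            try:
                conn = self._idle.get_nowait()  # reuse an idle connection
            except queue.Empty:
                conn = self._factory()  # or open a new one lazily
            try:
                yield conn
            finally:
                self._idle.put(conn)  # hand it back for the next caller
        finally:
            self._slots.release()
```

Usage is `with pool.connection() as conn: ...`. Size `max_size` so that (app instances x pool size) stays well under whatever connection count your server starts degrading at.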

Memory Pressure Will Fuck You:

- Redis RDB snapshots fork the process, and copy-on-write can push memory toward 2x during saves under heavy writes - plan for it

- DragonflyDB's snapshots don't fork, so no memory doubling

- KeyDB still has Redis's snapshot memory issues

- Garnet's tiered storage actually works unlike Redis's half-assed module attempts
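"Plan for it" boils down to simple arithmetic. A back-of-the-envelope sketch: `max_dataset_gib` is a hypothetical helper, `cow_fraction=1.0` models the worst-case doubling during a forked snapshot, `cow_fraction=0.0` models a server that doesn't fork, and the 4 GiB OS reserve is an assumed number.

```python
def max_dataset_gib(total_ram_gib: float, cow_fraction: float = 1.0,
                    os_reserve_gib: float = 4.0) -> float:
    """Largest dataset that still fits while a snapshot is running.

    Solves: dataset * (1 + cow_fraction) + os_reserve <= total_ram
    cow_fraction is the share of the dataset duplicated during the save
    (1.0 = every page rewritten mid-snapshot, 0.0 = no fork overhead).
    """
    usable = total_ram_gib - os_reserve_gib
    return usable / (1.0 + cow_fraction)


# 64 GiB box: worst case with a forking snapshot vs a non-forking one
forking = max_dataset_gib(64, cow_fraction=1.0)      # 30.0
non_forking = max_dataset_gib(64, cow_fraction=0.0)  # 60.0
```

Real copy-on-write overhead depends on your write rate during the save, so measure it; the worst case is just the number that keeps the OOM killer away.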

Network Saturation Before CPU:

- Most "slow Redis" problems are actually network bottlenecks

- Pipeline your requests - single SET/GET calls are performance suicide

- Redis protocol (RESP) overhead starts to matter at 100K+ ops/sec; at that point, look at binary protocols
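To see why pipelining matters, here's a sketch that frames commands in RESP (an array of bulk strings) and packs a whole batch into one buffer you could write to the socket in a single send. `encode_command`, `encode_pipeline`, and `network_wait_ms` are hypothetical helpers, and the 0.5 ms round trip is an assumed figure.

```python
import math


def encode_command(*args) -> bytes:
    """Frame one command as a RESP array of bulk strings."""
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg if isinstance(arg, bytes) else str(arg).encode("utf-8")
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


def encode_pipeline(commands) -> bytes:
    """Concatenate many framed commands so they go out in one write."""
    return b"".join(encode_command(*cmd) for cmd in commands)


def network_wait_ms(n_commands: int, rtt_ms: float, batch_size: int) -> float:
    """Time spent waiting on round trips alone, ignoring server work."""
    return math.ceil(n_commands / batch_size) * rtt_ms


# 1000 SETs over an assumed 0.5 ms round trip
payload = encode_pipeline(("SET", f"key:{i}", i) for i in range(1000))
one_by_one = network_wait_ms(1000, rtt_ms=0.5, batch_size=1)    # 500.0 ms
pipelined = network_wait_ms(1000, rtt_ms=0.5, batch_size=100)   # 5.0 ms
```

In practice your client library's pipeline feature does the framing for you; the point is that the round-trip count, not the server, dominates the one-at-a-time case.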

The Truth: Your application architecture matters more than database choice. Fix your N+1 query patterns, implement proper connection pooling, and batch your operations. Then pick the database that doesn't get in your way.

The comparison table below cuts through vendor marketing to show you what actually matters for production deployments. Focus on the metrics that align with your current pain points - whether that's connection scaling, memory pressure, or operational complexity.