You know what sucks? Waiting 10 minutes for CI/CD to tell you that you forgot a comma in your YAML. Or having your shared dev cluster randomly crash during a demo because someone deployed a memory-leaking pod. Local Kubernetes development isn't just nice-to-have anymore - it's the difference between productive development and wanting to throw your laptop out the window.

Reality Check: Local k8s in 2025

Docker Desktop's Kubernetes integration still uses ridiculous amounts of memory and randomly stops working. Minikube will turn your laptop into a space heater. But if you know which tools don't suck, you can actually get a functional cluster running without maxing out your system resources.

The biggest game-changer? Tools like kind that start clusters in about 30 seconds instead of the 5-minute VM boot times that made everyone give up. k3s can run in as little as 512MB of RAM, which is a fucking miracle compared to the 4GB monsters we used to deal with.

Meanwhile, MicroK8s gives you a full Kubernetes installation that doesn't require Docker Desktop. k3d wraps k3s in containers for even faster iteration. And Rancher Desktop offers a Docker Desktop alternative that doesn't randomly break your setup.

Why Local Development Beats Shared Clusters

You Stop Waiting for Everything

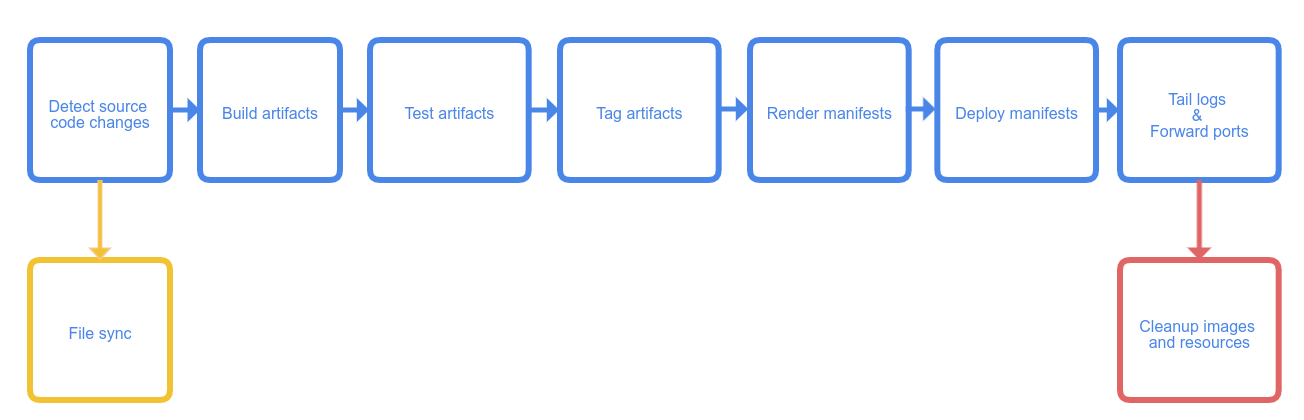

Traditional workflow: Write code → git push → wait for CI → deploy to shared cluster → something breaks → debug → repeat. Total time wasted per fix: 15-20 minutes.

Local workflow: Write code → see it running locally in under a minute → fix issues immediately. When it works locally, it'll work in production (most of the time).

Stop waiting for CI/CD pipelines that take forever. Stop debugging networking issues that only happen in shared environments. Get your feedback loop down to seconds, not minutes.

Your AWS Bill Won't Kill You

Those shared dev clusters are expensive as hell. Our 5-person team hit $1,200 on EKS last month, and half the time the cluster was just sitting there doing nothing because Jenkins takes 20 minutes to deploy anything. GKE and AKS bills add up just as fast when you're running multiple environments. Local development eliminates the "oh shit we left the cluster running all weekend" moments that tank your startup's budget.

Works When Everything Else Is Down

Local clusters work when your internet is shit, AWS is having another outage, or you're debugging on a plane. Can't count how many times the "cloud-first" strategy meant sitting around doing nothing because some service was down. GitHub status pages are bookmarked for a reason.

I once spent a weekend debugging why our 'highly available' shared dev cluster kept crashing during demos. Turned out someone deployed a memory leak that only triggered under specific conditions. The cluster would fail spectacularly whenever we tried to show the CEO our progress. Now everything runs locally first - if it crashes on my laptop, it's not going anywhere near production.

Catch the Real Issues Early

Production networking is weird. Storage behaves differently. Resource limits bite you in unexpected ways. Local clusters let you find these issues when you're not under pressure to fix production at 2am. Test your resource limits, ingress configs, and storage patterns before they become production incidents.

System Requirements (What You Actually Need)

Hardware Reality Check

- 8GB RAM: You can run a basic cluster, but Docker will compete with your browser for memory. Expect swapping and thermal throttling.

- 16GB RAM: Sweet spot for local development. Enough for a cluster plus your usual 47 Chrome tabs.

- 32GB RAM: You can run multiple clusters simultaneously without your laptop sounding like a jet engine.

CPU cores matter more than you think: 4 cores minimum or your cluster will take forever to start. 8 cores if you want hot reloading that doesn't make you wait.

SSD is mandatory: Don't even try this with a spinning disk. You'll wait 10 minutes for pods to start and hate your life. Image pulls, container startup, and etcd all hit the disk hard, so storage speed makes a dramatic difference.
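If you'd rather check your machine against these tiers than guess, here is a minimal Python sketch. The thresholds come straight from the list above; the function names, the labels, and the 10% tolerance (operating systems usually report a bit less than the nominal RAM size) are assumptions made for illustration. RAM detection relies on `os.sysconf`, which is not available on Windows, so it falls back to `None` there.

```python
import os
from typing import Optional

# Operating systems report slightly less than the nominal RAM size,
# so a "16GB" laptop may show up as ~15.5. This tolerance is an assumption.
TOLERANCE = 0.9


def classify_ram(ram_gb: float) -> str:
    """Map installed RAM (in GB) to the tiers from the list above."""
    if ram_gb >= 32 * TOLERANCE:
        return "multiple clusters at once"
    if ram_gb >= 16 * TOLERANCE:
        return "sweet spot for local development"
    if ram_gb >= 8 * TOLERANCE:
        return "basic cluster, expect swapping"
    return "below the 8GB floor"


def classify_cpu(cores: int) -> str:
    """Map core count to the CPU guidance above."""
    if cores >= 8:
        return "comfortable for hot reloading"
    if cores >= 4:
        return "meets the 4-core minimum"
    return "below the 4-core minimum"


def detect_ram_gb() -> Optional[float]:
    """Best-effort physical RAM in GB; None where sysconf is unavailable."""
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None
    return pages * page_size / 1024**3


if __name__ == "__main__":
    ram = detect_ram_gb()
    cores = os.cpu_count()
    if ram is not None:
        print(f"RAM: {ram:.1f} GB -> {classify_ram(ram)}")
    else:
        print("RAM: could not detect on this platform")
    if cores is not None:
        print(f"CPU: {cores} cores -> {classify_cpu(cores)}")
```

It won't tell you whether you have an SSD, but it will tell you fast whether you're in swapping territory before you install anything.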

Software Prerequisites (And What Will Break)

- Docker Desktop (or another Docker-compatible runtime): Required for most tools. Will randomly update and break your setup. A paid Docker Desktop subscription runs about $7/month per user if you're not a tiny company.

- kubectl: Keep it within one minor version of your cluster or weird shit will break. Version skew between kubectl and the cluster will ruin your day.

- VPN software: Will conflict with cluster networking. Corporate VPNs especially love to fuck with Docker networking.
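The kubectl skew problem is easy to check mechanically. Kubernetes supports kubectl within one minor version (older or newer) of the API server, and the sketch below tests exactly that. The function names and the example version strings are hypothetical; in practice you would likely feed in the `gitVersion` values that `kubectl version -o json` reports for the client and the server.

```python
import re
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from a string like 'v1.29.3' or 'v1.30.2+k3s1'."""
    match = re.match(r"v?(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def skew_ok(client: str, server: str, max_skew: int = 1) -> bool:
    """True if kubectl (client) is within max_skew minor versions of the server."""
    client_major, client_minor = parse_version(client)
    server_major, server_minor = parse_version(server)
    if client_major != server_major:
        return False
    return abs(client_minor - server_minor) <= max_skew


if __name__ == "__main__":
    # Hypothetical versions, for illustration only.
    print(skew_ok("v1.29.3", "v1.30.1"))
    print(skew_ok("v1.27.0", "v1.30.1"))
```

Drop something like this into a pre-flight script and you find out about the mismatch before the confusing errors start.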

Network Reality

Local clusters create their own virtual networks and bind ports on your host, and those will conflict with:

- Corporate VPNs (guaranteed networking hell)

- Existing Docker networks

- Ports 6443 and 8080 (something is always using these)

- Your company's overly restrictive firewall

If you're on corporate WiFi, prepare for random networking failures that make no sense.
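Port conflicts at least are cheap to rule out before you create a cluster. A rough sketch, assuming you only care about TCP listeners on the IPv4 loopback address: try to connect to each port and see whether anything answers. The helper names are made up for this example, and the port list is just the two ports called out above.

```python
import socket
from typing import Iterable, List

# The two ports called out in the list above.
CLUSTER_PORTS = (6443, 8080)


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(0.5)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


def find_conflicts(ports: Iterable[int] = CLUSTER_PORTS) -> List[int]:
    """Return the subset of ports that already have a listener."""
    return [port for port in ports if port_in_use(port)]


if __name__ == "__main__":
    busy = find_conflicts()
    if busy:
        print("Already in use:", ", ".join(str(p) for p in busy))
    else:
        print("Ports 6443 and 8080 look free on 127.0.0.1")
```

This won't catch VPN routing weirdness or a service bound to a different interface, but it does answer the "what is already sitting on 8080" question in a second.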

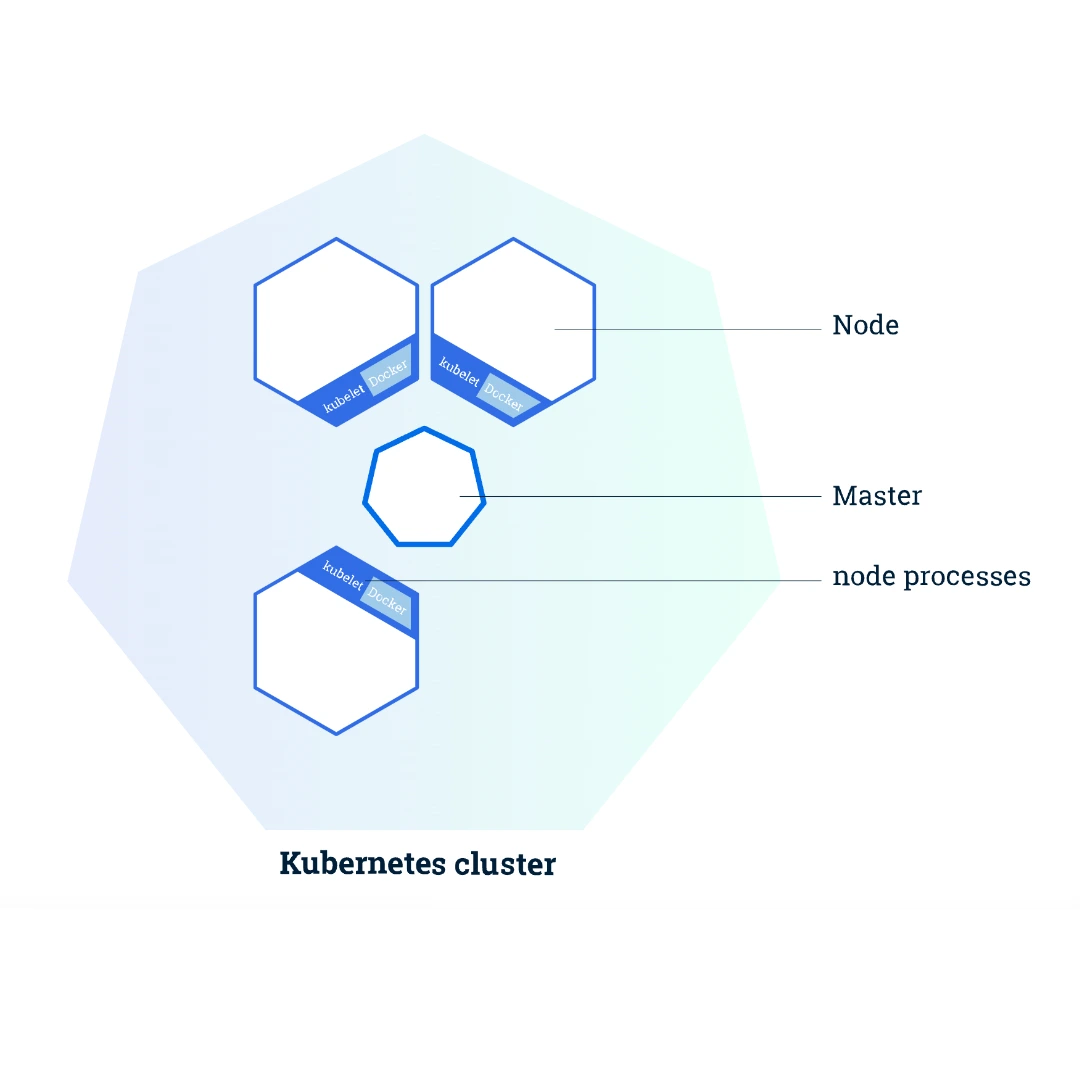

Architecture Overview: How Local Kubernetes Actually Works

Local Kubernetes environments use three main approaches:

VM-Based Solutions (Minikube)

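Creates a virtual machine running Linux with a complete Kubernetes installation (Minikube can also use a container driver such as Docker, but the VM drivers are the classic setup). Provides the most production-like environment but uses more system resources.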

Container-Based Solutions (kind, k3d)

Runs Kubernetes nodes as containers on your host Docker daemon. Faster startup times and lower resource usage, but with some networking limitations.

Native Installations (k3s, MicroK8s)

Installs Kubernetes directly on your host system, which in practice means Linux. Highest performance but more complex to manage and clean up.
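To keep the three approaches straight, here is the same breakdown as a small Python lookup table. It only restates what is described above; the `Approach` class, its field names, and the `approach_for` helper are illustrative and not part of any tool.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Approach:
    name: str
    tools: Tuple[str, ...]
    runs_in: str
    strength: str
    cost: str


# One entry per approach, summarizing the descriptions above.
APPROACHES = (
    Approach(
        name="VM-based",
        tools=("minikube",),
        runs_in="a Linux virtual machine",
        strength="most production-like environment",
        cost="uses more system resources",
    ),
    Approach(
        name="container-based",
        tools=("kind", "k3d"),
        runs_in="containers on the host Docker daemon",
        strength="faster startup, lower resource usage",
        cost="some networking limitations",
    ),
    Approach(
        name="native",
        tools=("k3s", "microk8s"),
        runs_in="the host system directly",
        strength="highest performance",
        cost="more complex to manage and clean up",
    ),
)


def approach_for(tool: str) -> Approach:
    """Look up which approach a tool belongs to (case-insensitive)."""
    key = tool.lower()
    for approach in APPROACHES:
        if key in approach.tools:
            return approach
    raise KeyError(f"unknown tool: {tool}")


if __name__ == "__main__":
    for tool in ("minikube", "kind", "k3d", "k3s", "MicroK8s"):
        a = approach_for(tool)
        print(f"{tool}: {a.name}, runs in {a.runs_in}; "
              f"{a.strength}, but {a.cost}")
```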

The choice between these approaches affects startup time, resource usage, networking capabilities, and how closely the environment matches production, so pick based on your development needs and system constraints. We'll explore each option in detail with specific setup instructions and optimization tips.