Consumer lag is that moment when you realize your streaming platform is actually broken as hell.

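The worst part? The symptoms lie to you. I spent 6 hours one night chasing `org.apache.kafka.clients.consumer.CommitFailedException: Commit cannot be completed` errors that turned out to be database connection pool exhaustion. The real problem was three layers deep from what Kafka was telling me. That exception typically means the consumer went longer than max.poll.interval.ms between poll() calls, so the group rebalanced and handed its partitions to another member before the commit landed.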
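Here's the arithmetic that bites. The Java consumer hands you up to max.poll.records records per poll() (default 500) and expects the next poll() within max.poll.interval.ms (default 300,000 ms, i.e. 5 minutes). If each record suddenly takes longer because threads are stuck waiting on a connection pool, a full batch can blow through that budget. A back-of-the-envelope sketch, not a client API: the function name is hypothetical and it assumes every record in the batch costs the same time.

```python
# Hypothetical helper: does one full batch from poll() outlast the poll budget?
# Defaults mirror Kafka's documented consumer defaults:
#   max.poll.records = 500, max.poll.interval.ms = 300000
def poll_budget_exceeded(per_record_ms: float,
                         max_poll_records: int = 500,
                         max_poll_interval_ms: int = 300_000) -> bool:
    """Return True if processing a full batch takes longer than the budget."""
    worst_case_batch_ms = per_record_ms * max_poll_records
    return worst_case_batch_ms > max_poll_interval_ms


# Healthy: 500 * 50 ms = 25,000 ms, well inside 300,000 ms.
print(poll_budget_exceeded(50))    # False
# Threads stuck waiting on the DB pool: 500 * 700 ms = 350,000 ms.
print(poll_budget_exceeded(700))   # True
```

Lowering max.poll.records or raising max.poll.interval.ms buys time, but neither fixes the slow dependency underneath.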

What Is Consumer Lag (Skip the Theory)

Consumer lag = how far behind your consumer is. For each partition, it's the difference between the log-end offset (where producers have written up to) and the group's committed offset (where your consumer will pick up next). If producers are at offset 50,000 and your consumer's committed offset is 47,000, you have 3,000 messages of lag on that partition.
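Lag is tracked per partition, so the number you usually watch for a group is the sum across all its partitions. In Kafka's convention the committed offset is the *next* offset the consumer will read, so the math is just a subtraction. A minimal sketch with made-up offsets (partition 0 reuses the numbers above):

```python
# Hypothetical offsets keyed by partition number; real numbers would come
# from the broker (log-end offsets) and the consumer group (committed offsets).
def partition_lag(log_end_offsets: dict[int, int],
                  committed_offsets: dict[int, int]) -> dict[int, int]:
    """Lag per partition = log-end offset - committed offset."""
    return {p: end - committed_offsets[p] for p, end in log_end_offsets.items()}


def total_lag(log_end_offsets: dict[int, int],
              committed_offsets: dict[int, int]) -> int:
    """Total lag for the group = sum of per-partition lag."""
    return sum(partition_lag(log_end_offsets, committed_offsets).values())


ends = {0: 50_000, 1: 12_000, 2: 8_400}
committed = {0: 47_000, 1: 12_000, 2: 8_100}
print(partition_lag(ends, committed))  # {0: 3000, 1: 0, 2: 300}
print(total_lag(ends, committed))      # 3300
```

The same per-partition numbers show up in the LAG column of `kafka-consumer-groups.sh --bootstrap-server <broker> --describe --group <group>`, which is usually the first thing worth checking.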

Anything over a few hundred messages usually means shit's going sideways.
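"A few hundred messages" is a rule of thumb. What usually separates a blip from an incident is whether lag keeps climbing, so one option is to alert on level *and* trend together. A minimal sketch of a hypothetical alert rule; the threshold of 500 is an illustrative assumption you'd tune to your own throughput:

```python
# Hypothetical alert rule: fire only when the latest lag sample is above a
# threshold AND lag has grown between every pair of consecutive samples.
def lag_alert(samples: list[int], threshold: int = 500) -> bool:
    if len(samples) < 2:
        return False  # not enough history to see a trend
    latest = samples[-1]
    growing = all(b > a for a, b in zip(samples, samples[1:]))
    return latest > threshold and growing


print(lag_alert([200, 180, 210, 190]))  # False: hovering, below threshold
print(lag_alert([900, 700, 600, 520]))  # False: high but draining
print(lag_alert([300, 450, 620, 810]))  # True: high and climbing
```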

Why Lag Happens (The Real Reasons)

Your Code Is Slow: 90% of the time it's your consumer logic being garbage. I've seen 50ms processing time turn into 2+ seconds because someone added a synchronous HTTP call to validate every message. Don't do that.
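The arithmetic behind that example is brutal. A consumer thread that handles records one at a time can never do better than 1000 / processing_ms records per second. A quick sketch, assuming a steady average processing time per record:

```python
# Upper bound on throughput for one thread processing records sequentially.
# processing_ms is the assumed average per-record processing time.
def max_records_per_second(processing_ms: float) -> float:
    return 1000.0 / processing_ms


print(max_records_per_second(50))    # 20.0
print(max_records_per_second(2000))  # 0.5
```

Going from 50 ms to 2 s per message is a 40x throughput drop. A partition receiving 10 messages per second that used to have plenty of headroom now falls behind by 9.5 messages every second.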

Producers Going Nuts: Black Friday hits and suddenly your producers are vomiting 10x normal traffic while your consumers are still configured for Tuesday afternoon load. Your consumers can't keep up.
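When traffic spikes, the first question is whether you even have room to scale out. Within one consumer group each partition is consumed by at most one member, so consumers beyond the partition count sit idle. A rough capacity sketch, assuming load spreads evenly across partitions and every consumer sustains the same rate (all numbers are made up):

```python
import math


# Hypothetical capacity check: how many consumers would the incoming rate
# need, and does the topic have enough partitions to let them all work?
def consumers_needed(incoming_per_sec: float,
                     per_consumer_per_sec: float,
                     partitions: int) -> tuple[int, bool]:
    """Return (useful consumers to run, whether partitions are sufficient)."""
    needed = math.ceil(incoming_per_sec / per_consumer_per_sec)
    return min(needed, partitions), needed <= partitions


# Normal day: 1,000 msg/s, each consumer handles 200 msg/s, 12 partitions.
print(consumers_needed(1_000, 200, 12))   # (5, True)
# 10x spike: 10,000 msg/s needs 50 consumers, but only 12 can do work.
print(consumers_needed(10_000, 200, 12))  # (12, False)
```

The second case is the trap: the partition count you picked for normal load caps how far you can scale during the spike.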

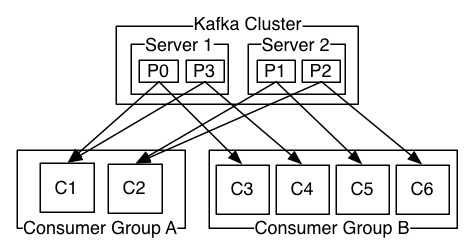

Partition Assignment Problems: One consumer gets all the hot partitions while others sit idle. I've seen one partition drowning while others barely had any lag. That's messed up partition distribution.
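Skewed assignment is easy to spot once you roll partition lag up per consumer. A minimal sketch with hypothetical assignment and lag numbers; the worst-to-average ratio is just one way to quantify skew, not a Kafka metric:

```python
from collections import defaultdict


# Hypothetical inputs: which consumer owns each partition, and each
# partition's current lag. Real values would come from your lag tooling.
def lag_per_consumer(assignment: dict[int, str],
                     lag_by_partition: dict[int, int]) -> dict[str, int]:
    totals: dict[str, int] = defaultdict(int)
    for partition, consumer in assignment.items():
        totals[consumer] += lag_by_partition[partition]
    return dict(totals)


def skew_ratio(totals: dict[str, int]) -> float:
    """Worst consumer's lag divided by the average; 1.0 means perfectly even."""
    mean = sum(totals.values()) / len(totals)
    return max(totals.values()) / mean if mean else 1.0


assignment = {0: "c1", 1: "c1", 2: "c2", 3: "c3"}
lag = {0: 3_000, 1: 2_700, 2: 200, 3: 100}
totals = lag_per_consumer(assignment, lag)
print(totals)              # {'c1': 5700, 'c2': 200, 'c3': 100}
print(skew_ratio(totals))  # 2.85
```

The ratio tops out at the number of consumers (3 here) when one member carries all the backlog, so 2.85 says c1 is drowning while the others are nearly idle.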

Infrastructure Issues:

- JVM garbage collection decides to pause for 30 seconds

- Network hiccups make consumers think brokers died

- Some genius put Kafka on spinning disks

- Kubernetes kills your pod right as it's catching up
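The GC case is worth a closer look. The Java consumer sends heartbeats from a background thread, but a stop-the-world pause freezes that thread too. If the pause outlasts session.timeout.ms, the coordinator drops the member and rebalances the group. The default went from 10 s to 45 s in Kafka 3.0, so the same 30-second pause behaves differently depending on your version and config. A rough sketch (the check ignores heartbeat-interval slack, and the function name is hypothetical):

```python
# Consumer session.timeout.ms defaults.
SESSION_TIMEOUT_MS_LEGACY = 10_000   # default before Kafka 3.0
SESSION_TIMEOUT_MS_KAFKA_3 = 45_000  # default since Kafka 3.0


# Hypothetical check: a pause longer than the session timeout means no
# heartbeats reach the coordinator in time, so the member is removed.
def pause_triggers_rebalance(pause_ms: int, session_timeout_ms: int) -> bool:
    return pause_ms > session_timeout_ms


print(pause_triggers_rebalance(30_000, SESSION_TIMEOUT_MS_LEGACY))   # True
print(pause_triggers_rebalance(30_000, SESSION_TIMEOUT_MS_KAFKA_3))  # False
```

The same timeout explains the Kubernetes case: a pod killed without calling close() on its consumer doesn't leave the group cleanly, so its partitions sit unconsumed until the session times out.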

When Lag Becomes a Problem

Had a client where even a couple seconds of lag meant fraudulent transactions slipped through. The costs get brutal fast when fraud detection can't keep up.
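For latency-sensitive work like fraud detection, message counts are the wrong unit; what matters is how old the data is and how long it takes to catch up. A rough conversion, assuming steady produce and consume rates (the rates below are invented):

```python
# Approximate age of the oldest unconsumed message: with producers writing
# at a steady rate, `lag` messages span lag / produce_rate seconds.
def time_lag_seconds(lag_messages: int, produce_rate_per_sec: float) -> float:
    if produce_rate_per_sec <= 0:
        raise ValueError("need a positive produce rate to estimate age")
    return lag_messages / produce_rate_per_sec


# Time to drain the backlog while new messages keep arriving: only the
# headroom (consume rate minus produce rate) eats into the backlog.
def seconds_to_drain(lag_messages: int,
                     produce_rate_per_sec: float,
                     consume_rate_per_sec: float) -> float:
    headroom = consume_rate_per_sec - produce_rate_per_sec
    if headroom <= 0:
        return float("inf")  # never catches up at these rates
    return lag_messages / headroom


print(time_lag_seconds(3_000, 1_500))         # 2.0
print(seconds_to_drain(3_000, 1_500, 2_000))  # 6.0
```

Note the second number: even with a third more consumer capacity than incoming traffic, a 2-second backlog takes 6 seconds to clear.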

Lag creates vicious cycles. Messages pile up faster than you can process them. Your consumers get overwhelmed, process even slower, and lag gets worse. I've watched this spiral take down entire platforms.
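The shape of that spiral is easy to see in a toy model. This is purely illustrative: it assumes the consumer loses a fixed fraction of its throughput for every 1,000 messages of lag (the slowdown factor is made up), then steps the backlog forward one second at a time:

```python
# Toy model of the feedback loop. slowdown_per_1k_lag is a hypothetical
# fraction of throughput lost per 1,000 messages of lag.
def simulate_lag(incoming_per_sec: float, base_rate_per_sec: float,
                 slowdown_per_1k_lag: float, seconds: int) -> list[int]:
    lag = 0.0
    history = []
    for _ in range(seconds):
        # Effective consume rate shrinks as the backlog grows (never below 0).
        rate = base_rate_per_sec * max(0.0, 1 - slowdown_per_1k_lag * lag / 1_000)
        lag = max(0.0, lag + incoming_per_sec - rate)
        history.append(round(lag))
    return history


# Without the slowdown, lag grows linearly by 100 msg/s.
print(simulate_lag(1_100, 1_000, 0.0, 4))   # [100, 200, 300, 400]
# With it, every second adds more lag than the one before.
print(simulate_lag(1_100, 1_000, 0.05, 4))  # [100, 205, 315, 431]
```

The numbers are small, but the per-second increments (100, 105, 110, 116) keep growing, which is exactly the "process even slower, lag gets worse" loop.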

KRaft (early access in Kafka 2.8, production-ready in 3.3) got rid of ZooKeeper and made cluster metadata a lot saner, but if your consumer code sucks, you're still screwed. I've seen teams upgrade from Kafka 2.4 to 3.6 expecting miracles, only to discover their 5-second database calls were still killing performance. No amount of Kafka improvements will fix slow database calls or blocking I/O.