This space changes constantly and it's annoying as fuck. New embedding models come out every few months, pricing changes, features get deprecated. Here's how to build stuff that doesn't require complete rewrites every 6 months.

Preparing for Model Evolution (Because It Never Stops)

Embedding Model Migrations Without Disasters

New embedding models come out all the time and everyone acts like you need to upgrade immediately. Your architecture should handle this without breaking everything.

Version-isolated architecture (learned this the hard way):

    class EmbeddingVersionManager:
        def __init__(self):
            self.models = {
                "ada002": "text-embedding-ada-002",    # Legacy, retiring Q1 2026
                "3large": "text-embedding-3-large",    # Current production
                "voyage2": "voyage-large-2-instruct",  # Testing phase
                "bge": "BAAI/bge-large-en-v1.5",       # Open source backup
            }

        def get_namespace(self, tenant_id, feature, model_version="3large"):
            return f"tenant:{tenant_id}:{feature}:model_{model_version}"

Migration process that doesn't break things:

- Pre-populate new namespace with re-embedded content (expensive but necessary)

- A/B test with 5% traffic for 2 weeks minimum

- Monitor quality metrics - user engagement, click-through rates, complaints

- Gradual rollout - 5% → 25% → 50% → 100% over 4-6 weeks

- Keep old namespace live until you're 100% confident (learned this from painful rollbacks)

Reality check: Re-embedding your entire corpus costs serious money. I spent like 2.5x our normal OpenAI bill one month doing a migration. Budget for that shit or you'll get a nasty surprise.
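
To put a number on it before you start, a back-of-the-envelope estimate is usually enough. The sketch below is plain arithmetic; the corpus size, average token count, and per-million-token price are made-up placeholders, so swap in your own numbers and your provider's current price sheet.

```python
def estimate_reembedding_cost(num_docs, avg_tokens_per_doc, price_per_million_tokens):
    """Return the estimated cost of re-embedding a corpus, in the price's currency."""
    total_tokens = num_docs * avg_tokens_per_doc
    return total_tokens / 1_000_000 * price_per_million_tokens


# Hypothetical corpus: 2M documents averaging 800 tokens each,
# at a placeholder price of 0.13 per 1M tokens.
cost = estimate_reembedding_cost(2_000_000, 800, 0.13)
print(f"Estimated re-embedding cost: {cost:.2f}")
```

Remember this is on top of normal traffic, and a failed migration that you redo pays it twice.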

Rollback strategy: Always have a feature flag to instantly switch back to the old namespace. New models fail in weird ways you don't discover until production.
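
The gradual rollout and the rollback flag fit together in a few lines. This is a minimal sketch, assuming you bucket users by a stable hash of their ID; `rollout_bucket`, `pick_model_version`, the `kill_switch` flag, and the `acme` tenant are all hypothetical names, and in practice the percentage and flag would come from your feature-flag system.

```python
import hashlib


def rollout_bucket(user_id: str) -> int:
    """Map a user to a stable bucket in [0, 100) so they always see the same model."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def pick_model_version(user_id: str, rollout_percent: int, kill_switch: bool,
                       old: str = "ada002", new: str = "3large") -> str:
    """Return the model version whose namespace should serve this user."""
    if kill_switch:  # instant rollback: everyone goes back to the old namespace
        return old
    return new if rollout_bucket(user_id) < rollout_percent else old


# Usage: the 25% stage of the rollout, with the rollback flag off
version = pick_model_version("user-123", rollout_percent=25, kill_switch=False)
namespace = f"tenant:acme:search:model_{version}"
```

Hashing instead of random sampling keeps each user on one model for the whole test, which makes engagement metrics comparable between the two groups.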

Don't Lock Yourself Into Specific Dimensions

Models have different dimensions: text-embedding-ada-002 is 1536D, text-embedding-3-large is 3072D, bge-large-en-v1.5 is 1024D, and plenty of other open-source models are 768D. You can't mix dimensions in the same index, so plan for this or it'll break.

    # Dimension-aware index routing - works on my machine
    from pinecone import Pinecone

    pc = Pinecone()  # reads PINECONE_API_KEY from the environment

    # TODO: move this to config file
    MODEL_SPECS = {
        "ada002": {"dimensions": 1536, "index": "main-1536d"},
        "3large": {"dimensions": 3072, "index": "main-3072d"},
        "bge": {"dimensions": 1024, "index": "main-1024d"},  # untested
    }

    def get_index_for_model(model_name):
        return MODEL_SPECS[model_name]

    # Route based on embedding dimensions
    def route_query(embedding_vector, namespace, top_k=10):
        dims = len(embedding_vector)
        index_name = f"main-{dims}d"  # same naming scheme as MODEL_SPECS
        return pc.Index(index_name).query(
            vector=embedding_vector, namespace=namespace, top_k=top_k
        )

Pro tip: Don't create separate indexes for every model unless you have to. Use namespaces within dimension-matched indexes to save money.
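
Here's roughly what "namespaces within dimension-matched indexes" looks like in code. Treat it as a sketch: `MODEL_DIMENSIONS`, `resolve_target`, and `check_vector` are hypothetical names, the `3small` entry is only there to show two models sharing one 1536D index, and the `main-{dims}d` index naming is an assumption.

```python
MODEL_DIMENSIONS = {
    "ada002": 1536,
    "3small": 1536,  # hypothetical second 1536D model sharing the same index
    "3large": 3072,
    "bge": 1024,
}


def resolve_target(model_version: str, tenant_id: str, feature: str) -> tuple[str, str]:
    """Return (index_name, namespace): one index per dimension, one namespace per model."""
    dims = MODEL_DIMENSIONS[model_version]
    index_name = f"main-{dims}d"
    namespace = f"tenant:{tenant_id}:{feature}:model_{model_version}"
    return index_name, namespace


def check_vector(model_version: str, vector: list[float]) -> None:
    """Fail fast before an upsert if the vector length doesn't match the model."""
    expected = MODEL_DIMENSIONS[model_version]
    if len(vector) != expected:
        raise ValueError(f"{model_version} expects {expected} dims, got {len(vector)}")


# Two 1536D models land in the same index but in different namespaces
print(resolve_target("ada002", "t1", "search"))
print(resolve_target("3small", "t1", "search"))
```

Because each model gets its own namespace, vectors from different models never get compared against each other even though they share an index.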

Compliance Architecture (For When Lawyers Care)

Privacy-First Design Patterns

GDPR, CCPA, and other privacy laws are a pain in the ass but unavoidable. Build compliance in from day one or you'll hate your life later.

Data minimization strategy:

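    # Don't store PII in vector metadata
    import hashlib
    import hmac
    import os

    HASH_KEY = os.environ["METADATA_HASH_KEY"].encode()  # assumed env var holding a secret key

    # Built-in hash() is salted per process for strings, so use a keyed SHA-256 instead
    def stable_hash(value):
        return hmac.new(HASH_KEY, str(value).encode(), hashlib.sha256).hexdigest()

    safe_metadata = {
        "doc_id": stable_hash(document.id),   # Hash, not original
        "created_at": document.timestamp,     # Dates are usually okay
        "category": document.category,        # Non-personal classification
        "user_hash": stable_hash(user_id),    # Reference, not identity
    }

    # Store user mapping separately (encrypted)
    user_mapping_db[stable_hash(user_id)] = encrypt(user_id)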
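
One cheap way to enforce this is an allowlist applied right before every upsert, so a field someone adds later can't leak through by accident. A minimal sketch, assuming the four metadata keys above are the only ones you want to keep; `ALLOWED_METADATA_KEYS` and `scrub_metadata` are hypothetical names.

```python
ALLOWED_METADATA_KEYS = {"doc_id", "created_at", "category", "user_hash"}


def scrub_metadata(metadata: dict) -> dict:
    """Keep only allowlisted keys so stray PII never reaches the vector store."""
    return {k: v for k, v in metadata.items() if k in ALLOWED_METADATA_KEYS}


# The email field is silently dropped before it can be written anywhere
raw = {"doc_id": "9f2c", "category": "billing", "email": "jane@example.com"}
clean = scrub_metadata(raw)
```

An allowlist beats a blocklist here because you can't predict every PII field name that will show up.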

Right-to-deletion implementation:

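    from datetime import datetime, timezone

    async def gdpr_delete_user(user_id):
        # Must be the same stable hash used at write time (e.g. HMAC-SHA256);
        # built-in hash() changes between processes.
        user_hash = stable_hash(user_id)

        # Find all namespaces for this user
        user_namespaces = await find_namespaces_by_pattern(f"*:{user_hash}:*")

        # Delete from Pinecone (assumes an async index handle; drop the await
        # with the synchronous client)
        for namespace in user_namespaces:
            await index.delete(delete_all=True, namespace=namespace)

        # Remove from user mapping (no error if it's already gone)
        user_mapping_db.pop(user_hash, None)

        # Log for audit trail
        await audit_log.record({
            "action": "user_data_deletion",
            "user_hash": user_hash,
            "timestamp": datetime.now(timezone.utc),
            "namespaces_deleted": len(user_namespaces),
        })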

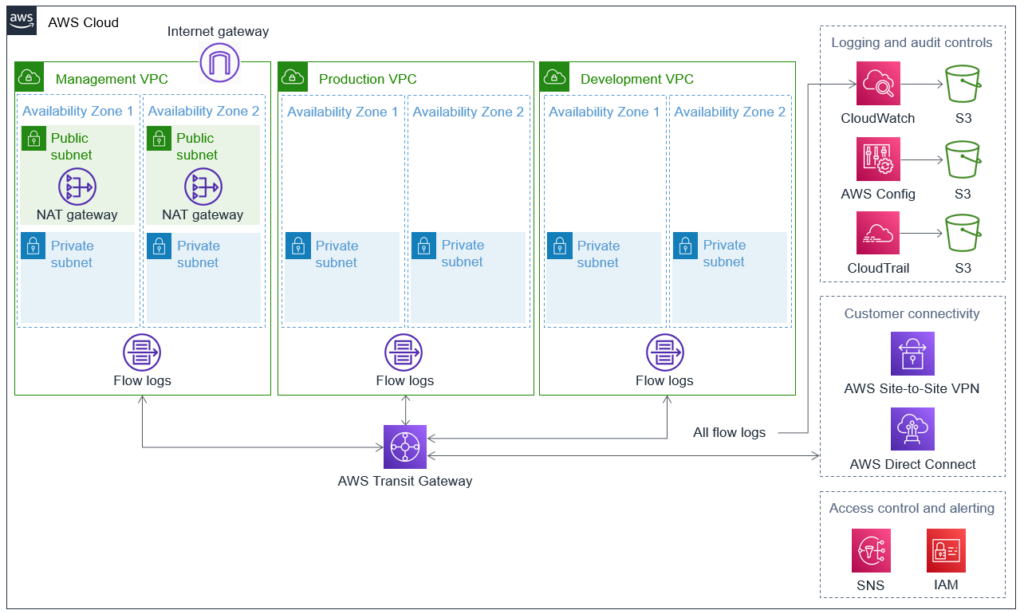

Multi-region compliance (enterprise requirement):

    # Route data based on user location and regulations
    def get_compliant_index(user_location, data_type):
        if user_location.startswith("EU"):
            return pinecone_eu_client        # EU data stays in EU
        elif data_type == "medical":
            return pinecone_us_hipaa_client  # HIPAA-compliant infrastructure
        else:
            return pinecone_default_client

Scaling Beyond Pinecone (Multi-Cloud Reality)

Vendor Lock-in Escape Hatch

Don't put all your eggs in one basket. Build fallbacks from day one.

Multi-provider architecture:

    class VectorDatabaseRouter:
        def __init__(self):
            self.primary = PineconeClient()   # Primary for performance
            self.secondary = QdrantClient()   # Backup for cost/control
            self.cache = RedisVectorCache()   # In-memory fallback

        async def resilient_query(self, query_vector, namespace):
            # L1 cache (sub-millisecond)
            cached = await self.cache.get(query_vector, namespace)
            if cached is not None:  # an empty result list is still a valid hit
                return cached

            # L2 primary service (10-50ms)
            try:
                result = await self.primary.query(query_vector, namespace)
                await self.cache.set(query_vector, namespace, result)
                return result
            except (TimeoutError, ServiceUnavailable, RateLimitError):
                # L3 secondary fallback (50-200ms but better than nothing)
                return await self.secondary.query(query_vector, namespace)

Why multi-cloud matters:

- Pinecone outages do happen

- Price changes can kill your margins overnight

- Different providers excel at different workloads

- Compliance requirements may force geographic distribution

- Spreading workloads across providers reduces vendor lock-in risk

Implementation reality: Start with Pinecone, add fallbacks as you scale. Don't over-engineer from day one, but design the interfaces to support it.
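
"Design the interfaces to support it" can be as small as one protocol that your application code depends on instead of a vendor client. This is a sketch under assumptions: `VectorStore`, `InMemoryStore`, and `search` are hypothetical names, and the in-memory backend (dot-product ranking) exists only to show the shape. A real Pinecone or Qdrant adapter would implement the same two methods.

```python
from typing import Protocol


class VectorStore(Protocol):
    def upsert(self, namespace: str, vector_id: str, vector: list[float]) -> None: ...
    def query(self, namespace: str, vector: list[float], top_k: int) -> list[str]: ...


class InMemoryStore:
    """Toy backend (dot-product ranking) used only to show the interface shape."""

    def __init__(self) -> None:
        self._data: dict[str, dict[str, list[float]]] = {}

    def upsert(self, namespace, vector_id, vector):
        self._data.setdefault(namespace, {})[vector_id] = vector

    def query(self, namespace, vector, top_k):
        items = self._data.get(namespace, {})
        score = lambda v: sum(a * b for a, b in zip(vector, v))
        return sorted(items, key=lambda i: score(items[i]), reverse=True)[:top_k]


def search(store: VectorStore, namespace: str, vector: list[float]) -> list[str]:
    """Application code depends on the protocol, not on a vendor client."""
    return store.query(namespace, vector, top_k=3)


store = InMemoryStore()
store.upsert("demo", "a", [1.0, 0.0])
store.upsert("demo", "b", [0.0, 1.0])
hits = search(store, "demo", [0.9, 0.1])
```

Swapping providers then means writing one new adapter, not touching every call site. The toy backend also doubles as a test fake.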

The patterns in this guide provide the foundation for production systems that scale with your AI ambitions while maintaining operational excellence. Focus on the architecture decisions that matter: namespace design, cost management, monitoring, and future-proofing. The rest can be optimized later.

Building these systems requires ongoing learning and adaptation as the vector database ecosystem continues to evolve. The resources in our final section will help you stay current with the latest developments and connect with the community of practitioners who are solving similar challenges.

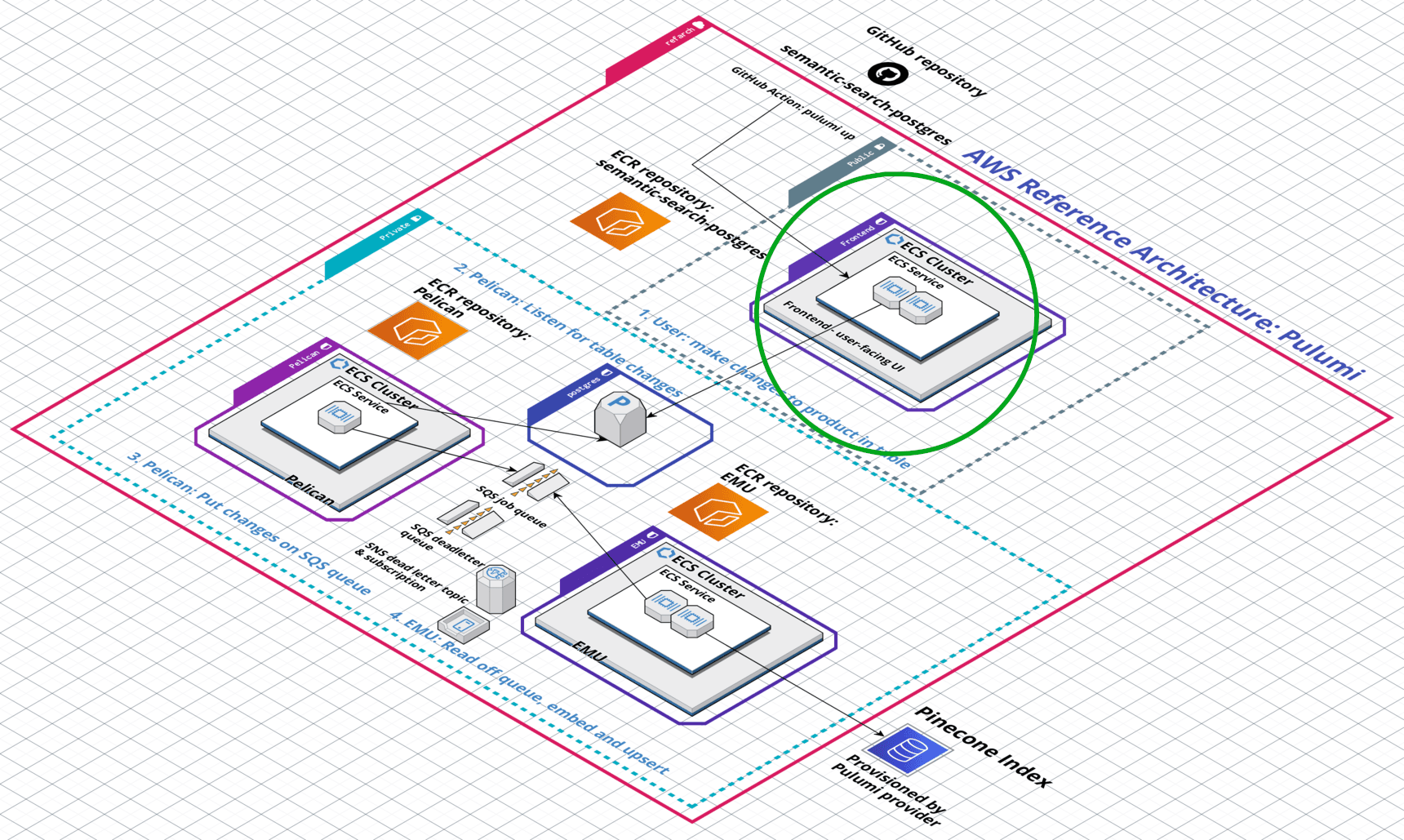

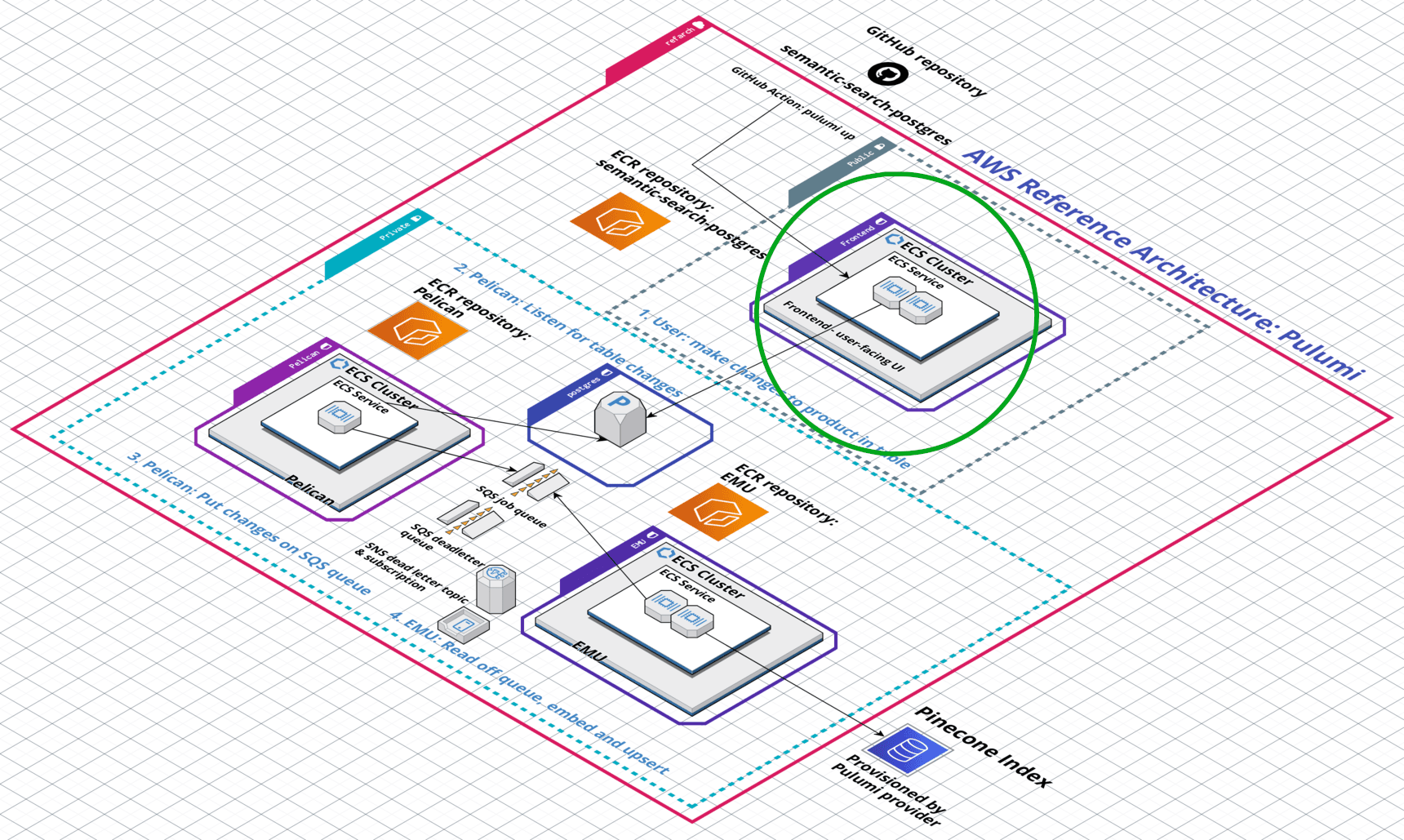

Microservice Decomposition Strategy

As systems scale, decompose vector operations into focused services:

- Embedding Service: Handles model inference and caching

- Vector Storage Service: Manages Pinecone operations and namespaces

- Query Routing Service: Implements hybrid search and reranking

- Analytics Service: Monitors performance and costs

The microservices architecture patterns provide detailed guidance on service decomposition strategies, while the distributed systems primer covers essential concepts for scaling these architectures.

Inter-service communication:

    # Use async messaging for non-critical paths
    async def index_document(document):
        # Immediate: Generate embeddings
        embeddings = await embedding_service.generate(document.content)

        # Background: Store vectors
        await message_queue.send("vector.upsert", {
            "namespace": document.namespace,
            "vectors": embeddings,
            "metadata": document.metadata,
        })

        # Background: Update analytics
        await message_queue.send("analytics.document_indexed", {
            "doc_id": document.id,
            "size": len(embeddings),
        })
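
The other half of that pattern is the consumer that drains the queue. Here's a minimal sketch using `asyncio.Queue` as a stand-in for a real message broker; `upsert_worker`, the message shape, and the dict acting as the vector store are all assumptions for illustration.

```python
import asyncio


async def upsert_worker(queue: asyncio.Queue, store: dict) -> None:
    """Drain 'vector.upsert' messages; `store` is a dict standing in for the vector DB."""
    while True:
        message = await queue.get()
        try:
            store.setdefault(message["namespace"], []).append(message["vectors"])
        finally:
            queue.task_done()  # always mark the message done so join() can't hang


async def main() -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    store: dict = {}
    worker = asyncio.create_task(upsert_worker(queue, store))

    # Producer side: enqueue and move on immediately
    await queue.put({"namespace": "tenant:acme:docs:model_3large", "vectors": [0.1, 0.2]})

    await queue.join()  # wait until the worker has processed everything
    worker.cancel()     # stop the background loop
    return store


result = asyncio.run(main())
```

With a real broker you'd also want retries and a dead-letter queue, since a dropped upsert message means a document that silently never becomes searchable.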

Caching That Actually Helps

Set up multi-layer caching for different query patterns:

    # Hot/warm/cold caching strategy - works most of the time
    class SmartVectorCache:
        def __init__(self):
            self.hot_cache = RedisCache(ttl=300)        # 5 min for recent queries
            self.warm_cache = MemcachedCache(ttl=3600)  # 1 hour for popular queries
            self.cold_cache = S3Cache(ttl=86400)        # 24 hours for rare queries - TODO: tune these

        async def get_or_query(self, query_vector, namespace):
            # Check hot cache first
            result = await self.hot_cache.get(query_vector, namespace)
            if result is not None:
                return result

            # Check warm cache, promoting hits to hot
            result = await self.warm_cache.get(query_vector, namespace)
            if result is not None:
                await self.hot_cache.set(query_vector, namespace, result)
                return result

            # Check cold cache before paying for a Pinecone query
            result = await self.cold_cache.get(query_vector, namespace)
            if result is not None:
                return result

            # Query Pinecone and cache result
            result = await pinecone_query(query_vector, namespace)

            # Pick the cache tier based on query frequency
            query_frequency = await self.get_query_frequency(query_vector)
            if query_frequency > 10:     # Popular query
                await self.hot_cache.set(query_vector, namespace, result)
            elif query_frequency > 1:    # Moderate query
                await self.warm_cache.set(query_vector, namespace, result)
            else:                        # Rare query
                await self.cold_cache.set(query_vector, namespace, result)
            return result
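
One detail the cache classes above gloss over is what the cache key actually is, since you can't use a list of floats as a Redis key. A simple option, sketched here under assumptions, is to round the vector and hash its bytes; `vector_cache_key` and the `precision` default are hypothetical.

```python
import hashlib
import struct


def vector_cache_key(query_vector, namespace, precision=4):
    """Build a deterministic cache key from a namespace and a rounded query vector."""
    # Adding 0.0 turns -0.0 into 0.0 so both pack to the same bytes
    rounded = [round(float(x), precision) + 0.0 for x in query_vector]
    packed = struct.pack(f"<{len(rounded)}d", *rounded)
    digest = hashlib.sha256(packed).hexdigest()
    return f"{namespace}:{digest}"


key = vector_cache_key([0.12345678, 0.5], "tenant:acme:search:model_3large")
```

This only gets hits for repeated or near-identical vectors, which in practice means the same query text embedded by the same model. It won't match queries that are merely semantically similar.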

Making Queries Not Suck

Optimize for different query patterns automatically:

    # Adaptive query optimization
    class QueryOptimizer:
        def optimize_query(self, query_vector, filters, top_k):
            # Reduce top_k for highly selective filters
            if self.is_highly_selective(filters):
                optimized_k = min(top_k, 50)
            else:
                optimized_k = top_k

            # Use approximate search for large result sets
            if optimized_k > 100:
                return self.approximate_search(query_vector, filters, optimized_k)
            else:
                return self.exact_search(query_vector, filters, optimized_k)

Monitoring and Observability Evolution

Use AI to monitor AI systems - detect anomalies in vector search performance:

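    # Anomaly detection for query patterns
    from sklearn.ensemble import IsolationForest

    class VectorSearchMonitor:
        def __init__(self):
            self.baseline_model = IsolationForest()

        def fit_baseline(self, historical_metrics):
            # IsolationForest must be fitted on normal traffic before it can score anything
            self.baseline_model.fit(self.extract_features(historical_metrics))

        async def detect_anomalies(self, query_metrics):
            # Features: latency, result relevance, query volume
            features = self.extract_features(query_metrics)
            anomaly_scores = self.baseline_model.decision_function(features)

            # Scores fall in roughly [-0.5, 0.5] with default settings; negative means outlier
            if anomaly_scores.min() < 0:
                await self.alert_performance_anomaly(query_metrics)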
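
If pulling in scikit-learn feels like overkill at first, a plain z-score on latency catches the obvious spikes. To be clear, this is a simpler swapped-in technique, not Isolation Forest, and it only looks at one metric; `latency_anomalies` and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def latency_anomalies(baseline_ms, recent_ms, z_threshold=3.0):
    """Return recent latencies more than z_threshold standard deviations above the baseline mean."""
    mu = mean(baseline_ms)
    sigma = stdev(baseline_ms)
    if sigma == 0:  # perfectly flat baseline: anything slower counts as anomalous
        return [x for x in recent_ms if x > mu]
    return [x for x in recent_ms if (x - mu) / sigma > z_threshold]


baseline = [20, 22, 19, 21, 23, 20, 22, 21]  # made-up latencies in ms
flagged = latency_anomalies(baseline, [21, 24, 95])
```

Here only the 95 ms query is flagged. Once you need to correlate latency with relevance and volume at the same time, that's the point where a multivariate model earns its keep.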

Predictive scaling:

    # Predict capacity needs based on usage patterns
    from prophet import Prophet

    def predict_scaling_needs(historical_metrics, current_capacity):
        # historical_metrics: DataFrame with columns "ds" (timestamp) and "y" (query volume)
        model = Prophet()
        model.fit(historical_metrics)

        # Use time series forecasting for query volume
        future = model.make_future_dataframe(periods=7)  # Next 7 days
        forecast = model.predict(future)
        peak = forecast["yhat"].tail(7).max()

        # Recommend capacity adjustments
        if peak > current_capacity * 0.8:
            return "scale_up", peak
        elif peak < current_capacity * 0.3:
            return "scale_down", peak
        return "no_change", current_capacity
