Cold starts mean your API is basically dead in the water for the first few seconds. No persistent processes, no warm servers - just you, Lambda, and the sound of users bouncing while AWS decides to wake up your function.

Every time Lambda downloads 600KB of Express garbage, I lose money and patience. Hono's 12KB isn't just a nice-to-have - it's the difference between a usable API and watching users bail during that painful first request.

Bundle Size Impact on Cold Starts

These numbers are from actual production deployments, not synthetic benchmarks:

- Cloudflare Workers: Hono ~100ms, Express 2+ seconds of pure suffering

- AWS Lambda: Hono ~500ms, Express hits 3-4 seconds (and sometimes just times out)

- Vercel Edge: Same story, different platform

Cold starts are random as hell - same function can take 200ms or 3 seconds depending on AWS's mood, your region, and whether Mercury is in retrograde. But smaller bundles consistently perform better across this chaos.
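If you'd rather collect your own numbers than trust mine, the usual trick is a module-scope flag. Module code runs once per cold start, so the first invocation can tag itself. A minimal sketch, where `trackInvocation` is a made-up helper name and not a Lambda or Hono API:

```typescript
// Module scope runs once per cold start; warm invocations reuse these values.
const initializedAt = Date.now()
let isColdStart = true

// Call this at the top of your handler and log the result.
function trackInvocation(now: number = Date.now()): { coldStart: boolean; msSinceInit: number } {
  const info = { coldStart: isColdStart, msSinceInit: now - initializedAt }
  isColdStart = false // every later call in this instance is a warm invocation
  return info
}
```

Log the returned object from your handler and you can split cold and warm latency in whatever metrics tool you already use.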

Common Cold Start Performance Killers

Here's what'll fuck your cold starts (learned the hard way):

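- AWS SDK v2 - This ancient piece of shit adds around 100MB to your install if you pull in the whole package. Use v3 and import only the clients you need (@aws-sdk/client-s3 and friends), or hate your life

- Database connections at startup - An in-process PostgreSQL pool doesn't help when every Lambda instance opens its own connections, and connecting at module load makes every cold start pay for it. Found this out at 3am

- 128MB Lambda memory - Whoever set this default should be fired. Lambda hands out CPU in proportion to memory, so use 512MB-1GB or watch everything crawl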

- Prisma at startup - 200MB+ schema loading killed production twice for me

GitHub issues are full of people who imported their entire ORM at the module level and wondered why Lambda timed out. Don't be that person.
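One way out of the module-level trap is to wrap the expensive setup in a memoized initializer. The first request that needs it pays the cost, and warm invocations reuse the result. A rough sketch; `lazy`, `getDb`, and the fake `DbClient` are placeholders for whatever client you actually use:

```typescript
// Memoize an async initializer so it runs at most once per Lambda instance.
function lazy<T>(factory: () => Promise<T>): () => Promise<T> {
  let cached: Promise<T> | undefined
  return () => {
    if (!cached) {
      cached = factory().catch((err) => {
        cached = undefined // forget the failure so the next request can retry
        throw err
      })
    }
    return cached
  }
}

// Hypothetical stand-in for a real database client.
type DbClient = { query: (sql: string) => Promise<string[]> }

let connects = 0
const getDb = lazy<DbClient>(async () => {
  connects++ // a real factory would open the connection here
  return { query: async (sql) => [`ran: ${sql}`] }
})

// Nothing connects until a request actually reaches this function.
async function listUsers(): Promise<string[]> {
  const db = await getDb()
  return db.query('select * from users')
}
```

Caching the promise instead of the resolved client means two concurrent first requests share one connection attempt.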

Runtime Setup Options

Cloudflare Workers - Fast as hell but picky:

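import { Hono } from 'hono'
import { compress } from 'hono/compress'

const app = new Hono()

// Cloudflare usually compresses responses at the edge already, so this may be redundant on Workers
app.use('*', compress())

export default app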

No filesystem, no Node.js APIs (unless you opt in with the nodejs_compat flag), no bullshit. Perfect for APIs that just serve JSON and don't need to read files.

Bun - Benchmarks look amazing, reality is messier:

import { Hono } from 'hono'
import { serveStatic } from 'hono/bun'

const app = new Hono()

app.use('/static/*', serveStatic({ root: './public' }))

export default {
  port: 3000,
  fetch: app.fetch,
}

Bun breaks on minor version updates. Only use if you enjoy debugging mysterious runtime failures.

Node.js - Boring and reliable:

import { serve } from '@hono/node-server'
import { Hono } from 'hono'

const app = new Hono()

// Listens on port 3000 unless you pass { fetch: app.fetch, port } instead
serve(app)

Slower startup, but shit actually works. Use this unless you have a specific reason not to.
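The three setups look nearly identical because they all share one kind of handler, which takes a standard `Request` and returns a `Response`. That is what `app.fetch` is in the Bun snippet. Here's a dependency-free sketch of that contract, assuming a runtime with the Fetch API globals (Workers, Bun, Node 18+). `handleHealth` is just an illustrative name:

```typescript
// The shape every runtime adapter ultimately calls.
type FetchHandler = (req: Request) => Response | Promise<Response>

const handleHealth: FetchHandler = (req) => {
  const { pathname } = new URL(req.url)
  if (pathname === '/health') {
    return new Response(JSON.stringify({ ok: true }), {
      headers: { 'content-type': 'application/json' },
    })
  }
  return new Response('Not Found', { status: 404 })
}
```

Because the handler only touches web-standard objects, you can exercise it in a unit test with a hand-built `Request` and no server at all.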

Memory Management on Serverless

Express drags in 31 dependencies that each have their own dependency hell. Hono has zero. This shit matters more than you think.

Memory fuckups that'll kill your Lambda:

- Global variables that grow - Stored user data in global scope, OOMed after 2 hours

- Buffering 50MB responses - Tried to return a full CSV, Lambda just died

- Prisma schema loading - 200MB consumed before handling a single request
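For the growing-globals problem, the fix is usually a hard cap on whatever you keep in module scope. Below is a minimal sketch of a size-bounded, least-recently-used cache built on `Map` insertion order. `BoundedCache` is an illustrative name, not a library class:

```typescript
class BoundedCache<K, V> {
  private entries = new Map<K, V>()
  private maxEntries: number

  constructor(maxEntries: number) {
    this.maxEntries = maxEntries
  }

  get(key: K): V | undefined {
    if (!this.entries.has(key)) return undefined
    const value = this.entries.get(key) as V
    // Re-insert so this key becomes the most recently used.
    this.entries.delete(key)
    this.entries.set(key, value)
    return value
  }

  set(key: K, value: V): void {
    this.entries.delete(key)
    this.entries.set(key, value)
    if (this.entries.size > this.maxEntries) {
      // Map iterates in insertion order, so the first key is the oldest.
      const oldest = this.entries.keys().next().value as K
      this.entries.delete(oldest)
    }
  }

  get size(): number {
    return this.entries.size
  }
}
```

A cap of a few hundred entries keeps memory flat no matter how long the instance stays warm. The cache still vanishes on the next cold start, so treat it as an optimization, not storage.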

import { stream } from 'hono/streaming'

app.get('/export-data', async (c) => {
  // Don't load this shit unless you need it
  const { generateReport } = await import('./report-generator')

  // c.stream() was removed in Hono v4; the stream() helper replaces it
  return stream(c, async (s) => {
    await generateReport(s)
  })
})

Lazy imports saved my ass when CSV exports started OOMing Lambda. Load heavy shit only when you actually need it.
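The export handler never shows what lives in `./report-generator`, so here's a guess at the shape: write one CSV line at a time instead of building the whole file in memory. This is a sketch under assumptions. The `rows` and `columns` parameters, the `TextSink` type, and `csvLines` are all hypothetical, and the only thing it expects from the stream is an async `write()`:

```typescript
// Anything with an async write(), e.g. the stream object passed to the handler callback.
type TextSink = { write: (chunk: string) => Promise<unknown> }
type Row = Record<string, string | number>

// Quote a field only when it contains a quote, comma, or newline.
function escapeCsv(value: string): string {
  return /[",\n]/.test(value) ? `"${value.replace(/"/g, '""')}"` : value
}

// Yield the header and then one line per row, so nothing is buffered.
function* csvLines(rows: Iterable<Row>, columns: string[]): Generator<string> {
  yield columns.join(',') + '\n'
  for (const row of rows) {
    yield columns.map((col) => escapeCsv(String(row[col] ?? ''))).join(',') + '\n'
  }
}

async function generateReport(sink: TextSink, rows: Iterable<Row>, columns: string[]): Promise<void> {
  for (const line of csvLines(rows, columns)) {
    await sink.write(line) // only one line is held in memory at a time
  }
}
```

If `rows` comes from a database cursor or another generator, peak memory stays roughly constant whether the export is 50KB or 50MB.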

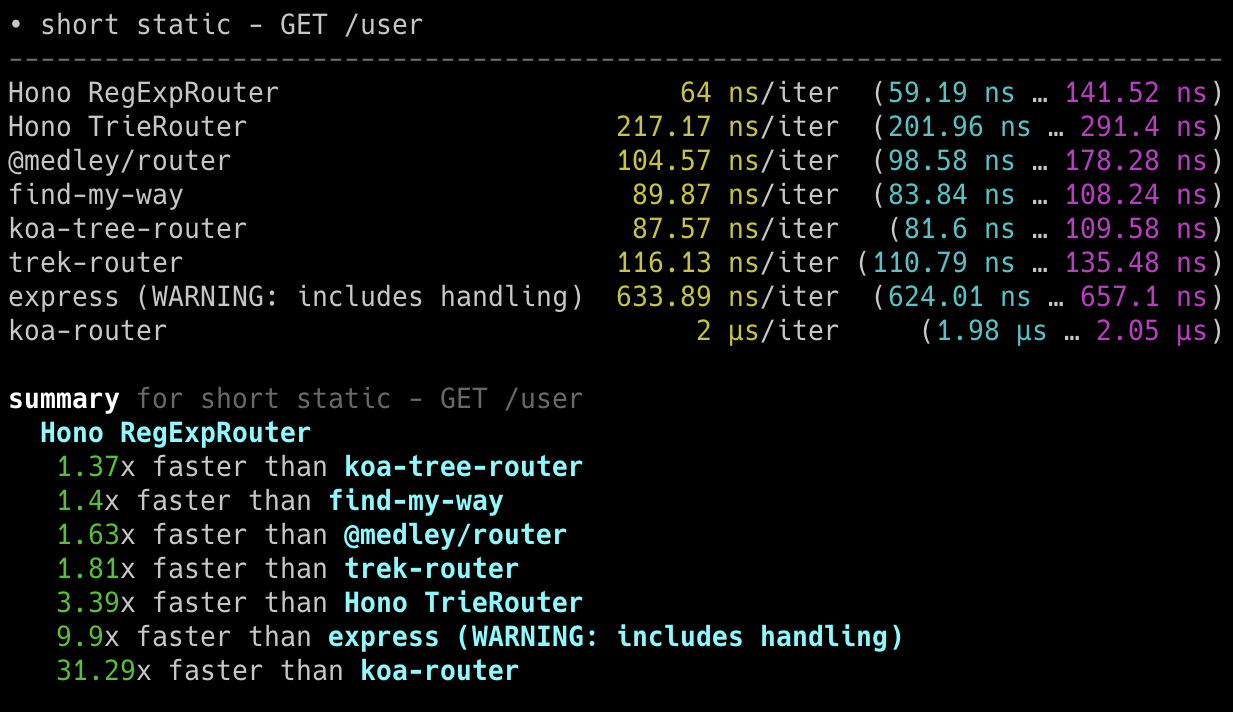

Router Performance Characteristics

Hono has multiple routing strategies because one size doesn't fit all. Express uses one router that gets sluggish around 100+ routes - noticed this when our admin panel started feeling like molasses.

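- RegExpRouter: Compiles every route into one big regex, so matching is a single pass

- LinearRouter: Simple sequential matching; quick to register routes, fine for small apps

- SmartRouter: The default. Picks the best of its registered routers (RegExpRouter and TrieRouter out of the box) for your routes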

Express routing gets slower with every route you add. I saw admin dashboards with 200+ routes take 50ms just to figure out which handler to call.

Hono's routing stays consistent whether you have 10 routes or 1000. Express... doesn't.
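To see why a compiled router doesn't care much about route count, compare a route-by-route scan with a single combined regex. This is a toy illustration of the idea, not Hono's or Express's actual implementation. `toFragment`, `buildLinear`, and `buildCompiled` are made-up names, and only `:param` segments are supported:

```typescript
type Route = { path: string; name: string }

// Turn '/users/:id' into a regex fragment with no capture groups of its own.
function toFragment(path: string): string {
  return path
    .split('/')
    .map((seg) => (seg.startsWith(':') ? '[^/]+' : seg.replace(/[.*+?^${}()|[\]\\]/g, '\\$&')))
    .join('/')
}

// Sequential matching: test each route's regex in turn until one hits.
function buildLinear(routes: Route[]): (url: string) => string | undefined {
  const compiled = routes.map((r) => ({ re: new RegExp(`^${toFragment(r.path)}$`), name: r.name }))
  return (url) => compiled.find((c) => c.re.test(url))?.name
}

// Combined matching: one regex with an empty marker group after each alternative.
// Only the marker of the alternative that matched is defined in the result.
function buildCompiled(routes: Route[]): (url: string) => string | undefined {
  const source = routes.map((r) => `${toFragment(r.path)}$()`).join('|')
  const re = new RegExp(`^(?:${source})`)
  return (url) => {
    const m = re.exec(url)
    if (!m) return undefined
    const idx = m.findIndex((group, i) => i > 0 && group !== undefined)
    return routes[idx - 1]?.name
  }
}

const demoRoutes: Route[] = [
  { path: '/users/:id', name: 'user' },
  { path: '/posts', name: 'posts' },
  { path: '/', name: 'home' },
]
const matchLinear = buildLinear(demoRoutes)
const matchCompiled = buildCompiled(demoRoutes)
```

Both matchers return the same route names. The difference is that the linear one does work proportional to the number of routes on every request, while the compiled one hands the whole problem to the regex engine in one call.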