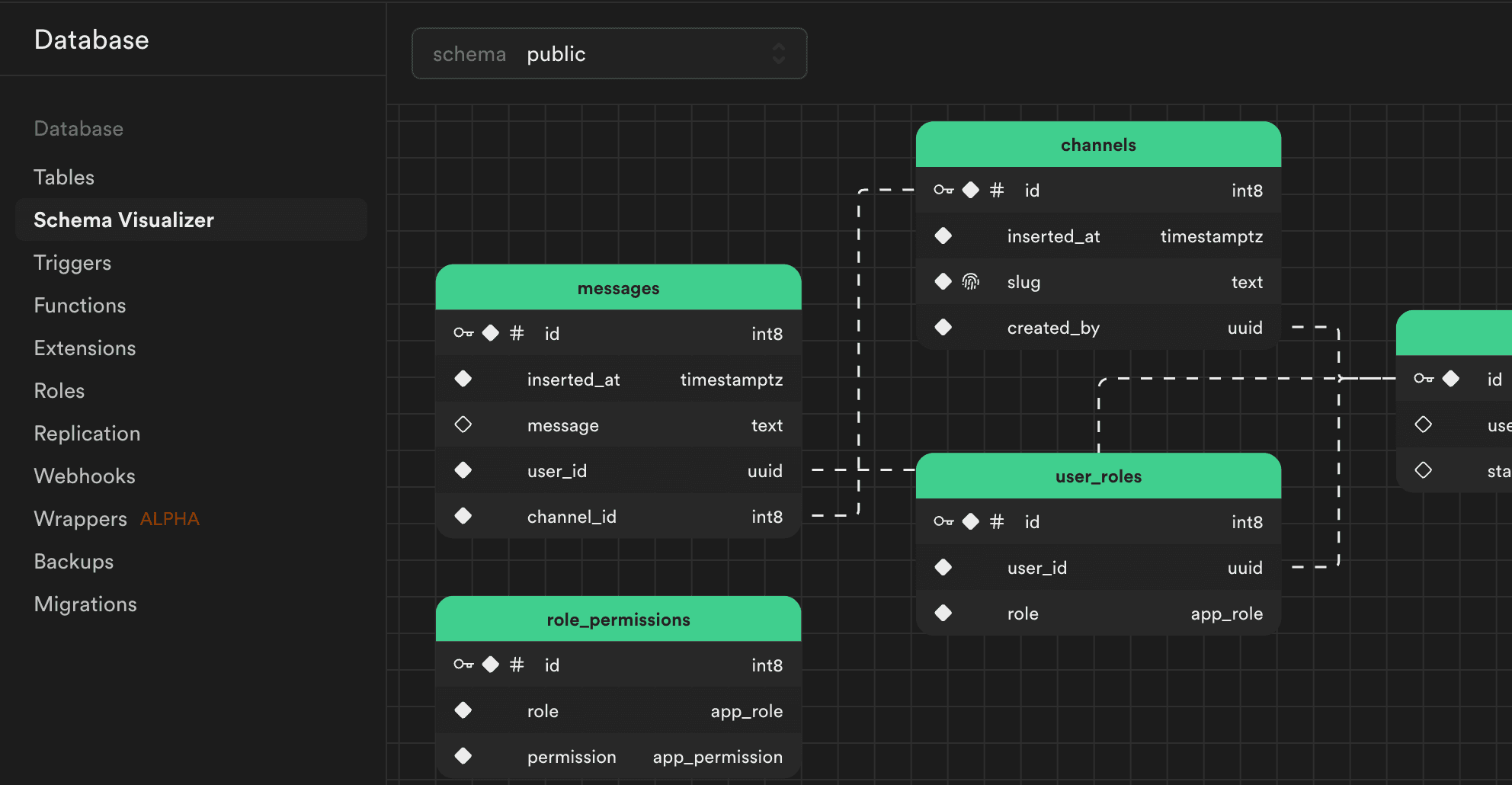

Express is slow as shit. Next.js API routes are overengineered for most use cases. GraphQL is a complexity nightmare that makes simple CRUD operations feel like rocket surgery. If you've ever spent a weekend trying to get PostgreSQL to work reliably in serverless environments, you know the pain.

After using this stack in production for 6 months, here's what actually matters: it's fast and it works. Our production metrics consistently show sub-10ms response times, memory usage under 30MB, and no noticeable cold start penalty.

What Makes This Different From Everything Else

Here's what I learned building SaaS apps with this over the past 6 months:

Type Safety That Actually Works: Change a database column in Drizzle and TypeScript immediately shits on every broken frontend component. No codegen bullshit that breaks your CI pipeline. No GraphQL schema drift that haunts you at 3am. Just TypeScript inference working as designed, with Drizzle's type-safe schema definitions and tRPC's end-to-end type safety.
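Under the hood this is plain type inference: the row type is derived from the schema value, so there's nothing to regenerate. Here's a dependency-free sketch of the idea. The `column` helper and `InferRow` type are hypothetical stand-ins, not Drizzle's API. Drizzle's real equivalent is `typeof table.$inferSelect`.

```typescript
// Hypothetical mini schema builder: NOT Drizzle's API, just the same inference idea.
type KindToTs = { int: number; text: string; bool: boolean };
type ColumnKind = keyof KindToTs;

interface Column<K extends ColumnKind> {
  name: string; // SQL column name
  kind: K;      // literal type ("int" | "text" | "bool") drives inference
}

function column<K extends ColumnKind>(name: string, kind: K): Column<K> {
  return { name, kind };
}

// Derive a row type from column definitions (cf. Drizzle's `typeof table.$inferSelect`).
type InferRow<Cols> = {
  [Key in keyof Cols]: Cols[Key] extends { kind: infer Kind }
    ? Kind extends ColumnKind
      ? KindToTs[Kind]
      : never
    : never;
};

// The schema is the single source of truth; there is no codegen step.
const userColumns = {
  id: column("id", "int"),
  email: column("email", "text"),
  isAdmin: column("is_admin", "bool"),
};

type User = InferRow<typeof userColumns>;

// "Frontend" code typed against the inferred row. Rename or retype `email`
// above and this function stops compiling.
function displayName(user: User): string {
  return user.isAdmin ? `${user.email} (admin)` : user.email;
}
```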

Edge Performance That's Not Marketing Bullshit: Hono actually delivers sub-10ms responses on Cloudflare Workers. I'm talking real production metrics, not synthetic benchmarks. Our dashboard loads faster from Tokyo than most apps load from the same continent.

Setup That Doesn't Make You Want To Quit: Three packages. One config file. Deploy anywhere. No webpack hell, no Babel configuration, no "it works on my machine" syndrome. The T3 stack guys figured this out - you should be building features, not configuring build tools for 6 hours.
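In a Hono + Drizzle setup, the config file you can't avoid is Drizzle Kit's, which drives migrations. Here's a minimal sketch of one. It assumes Postgres, a schema file at `src/db/schema.ts`, and a `DATABASE_URL` environment variable. All three are illustrative choices, so adjust them to your layout.

```typescript
// drizzle.config.ts: read by drizzle-kit (e.g. `npx drizzle-kit generate`).
import { defineConfig } from "drizzle-kit";

export default defineConfig({
  dialect: "postgresql",          // assumes Postgres (e.g. Neon)
  schema: "./src/db/schema.ts",   // assumed location of your pgTable definitions
  out: "./drizzle",               // where generated SQL migrations are written
  dbCredentials: {
    url: process.env.DATABASE_URL!, // assumed env var name
  },
});
```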

How This Actually Works in Practice

Forget the architecture theory bullshit. Here's what matters:

Edge Runtime That Doesn't Suck

Express assumes your server runs forever. That's not how Cloudflare Workers or Vercel Edge Runtime work: isolates spin up in milliseconds and can be recycled at any time, so nothing in memory is guaranteed to survive between requests. Most frameworks break horribly in this environment because they weren't designed for stateless execution models.
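In practice "stateless" means your entry point is a function from `Request` to `Response`, and anything parked in module scope can vanish whenever the runtime recycles the isolate. Here's a dependency-free sketch using only Web Standard APIs, which Node 18+ also ships. The `worker` object and the `/health` route are made up for illustration. Hono's `app.fetch` plugs into the same slot.

```typescript
// Illustrative handler shape only (the `worker` name and /health route are made up).
// Don't keep request-critical state in module scope: the runtime may recycle this
// isolate at any time, and concurrent isolates never share it.
interface FetchHandler {
  fetch(request: Request): Promise<Response>;
}

const worker: FetchHandler = {
  async fetch(request: Request): Promise<Response> {
    const url = new URL(request.url);
    if (url.pathname === "/health") {
      // Everything this response needs comes from the request itself
      // (or from external storage), never from a previous invocation's memory.
      return new Response(JSON.stringify({ ok: true }), {
        headers: { "content-type": "application/json" },
      });
    }
    return new Response("Not found", { status: 404 });
  },
};

// In an actual Worker module you would `export default worker;`.
```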

Hono's tiny 7KB bundle boots instantly. Drizzle actually works in edge environments, unlike Prisma, which requires connection pooling gymnastics. tRPC eliminates hand-written REST routes entirely: the client imports only your router's type, so calling a procedure is a type-checked function call with no code generation. Its request batching folds concurrent calls into a single HTTP request, and benchmarks show 40% fewer round trips compared to traditional REST APIs with similar functionality.
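Most of the round-trip savings come from request batching: tRPC's `httpBatchLink` sends procedure calls made at the same time as one HTTP request. Here's a dependency-free sketch of that idea. It is not tRPC's implementation, and `createBatcher` and the `transport` callback are hypothetical names. Every call issued before the next microtask runs shares one request.

```typescript
// Hypothetical batcher illustrating the idea behind tRPC's batch link (not its code).
type Call = { path: string; input: unknown };
type Transport = (calls: Call[]) => Promise<unknown[]>;

interface Pending {
  call: Call;
  resolve: (value: unknown) => void;
  reject: (error: unknown) => void;
}

function createBatcher(transport: Transport) {
  let queue: Pending[] = [];
  let scheduled = false;

  function flush(): void {
    const batch = queue;
    queue = [];
    scheduled = false;
    // One transport call (think: one HTTP request) for the whole batch.
    transport(batch.map((entry) => entry.call)).then(
      (results) => batch.forEach((entry, i) => entry.resolve(results[i])),
      (error) => batch.forEach((entry) => entry.reject(error)),
    );
  }

  return function call(path: string, input: unknown): Promise<unknown> {
    return new Promise((resolve, reject) => {
      queue.push({ call: { path, input }, resolve, reject });
      if (!scheduled) {
        scheduled = true;
        queueMicrotask(flush); // everything queued before this runs is batched together
      }
    });
  };
}
```

The trade-off is a tiny delay (one microtask) in exchange for fewer requests. A production link also has to handle things like URL length limits and per-call errors.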

One Repo, Zero Bullshit

Microservices fragment your codebase across 47 different repositories. This monorepo approach keeps your API, database, and frontend in one place with shared TypeScript types. No more "the frontend says it's a string but the backend sends a number" bugs.

Check the TER stack repo - one codebase, one deployment, zero schema drift between frontend and backend. It actually works.

Hosting Reality: Vercel's free tier will murder your database connections if you get any traffic. Learned this when our landing page hit Hacker News and we got a $300 Neon bill.
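The root problem is that each concurrent serverless invocation can open its own database connection, so a traffic spike multiplies them. One common mitigation is to cap concurrency, so extra requests wait instead of opening new connections. Here's a dependency-free sketch of a tiny pool. `createPool` and the `connect` callback are hypothetical. In production you'd more likely lean on your provider's pooled endpoint (Neon offers one) or its serverless driver.

```typescript
// Hypothetical minimal pool (not a real driver API): at most `max` connections are
// ever opened; callers beyond that wait for a released one instead of opening more.
interface Pool<C> {
  withConnection<T>(fn: (conn: C) => Promise<T>): Promise<T>;
  opened(): number;
}

function createPool<C>(connect: () => Promise<C>, max: number): Pool<C> {
  const idle: C[] = [];
  const waiters: ((conn: C) => void)[] = [];
  let openedCount = 0;

  async function acquire(): Promise<C> {
    if (idle.length > 0) return idle.pop() as C;
    if (openedCount < max) {
      openedCount++;
      try {
        return await connect();
      } catch (error) {
        openedCount--; // the slot was never actually used
        throw error;
      }
    }
    // Pool exhausted: queue up until release() hands over a connection.
    return new Promise<C>((resolve) => waiters.push(resolve));
  }

  function release(conn: C): void {
    const next = waiters.shift();
    if (next) next(conn); // pass the connection straight to the longest waiter
    else idle.push(conn);
  }

  return {
    async withConnection<T>(fn: (conn: C) => Promise<T>): Promise<T> {
      const conn = await acquire();
      try {
        return await fn(conn);
      } finally {
        release(conn);
      }
    },
    opened: () => openedCount,
  };
}
```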

SQL When You Need It, ORM When You Don't

Prisma abstracts SQL until you need complex queries, then you're fucked. Drizzle lets you drop down to raw SQL through its sql template tag when you need it (you declare the result type yourself), and gives you a decent query builder for simple stuff. Performance benchmarks show Drizzle uses 30MB memory vs Prisma's 80MB, which matters in edge environments. Best of both worlds without the vendor lock-in nightmare.
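A raw SQL template stays safe because interpolated values become bind parameters instead of being concatenated into the query text. The result type is something you declare, not something inferred from the SQL, and Drizzle's `sql<T>` works the same way in that respect. Here's a dependency-free sketch of the parameterization half. `sql` and `SqlQuery` below are hypothetical, not Drizzle's exports.

```typescript
// Hypothetical tagged template: NOT Drizzle's `sql`, just the core idea.
// `T` is a phantom type recording the row shape you claim the query returns.
interface SqlQuery<T> {
  text: string;
  params: unknown[];
  readonly __row?: T; // type-only marker; never set at runtime
}

function sql<T = unknown>(strings: TemplateStringsArray, ...values: unknown[]): SqlQuery<T> {
  // Interleave literal chunks with numbered placeholders ($1, $2, ... as in Postgres).
  let text = strings[0];
  for (let i = 0; i < values.length; i++) {
    text += `$${i + 1}${strings[i + 1]}`;
  }
  return { text, params: values };
}

// A query you'd rather write by hand than coax out of an ORM.
const minSeats = 5;
const tier = "pro'; DROP TABLE orgs; --"; // hostile input stays a parameter
const query = sql<{ orgId: number; activeSeats: number }>`
  SELECT org_id AS "orgId", count(*)::int AS "activeSeats"
  FROM seats WHERE tier = ${tier}
  GROUP BY org_id HAVING count(*) >= ${minSeats}`;
```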

What Breaks (And What Doesn't)

After 6 months in production, here's the real shit:

What Actually Works: Development is fast as hell. Change a database column and every broken frontend component lights up red instantly. Deploy to edge and your users in Singapore get the same 10ms response times as users in Ohio. The feedback loop is addictive.

What Will Piss You Off: TypeScript compilation gets slow as fuck with complex tRPC routers. Edge runtimes have tight memory limits (Cloudflare Workers caps each isolate at 128MB), so your 500MB CSV processing job won't work. Native mobile apps can't use tRPC's TypeScript client, so you're writing REST endpoints for them anyway.
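The usual way around the memory cap is to stream. You process the file chunk by chunk, so memory scales with one line rather than the whole file. Here's a dependency-free sketch using Web Streams, which are available in Workers and Node 18+. `sumCsvColumn` is a hypothetical helper. It assumes a simple CSV with a header row and no quoted commas or embedded newlines.

```typescript
// Sum one numeric column of a CSV stream without holding the whole file in memory.
// Assumes: first line is a header; fields contain no quoted commas or newlines.
async function sumCsvColumn(stream: ReadableStream<Uint8Array>, column: string): Promise<number> {
  const reader = stream.getReader();
  const decoder = new TextDecoder();
  let buffered = "";
  let columnIndex = -1;
  let total = 0;

  const handleLine = (raw: string): void => {
    const line = raw.replace(/\r$/, ""); // tolerate CRLF line endings
    if (line === "") return;
    const fields = line.split(",");
    if (columnIndex === -1) {
      columnIndex = fields.indexOf(column); // first non-empty line is the header
      if (columnIndex === -1) throw new Error(`column "${column}" not found`);
      return;
    }
    total += Number(fields[columnIndex]);
  };

  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    buffered += decoder.decode(value, { stream: true });
    // Process every complete line; keep the trailing partial line for the next chunk.
    const lines = buffered.split("\n");
    buffered = lines.pop() ?? "";
    lines.forEach(handleLine);
  }
  buffered += decoder.decode(); // flush any bytes the decoder was still holding
  handleLine(buffered);
  return total;
}
```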

Migration Reality: We moved from Express + Prisma and the hardest part wasn't the code - it was convincing the team that 'new' doesn't mean 'broken'. Six months later, our API response times are 70% faster and nobody misses Prisma's generated types. But getting the frontend team to switch from REST to tRPC took three weeks of proving it works and one weekend of them fixing merge conflicts with the old API client.

Version Hell Alert: Pin your versions. I'm running Hono 4.6.3, Drizzle 0.44.5, tRPC 11.0.0 as of August 2024. These tools move fast and breaking changes will ruin your week if you let npm auto-update.
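Pinning means exact versions in package.json instead of `^` or `~` ranges, and `npm install --save-exact` writes them that way. Here's a dependency-free sketch of a check you could run in CI. `findUnpinned` is a hypothetical helper, and it only recognizes plain semver strings.

```typescript
// Hypothetical CI check: list dependencies whose version is a range, not an exact pin.
type DependencyMap = Record<string, string>;

// Exact "MAJOR.MINOR.PATCH", optionally with a prerelease tag like "-beta.1".
const EXACT_SEMVER = /^\d+\.\d+\.\d+(-[0-9A-Za-z.-]+)?$/;

function findUnpinned(deps: DependencyMap): string[] {
  return Object.entries(deps)
    .filter(([, version]) => !EXACT_SEMVER.test(version))
    .map(([name, version]) => `${name}@${version}`);
}

// Example: the pinned versions above, plus one dependency accidentally left as a range.
const dependencies: DependencyMap = {
  hono: "4.6.3",
  "drizzle-orm": "0.44.5",
  "@trpc/server": "11.0.0",
  "@trpc/client": "^11.0.0",
};
```

In CI you'd parse package.json with `JSON.parse` and fail the build if the returned list is non-empty.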

When This Stack Makes Sense

Use this for: Internal tools, SaaS dashboards, anything where you control both frontend and backend. Perfect for rapid prototyping that needs to scale.

Don't use this for: Public websites needing SEO, anything requiring server-side rendering, teams that hate TypeScript, or projects that need to support every third-party integration under the sun.

OK, enough theory. Here's how to actually build this shit without losing your mind.