The Reality of GitLab Container Registry

GitLab's container registry solves one of the most annoying problems in DevOps: managing separate credentials for your code repo and your Docker images. Before this, you'd have to juggle Docker Hub credentials, set up separate authentication in CI/CD, and pray that the tokens didn't expire during a critical deployment.

The registry runs alongside your GitLab instance and uses the same permissions. If you can push code to the repo, you can push images to the registry. No more Docker Hub tokens pasted into CI variables: jobs log in with the predefined CI_REGISTRY, CI_REGISTRY_USER, and CI_REGISTRY_PASSWORD variables, and that password is only valid while the job is running. No more service accounts with mysterious permissions, no more authentication failures that break your builds at 2am.

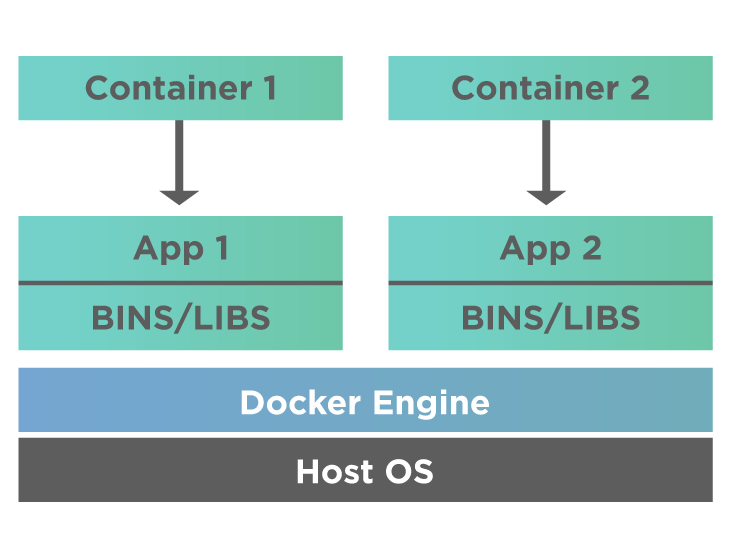

It's built on Docker Distribution, which means it talks the same Registry HTTP API V2 as everything else. Your existing docker commands work: docker push, docker pull, all the shit you're already used to. The difference is authentication just works because it's integrated with GitLab's JWT system.
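
The JWT handshake is the standard Docker token flow that Registry API V2 clients already speak. An unauthenticated push or pull gets a 401 with a WWW-Authenticate: Bearer header, and the client fetches a token from the realm URL that header names. Here's a minimal Python sketch of the client side. It assumes the realm is your instance's /jwt/auth endpoint and the service is container_registry, which are the usual GitLab defaults, so check your own registry config. The hostname and project path are made up.

```python
import re
from urllib.parse import urlencode

# Matches key="value" pairs inside a Bearer challenge.
_PARAM_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_bearer_challenge(header: str) -> dict:
    """Split 'Bearer realm="...",service="...",scope="..."' into a dict."""
    scheme, _, params = header.partition(" ")
    if scheme.lower() != "bearer":
        raise ValueError(f"unexpected auth scheme: {scheme!r}")
    return dict(_PARAM_RE.findall(params))


def token_request_url(challenge: dict) -> str:
    """Build the URL the client GETs (with your GitLab credentials) to obtain a token."""
    query = {"service": challenge["service"]}
    if "scope" in challenge:
        query["scope"] = challenge["scope"]
    return f"{challenge['realm']}?{urlencode(query)}"


# What a registry might send back for an anonymous pull (hypothetical host and project).
header = ('Bearer realm="https://gitlab.example.com/jwt/auth",'
          'service="container_registry",scope="repository:group/project:pull"')
print(token_request_url(parse_bearer_challenge(header)))
```

The docker CLI does all of this for you; the point is that "authentication just works" because GitLab sits at the realm URL.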

How It Actually Works (And Where It Breaks)

The registry runs as a separate service, usually on port 5000, and talks to GitLab through JWT tokens. When you docker push, GitLab issues a token carrying your repo permissions, your Docker client presents it to the registry, and the registry checks the signature and the token's validity window. Simple enough, until clock skew between the GitLab and registry hosts makes tokens invalid and you get hit with HTTP 401 Unauthorized: authentication required errors at 3am. Clock drift of more than 5 minutes breaks JWT validation completely, so keep NTP running on both hosts.
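
When a 401 shows up and the credentials look fine, decode the token and compare its nbf and exp claims against the registry host's clock. Below is a rough Python sketch for debugging. It deliberately skips signature verification. The 60-second leeway is an assumption about how much slack the registry's validator allows, so adjust it for your version.

```python
import base64
import json
import time
from typing import Optional


def decode_claims(token: str) -> dict:
    """Decode a JWT payload WITHOUT verifying the signature (debugging only)."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # JWTs strip base64 padding; put it back
    return json.loads(base64.urlsafe_b64decode(payload))


def time_claim_problem(claims: dict, now: Optional[float] = None,
                       leeway: int = 60) -> Optional[str]:
    """Explain why nbf/exp would fail against this host's clock, or return None."""
    now = time.time() if now is None else now
    if "nbf" in claims and now + leeway < claims["nbf"]:
        return f"not valid yet: this clock is {claims['nbf'] - now:.0f}s behind the issuer"
    if "exp" in claims and now - leeway > claims["exp"]:
        return f"expired {now - claims['exp']:.0f}s ago: this clock is ahead or the token is stale"
    return None
```

Run time_claim_problem(decode_claims(token)) on the registry host with a token you fetched from the realm endpoint. If it reports a gap of minutes, go fix NTP instead of rotating credentials.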

Storage is where things get expensive fast. You can run it on the local filesystem for dev, but production means S3 or equivalent. Set up cleanup policies on day one or your storage bill will make you cry. I've seen orgs hit $50k/month in S3 costs because nobody did and developers kept pushing 2GB images with every commit. Multi-stage builds keep those images small, cleanup policies keep old tags from piling up, and garbage collection is what actually frees the space once tags are deleted.
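
GitLab's cleanup policies are configured per project with settings for how many recent tags to keep, how old a tag must be before it's eligible, and regexes for tags to delete and tags to always keep. Here's a simplified Python model of how those rules combine, loosely following the order GitLab's docs describe. It is not GitLab's code, and the parameter names only mirror the policy settings (keep_n, older_than, name_regex_delete, name_regex_keep). The tag names and dates are invented.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Tag:
    name: str
    created_at: datetime


def tags_to_delete(tags: List[Tag], now: datetime, keep_n: int = 10,
                   older_than: timedelta = timedelta(days=90),
                   name_regex_delete: str = ".*",
                   name_regex_keep: Optional[str] = None) -> List[str]:
    """Simplified model of one cleanup-policy run; returns the tag names it would delete."""
    # 'latest' is never deleted; only tags fully matching the delete regex are candidates.
    candidates = [t for t in tags
                  if t.name != "latest" and re.fullmatch(name_regex_delete, t.name)]
    # Newest first, then spare the keep_n most recent candidates.
    candidates.sort(key=lambda t: t.created_at, reverse=True)
    candidates = candidates[keep_n:]
    # Spare anything not yet older than the age threshold.
    candidates = [t for t in candidates if now - t.created_at > older_than]
    # Tags matching the keep regex always survive.
    if name_regex_keep:
        candidates = [t for t in candidates if not re.fullmatch(name_regex_keep, t.name)]
    return sorted(t.name for t in candidates)


now = datetime(2024, 6, 1)
tags = [Tag("latest", now - timedelta(days=400)),
        Tag("release-1", now - timedelta(days=300)),
        Tag("v1.0", now - timedelta(days=200)),
        Tag("main-abc", now - timedelta(days=100)),
        Tag("main-def", now - timedelta(days=95)),
        Tag("main-ghi", now - timedelta(days=5))]
print(tags_to_delete(tags, now, keep_n=1, name_regex_keep=r"release-.*"))
# ['main-abc', 'main-def', 'v1.0']
```

Playing with keep_n and older_than against a realistic tag list is a cheap way to see how aggressive a policy is before you switch it on.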

The registry authenticates through GitLab's main auth system, which sounds great until LDAP is down and no one can deploy. At least with Docker Hub, when their auth breaks, it's their problem.

The Metadata Database Finally Fixes the Garbage Collection Nightmare

GitLab 17.3 introduced a metadata database that moves registry metadata from object storage into PostgreSQL. This finally fixes the garbage collection problem that's been making ops teams miserable for years.

Before this, cleaning up old images meant taking the entire registry offline - coordinating with every team, setting maintenance windows, and inevitably someone's deployment would break because they didn't get the memo. Online garbage collection runs in the background now, cleaning up orphaned layers without downtime.
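
"Orphaned layers" are blobs that no tagged manifest references anymore. The reachability idea behind collecting them fits in a few lines of Python over a toy registry: mark everything reachable from tags, then sweep the rest. This is the textbook mark-and-sweep model, not GitLab's online collector, and the digests are invented.

```python
from typing import Dict, List, Set


def find_orphaned_blobs(tags: Dict[str, str],
                        manifests: Dict[str, List[str]],
                        blobs: Set[str]) -> Set[str]:
    """Mark-and-sweep over a toy registry: tag -> manifest digest -> layer digests."""
    live: Set[str] = set()
    # Mark: each tagged manifest and every layer it lists are live.
    for manifest_digest in tags.values():
        live.add(manifest_digest)
        live.update(manifests.get(manifest_digest, []))
    # Sweep: anything stored but unreachable is garbage.
    return blobs - live


tags = {"v2": "sha256:m2"}  # v1 was deleted, so sha256:m1 is now untagged
manifests = {"sha256:m1": ["sha256:base", "sha256:app-v1"],
             "sha256:m2": ["sha256:base", "sha256:app-v2"]}
blobs = {"sha256:m1", "sha256:m2", "sha256:base", "sha256:app-v1", "sha256:app-v2"}
print(sorted(find_orphaned_blobs(tags, manifests, blobs)))
# ['sha256:app-v1', 'sha256:m1']  (the shared base layer survives)
```

The catch is the sweep step. If a push uploads a layer before its manifest lands, a sweep running at that moment sees an unreferenced blob and deletes it. That race is why the old offline collector needed the registry stopped or read-only, and why online GC relies on the metadata database, where references live in PostgreSQL instead of being rediscovered by walking the bucket.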

The migration to the metadata database is scary as hell for production systems, though. You're basically moving the registry's brain from file-based storage to PostgreSQL. The migration can take hours or days depending on how much shit you've accumulated, and there's no rollback if something goes wrong.

The upside is that the metadata database also gives you storage usage metrics you can actually trust. Before this, figuring out which projects were eating your storage budget meant parsing S3 logs like a caveman. Now you get real-time storage reports, project-level usage breakdowns, and automated cleanup alerts instead of surprise bills.
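
Once project-level numbers exist, finding the worst offenders is just a sort. This Python sketch assumes you've already fetched project records from the Projects API with statistics=true, which includes a container_registry_size field in bytes. The HTTP fetching is left out, and the project paths and sizes are invented.

```python
from typing import Dict, List, Tuple


def human_bytes(n: float) -> str:
    """Format a byte count using binary units."""
    for unit in ("B", "KiB", "MiB", "GiB"):
        if n < 1024:
            return f"{n:.1f} {unit}"
        n /= 1024
    return f"{n:.1f} TiB"


def top_registry_consumers(projects: List[Dict], limit: int = 5) -> List[Tuple[str, int]]:
    """Rank projects by statistics.container_registry_size, largest first."""
    sized = [(p["path_with_namespace"],
              p.get("statistics", {}).get("container_registry_size", 0))
             for p in projects]
    sized.sort(key=lambda item: item[1], reverse=True)
    return sized[:limit]


projects = [
    {"path_with_namespace": "team-a/api", "statistics": {"container_registry_size": 3 * 1024**4}},
    {"path_with_namespace": "team-b/web", "statistics": {"container_registry_size": 40 * 1024**3}},
    {"path_with_namespace": "team-c/docs", "statistics": {"container_registry_size": 0}},
]
for path, size in top_registry_consumers(projects, limit=2):
    print(f"{path}: {human_bytes(size)}")
```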

Security Scanning (And the False Positive Hell)

Container scanning uses Trivy under the hood. Include GitLab's Container-Scanning.gitlab-ci.yml template and it runs as a job in your CI pipeline, dumping results into GitLab's security dashboard. It'll find vulnerabilities in your images, and then you'll spend 3 hours figuring out which ones actually matter and which are false positives.
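
A first pass at that triage can be scripted against the job's JSON report artifact (gl-container-scanning-report.json by default). This Python sketch keeps findings at or above a severity threshold and drops CVEs your team has already reviewed and accepted. The field names (vulnerabilities, severity, identifiers, location.dependency.package.name) follow GitLab's security report format as I understand it, so check them against your own report. The accepted list and the CVE-2099 placeholder IDs are hypothetical.

```python
import json
from typing import Iterable, List, Tuple

# Lowest to highest; unrecognized labels rank lowest.
SEVERITY_RANK = {name: i for i, name in
                 enumerate(["Unknown", "Info", "Low", "Medium", "High", "Critical"])}


def actionable_findings(report_json: str, min_severity: str = "High",
                        accepted_cves: Iterable[str] = ()) -> List[Tuple[str, str, str]]:
    """Return (identifier, severity, package) for findings worth a human's time."""
    accepted = set(accepted_cves)
    threshold = SEVERITY_RANK[min_severity]
    findings = []
    for vuln in json.loads(report_json).get("vulnerabilities", []):
        severity = vuln.get("severity", "Unknown")
        if SEVERITY_RANK.get(severity, 0) < threshold:
            continue
        cves = [i["name"] for i in vuln.get("identifiers", []) if i.get("type") == "cve"]
        if cves and all(cve in accepted for cve in cves):
            continue  # already reviewed and accepted
        package = vuln.get("location", {}).get("dependency", {}).get("package", {}).get("name", "?")
        findings.append((cves[0] if cves else vuln.get("id", "?"), severity, package))
    # Worst first; the sort is stable, so report order is kept within a severity.
    findings.sort(key=lambda f: SEVERITY_RANK.get(f[1], 0), reverse=True)
    return findings


def _vuln(vid: str, severity: str, cve: str, package: str) -> dict:
    """Build one fake report entry for the example below."""
    return {"id": vid, "severity": severity,
            "identifiers": [{"type": "cve", "name": cve}],
            "location": {"dependency": {"package": {"name": package}}}}


report = json.dumps({"vulnerabilities": [
    _vuln("1", "Medium", "CVE-2099-0002", "busybox"),
    _vuln("2", "High", "CVE-2023-44487", "nghttp2-libs"),
    _vuln("3", "Critical", "CVE-2099-0001", "openssl"),
]})
print(actionable_findings(report, accepted_cves={"CVE-2023-44487"}))
```

It won't tell you whether a finding is reachable in your app, but it turns "read 200 rows" into "read the handful nobody has looked at yet".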

The good news is access control just works with GitLab's existing permissions. If you can push to the repo, you can push images. If you can read the project, you can pull images. No separate ACL bullshit to maintain. Project-level permissions control registry access automatically.
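
Under the hood this is the token server's job. The Docker client asks for a scope such as repository:group/project:pull,push, and GitLab issues a JWT whose access claim lists only the actions your project role allows. Here's a minimal Python sketch of that intersection. allowed_actions is a hypothetical stand-in for GitLab's role lookup, which isn't modeled here.

```python
from typing import Dict, List, Set, Tuple


def parse_scope(scope: str) -> Tuple[str, str, Set[str]]:
    """Split 'repository:group/project:pull,push' into (type, name, actions)."""
    resource_type, rest = scope.split(":", 1)
    name, _, action_list = rest.rpartition(":")
    actions = set(action_list.split(",")) if action_list else set()
    return resource_type, name, actions


def access_claim(scope: str, allowed_actions: Set[str]) -> List[Dict]:
    """Grant only the requested actions the user is allowed; the registry enforces the result."""
    resource_type, name, requested = parse_scope(scope)
    granted = sorted(requested & allowed_actions)
    return [{"type": resource_type, "name": name, "actions": granted}]


# A user who can read the project but not push (hypothetical permissions).
print(access_claim("repository:group/project:pull,push", {"pull"}))
```

Per the Docker token spec, getting less than you asked for isn't an error at the token step. You just receive a token without push, and the registry rejects the push itself.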

The bad news is vulnerability scanning can slow down your pipelines significantly, especially for large images. You can disable it, but then security teams get grumpy. You can configure it to only scan certain branches, but then you miss vulnerabilities in development. There's no perfect solution, just different levels of pain. The scanner finds CVE-2023-44487 (HTTP/2 Rapid Reset) in every image using Alpine 3.17, but you can't fix it because updating breaks your application dependencies. Configure security policies and vulnerability management for the full security theater experience.