Your container dies during shutdown because Bun can't hear Docker asking it to stop. When Bun runs as PID 1, the SIGTERM Docker sends goes nowhere. Linux won't apply the default "terminate" action to PID 1, so unless the process installs its own handler, the signal is simply ignored. Docker waits around for 10 seconds like a patient parent, then says 'fuck it' and kills everything with SIGKILL. Hello, exit code 137.

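```dockerfile
# Breaks during shutdown: Bun is PID 1 and SIGTERM goes nowhere
CMD ["bun", "run", "start"]

# Actually handles signals: tini runs as PID 1 and forwards SIGTERM to Bun
# (Debian-based image; or keep the CMD above and start with `docker run --init`)
RUN apt-get update && apt-get install -y --no-install-recommends tini \
    && rm -rf /var/lib/apt/lists/*
ENTRYPOINT ["/usr/bin/tini", "--"]
CMD ["bun", "run", "start"]
```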

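Run the container with `docker run --init` (or `init: true` in Compose) and Docker starts its bundled init, tini, as PID 1. Installing tini in the image and making it the `ENTRYPOINT` does the same thing. tini is a tiny init process that actually handles shutdown signals and forwards them to Bun. Note that `--init` is a Docker flag, not a Bun one. Without an init, Docker gives up after 10 seconds and kills your container. Classic PID 1 fuckery that bites every containerized app that doesn't handle signals itself.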
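The other fix is to handle SIGTERM in your own code. Linux does deliver SIGTERM to PID 1 once a handler is installed, so Bun can then shut itself down cleanly even without an init. Below is a minimal sketch. It assumes your server process is the one Docker signals (for example, started directly with a hypothetical `CMD ["bun", "src/index.ts"]` rather than through a package.json script). It also assumes the server uses `node:http`, which Bun implements; with `Bun.serve()` you'd call `server.stop()` instead of `server.close()`. The `PORT` default of 3000 and the 8-second timeout are assumptions too:

```typescript
import * as http from "node:http";

// Hypothetical default port; match whatever your app actually listens on.
const PORT = Number(process.env.PORT ?? 3000);

// Build a signal handler that stops accepting connections and then exits.
// `exit` is injectable so the logic can be tested without killing the process.
export function makeShutdown(
  server: http.Server,
  exit: (code: number) => void = (code) => process.exit(code),
  timeoutMs = 8000, // assumption: stay under Docker's default 10s stop timeout
): (signal: NodeJS.Signals) => void {
  let shuttingDown = false;
  return (signal) => {
    if (shuttingDown) return; // ignore repeated signals
    shuttingDown = true;
    console.log(`received ${signal}, closing server`);
    // Safety net: force exit if open connections keep close() from finishing.
    const timer = setTimeout(() => exit(1), timeoutMs);
    // close() stops new connections; the callback runs once existing ones end.
    server.close((err) => {
      clearTimeout(timer);
      exit(err ? 1 : 0);
    });
  };
}

// Entry point: start the server and register the handlers.
export function main(): http.Server {
  const server = http.createServer((_req, res) => res.end("ok"));
  server.listen(PORT);
  const shutdown = makeShutdown(server);
  process.on("SIGTERM", shutdown);
  process.on("SIGINT", shutdown);
  return server;
}
```

Call `main()` from your entrypoint. Because the handler exits with 0 after a clean close, `docker stop` then reports a normal exit instead of 137.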

## CPU Instruction Crashes on Older Hardware

Bun crashes with "Illegal instruction" on older hardware because its default x64 build needs AVX2 CPU instructions. This hits older or budget VPS hosts, VMs whose virtual CPU model hides AVX2, and some CI runners. It also fucks over Docker Desktop on Macs. Older pre-Haswell Intel Macs don't have AVX2, and on Apple Silicon, amd64 images run under emulation that may not expose it.

```
oh no: Bun has crashed. This indicates a bug in Bun, not your code.

Illegal instruction at address 0x2B11410
```

This cryptic bullshit error doesn't mean Bun has a bug. It means your CPU is too old for Bun's optimized build. Took forever to figure out this was AVX2. Stack Overflow was useless; found the answer buried in some GitHub issue. And don't assume your CI runner's CPU matches your laptop's.

Use Bun's baseline build for older hardware. It targets older x86-64 CPUs and doesn't require AVX2:

```dockerfile
FROM oven/bun:1-baseline
```

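This solves compatibility with older VPS providers, legacy cloud instances, and shared hosting whose CPUs don't support AVX2. Check that this tag exists on Docker Hub for the Bun version you pin. If it doesn't, the `bun-linux-x64-baseline` build is published alongside each Bun release on GitHub.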
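Before blaming Bun, check whether the machine actually has AVX2. Below is a minimal sketch that reads `/proc/cpuinfo` and looks for the `avx2` flag. It is Linux only, since that file doesn't exist on macOS or Windows. Run it with Node or the baseline Bun, because the regular Bun build is exactly what crashes on these machines:

```typescript
import { readFileSync } from "node:fs";

// True if any "flags" line in /proc/cpuinfo-style text lists avx2.
export function hasAvx2(cpuinfo: string): boolean {
  return cpuinfo
    .split("\n")
    .filter((line) => line.startsWith("flags"))
    .some((line) => {
      const value = line.slice(line.indexOf(":") + 1);
      return value.trim().split(/\s+/).includes("avx2");
    });
}

// Linux only. Returns null when cpuinfo can't be read (not Linux, or restricted).
export function hostHasAvx2(path = "/proc/cpuinfo"): boolean | null {
  try {
    return hasAvx2(readFileSync(path, "utf8"));
  } catch {
    return null;
  }
}
```

From a plain shell step in CI, `grep -m1 -o avx2 /proc/cpuinfo` gives the same answer: no output means no AVX2.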

## Lockfile Platform Issues Break CI

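Lockfile problems happen when you develop on macOS but deploy to Linux containers. Bun's binary lockfile (`bun.lockb`) can differ between platforms, so the lockfile your Mac wrote doesn't match what `bun install` produces in the container, and the frozen install refuses to continue. A Bun version mismatch between your machine and the image triggers the same error.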

```
error: lockfile had changes, but lockfile is frozen
```

This happens every damn time you go from Mac dev to a Linux container. Works fine locally, breaks instantly on every single CI run. Took forever to figure out it was binary lockfile platform differences.

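Skip the frozen flag, or generate the lockfile in the same environment CI uses:

```dockerfile
# Option 1: just install normally (no frozen check)
RUN bun install

# Option 2: generate the lockfile inside a Linux container with the same Bun
# version as CI and commit it, then keep the frozen check in the build:
#   docker run --rm -v "$PWD":/app -w /app oven/bun:1 bun install
RUN bun install --frozen-lockfile
```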

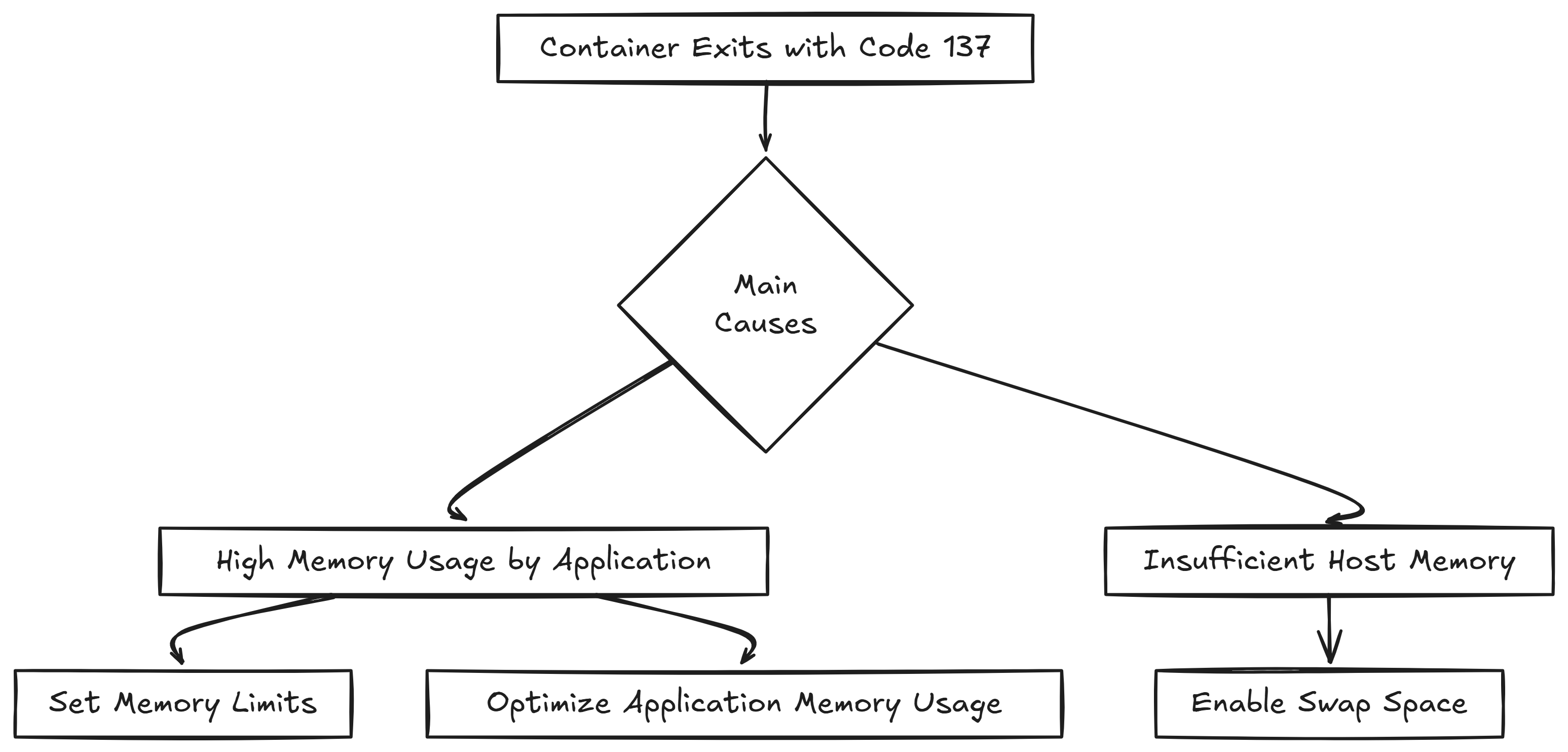

## Memory Issues and OOMKilled Containers

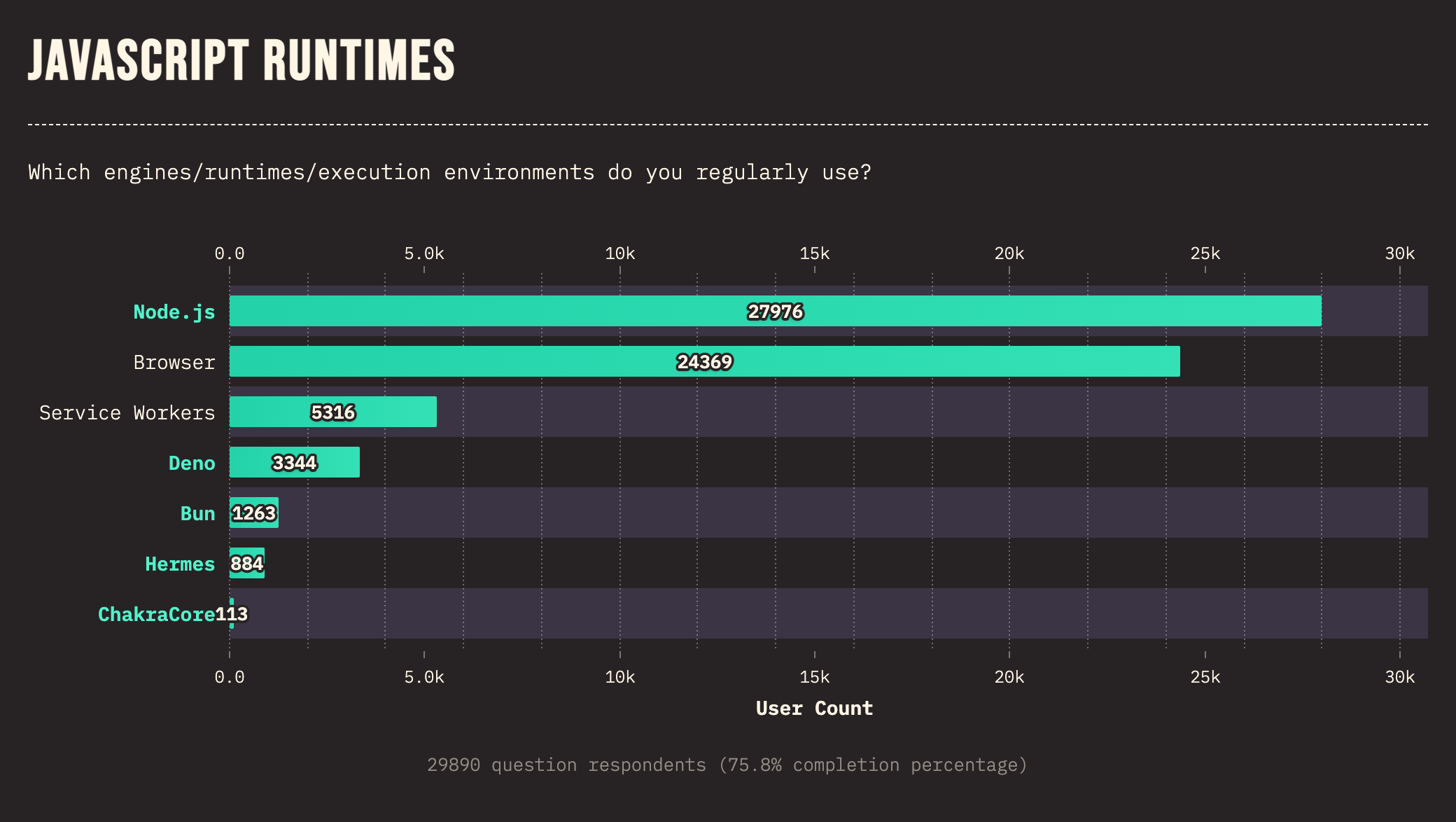

In our experience Bun is hungrier for memory than Node, likely because it runs on JavaScriptCore instead of V8. Same code that sips 300MB in Node suddenly wants 450MB+ in Bun.

Exit code 137 means the process got SIGKILL (128 + 9). That's what the kernel's OOM killer sends when a container blows past its memory limit.

Had our production API running fine locally, using maybe 300MB. Deployed to production and everything started OOMing at 700MB. Spent hours thinking it was a memory leak before I realized Bun just uses way more RAM than Node for the same exact code.
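Exit code 137 on its own doesn't tell you whether the OOM killer or Docker's stop timeout sent the SIGKILL. The 128 + N convention is easy to decode, though. This sketch looks the number up in Node's `os.constants.signals` table:

```typescript
import { constants } from "node:os";

// Docker (like POSIX shells) reports a process killed by signal N as 128 + N.
export function signalFromExitCode(code: number): string | null {
  if (code <= 128) return null; // ordinary exit status, not a signal death
  const signum = code - 128;
  // Find the signal name for that number, e.g. 9 -> "SIGKILL".
  const match = Object.entries(constants.signals).find(([, num]) => num === signum);
  return match ? match[0] : null;
}

for (const code of [137, 143]) {
  console.log(`${code} -> ${signalFromExitCode(code)}`); // "137 -> SIGKILL", "143 -> SIGTERM"
}
```

To tell the two 137s apart, run `docker inspect --format '{{.State.OOMKilled}}' <container>`. It prints `true` when the OOM killer did it.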

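Set an explicit memory limit sized for what Bun actually uses, not your old Node numbers (`docker run --memory <limit>` or your orchestrator's equivalent). Also give Bun's transpiler cache a known location:

```dockerfile
# Put Bun's runtime transpiler cache somewhere known and writable
ENV BUN_RUNTIME_TRANSPILER_CACHE_PATH=/tmp/bun-cache
RUN mkdir -p /tmp/bun-cache
```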
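To catch this before the OOM killer does, log how close the process is to its limit. Below is a minimal sketch that assumes cgroup v2. There, the container's limit lives in `/sys/fs/cgroup/memory.max`, and `max` means no limit. On cgroup v1 hosts that file doesn't exist, so the helper just returns `null`:

```typescript
import { readFileSync } from "node:fs";

// Parse cgroup v2 memory.max: a byte count, or "max" when no limit is set.
export function parseMemoryMax(text: string): number | null {
  const value = text.trim();
  return /^\d+$/.test(value) ? Number(value) : null;
}

// Assumption: cgroup v2. Returns null if the file is missing or unreadable.
export function readMemoryLimit(path = "/sys/fs/cgroup/memory.max"): number | null {
  try {
    return parseMemoryMax(readFileSync(path, "utf8"));
  } catch {
    return null;
  }
}

// Fraction of the limit used by resident memory (RSS); null when there's no limit.
export function usageRatio(rssBytes: number, limitBytes: number | null): number | null {
  return limitBytes !== null && limitBytes > 0 ? rssBytes / limitBytes : null;
}

const toMB = (bytes: number): string => `${Math.round(bytes / 1024 / 1024)}MB`;

// One log line with RSS, JS heap usage, and how close RSS is to the limit.
export function logMemory(): void {
  const { rss, heapUsed } = process.memoryUsage();
  const limit = readMemoryLimit();
  const ratio = usageRatio(rss, limit);
  const pct =
    limit === null || ratio === null
      ? "no limit found"
      : `${Math.round(ratio * 100)}% of ${toMB(limit)}`;
  console.log(`memory: rss=${toMB(rss)} heapUsed=${toMB(heapUsed)} (${pct})`);
}
```

Something like `setInterval(logMemory, 30_000)` at startup gives you a trend line. Compare RSS, not `heapUsed`, against the limit: the limit counts the whole process, not just the JS heap.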

## Build Context Size Problems

Docker builds are slow because standard .dockerignore files don't exclude Bun's cache directories. The `.bun` folder grows to gigabytes and gets shipped as build context on every build. Add these to your `.dockerignore`:

```
.bun/
bun.lockb.old
node_modules/
dist/
build/
*.log
```
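To see what's actually inflating the build context before you write ignore rules, sum up the size of each top-level entry in the project. This is a rough sketch: it ignores `.dockerignore` entirely and doesn't follow symlinks. It just shows where the bytes are:

```typescript
import { lstatSync, readdirSync } from "node:fs";
import { join } from "node:path";

// Recursively sum file sizes under `path`; symlinks are counted, not followed.
export function sizeOf(path: string): number {
  const st = lstatSync(path);
  if (!st.isDirectory()) return st.size;
  let total = 0;
  for (const name of readdirSync(path)) total += sizeOf(join(path, name));
  return total;
}

// Top-level entries of `root` with their total size in bytes, largest first.
export function biggestEntries(root: string): Array<[string, number]> {
  return readdirSync(root)
    .map((name): [string, number] => [name, sizeOf(join(root, name))])
    .sort((a, b) => b[1] - a[1]);
}
```

Run `biggestEntries(".")` from the project root and print the first few entries. Anything big near the top that the image doesn't need belongs in `.dockerignore`.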

## Health Check Failures and Restart Loops

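Health checks fail because the server isn't ready when Docker starts probing. Bun.serve() needs time for the transpiler to warm up and your app to initialize, but by default Docker counts failed checks right away. `--start-period` gives it a grace window: failures during that window don't count toward the retry limit.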

```dockerfile
# Give Bun time to start (curl must be installed in the image)
HEALTHCHECK --start-period=40s \
  CMD curl -f http://localhost:3000/health || exit 1
```
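A start period only helps if `/health` tells the truth. One approach is to report 503 until initialization finishes. `curl -f` then fails, and those failures don't count during the start period, until the app can actually serve. Below is a minimal sketch built on web-standard `Request`/`Response`, so the handler can be called from `Bun.serve()`'s `fetch`. `markReady()` is a hypothetical hook you'd call once your own startup work (DB connections, cache warmup) is done:

```typescript
// Readiness-aware /health handler built on web-standard Request/Response.
export function createHealth() {
  let ready = false;
  return {
    // Hypothetical hook: call once startup work has finished.
    markReady(): void {
      ready = true;
    },
    // Returns a Response for /health, or null so other routes can handle the request.
    handle(req: Request): Response | null {
      if (new URL(req.url).pathname !== "/health") return null;
      return ready
        ? new Response("ok", { status: 200 })
        : new Response("starting", { status: 503 });
    },
  };
}

// Example wiring under Bun (not executed here):
// const health = createHealth();
// Bun.serve({
//   port: 3000,
//   fetch: (req) => health.handle(req) ?? new Response("not found", { status: 404 }),
// });
// await initDatabase(); // hypothetical startup step
// health.markReady();
```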

In our experience, most of these crashes come down to the missing init process (`docker run --init` or tini as PID 1). Fix that first: 5 minutes if you're lucky, 2 hours if Docker decides to be a pain in the ass. Then worry about memory limits and health check timing. Everything else is just optimization bullshit that doesn't matter until you fix the basics.