I've spent the last 3 years building search systems that don't completely fail users. Here's what I learned after debugging why our "intelligent" search couldn't find shit.

The Problem: Keyword Search is Fucking Broken

Your users search for "Python crashes" and get zero results because your documents say "Python exceptions". They search "memory leak" and miss all the docs about "high memory usage". I spent 2 weeks trying to build synonym lists before realizing I was fighting a losing battle against human language.

The breakthrough came when I finally understood what embedding models actually do: they turn words into vectors where similar meanings end up close together mathematically. Instead of matching exact strings, you calculate which vectors are closest using cosine similarity.

Here's what nobody explains clearly: embedding models take your text and shove it into coordinates in math space. Similar words end up as neighbors. "Car" and "vehicle" live next to each other, "car" and "banana" are in different fucking universes.

Real Production Experience: What Actually Works

OpenAI's text-embedding-3-large: Costs about $0.13 per million tokens. I've embedded 50M+ documents with it. Quality is solid for English, decent for Spanish/French, garbage for anything else. Context window is 8,192 tokens which handles most documents without chunking hell. Their pricing structure changes frequently, so check current rates.

Cohere's Embed v4: More expensive at around $0.15-0.20 per million tokens but actually works with 100+ languages. Their latest model handles up to 128k context which means less chunking nightmares.

Voyage AI voyage-3: Expensive as fuck ($0.12 per million) but performs better on domain-specific content. Their documentation claims 67% on MTEB benchmark but your results will vary based on your data.

Chunking Will Destroy Your Search Quality

Documents get chopped into 1000-1500 token pieces with 150 token overlap - basically copy-paste the end of one chunk to the start of the next so you don't split concepts in half like an idiot.

Fuck up your chunking and even the best embedding model can't save you. I learned this when our legal team started bitching that contract searches returned random middle-of-document sentences instead of actual contract clauses.

I learned this when our legal team called me at 8am asking why searching for "contract termination" returned the word "termination" from random ass sentences about bug termination and server termination. Turns out our 512-token chunks were splitting legal concepts across boundaries like a fucking blender.

What actually works: 1000-1500 token chunks with 100-150 token overlap. I tested this on 50,000 legal documents after the contract disaster. Smaller chunks lose context, bigger chunks return entire pages when you want one clause. The overlap prevents concepts from getting chopped in half. LangChain's defaults will fuck you over in production.

What doesn't work: Splitting on sentences or paragraphs without considering token limits. Your chunks will be random sizes and performance will be inconsistent.

Vector Database Hell: The Expensive Lesson

Pinecone: Started at $70/month for our prototype. Hit 2M vectors and suddenly we're paying $800/month because their "serverless" pricing is three separate bills in a trenchcoat - queries, storage, AND compute. Performance is solid but their pricing will murder your runway faster than a Y Combinator pitch deck.

pgvector: Saved us $700/month by moving 90% of our workload to PostgreSQL with pgvector extension. Performance is decent up to 10M vectors, after that you need better hardware or multiple instances. The Timescale comparison shows real benchmarks.

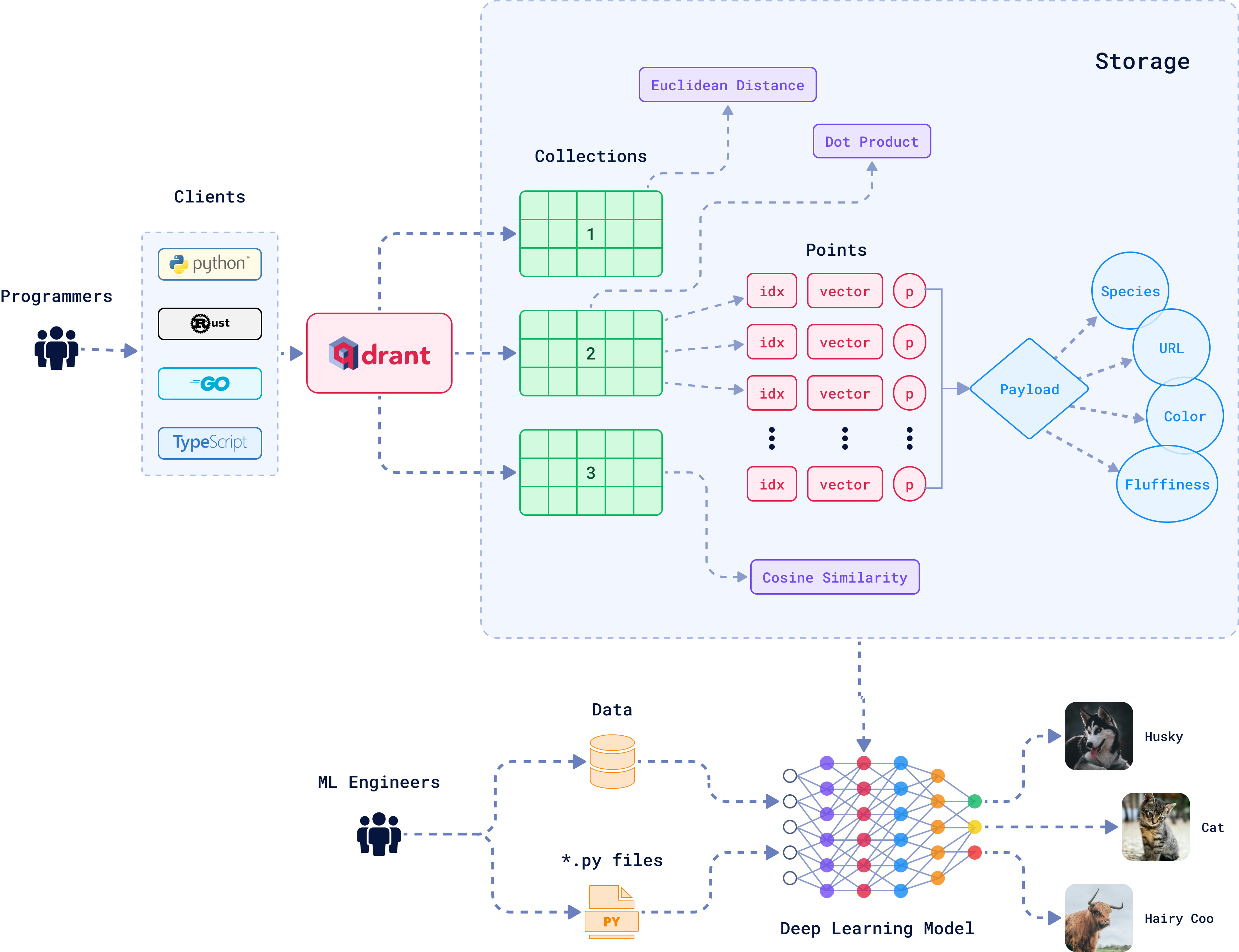

Qdrant: Fast as hell but you manage the infrastructure. Docker setup is straightforward, clustering is not. If you have DevOps bandwidth, it's worth it. Their documentation is actually good, unlike most startups.

The Hybrid Search Reality Check

Pure semantic search misses exact matches users expect. Pure keyword search is too literal. Hybrid search combining both works but adds complexity.

I implemented this using a weighted combination: 70% semantic similarity + 30% BM25 keyword score. Took 3 weeks to tune the weights for our data. Your mileage will vary - test with real user queries. Elasticsearch and Weaviate both support hybrid search natively.

Cost Warnings Nobody Mentions

Embedding costs scale non-linearly. 1M documents might cost $200/month. 10M documents cost $3,000/month because you need better infrastructure, more API calls, and higher-tier vector database plans.

Pre-compute embeddings for static content or you'll go broke on API calls. We batch process 100k documents overnight and cache the results. Real-time embedding is only for user queries.

Chunking:

Chunking: