Your PostgreSQL database works great for SELECT * WHERE user_id = 123. It falls apart completely when you need "find documents similar to this one" or "what products might this user like." Traditional databases think in exact matches. AI thinks in similarities and patterns.

That's where vector databases come in. They store embeddings - basically arrays of floating point numbers that represent the "meaning" of your data. Instead of exact SQL matches, you get similarity search based on mathematical distance between vectors.

The Real Problem Vector Databases Solve

I learned this the hard way building a document search system in 2023. We had 50,000 support articles in PostgreSQL with full-text search. Users would search for "password reset" and get nothing because the articles used terms like "credential recovery" or "login restoration."

The solution was embeddings. We used OpenAI's text-embedding-3-small to convert each article into a 1536-dimensional vector. Suddenly, "password reset" matched "forgot my login" because they're semantically similar - even with completely different keywords.

But here's the catch: storing 50,000 vectors × 1536 dimensions × 4 bytes = 300MB just for embeddings. And searching through them linearly took 2+ seconds per query. Vector databases fixed this nightmare.

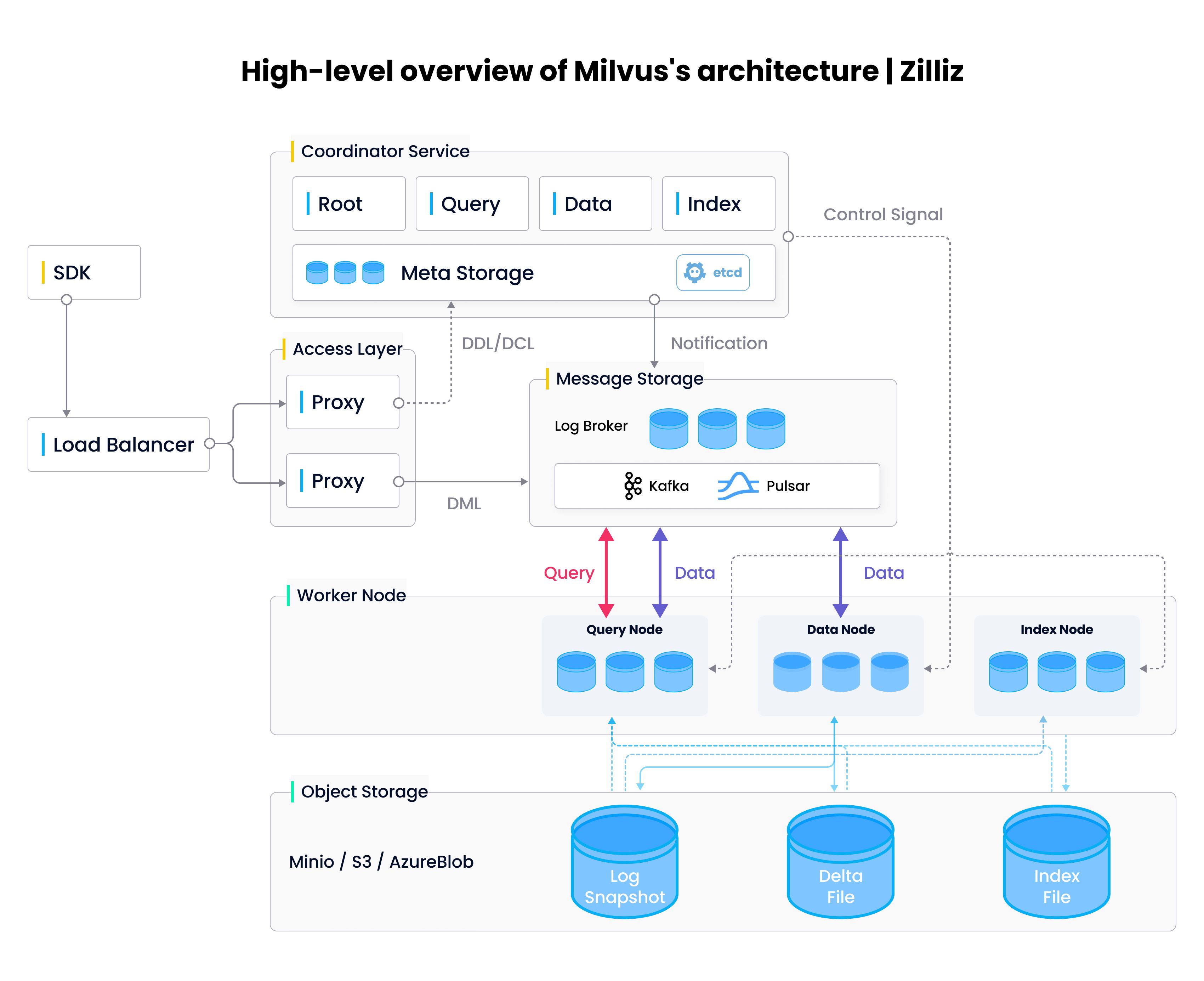

Traditional database indexes like B-trees are built for exact matches and range queries. They're useless when you need to find the "nearest neighbors" in high-dimensional space. You need specialized approximate nearest neighbor (ANN) algorithms like HNSW or IVF to make this shit fast.

What Actually Happens Under the Hood

Vector databases use algorithms like HNSW (Hierarchical Navigable Small World) to pre-build search graphs. Instead of checking every vector, they hop through the graph to find approximate nearest neighbors in milliseconds. Pinecone's deep dive explains this brilliantly.

The trade-off? Perfect accuracy goes out the window. Most systems give you 95-99% recall, meaning you might miss the actual best match. But in practice, the 5th best match is usually good enough - and 100x faster than checking every damn vector.

Here's what killed our first implementation: we tried running similarity search on raw PostgreSQL with pgvector. Worked fine with 1,000 documents. At 10,000+ documents, queries took 5+ seconds and pegged CPU at 100%. Started getting ERROR: canceling statement due to statement timeout errors in production. Had to restart the database twice because queries were locking up other connections. The HNSW index reduced query time to under 50ms, but rebuilding the index after adding new documents took 30 minutes and blocked all writes.

Other common algorithms include IVF (Inverted File Index) and Product Quantization. Each has different speed vs accuracy trade-offs. Facebook's Faiss library implements most of these if you want to experiment locally.

Your Embeddings Probably Suck (Here's Why)

Your search results are only as good as your embeddings. We spent 3 months debugging "irrelevant" search results before realizing our embedding model sucked at our domain-specific technical documentation. Our CFO literally asked "what the hell is an embedding?" when the bill hit $800/month.

OpenAI's general-purpose embeddings work great for consumer content but struggled with API documentation and error codes. We ended up fine-tuning our own embedding model, which improved relevance from 60% to 85% but added 6 weeks to the project.

Better alternatives exist now. BGE models often outperform OpenAI on MTEB benchmarks and cost nothing to run. Sentence Transformers has domain-specific models for legal text, scientific papers, and code search.

Shit embeddings = shit search results. No amount of fancy vector database optimization fixes garbage embeddings. Trust me, I've tried. Test different models with your actual data before choosing your stack. Embedding benchmarks help, but they don't replace testing with real user queries on your domain-specific content.