SSL Handshake Failures (The Time-Waster Champion)

This "Handshake read failed" error ate 4 hours of my life before I realized my laptop's clock was wrong. SSL is picky as hell - if anything's slightly off between your system and Pinecone's servers, it just dies.

What actually causes this:

- Your firewall blocks HTTPS (classic enterprise move)

- Your system clock is wrong (SSL hates time travelers)

- Your API key is copy-pasted with invisible characters (happens more than you think, and the resulting error isn't always an obvious auth failure)

- Your Docker container's CA certificate bundle is stale (think root certificates that expired in 2018)

- Pinecone's servers are down (check status.pinecone.io first)

- Corporate proxy intercepting TLS and re-signing traffic with a certificate your client doesn't trust (my personal favorite nightmare)

- Python SSL dependencies missing (happens on minimal Docker images)

Check the boring stuff first, starting with the system clock. Don't be me.
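A few of the causes above can be ruled out from a Python shell in a couple of minutes. Here's a minimal sketch using only the standard library. The function names are mine, not part of any Pinecone SDK, and the hostname in the usage note is an assumption, so swap in whatever host your client actually connects to.

```python
import socket
import ssl
from datetime import datetime
from email.utils import parsedate_to_datetime
from typing import List


def clock_skew_seconds(date_header: str, local_now: datetime) -> float:
    """Local time minus server time, in seconds, given an HTTP Date header."""
    server_time = parsedate_to_datetime(date_header)
    return (local_now - server_time).total_seconds()


def find_invisible_chars(value: str) -> List[int]:
    """Indexes of characters that are not printable ASCII (whitespace included)."""
    return [i for i, ch in enumerate(value) if not (33 <= ord(ch) <= 126)]


def try_handshake(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Attempt a verified TLS handshake and describe what happened."""
    context = ssl.create_default_context()
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host) as tls:
                return f"ok: {tls.version()}"
    except ssl.SSLCertVerificationError as exc:
        # Typical for expired CA bundles, wrong clocks, or intercepting proxies
        return f"certificate verification failed: {exc.verify_message}"
    except (ssl.SSLError, OSError) as exc:
        # Covers blocked ports, DNS failures, timeouts, and other TLS errors
        return f"connection failed: {exc!r}"
```

Calling try_handshake("api.pinecone.io") (assumed hostname) tells you whether the failure is about certificates or about reaching the server at all. If find_invisible_chars returns anything for your API key, re-copy the key. For the clock, pass the Date header from any HTTPS response plus datetime.now(timezone.utc) to clock_skew_seconds; more than a few minutes of skew is worth fixing before you look at anything else.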

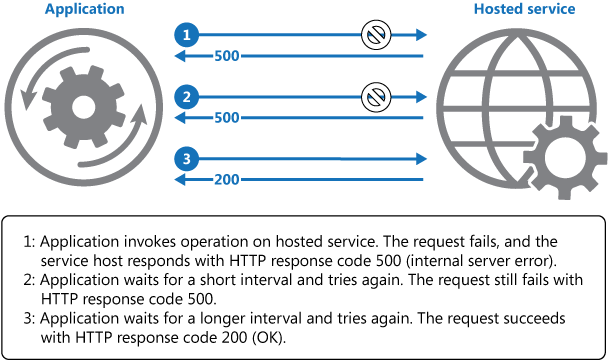

When Pinecone Just Says "Nope" (500 Errors)

Sometimes Pinecone's servers are having a bad day and return 500 errors. Before you rewrite your entire app like I almost did, check this stuff first:

- Pinecone is actually down - Check status.pinecone.io before you waste 3 hours debugging your code

- Your vectors are malformed - Wrong dimensions, fucked up metadata, or you're sending 50MB of data in one request

- You're on the free tier during peak hours - Shared resources get overwhelmed and your requests die

- Connection pool exhaustion - Too many concurrent connections hammering the API

- Regional connectivity issues - Wrong region configuration or network routing problems

Pro tip: If Pinecone returns 500 errors consistently, even for small requests you know are valid, it's them, not you. Don't rewrite your entire codebase.
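To rule out the "your vectors are malformed" case before blaming the server, a quick pre-flight check on the payload helps. This is a rough sketch, not Pinecone's own validation: validate_vectors is a hypothetical helper, it assumes vectors are dicts with id, values, and optional metadata, and the 2,000,000 byte default is a placeholder budget, so check the current limits for your plan.

```python
import json
import math


def validate_vectors(vectors, expected_dim, max_request_bytes=2_000_000):
    """Return a list of problems; an empty list means nothing obvious is wrong."""
    problems = []
    for i, vec in enumerate(vectors):
        values = vec.get("values", [])
        if len(values) != expected_dim:
            problems.append(f"vector {i}: dimension {len(values)}, expected {expected_dim}")
        if not all(isinstance(v, (int, float)) and math.isfinite(v) for v in values):
            problems.append(f"vector {i}: values contain NaN, inf, or non-numbers")
        try:
            json.dumps(vec.get("metadata", {}))
        except (TypeError, ValueError):
            problems.append(f"vector {i}: metadata is not JSON-serializable")
    # default=str keeps the size estimate working even when metadata is odd
    payload_bytes = len(json.dumps(vectors, default=str).encode("utf-8"))
    if payload_bytes > max_request_bytes:
        problems.append(
            f"payload is about {payload_bytes} bytes, over the {max_request_bytes} byte budget"
        )
    return problems
```

Run it on a batch right before the upsert and log whatever comes back. If the list is empty and you still get 500s, that points back at the service rather than your data.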

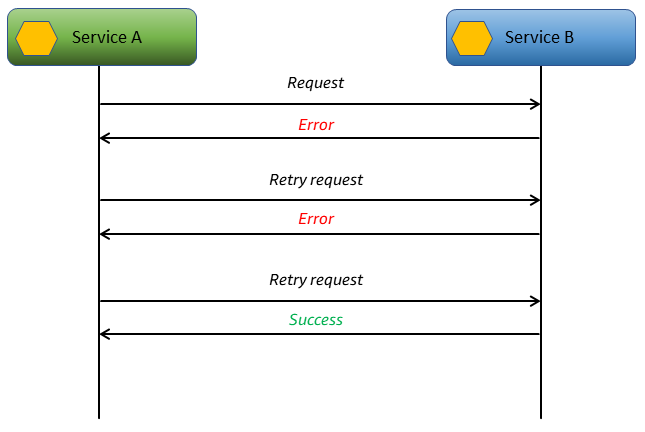

The Rate Limiting Blues

Hit rate limits? Welcome to the free tier experience. Pinecone will start blocking you after too many requests:

- 429 errors - You're making requests too fast

- Timeouts - Your batch sizes are stupidly large

- Random failures - Free tier resources are overloaded

Solution: Add time.sleep(1) between requests like it's 2005, wait longer each time you get a 429, and shrink your batches if you're seeing timeouts. Or upgrade to a paid plan.
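A slightly less 2005 version of time.sleep(1) is exponential backoff with jitter: wait longer after each failure and randomize the wait so parallel workers don't retry in lockstep. The sketch below is generic Python, not a Pinecone feature. call_with_backoff and looks_rate_limited are hypothetical names, and the assumption that the raised exception exposes a status attribute may not match your client version.

```python
import random
import time


def looks_rate_limited(exc):
    """Assumption: the client's exception carries an HTTP status attribute."""
    return getattr(exc, "status", None) == 429


def call_with_backoff(func, is_retryable=looks_rate_limited, max_attempts=5,
                      base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call func(), retrying retryable failures with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return func()
        except Exception as exc:
            # Give up on the last attempt or on errors that retrying won't fix
            if attempt == max_attempts - 1 or not is_retryable(exc):
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Sleep between 50% and 100% of the computed delay
            sleep(delay * random.uniform(0.5, 1.0))
```

Wrap each request in a lambda or functools.partial and pass it as func. The sleep parameter exists only so the helper can be tested without actually waiting.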

Why It Works Locally But Fails in Production

Every developer's favorite nightmare. Your code runs fine on localhost but explodes in production because:

- Your production environment has no internet (Docker networking is a special kind of hell)

- Corporate firewalls hate everything (especially port 443 to random cloud services)

- Environment variables are fucked (a leftover placeholder like PINECONE_API_KEY="your-api-key-here" doesn't work, and neither does a variable that never got set in prod)

- Your prod system thinks it's 1970 (SSL certificates care about time)

- Hosting platform restrictions - Some platforms block external API calls

- DNS resolution failures in containerized environments

- Certificate authority bundles missing on minimal base images

I've had production deployments fail because the server was behind a proxy that intercepted the TLS connection and broke the handshake. Good times.
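Most of the production-only failures above can be caught by a small startup check that runs before your app touches Pinecone. This is a sketch under assumptions: every function name here is mine, the placeholder detection is a crude string match, the clock check only catches wildly wrong dates, and the CA bundle check relies on what Python's ssl module reports, which varies by platform.

```python
import os
import socket
import ssl
from datetime import datetime, timezone


def check_api_key(env=os.environ, name="PINECONE_API_KEY"):
    """Return a problem description, or None if the key looks plausible."""
    value = env.get(name)
    if not value:
        return f"{name} is not set"
    if value != value.strip():
        return f"{name} has leading or trailing whitespace"
    if value != value.strip("\"'"):
        return f"{name} is wrapped in literal quote characters"
    if "your-api-key" in value.lower():
        return f"{name} still holds a placeholder value"
    return None


def check_clock(min_year=2024):
    """Catch clocks that were never set (1970) rather than small drift."""
    year = datetime.now(timezone.utc).year
    return None if year >= min_year else f"system clock says it is {year}"


def check_ca_bundle():
    """Minimal images sometimes ship without any CA certificates."""
    paths = ssl.get_default_verify_paths()
    if paths.cafile is None and paths.capath is None:
        return "no default CA bundle found"
    return None


def check_dns(host):
    """Confirm the container can resolve the host at all."""
    try:
        socket.getaddrinfo(host, 443)
    except socket.gaierror as exc:
        return f"cannot resolve {host}: {exc}"
    return None


def run_preflight(host):
    """Collect every problem found; an empty list means the basics look sane."""
    checks = [check_api_key(), check_clock(), check_ca_bundle(), check_dns(host)]
    return [problem for problem in checks if problem is not None]
```

Call run_preflight with the hostname your client uses and fail the deployment loudly if the list isn't empty. It won't catch everything (an intercepting proxy still needs a real handshake test), but it turns "works locally, fails in prod" into a readable error message.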