LangChain is an open-source framework (with Python and JavaScript versions) created by Harrison Chase in October 2022 that makes it easier to work with large language models. Instead of learning OpenAI's API, then Anthropic's API, then Google's API, LangChain gives you one interface that works with all of them.

The main problem it solves is this: you can call OpenAI and get a response, but what about when you need the model to search your database, remember previous conversations, or format its output for your frontend? That's where LangChain comes in.

Companies like LinkedIn and Uber use it in production, but be warned: the 0.1 to 0.2 migration was brutal for most teams. Version 0.3 (released September 2024) dropped Python 3.8 support and switched to Pydantic 2, which completely fucked things up if you weren't ready.

And then, in August 2025, they changed peer dependencies again. Because apparently breaking changes every few months is a feature, not a bug.

Recently, they raised $100 million at a $1.1 billion valuation, which explains why they're pushing LangSmith, their commercial tracing and evaluation platform, so hard. Got to justify that valuation somehow. They also launched Open SWE in August 2025, an asynchronous coding agent that's supposedly better than existing AI coding tools.

What LangChain Actually Does

Instead of writing boilerplate code to:

- Switch between different AI providers

- Parse LLM responses into structured data

- Remember conversation history through memory components

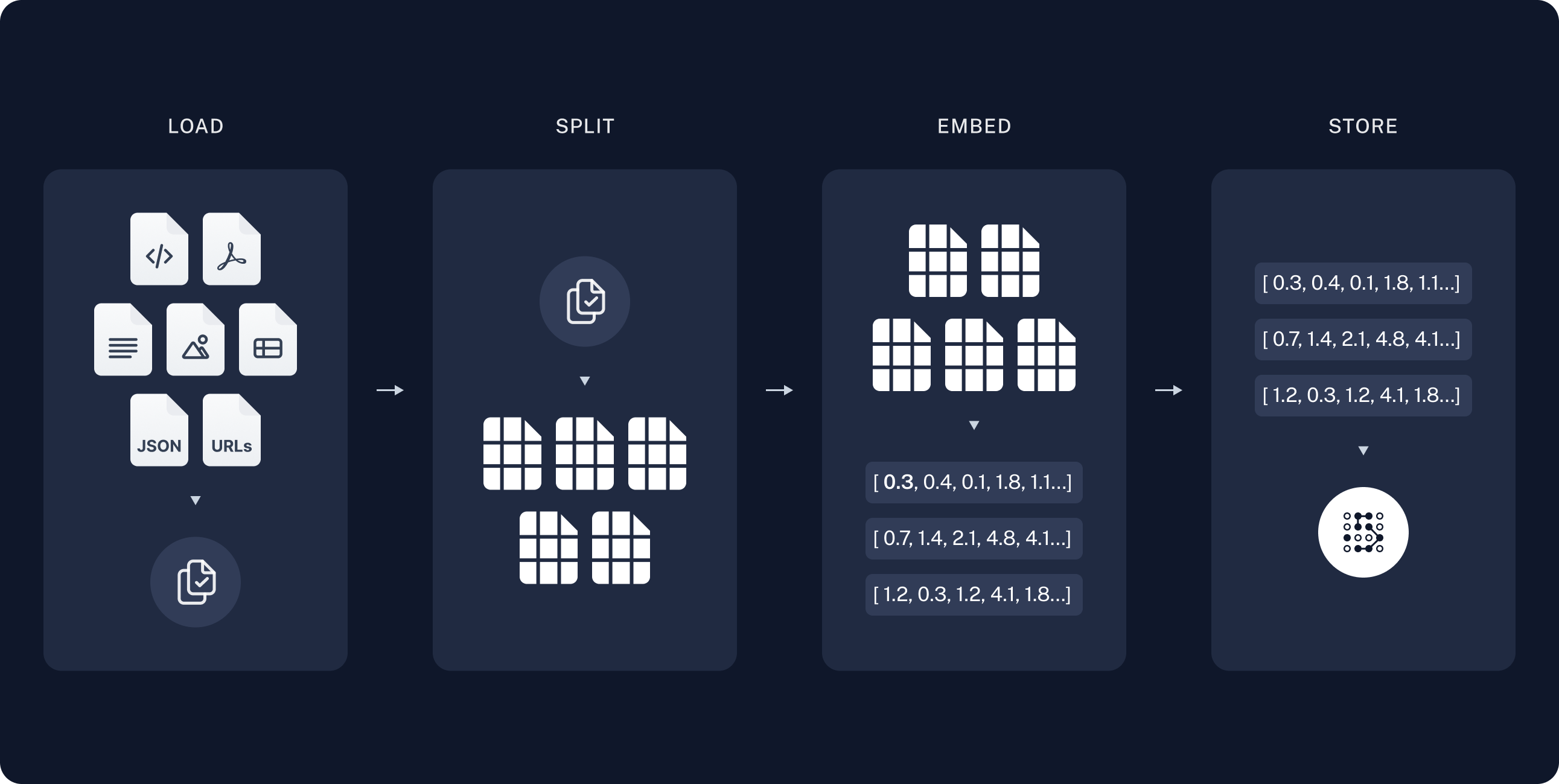

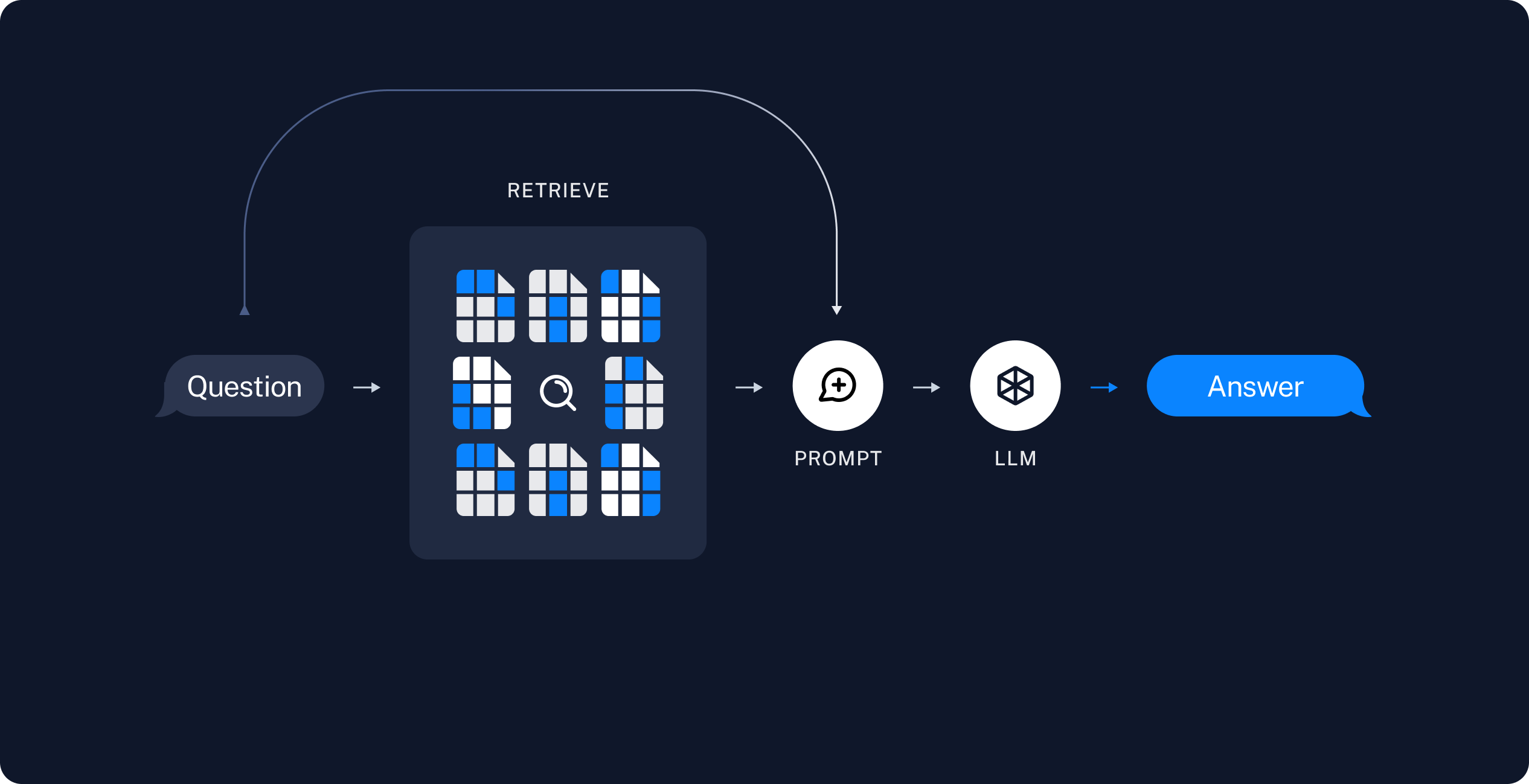

- Search through your documents with vector stores

- Chain multiple AI calls together using LCEL (LangChain Expression Language)

LangChain gives you pre-built components for all of this. The tradeoff is complexity: LangChain can be overwhelming if you're just trying to build a simple chatbot. Trust me, I've seen teams spend 3 weeks trying to get a basic Q&A bot working when a simple OpenAI API call would've done the job.
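To make that list concrete, here's roughly what two of those chores look like when you write them by hand: keeping a sliding window of chat history, and turning a model's JSON reply into a typed object. This is a plain-Python sketch with no LangChain involved. ConversationBuffer, Ticket, and parse_ticket are hypothetical names, and the "model reply" is a hard-coded string standing in for a real API response.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ConversationBuffer:
    """Sliding-window chat memory: keeps only the most recent messages."""
    max_messages: int = 6
    messages: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})
        # Drop the oldest messages once the window is full so prompts stay bounded.
        if len(self.messages) > self.max_messages:
            self.messages = self.messages[-self.max_messages:]


@dataclass
class Ticket:
    """Hypothetical structured record we want the model's reply turned into."""
    title: str
    priority: int


def parse_ticket(raw: str) -> Ticket:
    """Parse a JSON reply into a Ticket, failing loudly if the shape is wrong."""
    data = json.loads(raw)  # invalid JSON raises json.JSONDecodeError (a ValueError)
    if (
        not isinstance(data, dict)
        or not isinstance(data.get("title"), str)
        or not isinstance(data.get("priority"), int)
    ):
        raise ValueError(f"unexpected reply shape: {data!r}")
    return Ticket(title=data["title"], priority=data["priority"])


# Usage: a 2-message window, and a hard-coded string standing in for a model reply.
memory = ConversationBuffer(max_messages=2)
memory.add("user", "hi")
memory.add("assistant", "hello")
memory.add("user", "the login page is broken")
ticket = parse_ticket('{"title": "Login page broken", "priority": 2}')
print(memory.messages)  # only the last two messages survive
print(ticket)  # Ticket(title='Login page broken', priority=2)
```

It isn't much code, which is kind of the point: for one chatbot you may not need a framework. The pain starts when you need this plus retrieval, tools, and provider switching all at once.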

The Three Core Parts

Providers: One interface for OpenAI, Anthropic, Google, Azure OpenAI, Cohere, Hugging Face, and dozens of other LLM providers. If OpenAI raises its prices, you can switch to Claude without rewriting your app. In theory. In practice, each provider has subtle differences (tool-calling formats, how system prompts are passed, context-window limits) that'll bite you in production.
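The idea underneath is an adapter pattern: your app talks to one small interface, and each provider gets a thin wrapper behind it. Here's a toy version in plain Python. FakeOpenAI, FakeAnthropic, get_model, and summarize are hypothetical stand-ins that just echo strings; they don't call any real API. In LangChain itself, the analogous shared method is invoke() on chat model classes such as ChatOpenAI and ChatAnthropic, but check the docs for whatever version you're on.

```python
from typing import Dict, Protocol


class ChatModel(Protocol):
    """The one method the rest of the app is allowed to depend on."""

    def invoke(self, prompt: str) -> str: ...


class FakeOpenAI:
    # Hypothetical stand-in: a real adapter would call the OpenAI SDK here.
    def invoke(self, prompt: str) -> str:
        return f"[openai] {prompt}"


class FakeAnthropic:
    # Hypothetical stand-in: a real adapter would call the Anthropic SDK here and
    # translate provider quirks (e.g. system prompts passed as a separate field).
    def invoke(self, prompt: str) -> str:
        return f"[anthropic] {prompt}"


PROVIDERS: Dict[str, type] = {"openai": FakeOpenAI, "anthropic": FakeAnthropic}


def get_model(name: str) -> ChatModel:
    """Choose a provider by name (e.g. from config); app code never imports a vendor SDK."""
    return PROVIDERS[name]()


def summarize(model: ChatModel, text: str) -> str:
    # Business logic only sees the ChatModel interface.
    return model.invoke(f"Summarize: {text}")


print(summarize(get_model("openai"), "quarterly report"))     # [openai] Summarize: quarterly report
print(summarize(get_model("anthropic"), "quarterly report"))  # [anthropic] Summarize: quarterly report
```

Notice that the adapters are where those subtle differences end up living. The interface stays tidy only as long as you're willing to paper over each vendor's quirks inside its wrapper.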

Chains: Building blocks you can connect together using LangChain Expression Language. Want to search documents, then summarize them, then ask follow-up questions? LangChain has components for each step. The problem is debugging these chains when they break, and they will break in weird, hard-to-diagnose ways.
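LCEL chains are written as prompt | model | parser, where each piece is a runnable with an invoke() method. The sketch below is NOT LangChain's code. It's a toy Step class that shows how that pipe composition works, plus one debugging aid: when a step blows up, the error names the step that failed instead of leaving you to dig through a deep traceback. The fake_llm step is a hypothetical stand-in for a model call.

```python
from typing import Any, Callable


class StepError(RuntimeError):
    """Raised when a step in the pipeline fails; the message names the step."""


class Step:
    """Toy runnable: wraps a function and supports `a | b` composition."""

    def __init__(self, fn: Callable[[Any], Any], name: str = "step"):
        self.fn = fn
        self.name = name

    def __or__(self, other: "Step") -> "Step":
        # Left-to-right composition: self's output becomes other's input.
        def composed(x: Any) -> Any:
            return other.invoke(self.invoke(x))

        return Step(composed, f"{self.name} | {other.name}")

    def invoke(self, x: Any) -> Any:
        try:
            return self.fn(x)
        except StepError:
            raise  # already labeled by the innermost step that failed
        except Exception as exc:
            raise StepError(f"step {self.name!r} failed on input {x!r}") from exc


prompt = Step(lambda q: f"Answer briefly: {q}", "prompt")
fake_llm = Step(lambda p: {"text": p.upper()}, "fake_llm")  # hypothetical model stand-in
parser = Step(lambda out: out["text"], "parser")

chain = prompt | fake_llm | parser
print(chain.invoke("what is lcel?"))  # ANSWER BRIEFLY: WHAT IS LCEL?

broken = prompt | Step(lambda p: p["text"], "bad_parser")  # expects a dict, gets a str
try:
    broken.invoke("hi")
except StepError as err:
    print(err)  # names 'bad_parser' as the failing step
```

Real LCEL runnables do a lot more (streaming, batching, async), but the composition model is the same shape, which is also why a failure deep in a long chain can be hard to pin down without tracing.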

Tools: Let your LLM call functions, search the web, query databases, perform calculations, or run Python code. The LLM decides when to use each tool based on the conversation. Which sounds awesome until your agent gets stuck in an infinite loop calling the same tool over and over, racking up thousands of dollars in API bills.
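The usual defense against a runaway agent is a hard cap on steps plus a check for repeated calls. Here's a minimal plain-Python sketch of a tool loop with both guards. The decide callback stands in for the LLM choosing a tool, and the TOOLS registry, run_agent, and the "search" tool are all hypothetical. LangChain's own agent runtimes have comparable knobs (for example max_iterations on the legacy AgentExecutor), so look for those before rolling your own.

```python
from typing import Callable, Dict, List, Optional, Tuple

Action = Tuple[str, str]  # (tool_name, tool_input)

# Hypothetical tool registry; real tools would hit search APIs, databases, etc.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda q: f"results for {q}",
}


def run_agent(
    decide: Callable[[List[str]], Optional[Action]], max_steps: int = 5
) -> List[str]:
    """Run tools until `decide` returns None, with two guards against runaway loops.

    `decide` stands in for the LLM: given the tool outputs so far, it returns the
    next (tool_name, tool_input) to call, or None when it has a final answer.
    """
    transcript: List[str] = []
    seen = set()
    for _ in range(max_steps):
        action = decide(transcript)
        if action is None:
            return transcript
        if action in seen:
            # Same tool with the same input again: almost certainly a loop.
            raise RuntimeError(f"repeated tool call {action!r}, aborting")
        seen.add(action)
        name, arg = action
        transcript.append(TOOLS[name](arg))
    # Hard cap: never spend more than max_steps tool calls on one request.
    raise RuntimeError(f"no final answer after {max_steps} steps")


def well_behaved(transcript: List[str]) -> Optional[Action]:
    # Search once, then stop.
    return None if transcript else ("search", "langchain pricing")


def stuck(transcript: List[str]) -> Optional[Action]:
    # Keeps asking for the exact same tool call forever.
    return ("search", "langchain pricing")


print(run_agent(well_behaved))  # ['results for langchain pricing']
try:
    run_agent(stuck)
except RuntimeError as err:
    print(err)  # repeated tool call ('search', 'langchain pricing'), aborting
```

The repeat check catches the obvious loop cheaply; the step cap catches the sneakier case where the model keeps varying its input slightly and never converges.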

LangChain works great for complex use cases but can be overkill for simple ones. If you just need basic chat functionality, the OpenAI SDK on its own might be simpler. And with OpenAI's improvements to function calling and the addition of structured outputs, a lot of developers are questioning whether they need the extra layer at all.