Been using DuckDB with big datasets for a while now. Most optimization guides focus on stuff that doesn't actually help. There are 3 settings that actually fix slow queries.

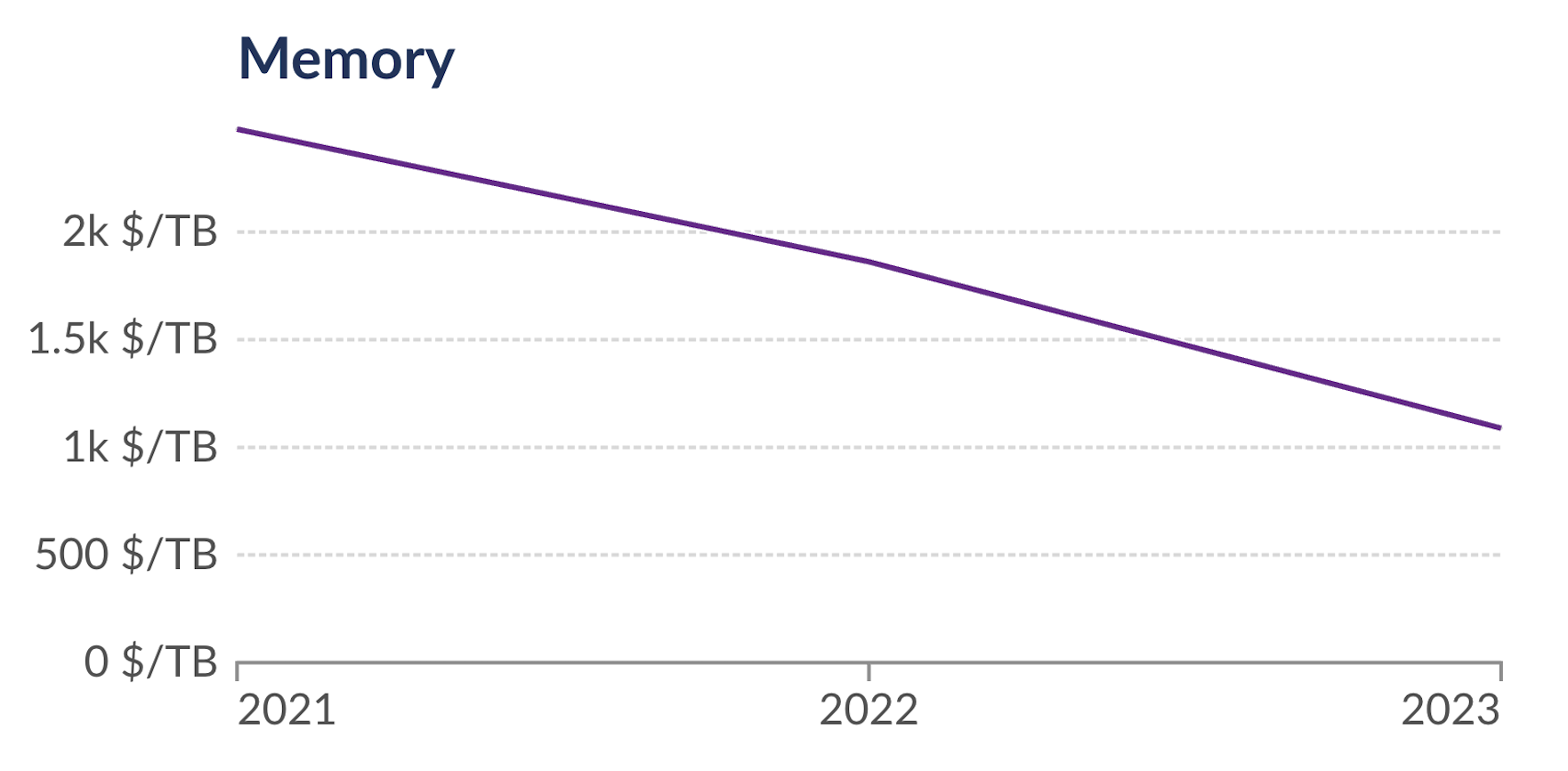

Memory Limit: Just Give It More RAM

DuckDB's being conservative with your RAM. It only grabs 80% by default, which is stupid when you have 64GB sitting there doing nothing:

SET memory_limit = '90%';

This lets DuckDB use more memory before spilling to disk. Makes queries way faster in most cases.

Watch out: Don't set it higher than 95%. I crashed a server once setting it to 98% - everything locked up when the OS needed memory for something else. Spent an hour figuring out what happened.

Thread Count: Your CPU Probably Sucks at Hyperthreading

DuckDB detects your CPU cores automatically but hyperthreading usually hurts performance. Your CPU probably has hyperthreading and DuckDB will use all logical cores by default:

SET threads = 32; -- Physical cores only

But if you're reading from S3 or remote files, use more threads since you're waiting on network anyway.

Temp Directory: Put It on Your Fastest Drive

When DuckDB runs out of memory, it spills to disk. Put the temp files on your fastest drive:

SET temp_directory = '/fast-storage/duckdb-temp';

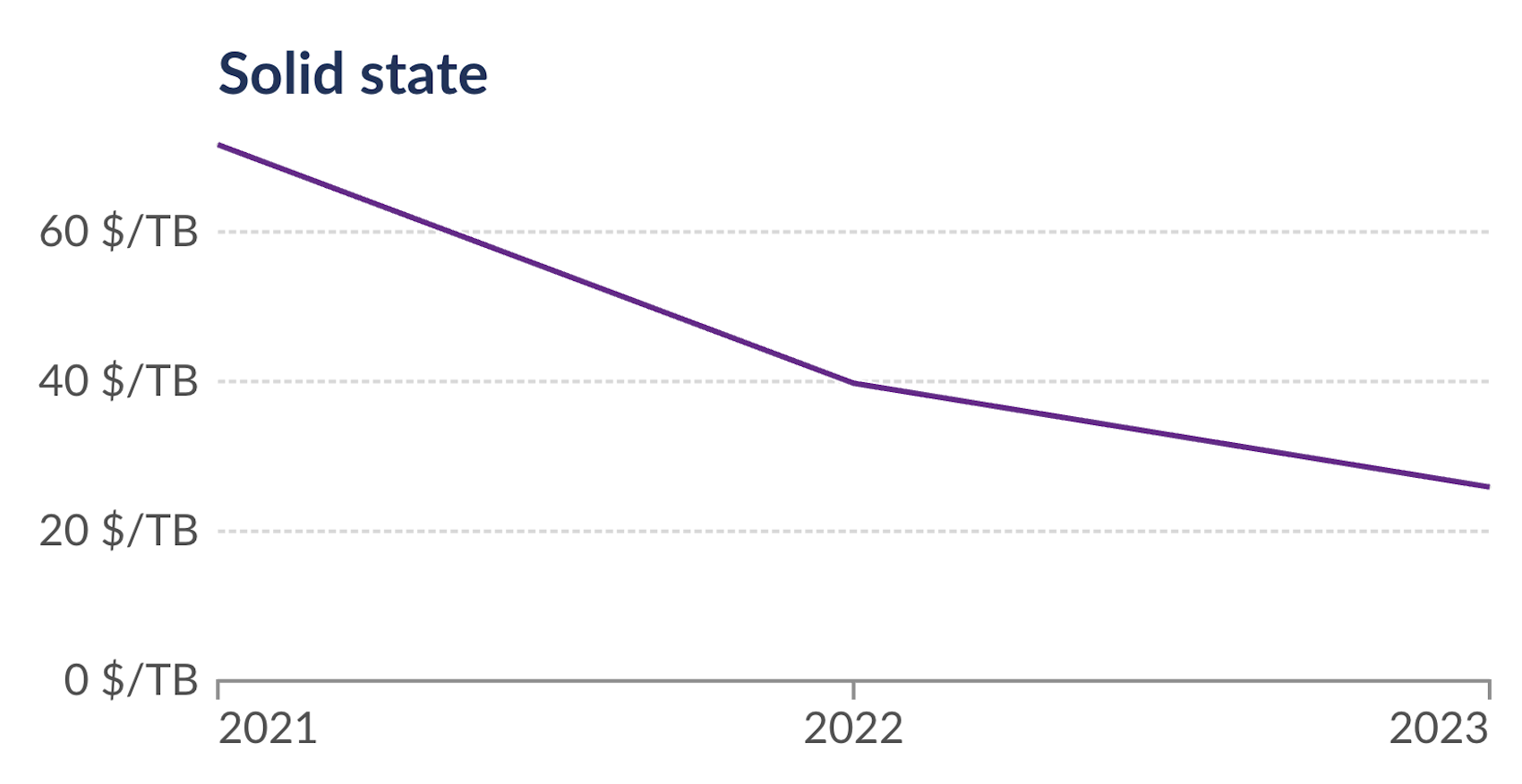

Spinning drives make spills really slow. SSD helps. NVMe is best but still slower than keeping everything in memory.

Here's What Nobody Tells You About Memory Monitoring

Use these commands to see what's actually happening:

FROM duckdb_memory(); -- Shows memory breakdown

FROM duckdb_temporary_files(); -- Lists temp files being created

If you see temp files being created, either give DuckDB more memory or fix your query. Usually the query is doing something inefficient.

Why preserve_insertion_order = false Sometimes Helps

This one's weird. DuckDB tries to keep your data in the same order, but that uses extra memory:

SET preserve_insertion_order = false;

Use this for ETL jobs where order doesn't matter. Saves memory on big imports.