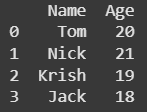

pandas is the data manipulation library that Python developers love to hate and hate to love. It's built on NumPy and gives you two main things: DataFrames (2D data like a spreadsheet) and Series (1D data like a column). Released in 2008 by Wes McKinney when he got fed up with financial data analysis, and we've been collectively debugging it ever since.

A pandas DataFrame is basically a fancy spreadsheet that doesn't crash when you have more than 65,536 rows

The latest version is 2.3.2 from August 2025, which means it's had 17 years to accumulate features, quirks, and warnings that make you question your life choices.

Why pandas Exists (And Why It Won't Die)

pandas fills the gap between "I have data" and "I can actually use this data." It handles the boring stuff - reading CSVs that Excel mangled, dealing with missing values, merging datasets that should probably fit together but don't quite.

The reason it won't die despite newer, faster alternatives is simple: legacy code. There's probably 50 million lines of pandas code running in production right now, and nobody wants to be the one to rewrite it all.

Companies like Netflix and JPMorgan use it because it works well enough, and when you're processing billions of records, "well enough" often beats "theoretically perfect." The devil you know and all that.

The Good, Bad, and Ugly

The Good: pandas makes data wrangling accessible. You can read a CSV, clean it up, do some aggregations, and export results without wanting to throw your laptop out the window. The API is mostly intuitive once you learn the pandas way of thinking.

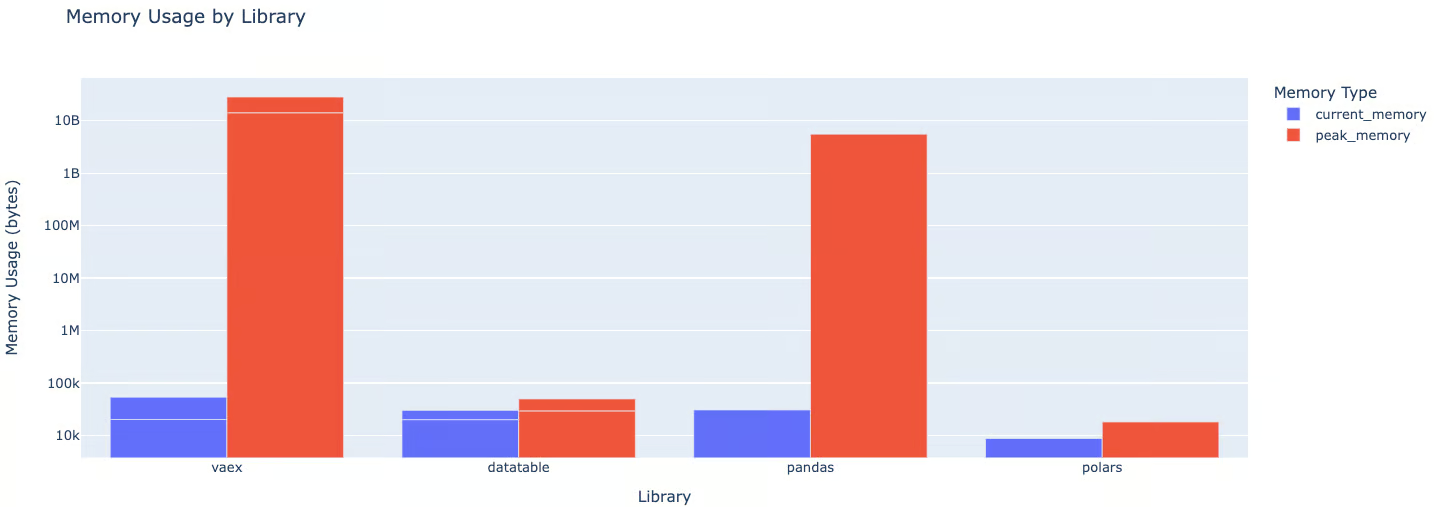

The Bad: It's slow as hell on large datasets, eats RAM like it's going out of style, and has approximately 47 different ways to do the same thing. String operations will make you go get coffee. Complex joins will make you question your career choices.

The Ugly: The SettingWithCopyWarning. If you've used pandas for more than 10 minutes, you've seen this warning and wanted to set your computer on fire. It's pandas trying to be helpful about memory management, but it feels like your library is judging your life decisions.

For a comprehensive overview of pandas capabilities, the official documentation covers everything from basic operations to advanced indexing. The pandas GitHub repository is actively maintained with regular releases and community contributions.