Why Manual Multi-Account Deployment Is Hell

Deploying infrastructure changes across 15+ AWS accounts manually will destroy your team's productivity and sanity. We learned this the hard way after our fourth production outage caused by deployment inconsistencies between accounts.

Why We Finally Automated Everything

The Jenkins incident: Someone changed an environment variable that started dumping debug logs to prod. Our AWS bill went nuts (around $800, I think) because we were logging way too much. It took me forever to figure out why monitoring was broken and costs were crazy.

Version nightmare: The dev team upgraded Terraform while prod was still on the old version. State got messed up during a weekend deploy, and I spent most of Sunday manually importing resources while everyone kept asking when the site would be back up.

Audit fun: "Show us your change log for the last 6 months." We had accounts running different versions of everything, some with random hotfixes that nobody bothered committing to Git. Our change tracking was basically Slack messages and hoping someone remembered what they did.

The accidental destroy: One of our devs ran terraform destroy thinking they were in dev. They were in dev, but our backup process was broken, so we lost half a day rebuilding stuff.

That's when I decided to spend the next few months building actual automation. It took way longer than I thought, but it should save you from going through the same pain. For background, AWS Organizations best practices and multi-account strategy guides provide the foundation. GitHub Actions CI/CD patterns and OIDC integration examples show the secure automation approach. Terraform's state management and disaster recovery guidance covers reliability.

The Architecture That Actually Works

After way too many months debugging broken deployments, here's what actually works:

Centralized Pipeline with Account-Specific State

The key insight: one pipeline, multiple backends. Each account gets its own Terraform state file, but all deployments flow through the same GitOps pipeline. This prevents state conflicts between accounts while maintaining deployment consistency.

We use Terragrunt because Terraform workspaces are broken for multi-account setups. Workspaces share one backend, so every environment's state sits in the same bucket behind the same access controls. That makes per-account isolation impossible. For further reading, Terragrunt's multi-account deployment and authentication guides and its Quick Start cover the foundational concepts. AWS's multi-account best practices cover production-grade patterns that scale.

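```hcl
# Example Terragrunt configuration that actually works
terraform {
  source = "../../../modules/vpc"
}

remote_state {
  backend = "s3"

  # Write the backend block into backend.tf so the module doesn't need its own.
  generate = {
    path      = "backend.tf"
    if_exists = "overwrite_terragrunt"
  }

  config = {
    bucket         = "terraform-state-${get_env("ACCOUNT_ID")}"
    key            = "${path_relative_to_include()}/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "terraform-locks-${get_env("ACCOUNT_ID")}"
    encrypt        = true
  }
}

# The pipeline must export ACCOUNT_ID, ENVIRONMENT and ACCOUNT_NUMBER;
# get_env() without a default fails if a variable is unset.
inputs = {
  environment = get_env("ENVIRONMENT")
  account_id  = get_env("ACCOUNT_ID")
  # ACCOUNT_NUMBER becomes the second octet, so it must be 0-255.
  vpc_cidr    = "10.${get_env("ACCOUNT_NUMBER")}.0.0/16"
}
```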
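The configuration above keeps everything in one file. In a repo with more than one stack, the remote_state block and shared inputs usually move into a parent terragrunt.hcl. Each stack then keeps a small child file that includes the parent. With that layout, path_relative_to_include() resolves to the child's path relative to the parent file, so stacks don't collide on the same state key. Without an include it returns ".", and every stack would share one key. Here is a minimal sketch of such a child file, assuming a hypothetical environments/dev/vpc/ directory layout:

```hcl
# environments/dev/vpc/terragrunt.hcl (hypothetical layout)

# Pull in remote_state and shared inputs from the nearest parent terragrunt.hcl.
include "root" {
  path = find_in_parent_folders()
}

# Only the module source is stack-specific.
terraform {
  source = "../../../modules/vpc"
}
```

Newer Terragrunt releases recommend naming the parent file root.hcl and passing that name to find_in_parent_folders().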

Cross-Account Role Assumption That Doesn't Break

Managing IAM permissions across multiple accounts is where most teams give up and go back to manual deployments. The secret is using AWS OIDC integration with GitHub Actions instead of long-lived access keys that get leaked in Slack channels.

Each account has a deployment role that the CI/CD pipeline can assume. The roles are configured with trust policies that only allow specific GitHub repositories and branches to assume them. This prevents developers from accidentally deploying to production from their local machines.

AWS OIDC setup documentation sucks. You'll spend days debugging trust policies. The aws-actions/configure-aws-credentials docs don't mention that GitHub OIDC tokens need exact repository matches in your trust policy. I learned that one the hard way.
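Here is a minimal sketch of one account's deployment role in Terraform. It assumes the GitHub OIDC provider (token.actions.githubusercontent.com) is already registered in that account, and the repository name and environment are hypothetical placeholders. One detail explains a lot of those opaque AssumeRoleWithWebIdentity failures. When a job sets environment:, as the workflow in this article does, GitHub issues a sub claim of the form repo:OWNER/REPO:environment:NAME instead of one containing the branch ref. A trust policy written against refs/heads/main never matches it.

```hcl
# Hypothetical values; set these per account.
variable "github_repository" {
  type    = string
  default = "example-org/infrastructure"
}

variable "github_environment" {
  type    = string
  default = "prod"
}

# Assumes the GitHub OIDC provider was already created in this account.
data "aws_iam_openid_connect_provider" "github" {
  url = "https://token.actions.githubusercontent.com"
}

data "aws_iam_policy_document" "github_trust" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [data.aws_iam_openid_connect_provider.github.arn]
    }

    # configure-aws-credentials requests tokens with this audience by default.
    condition {
      test     = "StringEquals"
      variable = "token.actions.githubusercontent.com:aud"
      values   = ["sts.amazonaws.com"]
    }

    # Exact match on repository + environment; jobs that set environment: send this form.
    condition {
      test     = "StringEquals"
      variable = "token.actions.githubusercontent.com:sub"
      values   = ["repo:${var.github_repository}:environment:${var.github_environment}"]
    }
  }
}

# Role name matches the GitHubDeployment ARNs used by the workflow.
resource "aws_iam_role" "github_deployment" {
  name               = "GitHubDeployment"
  assume_role_policy = data.aws_iam_policy_document.github_trust.json
}
```

The role still needs a permissions policy for whatever Terraform manages in that account. That part is left out here.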

Environment Promotion That Doesn't Suck

Most "multi-environment" pipelines are actually just the same code deployed with different variable files. This works until you need environment-specific customizations, then everything becomes a mess of conditional logic and feature flags.

Our approach: environment-specific branches with controlled promotion. Development changes merge to the develop branch and auto-deploy to dev accounts. Staging changes merge to the staging branch after dev validation. Production deployments require explicit promotion from the staging branch to the main branch, with required approvals.

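```yaml
# GitHub Actions workflow that actually handles multi-account deployment
name: Multi-Account Infrastructure Deployment

on:
  push:
    branches: [develop, staging, main]
  pull_request:
    branches: [develop, staging, main]

# OIDC: jobs must be allowed to request an ID token for AWS.
permissions:
  id-token: write
  contents: read

jobs:
  # matrix.exclude only accepts key/value combinations, not expressions, so the
  # branch-to-account mapping is computed here. On pull requests github.base_ref
  # is the target branch; on pushes it's empty and ref_name is used instead.
  select-accounts:
    runs-on: ubuntu-latest
    outputs:
      accounts: ${{ steps.pick.outputs.accounts }}
    steps:
      - id: pick
        run: |
          # "octet" feeds ACCOUNT_NUMBER for the VPC CIDR; values are placeholders.
          dev='{"name":"dev","id":"111111111111","octet":"1"}'
          stg='{"name":"staging","id":"222222222222","octet":"2"}'
          prd='{"name":"prod","id":"333333333333","octet":"3"}'
          case "${{ github.base_ref || github.ref_name }}" in
            main)    accounts="[$dev,$stg,$prd]" ;;  # prod only from main
            staging) accounts="[$dev,$stg]" ;;       # staging from staging/main
            *)       accounts="[$dev]" ;;
          esac
          echo "accounts=$accounts" >> "$GITHUB_OUTPUT"

  plan:
    needs: select-accounts
    strategy:
      matrix:
        account: ${{ fromJson(needs.select-accounts.outputs.accounts) }}
    runs-on: ubuntu-latest
    environment: ${{ matrix.account.name }}
    steps:
      - uses: actions/checkout@v4

      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: "arn:aws:iam::${{ matrix.account.id }}:role/GitHubDeployment"
          aws-region: us-east-1
          role-session-name: GitHubDeploy-${{ matrix.account.name }}

      # Assumes terraform and terragrunt are already on the runner; add
      # install steps pinned to the versions you've tested.
      - name: Terraform Plan
        working-directory: environments/${{ matrix.account.name }}
        env:
          # Read by get_env() in the Terragrunt config.
          ACCOUNT_ID: ${{ matrix.account.id }}
          ENVIRONMENT: ${{ matrix.account.name }}
          ACCOUNT_NUMBER: ${{ matrix.account.octet }}
        run: terragrunt run-all plan
```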

The key insight: different branches deploy to different account combinations automatically. No manual intervention, no "remember to change the account variable," no accidentally deploying dev code to production.
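The required approvals for promoting to main can live in code too. Below is a sketch using the integrations/github Terraform provider; the repository name, team slug, and review count are hypothetical. Branch protection forces changes to main through reviewed pull requests. A prod environment with required reviewers makes any job that declares environment: prod wait for sign-off before it runs.

```hcl
# Provider owner and token are assumed to come from GITHUB_OWNER / GITHUB_TOKEN.
terraform {
  required_providers {
    github = {
      source = "integrations/github"
    }
  }
}

# Hypothetical team that signs off on production changes.
data "github_team" "platform" {
  slug = "platform"
}

# main only changes through reviewed pull requests (the staging -> main promotion).
resource "github_branch_protection" "main" {
  repository_id  = "infrastructure" # hypothetical repository name
  pattern        = "main"
  enforce_admins = true

  required_pull_request_reviews {
    required_approving_review_count = 2
  }
}

# Jobs that declare environment: prod pause until a platform team member approves.
resource "github_repository_environment" "prod" {
  repository  = "infrastructure"
  environment = "prod"

  reviewers {
    teams = [data.github_team.platform.id]
  }
}
```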

Tool Integration That Doesn't Drive You Insane

Atlantis vs GitHub Actions vs Terraform Cloud

After wasting months testing every GitOps tool, here's what actually works:

Atlantis crashes every time you get busy. Don't bother.

GitHub Actions is what we use. OIDC setup takes 2 days and you'll hate every minute, but once it works it actually works.

HCP Terraform (formerly Terraform Cloud) costs too much. $20+/user/month adds up fast and you're stuck with HashiCorp forever.

We went with GitHub Actions because we're already using GitHub and I'm not paying HashiCorp's enterprise tax.

State Management That Doesn't Corrupt

State management is the other place multi-account Terraform setups fall apart. Here's what actually works:

Separate S3 buckets per account with DynamoDB locking per account. This prevents state corruption when multiple developers deploy simultaneously and isolates state file access by account boundaries.

```hcl
# Terragrunt automatically generates backend configs like this:
terraform {
  backend "s3" {
    bucket         = "terraform-state-111111111111"
    key            = "vpc/terraform.tfstate"
    region         = "us-east-1"
    encrypt        = true
    dynamodb_table = "terraform-locks-111111111111"
  }
}
```
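The bucket and lock table have to exist before the first terraform init. Terragrunt can create them automatically when they're missing, but defining them explicitly keeps settings like versioning under review. Here is a minimal per-account bootstrap sketch, applied once with local state. It follows the terraform-state-<account id> and terraform-locks-<account id> naming from the Terragrunt config.

```hcl
# Hypothetical one-time bootstrap, applied per account with local state
# (the backend it creates can't store its own state yet).
data "aws_caller_identity" "current" {}

locals {
  account_id = data.aws_caller_identity.current.account_id
}

resource "aws_s3_bucket" "state" {
  bucket = "terraform-state-${local.account_id}"
}

# Versioning keeps every previous state file, so a bad write can be rolled back.
resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# State files hold secrets; never let them become public.
resource "aws_s3_bucket_public_access_block" "state" {
  bucket                  = aws_s3_bucket.state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# The S3 backend's DynamoDB locking needs a string hash key named exactly "LockID".
resource "aws_dynamodb_table" "locks" {
  name         = "terraform-locks-${local.account_id}"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

Versioning matters as much as replication here: it's what lets you roll a corrupted state file back to the previous version.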

Cross-region state replication for disaster recovery. S3 cross-region replication ensures state files survive region outages. We learned this lesson after a region outage corrupted our primary state bucket and we spent 8 hours rebuilding state from CloudFormation exports.
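Here is a sketch of the replication piece. It assumes the replica bucket in the second region and the IAM role that S3 uses for replication already exist; the variable values are hypothetical. S3 only replicates between buckets that both have versioning enabled. Existing objects aren't copied retroactively; only new writes replicate. If the state bucket uses SSE-KMS, replication also needs KMS-specific settings that this sketch leaves out.

```hcl
# Hypothetical inputs; the replica bucket and role are created elsewhere.
variable "state_bucket_name" {
  type = string
}

variable "replica_bucket_arn" {
  description = "Versioned bucket in the DR region"
  type        = string
}

variable "replication_role_arn" {
  description = "IAM role that S3 assumes to copy objects"
  type        = string
}

# Copies new state object versions from the primary bucket to the DR region.
resource "aws_s3_bucket_replication_configuration" "state" {
  bucket = var.state_bucket_name
  role   = var.replication_role_arn

  rule {
    id     = "replicate-terraform-state"
    status = "Enabled"

    destination {
      bucket        = var.replica_bucket_arn
      storage_class = "STANDARD"
    }
  }
}
```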

Terraform state encryption is mandatory. State files contain sensitive information like database passwords and API keys. Encrypt everything and use S3 bucket policies to restrict access to deployment roles only.
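S3 already encrypts new objects by default, and the backend's encrypt = true requests server-side encryption on every write. The piece that usually needs real work is the bucket policy. Below is a sketch that denies every principal not on an allow-list, plus any request made without TLS. The allow-list is an assumption you'll need to fill in. It should include the deployment role, the S3 replication role if you replicate, and a break-glass admin role. Otherwise you'll lock yourself, and the principal running this Terraform, out of the bucket.

```hcl
variable "state_bucket_name" {
  type = string
}

# Deployment role, replication role, break-glass admin role, etc. (assumed).
variable "allowed_principal_arns" {
  type = list(string)
}

locals {
  state_bucket_resources = [
    "arn:aws:s3:::${var.state_bucket_name}",
    "arn:aws:s3:::${var.state_bucket_name}/*",
  ]
}

data "aws_iam_policy_document" "state_access" {
  # Anyone not on the allow-list is denied, even with broad IAM permissions.
  statement {
    sid       = "DenyAllButAllowedPrincipals"
    effect    = "Deny"
    actions   = ["s3:*"]
    resources = local.state_bucket_resources

    principals {
      type        = "*"
      identifiers = ["*"]
    }

    condition {
      test     = "ArnNotLike"
      variable = "aws:PrincipalArn"
      values   = var.allowed_principal_arns
    }
  }

  # Reject any request that doesn't use TLS.
  statement {
    sid       = "DenyInsecureTransport"
    effect    = "Deny"
    actions   = ["s3:*"]
    resources = local.state_bucket_resources

    principals {
      type        = "*"
      identifiers = ["*"]
    }

    condition {
      test     = "Bool"
      variable = "aws:SecureTransport"
      values   = ["false"]
    }
  }
}

resource "aws_s3_bucket_policy" "state" {
  bucket = var.state_bucket_name
  policy = data.aws_iam_policy_document.state_access.json
}
```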

Monitoring That Actually Helps

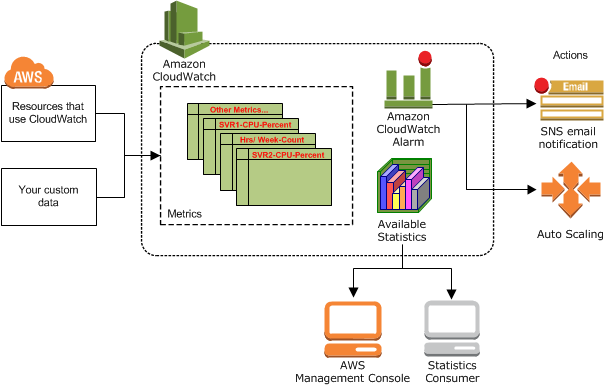

Standard CloudWatch monitoring is useless for multi-account infrastructure deployments. You need deployment-specific metrics that show what's changing across accounts and regions.

We use GitHub Actions monitoring for pipeline health and custom CloudWatch alarms for deployment failures. The key insight: monitor deployment patterns, not just infrastructure health.

Deployment frequency by account: Track how often each account receives deployments. Accounts that haven't been updated in weeks usually have configuration drift.

Plan vs Apply failures: Terraform plans fail for different reasons than applies. Plan failures indicate code issues, apply failures indicate AWS API problems or permission issues.

State lock duration: Long-running state locks usually indicate stuck deployments or manual interventions. Alert when locks exceed 30 minutes.
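CloudWatch can alarm on these once the pipeline publishes them as custom metrics. For example, the pipeline could call aws cloudwatch put-metric-data --namespace Infra/Deployments --metric-name ApplyFailures --dimensions Account=prod --value 1 after a failed apply. A scheduled check could report how long the oldest lock in each lock table has been held. The namespace, metric names, dimension, and SNS topic below are hypothetical. The sketch assumes all metrics land in one monitoring account.

```hcl
# Hypothetical SNS topic that pages whoever owns the pipeline.
variable "alerts_topic_arn" {
  type = string
}

locals {
  accounts = ["dev", "staging", "prod"]
}

# Any failed apply in a 5-minute window raises an alarm for that account.
resource "aws_cloudwatch_metric_alarm" "apply_failures" {
  for_each = toset(local.accounts)

  alarm_name          = "terraform-apply-failures-${each.key}"
  namespace           = "Infra/Deployments"
  metric_name         = "ApplyFailures"
  dimensions          = { Account = each.key }
  statistic           = "Sum"
  period              = 300
  evaluation_periods  = 1
  threshold           = 0
  comparison_operator = "GreaterThanThreshold"
  treat_missing_data  = "notBreaching"
  alarm_actions       = [var.alerts_topic_arn]
}

# Alert when the oldest state lock has been held for more than 30 minutes.
resource "aws_cloudwatch_metric_alarm" "stale_state_lock" {
  for_each = toset(local.accounts)

  alarm_name          = "terraform-state-lock-age-${each.key}"
  namespace           = "Infra/Deployments"
  metric_name         = "StateLockAgeMinutes"
  dimensions          = { Account = each.key }
  statistic           = "Maximum"
  period              = 300
  evaluation_periods  = 1
  threshold           = 30
  comparison_operator = "GreaterThanThreshold"
  treat_missing_data  = "notBreaching"
  alarm_actions       = [var.alerts_topic_arn]
}
```

A parallel PlanFailures metric with its own alarm keeps the plan-versus-apply distinction visible.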

What Actually Happens (Reality Check)

Forget the bullshit "2-4 weeks" you see in blog posts. Here's the real deal:

Months 1-2: AWS OIDC setup was a fucking nightmare. Trust policies kept failing with "AssumeRoleWithWebIdentity" errors that told you nothing. GitHub's docs don't mention half the shit that breaks. Spent like 3 weeks debugging random failures and wanted to quit.

Months 3-4, maybe 5: Converting all our existing crap to Terragrunt. Had to import a bunch of resources that someone created manually and never documented. Some imports worked, others failed for no reason I could figure out. VPCs were especially painful; it took me something like 8 tries to get one working properly. Lost count, honestly.

Months 4-6: GitHub Actions kept breaking in new and creative ways. Rate limits, timeouts, random failures that made no sense. Just when I thought everything was stable, something else would break. Those "resource not found" errors were the worst: completely random and impossible to debug.

Still happening: People still try to bypass the automation when they're stressed about deadlines. They always find some way to deploy manually "just this once" when things get crazy and we're trying to push a hotfix.

Took us like 4 months, maybe 5? Hard to remember because it was such a shit show. Plan for longer if you're starting from scratch or don't have someone who's done this before.