I've been building AI applications since GPT-3 came out, and I've tried every combination of tools imaginable. Most fail spectacularly when real users hit them. This stack is the first one that actually survives contact with production.

Claude API: The AI That Doesn't Lose Its Mind

Claude API is the only AI service I trust with production workloads. Not because it's perfect - it's slower than GPT-4 sometimes (anywhere from 3 to like 8 seconds for complex queries) - but because it actually follows instructions and doesn't make shit up.

Real problems it solves:

- Handles complex business logic without going completely off the rails

- Tool use that actually works (unlike early GPT function calling that was basically random)

- Rate limits that make sense for real applications (not the insane restrictions from other providers)

- Error messages that occasionally help you figure out what went wrong

What sucks about it:

- Painfully slow for simple queries - sometimes 8 seconds for "what's 2+2?"

- API errors are spectacularly useless (

Request failed- gee thanks, very helpful) - Costs destroy your budget if you're not watching (learned the hard way: $1200 bill in week 2 when I forgot rate limiting existed)

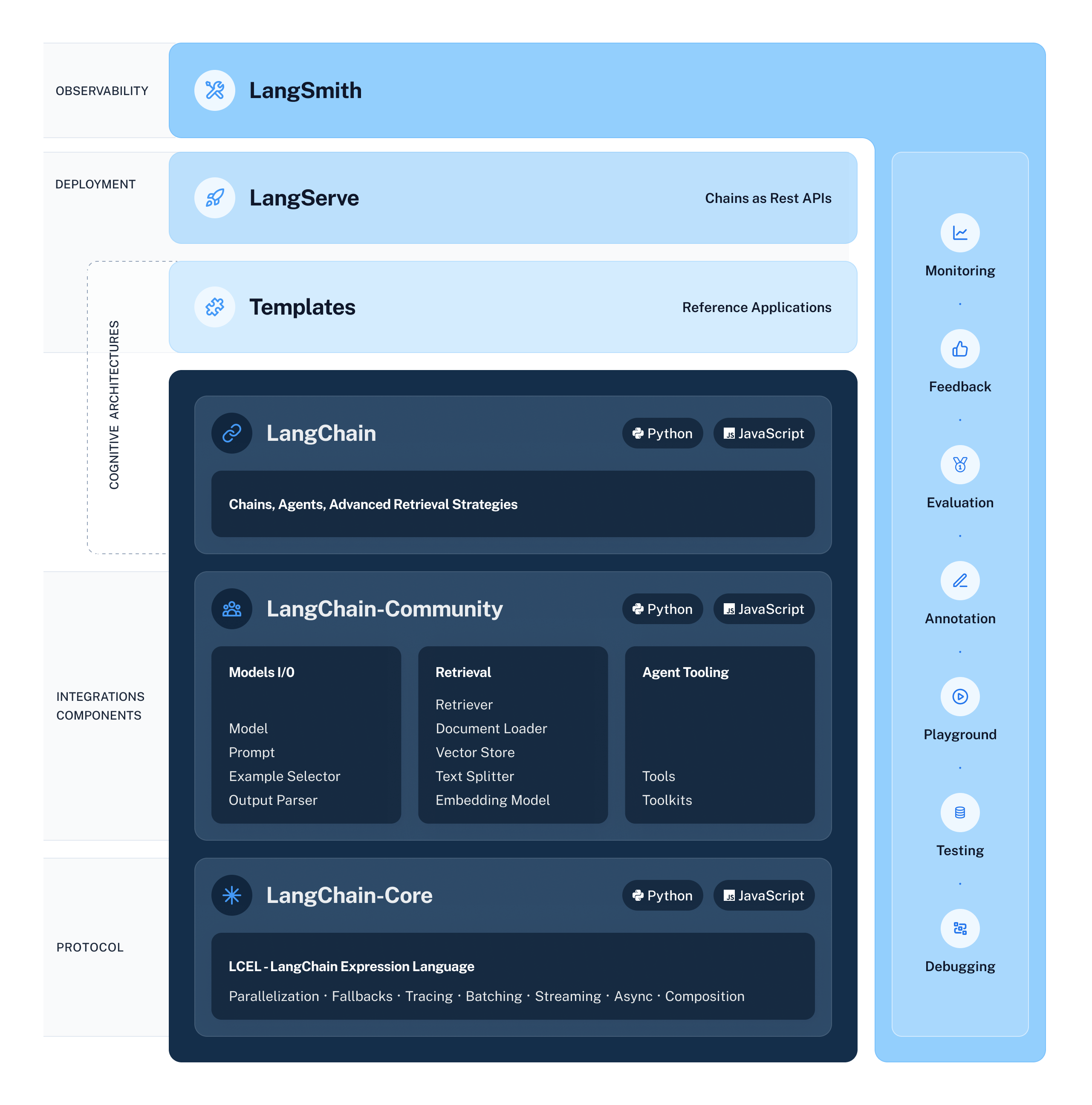

LangChain: Amazing When It Works, Hell When It Doesn't

LangChain is great until it breaks. When it works, it's magical - you can build complex multi-step AI workflows that actually remember context and handle edge cases. When it breaks, you'll spend days debugging execution graphs that make no fucking sense.

Why I use it anyway:

- LangGraph (their new stuff) is actually pretty solid for stateful workflows

- Abstracts away the messy details of chaining multiple AI calls

- LangSmith debugging is clutch when everything goes sideways (which it will)

- Works with any LLM, so you're not locked into one provider

- Memory management for conversation history

- Tool integration that actually works with modern APIs

What will drive you insane:

- Documentation assumes you already know how everything works

- Updates break your code in subtle ways (pin your versions or suffer)

- Error messages that tell you something failed but not where or why

- Memory management is still weird - sometimes it remembers everything, sometimes nothing

Real shit: I spent 3 weeks getting LangGraph working for our customer support bot. The tutorials are bullshit - real user conversations with context switching and tool calls are a nightmare. I rewrote the state management like 6 times, maybe 7. Every time I thought it worked, some edge case would break everything. But once it actually worked? Fucking magical. Users can have real conversations instead of starting over every goddamn message.

The debugging hell nobody mentions: LangGraph execution graphs are impossible to debug when they shit the bed. You get errors like StateGraph execution failed at node 'process_user_input' with zero fucking context about what actually broke. I ended up logging every single node transition just to figure out where things went sideways. Pro tip: 9 times out of 10, the error is in your state schema, not wherever the error message pretends it is.

FastAPI: The Only Web Framework That Doesn't Suck for AI

FastAPI is the one piece of this stack that actually works like the docs say it will. Fast async handling for AI requests that take forever? Check. Automatic API docs that don't lie? Check. Type validation that catches errors before they hit production? Double check.

Why it's perfect for AI:

- Async/await actually handles Claude's variable response times (200ms to 8+ seconds)

- Pydantic validation catches malformed AI responses before they break everything

- Built-in OpenAPI docs make testing and debugging way easier

- Dependency injection keeps your code clean when dealing with multiple AI services

- Background tasks for async AI processing

- WebSocket support for streaming AI responses

- Request validation prevents malformed inputs from reaching your AI models

Minor annoyances:

- Can be too strict with type checking sometimes (just use

Anyand move on) - Documentation is almost too good - makes other frameworks look lazy

- Startup time can be slow in development with lots of imports

Production reality check: Our FastAPI app handles 500+ concurrent AI requests without breaking a sweat. Contrast that with our previous Flask setup that would randomly timeout under load. The async handling is legitimately good.

What You Can Actually Build (And How Much It Hurts)

Simple Stuff: Just Works

Direct FastAPI → Claude API calls. Takes an afternoon to set up, works exactly like you'd expect. Perfect for content generation, document summarization, basic chatbots. If you need more than "send prompt, get response," move on.

Medium Complexity: LangChain Workflows

Multi-step processes with conversation memory. Setup time: 2-3 weeks if you're lucky, 2 months if you're not. Customer support bots, document processing pipelines, anything that needs to remember context. Debugging is painful but the end result is worth it.

Advanced: Multi-Agent Hell

Multiple AI agents talking to each other. Only attempt this if you have dedicated DevOps support and a high tolerance for 3am debugging sessions. The architecture diagrams look impressive in slides, the reality is constant firefighting.

Enterprise: Just Use a Service

If you need multi-region deployments, compliance reporting, and enterprise SSO, just pay someone else to handle it. Building this yourself is a full-time job for a team of 5+ engineers. The ROI math rarely works out unless you're doing something truly unique.

The Reality Check

This stack works, but it's not magic. You'll still spend weeks debugging weird edge cases, Claude will occasionally return nonsense, and LangChain will break in creative new ways every time you update.

What actually matters:

- Async/await patterns save your ass when AI responses take forever

- Proper error handling prevents one bad request from taking down everything

- Rate limiting keeps your API bills from bankrupting you

- Monitoring tells you when things break (not if - when)

Time investment reality:

- Simple API: 1-2 days to working prototype, 1-2 weeks to production-ready

- Complex workflows: 1-3 months of active development, ongoing maintenance nightmare

- Enterprise deployment: Just hire someone who's done it before

Cost reality check:

Small application (1K users/month): $200-500ish/month mostly Claude API costs

Medium application (10K users/month): $1K-5K/month depending on usage patterns

Enterprise (100K+ users/month): $10K+/month plus infrastructure and DevOps overhead

The stack works. Whether it's worth the complexity depends on what you're building and how much you value your sanity.